In my experience, you have it backwards. If you are spending more than $300 on a CPU, you want it to last more than a year, or even a couple of years. I have always spent more while looking forward. There is no reliable way to future-proof anything in tech, but keeping a level head and thinking past bar charts in current games helps a lot.

When other people were buying Core 2 Duo and/or Quad and overclocking it to the sky, I bought into Nehalem at a higher price and locked in what ended up being more than 5 years on a Core i7-950. After that, while other people were buying 6700K Skylakes and already pushing close to 5.0 GHz, I bought into HEDT and have now spent almost 4 years on a 5820K running at 4.3 GHz. In both cases, I took a lower core clock and less per-thread performance in order to ensure I had more cores than was mainstream at the time. And in both cases, having more cores at a lower clock ended up being more future-proof than having fewer cores at a higher clock.

Those people who thought they were being smart buying an i5-4690K instead of an i7-4790K? They got fucked, because it turned out games ended up getting multi-threaded a lot more quickly than anyone expected, and 4c/4t without HT was like running the race with only one leg. The people on 4790Ks today are starting to go through the same thing, as 4c/8t is starting to reach its limits and most people buying into a gaming ecosystem are thinking of what's next, not what's now.

So let's use our galaxy brains and think about this a moment.

hexus.net: "New chips, more firepower."

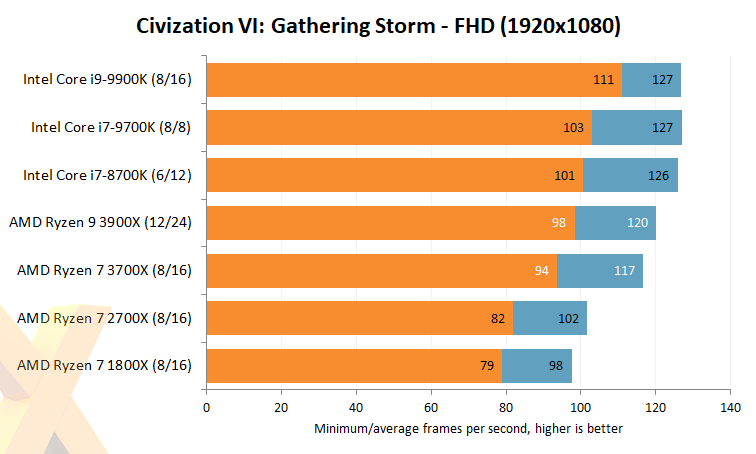

Now you look at this and you think to yourself, OH MY GOD AMD GETS KILLED BY INTEL STILL IN GAMES I'D BETTER BUY A 9900K RIGHT NOW

But then you realize that you haven't played a game at 1080p on your fire-breathing LED light show Master Race God Machine in like 8 years now. And then well...

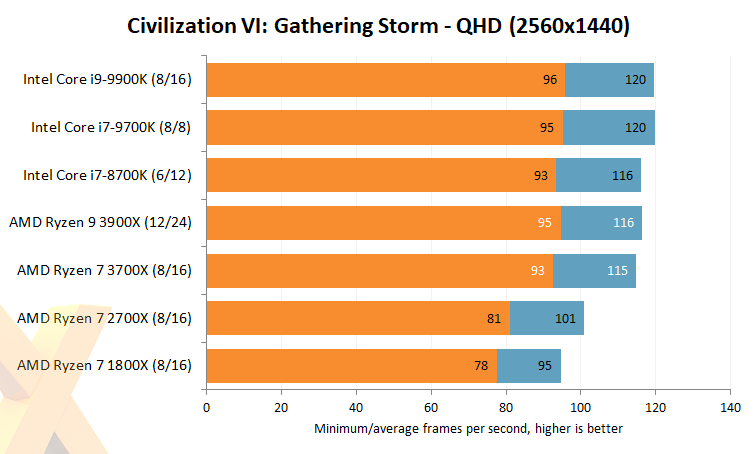

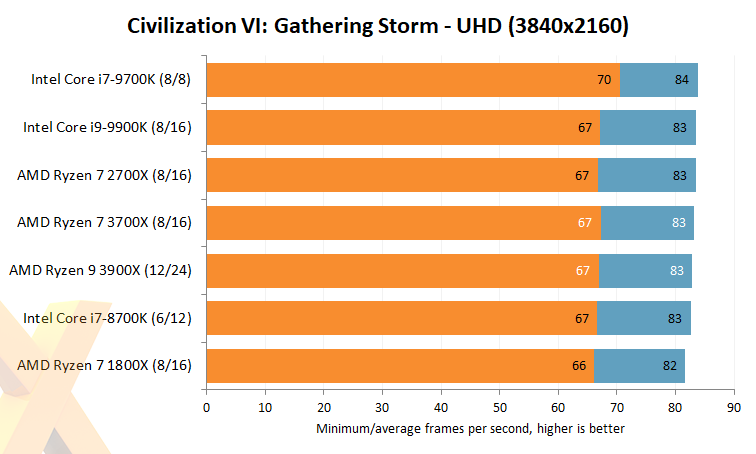

Well...that seems a bit more realistic. Yeah. And then if you realize that you haven't been a resolutionlet for the past 4 years and you're gaming in 4K like I am, this is what you see:

Hexus.net are the only people crazy enough to "benchmark" CPUs at 4K resolution, so don't blame me for this. I didn't think of using a 100% GPU-bound situation to prove a point, but it's proven nonetheless.

What I'm saying is that in any real-world situation, more likely than not you are GPU-bound to some extent, and the 1080p tests where the 9900K kills the 3900X are only relevant if you are one of those CSGO players who swears you need to be at 400 fps or you can't win. It's literally irrelevant to every other PC gamer.
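If you want the GPU-bound argument in toy form, here's a rough sketch. It assumes the simplest possible model: the frame rate you actually see is whichever is lower, what the CPU can feed or what the GPU can draw. Every number in it is made up purely for illustration, not taken from the Hexus charts or any other benchmark, and the function names are just ones I picked.

```python
def effective_fps(cpu_fps_cap: float, gpu_fps_cap: float) -> float:
    """Toy bottleneck model: the slower stage sets the frame rate."""
    return min(cpu_fps_cap, gpu_fps_cap)


def cpu_lead_percent(fast_cpu_cap: float, slow_cpu_cap: float,
                     gpu_fps_cap: float) -> float:
    """Visible lead of the faster CPU, in percent, once the GPU cap applies."""
    fast = effective_fps(fast_cpu_cap, gpu_fps_cap)
    slow = effective_fps(slow_cpu_cap, gpu_fps_cap)
    return (fast - slow) / slow * 100.0


# Hypothetical caps, invented for this example only.
FAST_CPU_CAP = 200.0   # fps the "faster at 1080p" CPU could feed
SLOW_CPU_CAP = 180.0   # fps the "more cores, lower clock" CPU could feed
GPU_CAPS = {"1080p": 240.0, "1440p": 190.0, "4K": 70.0}

for resolution, gpu_cap in GPU_CAPS.items():
    lead = cpu_lead_percent(FAST_CPU_CAP, SLOW_CPU_CAP, gpu_cap)
    print(f"{resolution}: visible CPU lead = {lead:.1f}%")
```

In this toy model the lead shrinks as the GPU cap drops and disappears completely once the GPU cap falls below both CPU caps. Real games are messier than a single `min()`, so treat this as an illustration of the idea and not a prediction.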

But then you add in doing actual other stuff while gaming, and then the picture looks more muddled.

So let's look at gaming while streaming, an example of making the CPU do more than just run the game.

Wow, huh. The 9900K's entire lead against the 3900X just evaporates when you make it stream your gameplay at the same time you are playing the game. That's a big hmmm. (Of course, if you are truly a galaxy brain, you are using the NVENC encoder through ShadowPlay or OBS for your streaming, in which case your result is that bar at the top. But I'm just demonstrating what happens when 12c/24t takes on 8c/16t while doing more than just playing the game.)

Also, for people who think they are smart by buying a 9700K instead of a 9900K or 3900X, well... you're making the same mistake as those people who thought they were smart buying an i5-4690K instead of an i7-4790K back in the day.

Here we can see the 8c/8t 9700K turning into a stuttery mess when trying to play a game and stream it at the same time. The 8c/16t 9900K and the 12c/24t 3900X are casual as fuck doing this. Don't be a brainlet and buy the 9700K instead of the 3700X, 3900X, or 9900K and think you're future-proof, because you're not.

So in summary, what have we learned?

(1) More cores + more threads at a lower clock speed is more future-proof than fewer cores + fewer threads at higher clock speed

(2) Real-world gaming consists of way more than looking at bar charts

(3) Smart people always think about the future 1-2 years down the road, not right this second, when investing in a new gaming machine

If we take these lessons into consideration, it's fairly clear who is more future-proof here despite losing at bar charts in 1080p.