Really? What games were you playing and what CPU do you have? I've been running my GTX 970 since late 2014 and it's handled 1080p at 60 fps in pretty much every game.

As for the 1060: it's not worth it at all if you have a GTX 970 that can clock to around 1400-1500MHz.

With both GPUs near their maximum overclocks (around 1.9-2GHz on the GTX 1060 and 1450-1500MHz+ on the GTX 970), the 1060 is only about 10-15% faster. That's not a worthwhile upgrade unless you need more VRAM.

Digital Foundry included both a stock and an overclocked GTX 970 in their GTX 1060 review.

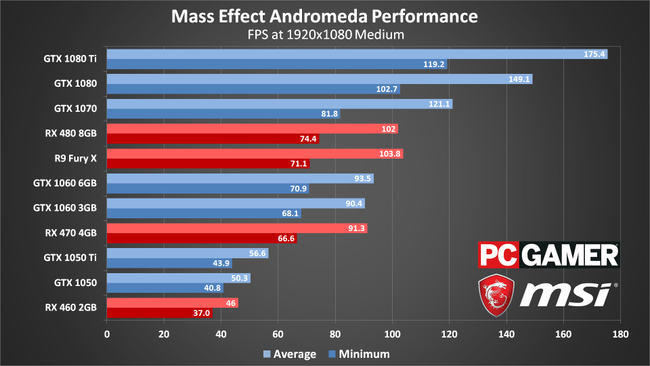

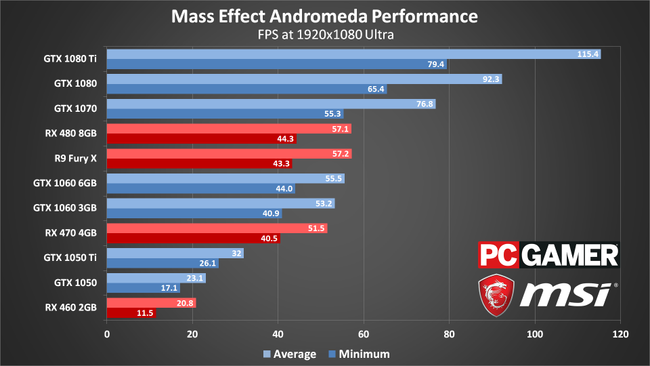

If you want a worthwhile upgrade, your best bet is a GPU that is at least 50% faster, like the GTX 1070 or perhaps one of AMD's upcoming Vega GPUs. I personally prefer upgrading to GPUs that are at least twice as fast, like the GTX 1080 or future cards.

It's best to wait a few weeks and see what Vega offers. If AMD brings solid competition, you could end up with a decent upgrade path from either AMD or NVIDIA, since price cuts often follow when there's real competition.