KojiKnight

Member

Do 50GB discs matter? How many PS3 games used them... MGS4 is about the only one I can think of, and that's because it had hours of HD video and audio stored on it.

Enhanced 386, mirite?

Sure... We know what's inside the Wii after all.

For that one it's Geizhals.at. But I see xbitlabs, for one, measured significantly less. So maybe you should heed my previous warnings and not take this as very accurate.

What is the source of this info?

Well... it depends. Off-the-shelf parts (like RAM) are easy: you can look up their part numbers and get information on them. With custom parts, however, you can't tell just by looking at them unless that information is public for some reason.

For example, we know the 3DS processor is a custom ARM with a custom PICA200 soldered onto it, but we have no idea of its actual individual power draw or its clock speed in MHz. The RAM on the 3DS, however, is a known and widely available 128MB chip whose serial number made it very easy to find info on.

We'll be able to mostly figure out the wattage once the system is out and we start hooking it up to a wattage meter... but as far as individual parts go, we might run into the same problem with the GPU and CPU.

Yeah, I wanted to point that out: the Wikipedia entry of the Hollywood GPU in the Wii makes no mention of DirectX support, number of SPUs, and such.

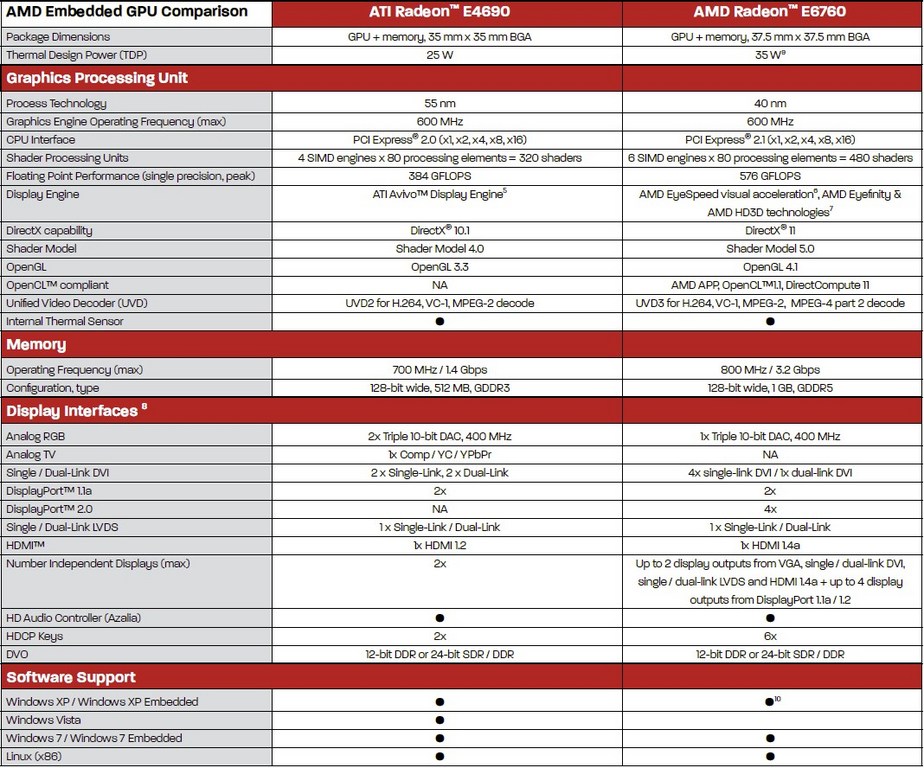

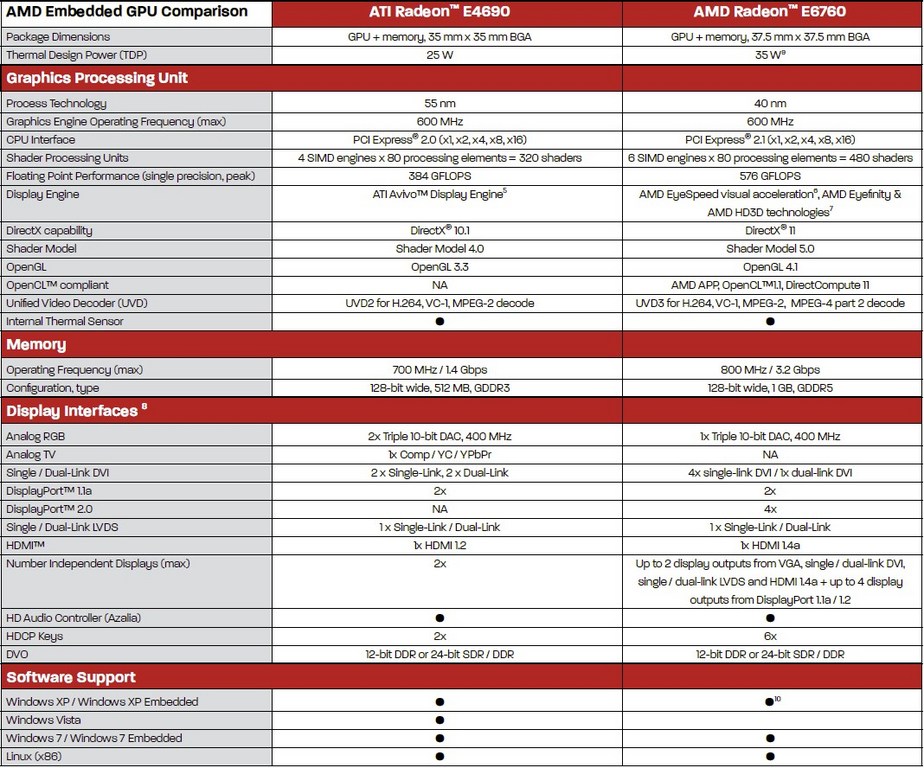

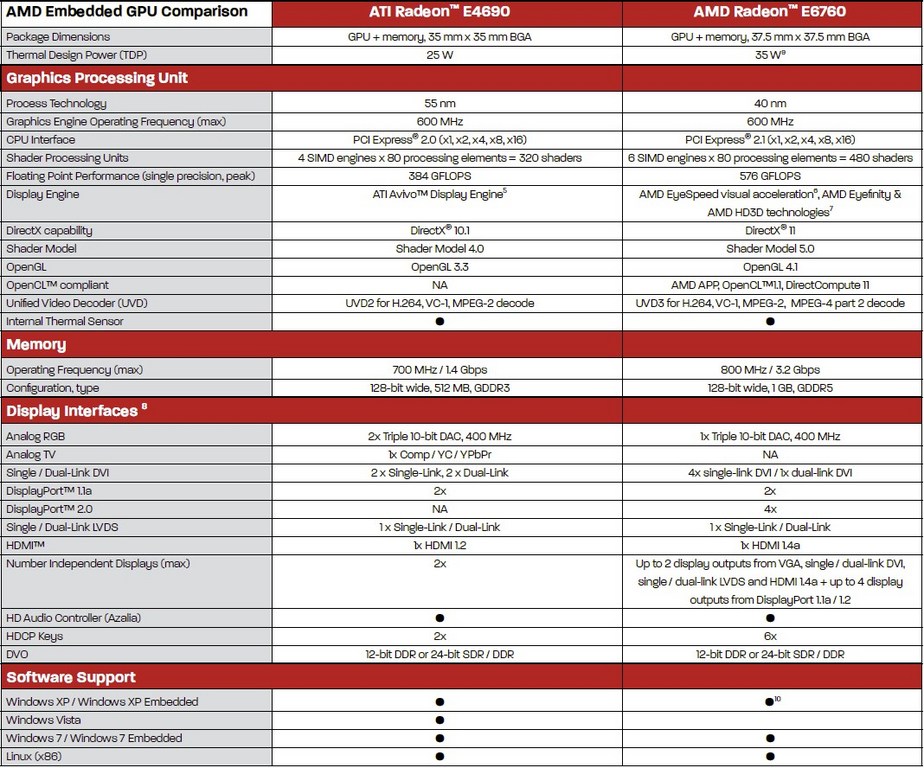

Since the WiiU has a GPU made by AMD, and based on what the OP has said, the following product (on the right) looks like a perfect candidate, right?

Typical draw for a unit means the unit is "well" loaded, but not doing anything abnormal (like a power-on sequence, a power virus, or any other pathological case). Typical for a device would be the sum of the typical draws of the units that get used during typical use of the device. For something like the WiiU, I'd guess 'device typical' is the sum of the typical draws of the CPU + GPU + buses + eDRAM + RAM + pad streaming (which includes the pad radio).

Yeah, I'm confused. I thought 75W was its max draw, but typically 45W. What "typically" means is anyone's guess. Is that while sitting at the menu, watching a movie, Miiversing, or full-on gaming?
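To make the 'device typical' definition concrete, here is a minimal Python sketch that sums per-unit typical draws over whichever units a use case exercises. Every wattage in it is a made-up placeholder, not a known Wii U figure; the unit names just mirror the list in the post.

```python
# Hypothetical per-unit "typical" draws in watts. These are placeholders
# for illustration only, NOT measured or published Wii U numbers.
TYPICAL_DRAW_W: dict[str, float] = {
    "cpu": 12.0,
    "gpu": 20.0,
    "buses": 2.0,
    "edram": 1.0,
    "ram": 3.0,
    "pad_streaming": 4.0,  # includes the pad radio
}


def device_typical(draws: dict[str, float], units_in_use: list[str]) -> float:
    """Device-typical draw = sum of the typical draws of the units in use."""
    return sum(draws[unit] for unit in units_in_use)


# Assume every listed unit is active (e.g. gaming with the pad streaming).
gaming = device_typical(TYPICAL_DRAW_W, list(TYPICAL_DRAW_W))
# A different (hypothetical) set of active units gives a different "typical".
subset = device_typical(TYPICAL_DRAW_W, ["cpu", "buses", "edram", "ram"])
print(gaming, subset)  # 42.0 18.0
```

The takeaway is that a "typical" figure is only meaningful once you know which units were assumed to be active, which is exactly the menu-vs-movie-vs-gaming question above.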

No, it was first reported as being 75W. That's how we've known the figure for a long time now (well, since WUST4 or something).

I thought some dude took a photo of the PSU a while ago and it was 90W? I'm possibly remembering wrong though.

But if Nintendo uses this off-the-shelf product, then what did they spend their R&D budget on these last few years?

576 GFLOPS would make it about 3x less powerful than what the PS4 is supposed to be. Frankly, this doesn't sound too bad.
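For reference, a peak figure like 576 GFLOPS is usually derived as stream processors × 2 FLOPs per clock (one multiply-add) × clock speed. The sketch below assumes the commonly cited E6760 configuration of 480 stream processors at 600 MHz and takes the rumored 1.8 TFLOPS for the PS4 as given; both are inputs, not confirmed console specs.

```python
def peak_gflops(stream_processors: int, clock_mhz: int, flops_per_clock: int = 2) -> float:
    """Theoretical single-precision peak in GFLOPS.

    Assumes each stream processor retires one multiply-add (2 FLOPs) per clock.
    """
    return stream_processors * flops_per_clock * clock_mhz / 1000


e6760 = peak_gflops(480, 600)  # assumed E6760 configuration
ps4_rumored = 1800.0           # rumored 1.8 TFLOPS, expressed in GFLOPS

print(e6760)                # 576.0
print(ps4_rumored / e6760)  # 3.125, i.e. "about 3x"
```

This is a theoretical peak only; peak FLOPS say little about delivered performance, especially across different GPU architectures.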

Could someone put this recent power draw discussion into layman's terms? It seems like this could be a decent indicator of what we're looking at for overall performance.

Right. If you look up the term 'power virus', you'll see that it's a condition where a device draws far more power than the norm, and it's normally associated with intentional, perhaps malicious, programming that deliberately gets the device into such a state. It's not impossible, though, for perfectly good, 'useful' code to have the characteristics of a power virus. Actually, in some modern architectures where the 'typical' power draw relies on a bunch of snooze-like features for the idle blocks in the device, the manual might tell you what not to do so your code doesn't unintentionally turn into a power virus. Crazy fun times we're living in.

And by "well", that would generally mean "playing a game"? Even then, though, the power levels will fluctuate, right? NSMBU won't suck as much juice as CoD.

So that 1 TFLOP talk about Samaritan was tied to a specific architecture? If so, then we don't know what the PS4's 1.8 TFLOPS will suffice for, either. But thanks for pointing this out. When you think of what the WiiU could have ended up being, based on some negative developer talk, having an E6760 inside the WiiU is definitely good news.

Quite a few people here, including me, believe that the E6760 might be the most similar to the Wii U's custom GPU, and we've thought this for a while now. Regarding your GFLOP rating, keep in mind that although it's 576 GFLOPS, the E6760 is a slightly more powerful GPU than the Radeon HD 4850, which is a 1000 GFLOP (or 1 TFLOP) GPU. This is why I don't like FLOP comparisons between GPUs unless they're made between two GPUs from the same architecture.
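The same peak formula shows why cross-architecture FLOP comparisons can mislead. Assuming the commonly cited configurations (Radeon HD 4850: 800 stream processors at 625 MHz; E6760: 480 at 600 MHz), the paper numbers favor the 4850 by roughly 1.7x, even though the post above says the E6760 is slightly faster in practice. That performance claim is the poster's; this sketch only reproduces the peak numbers.

```python
def peak_gflops(stream_processors: int, clock_mhz: int, flops_per_clock: int = 2) -> float:
    """Theoretical peak GFLOPS: shaders x FLOPs per clock x clock (GHz)."""
    return stream_processors * flops_per_clock * clock_mhz / 1000


# Assumed shader counts and clocks (commonly cited configurations).
hd4850 = peak_gflops(800, 625)
e6760 = peak_gflops(480, 600)

print(hd4850, e6760)             # 1000.0 576.0
print(round(hd4850 / e6760, 2))  # 1.74
# Peak FLOPS only count ALUs x clock; they ignore how efficiently a given
# architecture keeps those ALUs busy, so the ratio above is not a benchmark.
```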

HDMI 1.4a would also mean Dolby TrueHD. That would be a great confirmation (read: 5.1 or 7.1 is supported).

Basically you're asking me if a GPU could draw 30W in the quoted power-draw frame? Yes, it could.

So I don't want to get this wrong, but when 45W was mentioned for typical draw, that could have been while playing NSMBU, and the Wii U could quite possibly draw much more for CoD. Therefore, when we're subtracting wattages to get to the GPU wattage, it could very well be a GPU that can draw 30W, and therefore more powerful than we think.

Or am I missing something fundamental here?
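The subtraction approach described here can be sketched as below. It is a rough model under loudly stated assumptions: the PSU efficiency, the heavier-game wall figure, and the non-GPU component draws are all hypothetical placeholders, and it simplistically assumes the non-GPU parts draw the same in both games. Only the 45W typical figure comes from the thread.

```python
# Hypothetical inputs: none of these are measured Wii U numbers.
PSU_EFFICIENCY = 0.8  # assumed fraction of wall power delivered as DC
OTHER_COMPONENTS_W = {"cpu": 8.0, "ram_and_edram": 4.0, "disc_usb_radio": 6.0}


def gpu_budget_w(wall_draw_w: float) -> float:
    """Estimate the GPU's share: DC power delivered minus all other parts."""
    dc_power_w = wall_draw_w * PSU_EFFICIENCY
    return dc_power_w - sum(OTHER_COMPONENTS_W.values())


light_game = gpu_budget_w(45.0)  # the "typical" 45W figure from the thread
heavy_game = gpu_budget_w(60.0)  # hypothetical heavier game
print(round(light_game, 1), round(heavy_game, 1))  # 18.0 30.0
```

The point is sensitivity: whichever total you start from, the GPU estimate inherits all of its uncertainty, plus the uncertainty in every term you subtract.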

Chances are you could squash them with your thumb.

Do we know anything about the ARM processors that are most likely in the controller and possibly in the system itself?

Why is everybody so sure that the GPU in the WiiU is E6760-based? This chip is capable of DirectX 11 (Shader Model 5/OpenGL 4.0), and we KNOW that the WiiU chip can't do that.

The OP rumor states that the GPU "supports Shader Model 4.0 (DirectX 10.1 and OpenGL 3.3 equivalent functionality)", which actually looks like exactly what the E4690 from the spec sheet brings to the table... Combined with the first claims that the WiiU GPU is not even 2x 360, this paints a very clear picture for me.

The WiiU is much more likely running on an underclocked E4690 than on an E6760. I want to be optimistic too, but even the most cautiously optimistic predictions have been smashed to bits in the past (see the Espresso fiasco).
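The reasoning in this post amounts to matching the rumored feature level against each candidate's spec sheet. A minimal sketch, using only the DirectX levels stated in these posts (DX10.1 for the E4690, DX11 for the E6760, DX10.1 in the OP rumor); it inherits whatever is wrong with those inputs, and a match is only consistent with the rumor, not proof.

```python
# DirectX feature levels as stated in this thread, as (major, minor).
CANDIDATES: dict[str, tuple[int, int]] = {
    "E4690": (10, 1),
    "E6760": (11, 0),
}
RUMORED_LEVEL = (10, 1)  # "DirectX 10.1 ... equivalent functionality" per the OP rumor


def matching_candidates(candidates: dict[str, tuple[int, int]],
                        rumored: tuple[int, int]) -> list[str]:
    """Return the parts whose stated feature level equals the rumored one."""
    return sorted(name for name, level in candidates.items() if level == rumored)


print(matching_candidates(CANDIDATES, RUMORED_LEVEL))  # ['E4690']
```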

It was only stated in the OP's topic, for instance, which also includes the Espresso rumor, which is basically such a weird thing that it must be true.

Other rumors also strongly pointed to the WiiU GPU not being in the DX11 generation.

Makes sense, cause the Wii U's tech is from the past. amidoinitrite?

So I don't want to get this wrong, but when 45W was mentioned for typical draw

Actually antonz, I think this is the kind of thread the non-tech Wii U fans would like to stay.

Haha.

40W

The original post was lifted from a GAF member who has been in this very thread telling people that the data presented is not complete, and who specifically pointed out that the GPU is actually beyond the baselines listed.

This entire thread should have been nuked a long time ago, considering the thread's source came in and called it out.

Now that you mention it, this topic's rumor states the Wii U only has 1GB, but it's confirmed to have 2GB.

Only if this rumor is from past dev kits and not the final one, which is what I'm guessing.

I looked at some new WiiU footage.

Ninja Gaiden seems to be sub-720p with poor AA (wait for the retail version to confirm, because it's strange: it's below the PS3/X360 version).

http://www.youtube.com/watch?v=FAreMlwF-3A&feature=plcp

And Tank Tank is 1280x360 with no AA (plus probably 850x480 on the GamePad; it's surely multiplayer mode, with approximately the same number of pixels on the TV and the GamePad).

http://www.youtube.com/watch?v=Eo7wPaz4LDQ&feature=plcp

http://imageshack.us/a/img99/1466/tanktank.jpg
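The pixel arithmetic behind "approximately the same number of pixels on the TV and the GamePad" is easy to check. This uses the poster's pixel-counted 1280x360 TV output and his 850x480 GamePad estimate (the GamePad panel itself is 854x480); pixel count is only a rough proxy for rendering cost.

```python
def pixels(width: int, height: int) -> int:
    return width * height


tv = pixels(1280, 360)        # pixel-counted TV output from the post
pad = pixels(850, 480)        # the post's estimate for the GamePad
full_720p = pixels(1280, 720)

print(tv, pad)               # 460800 408000
print(tv / full_720p)        # 0.5: the TV image is half a 720p frame
print(tv + pad < full_720p)  # True: both screens together are under one 720p frame
```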

Welcome to the next-gen sub-720p world.

EDIT:

I checked the NG video again; it's probably dynamic resolution to stabilize the framerate.

Sometimes 576p, sometimes 630p, but I grabbed one 720p screen too.
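Dynamic resolution of the kind suggested here generally means lowering the render height when a frame would miss its time budget. A minimal sketch, assuming 16:9 frames, a 30 fps target, and GPU time that scales linearly with pixel count (all simplifications; nothing here is known about how Ninja Gaiden actually does it). Only the three heights come from the footage.

```python
TARGET_MS = 1000 / 30      # assumed 30 fps frame budget
HEIGHTS = [720, 630, 576]  # heights reported in the footage, tallest first


def pixels_16x9(height: int) -> int:
    """Pixel count of a 16:9 frame with the given height (e.g. 720 -> 1280x720)."""
    return (height * 16 // 9) * height


def pick_height(ms_at_720p: float) -> int:
    """Pick the tallest height whose estimated frame time fits the budget,
    assuming frame time scales linearly with pixel count."""
    full = pixels_16x9(720)
    for height in HEIGHTS:
        if ms_at_720p * pixels_16x9(height) / full <= TARGET_MS:
            return height
    return HEIGHTS[-1]  # nothing fits: fall back to the lowest height


for h in HEIGHTS:
    print(h, pixels_16x9(h) / pixels_16x9(720))  # 720 1.0, 630 0.765625, 576 0.64
print(pick_height(30.0), pick_height(40.0), pick_height(50.0))  # 720 630 576
```

So 576p renders only 64% of the pixels of 720p. A real engine would typically also smooth the frame-time measurement over several frames to avoid flickering between resolutions; that is left out here.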

I do agree it's useful for non-tech fans; it just gets so tiring dealing with the same group of certain people.

Well, the issue there is that the documentation specifically mentions RAM available for use by developers at this time. So it is accurate in that regard.

I'm going to assume this was already posted, but this is not good:

http://forum.beyond3d.com/showpost.php?p=1666876&postcount=3313

In the end it's not as bad as it looks: Tank Tank is a gimmicky game running in SD for some controller/multiplayer-related mode, and NG is a port.

But still.

The system does have 2GB of RAM, but developers only get to play with 1GB right now, pending any future changes to the 1GB reserved for system use.

Which means...?

(Sorry, I'm not the best at specs)

What does he mean by "under the PS3/360 version"?

How are you guys still discussing specs with no specs?

Also, does it even matter now? I mean, so far it seems the technology is more than competent and should be okay for the next 5 years. Obviously it won't stand up to whatever MS and Sony have planned, but I think they can carve out their own slice of the gaming pie.

iPad3 style?

If the pixel counting above is accurate, we're in for some giggles.

I'm ashamed to admit that I haven't the first idea to what you refer.

iPad3 style?

You know how me 'n' thee disagree quite a lot?

This is one of those times.

I can't buy those excuses when, during any other generational transition, we'd already have:

Far be it from me to defend the Wii U, but we can still fall back on the bad SDK/early software/ports/learning the new CPU/whatever excuse.

That said, of course it's not positive.

At this point to make a definitive determination I really, really need some GPU functional units/clocks or gflops numbers.

There just isn't one though.

...a game that actually looks significantly better than anything on PS360.

At this point to make a definitive determination I really, really need some GPU functional units/clocks or gflops numbers. Or a game that actually looks significantly better than anything on PS360.

I'm referring to pixel-counting induced giggles, naturally.

I'm ashamed to admit that I haven't the first idea to what you refer.

You forgot "yet".

There just isn't one though.