It can't be both.

I'd like to point out that the tweets throughout the year were mostly very vague, and I thought people read way too much into them. The explicit "The Wii U is a custom 45nm #power7 chip" tweet only came this week. But yeah, it did happen after all. And the account is legitimately linked to IBM.

Basically, these are the problems I have with the idea:

http://en.wikipedia.org/wiki/POWER7#Specifications

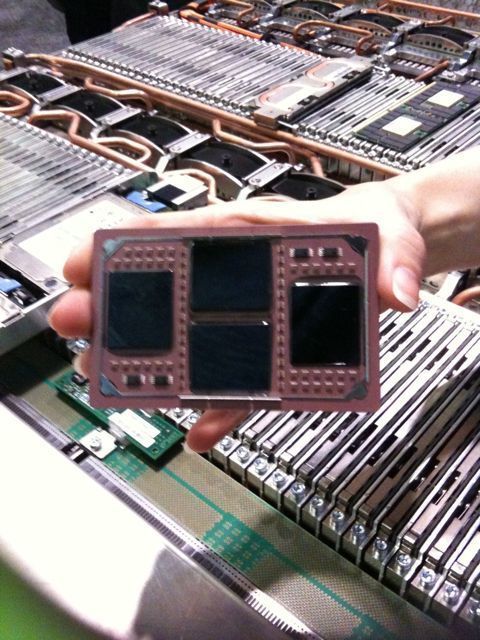

Typical Power7 products look like this: an 800W, 3GHz, 32-core multi-chip module. Each core has 4-way SMT, so each module can run 128 threads in parallel.

Can you scale it down, in theory and in practice? Sure. Each individual core draws around 25W. Clock it lower, to around 2GHz, reduce the voltage, and you could feasibly get something you can put in a console.
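A rough way to see why lowering both clock and voltage helps so much: dynamic CMOS power scales roughly with C·V²·f, so a voltage cut pays off quadratically. The sketch below starts from the ~25W-per-core figure above; the voltages are purely hypothetical operating points, not published Power7 numbers, and leakage power is ignored.

```python
# Rough dynamic-power scaling for one core using the classic CMOS
# approximation P_dyn ~ C * V^2 * f. Static (leakage) power is ignored.

def scaled_power(p_watts: float, f_old_ghz: float, f_new_ghz: float,
                 v_old: float, v_new: float) -> float:
    """Scale a core's dynamic power from one clock/voltage point to another."""
    freq_ratio = f_new_ghz / f_old_ghz
    volt_ratio_sq = (v_new / v_old) ** 2
    return p_watts * freq_ratio * volt_ratio_sq

# Hypothetical voltages, chosen only for illustration.
p = scaled_power(25.0, 3.0, 2.0, v_old=1.1, v_new=0.9)
print(f"~{p:.1f} W per core")
```

With these assumed numbers the per-core figure drops to roughly 11W, which is in console territory for a couple of cores.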

Problem is, you can fit 4 Broadway cores in half the die area and half the power budget of one scaled-down Power7 core, clock them the same, and end up outperforming the Power7. Four real cores vs. one core with SMT is not an even contest.
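A toy model makes the "not an even contest" point concrete. SMT threads share one core's execution resources, so 4-way SMT adds a fractional uplift rather than multiplying throughput by four. The 1.5x uplift below is an assumed, illustrative value, and the model assumes a perfectly parallel workload, which real game code is not.

```python
# Toy throughput model: N independent cores vs. one core running SMT threads.
# All inputs are normalized, hypothetical numbers.

def multicore_throughput(cores: int, per_core_perf: float) -> float:
    """Ideal throughput of `cores` independent cores on a perfectly parallel load."""
    return cores * per_core_perf

def smt_throughput(per_core_perf: float, smt_uplift: float) -> float:
    """Throughput of one SMT core: threads share the same execution units,
    so SMT only adds a fractional uplift over a single thread."""
    return per_core_perf * smt_uplift

def break_even_ratio(cores: int, smt_uplift: float) -> float:
    """How many times faster per clock the single SMT core must be
    to match `cores` smaller cores at the same clock."""
    return cores / smt_uplift

# Assumed: 4-way SMT yields ~1.5x over one thread (illustrative, not measured).
print(f"{break_even_ratio(4, 1.5):.2f}x")
```

Under these assumptions a single Power7 core would need well over twice the per-clock performance of a Broadway core just to break even.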

It doesn't matter that the architecture is newer. What Power7 added in features is utterly useless in a gaming context.

Decimal floating point units are nice to have if you want predictable precision and rounding when handling real-world currency, but there's no reason to ever use them in a game. They're just wasted transistors. So is much of the rest.
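To illustrate what decimal floating point buys you, here is a small Python sketch. Python's `decimal` module does base-10 arithmetic in software, which stands in here for what Power7's decimal units do in hardware. Game math (positions, physics, animation) tolerates the tiny binary rounding errors shown first, which is why those units would sit idle.

```python
from decimal import Decimal, ROUND_HALF_UP

# Binary floating point (what games use for positions, physics, etc.)
# cannot represent 0.1 or 0.2 exactly, so the sum is slightly off.
binary_total = 0.1 + 0.2
print(binary_total == 0.3)  # False
print(binary_total)         # 0.30000000000000004

# Decimal arithmetic keeps base-10 values exact, which currency code wants.
decimal_total = Decimal("0.1") + Decimal("0.2")
print(decimal_total == Decimal("0.3"))  # True

# Rounding a price to cents: 2.675 is stored in binary as slightly less
# than 2.675, so round() goes down; Decimal rounds half-up as expected.
print(round(2.675, 2))  # 2.67
print(Decimal("2.675").quantize(Decimal("0.01"), rounding=ROUND_HALF_UP))  # 2.68
```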

One thing that bears repeating is that Broadway is not even a bad CPU architecture. There is no insult implied in the idea that it may be used again. It's a fine core architecture that performs well on just about any mix of code you can think of, and does it with low power usage and a small die. Its lineage may be ancient, but that doesn't mean it's lacking anything significant when built on a modern process node.

Xenon CPU cores and the Cell PPU (which are identical twins) are very, very bad performers for their clock speeds in general-purpose code, and they suck up surprising amounts of electricity to boot. 3.2GHz may sound impressive, but it really only helps with hand-tuned SIMD loops. If you just throw random code at them, they are matched and beaten by any reasonably efficient architecture, like the one Broadway used, at half the clocks or even less.
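The "half the clocks" claim boils down to simple arithmetic: on general-purpose code, performance is roughly clock speed times instructions per cycle (IPC). Xenon and the PPU are in-order designs, so branchy, cache-missing code stalls them often. The 0.5 IPC figure below is a hypothetical, illustrative value, not a measured one.

```python
def relative_perf(clock_ghz: float, ipc: float) -> float:
    """Crude throughput estimate: clock speed times instructions per cycle."""
    return clock_ghz * ipc

def ipc_needed_to_match(ref_clock_ghz: float, ref_ipc: float, clock_ghz: float) -> float:
    """IPC a core at `clock_ghz` needs to equal the reference core's throughput."""
    return relative_perf(ref_clock_ghz, ref_ipc) / clock_ghz

# Hypothetical: an in-order 3.2GHz core averaging 0.5 IPC on branchy,
# cache-missing game logic (illustrative, not a measured Xenon figure).
needed = ipc_needed_to_match(3.2, 0.5, 1.6)
print(f"A 1.6GHz core needs an IPC of {needed:.2f} to match")
```

Under that assumption, a more efficient core only has to sustain about one instruction per cycle at half the clock to break even.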

So, back to Power7: it is technically possible to scale it down to maybe a couple of cores, scale back the SMT, remove some of the execution units, strip most of the massive core-to-core communication logic (and the on-die dual GBit Ethernet links!), and so on, and end up with a decent console CPU that just barely squeezes into a reasonable power and cost budget. I just don't see how it makes economic sense for either IBM or Nintendo to have gone down that route.

Nintendo would have had to pay extra for the customization, and most likely extra on top due to basing their chip on IBM's current crown-jewel architecture. Then they'd pay extra in manufacturing because the chips would still end up larger.
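Why larger chips cost more than proportionally: a common textbook approximation for dies per wafer divides the wafer area by the die area and subtracts the partial dies lost around the edge. The die areas below are hypothetical, chosen only to show the trend, and yield is ignored (defects penalize larger dies even further).

```python
import math

def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Approximate whole dies per wafer: wafer area divided by die area,
    minus a correction for partial dies lost around the wafer edge."""
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(gross - edge_loss)

# Hypothetical die sizes on a 300mm wafer, for illustration only.
small = dies_per_wafer(300, 30.0)
large = dies_per_wafer(300, 60.0)
print(small, large)
```

Doubling the die area more than halves the number of candidate dies, before any defect-related yield loss is even counted.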

At the same time, going with the Broadway architecture instantly ensures robust Wii backward compatibility (BC), because the CPU would be cycle-accurate: zero glitches, no "emulation" at all on that side. The other set of benefits follows from that: a Broadway++ would be cheaper to license, cheaper to manufacture, and easier to understand.

Until someone pops off the heatspreader and demonstrates that there really is something else in the Wii U, CPU-wise, I'll continue to stick to my previous expectations:

* multi-core version of Broadway, clocked 2-3x higher than the Wii
* eDRAM cache instead of SRAM
* 45nm SOI

(the last two would satisfy the earlier "some of the same technology [as in Power7]" tweets just nicely)

This isn't meant to be party-pooping, and it never was. It's just what makes the most sense to me, both technically, given the small size and low power consumption of the overall system, and economically.