The actual quote was "40W in idle mode, 75W in full charge"

It's terrible how everything that comes out about Wii U gets spun.

Iwata "it uses 40 watts"

GAF within a couple days: "as you see by these ironclad calculations, Wii U can be drawing no less than 70 watts confirmed, possibly more. That is if we assume they havent already re engineered the power supply to go much higher which they obviously probably have already"


Rumor: Wii U final specs

- Thread starter EatChildren

- Start date

I am positive it is the Wii U which draws 75 W, not the PSU.

One thing I'm confused by now: If the max psu draw is 75w, and it say 60% efficient as some of you think; then when the psu is only drawing 40w as Iwata said for 'normal gameplay', are you suggesting the Wii U will only be using 24w power?? Seriously guys?

Are we certain he wasn't talking about the system drawing 75w max, not the psu?

edit: what alfolla said. But I think the GPU draws the same amount of power regardless of what is enabled around it, which makes the 40 W a good basis for discussion.

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

You're utterly confused. The efficiency of a PSU has zilch to do with its output rating, which is how PSUs are rated*. You can have a 65W PSU that is 10% efficient, and it will continue to be a 65W PSU - that's what it can deliver. The latter is chosen so it can satisfy the maximal power draw of the console not from the wall but from the PSU (apparently).

You are not getting 60w out of a max rated PSU of 75w, not physically possible.

* apparently efficiency is output/input, but the input is not quoted - you have to actually measure it.
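To put numbers on the footnote above, here is a minimal Python sketch. The 60% efficiency figure is only the guess floated in this thread, not a measured value, and the function names are made up for illustration.

```python
def wall_draw_watts(output_watts, efficiency):
    """Power pulled from the wall when the PSU delivers output_watts.

    efficiency = output / input, so input = output / efficiency.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return output_watts / efficiency


def delivered_watts(input_watts, efficiency):
    """Power reaching the console if input_watts is the wall-side figure."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return input_watts * efficiency


ASSUMED_EFFICIENCY = 0.60  # assumption: the thread's guess, not a measurement

# Reading 1: 75 W and 40 W are what the PSU delivers to the console.
print(f"wall draw at 75 W output: {wall_draw_watts(75, ASSUMED_EFFICIENCY):.0f} W")
print(f"wall draw at 40 W output: {wall_draw_watts(40, ASSUMED_EFFICIENCY):.0f} W")

# Reading 2: 40 W is measured at the wall (the reading that yields 24 W).
print(f"console gets: {delivered_watts(40, ASSUMED_EFFICIENCY):.0f} W")
```

The 24 W figure only appears under the second reading, where the quoted wattage is treated as the wall-side number rather than the PSU's output.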

bgassassin

Member

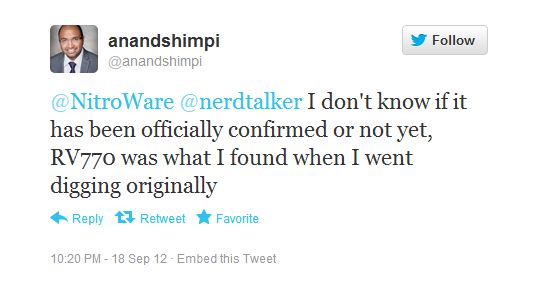

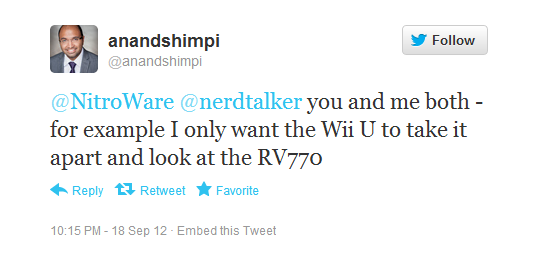

I forgot to respond to this, but if anything this confirms what we knew a year ago that an underclocked RV770 was the placeholder GPU. And considering that GPU is 55nm, I don't get how some can continually harp on the performance deficiencies of that line when we know Nintendo is not going to use a stock 55nm GPU. And they aren't just going to die-shrink it and be done. With what was in that dev kit being ~576GFLOPs on a "large for today's time" process, it should seem rather likely that the final being on a smaller process in a similarly-size retail case can be slightly over 600GFLOPs and fit in the power envelope given by Iwata. Some of you are seriously underestimating the power of Nintendium.

One thing I'm confused by now: If the max psu draw is 75w, and it say 60% efficient as some of you think; then when the psu is only drawing 40w as Iwata said for 'normal gameplay', are you suggesting the Wii U will only be using 24w power?? Seriously guys?

Are we certain he wasn't talking about the system drawing 75w max, not the psu?

Iwata said that WiiU's internal parts use 40w in your average game. Use all USB's and that's 50w in an average game. So the whole 65% efficient 75w PSU (48w) idea simply doesn't add up. Especially when you consider that the average game is never maximum load.

I suppose we'll have to wait until someone like Anandtech tests the power usage. I have no doubt when that happens we'll have the same culprits using that real world data to try to fit the maximum theoretical power draw of certain GPUs and declaring it impossible.

I don't quite agree with you. Nintendo's priority may have been power efficiency while keeping the overall capabilities of the newer GPU similar to the placeholder, as insane as it sounds. But even if they actually spent money and effort on getting more FLOPS, you still can't draw conclusions since you don't know the form factor of the dev kit above.

With what was in that dev kit being ~576GFLOPs on a "large for today's time" process, it should seem rather likely that the final being on a smaller process in a similarly-size retail case can be slightly over 600GFLOPs and fit in the power envelope given by Iwata. Some of you are seriously underestimating the power of Nintendium.

stupidvillager

Neo Member

This is one of the reasons I laugh when people say the Wii U was designed to do this. Havok is running on the CPU on the Wii U. If it was designed to offload tasks to the GPU, this would be the perfect thing to do. As I have said, I think it just comes down to the R700 not being good enough, or they feel people want to use 100% of GPU power for gfx.

We know the R700 is not well designed for these tasks.

Ok, so clear something up for me...Havoks physics engine is running on the CPU because according to you the GPU is not good enough. Anonymous devs have said the CPU is "weak" and "you dont need high end physics for Marios cape." Devs have also said that the GPU is surprisingly powerful, that it has modern features and it surpasses DX10.1 and SM4.1. So this weak, enhanced 3-core broadway is better at running Havoks engine than a modern, fairly powerful GPU with compute support? So either a 3-core enhanced broadway is not really as bad as people think or its not just a 3-core enhanced broadway. Even if the engine was designed for CPUs how could it run so much better on a 3-core broadway over a modern GPU to warrant it.

I see how you would come to that conclusion. It's not true for PSUs though. When you buy a PSU, you want to know whether it can deliver sufficient power to the hardware. The_Lump's explanation was correct.

I will search the net for a source confirming this, should anyone not believe it yet.

My understanding is that PSUs are rated based on output, not consumption. If a PSU is rated at 75W it can deliver 75W; its power consumption will more than likely be much more than that. When I buy a PSU I'm only marginally interested in how much power it consumes; my main concern is how much power it can deliver. Hence the power rating.

Ok, so clear something up for me...Havoks physics engine is running on the CPU because according to you the GPU is not good enough. Anonymous devs have said the CPU is "weak" and "you dont need high end physics for Marios cape." Devs have also said that the GPU is surprisingly powerful, that it has modern features and it surpasses DX10.1 and SM4.1. So this weak, enhanced 3-core broadway is better at running Havoks engine than a modern, fairly powerful GPU with compute support? So either a 3-core enhanced broadway is not really as bad as people think or its not just a 3-core enhanced broadway. Even if the engine was designed for CPUs how could it run so much better on a 3-core broadway over a modern GPU to warrant it.

As I said earlier, it's very flawed logic to say "Havok is running on the CPU so the GPU can't do physics well". Last we heard, multi-platform audio engines were running on WiiU's CPU despite the fact that WiiU has a hardware audio chip. So does that mean Nintendo have used an audio chip that can't process audio? No, it means that these middleware providers are putting the most necessary paths in place first, which is software (CPU), to allow for easier porting from 360/PS3 (which don't have an audio chip or a GPGPU).

Imagine you're taking a picture with your camera and you adjust the zoom to make it clear. Well, we are half-way through this process actually. Except, we can't make it clearer and we are starved for more precise info.

Too much tech talk. Can someone answer this? Wii U is POWAFUL yes / no

The discussion is more about details now. It is safe to assume that Wii U will have roughly as much CPU muscle as the X360, twice the GPU power, 4 times the RAM, and that devs can implement more AA thanks to augmented eDRAM on the CPU. And honestly, it will not move much from there.

We really need to know now if we'll get the necessary middlewares to run games on Wii U/X720/PS4 until the end of the life cycles of these consoles. Hopefully, the difference in IQ/AI/physics will not be too big across the range.

Havok, like any other multi platform software, has to support both a software and hardware path. Initially they'll get it working on the CPU because that's the priority due to the need for developers to port 360/PS3 games over. But that does not mean they won't provide a path to make it work on the GPU later.

And you based that on what? Hard to debate in these threads because people just make things up to fit whatever their point is.... Seems like you just made up that last part.

The biggest problem with using the GPU to do anything other than gfx work is that it makes the game look worse. It's a trade-off I don't see many people making, plus you add the fact that the R700 is not designed well to run this code.

Ok, so clear something up for me...Havoks physics engine is running on the CPU because according to you the GPU is not good enough. Anonymous devs have said the CPU is "weak" and "you dont need high end physics for Marios cape." Devs have also said that the GPU is surprisingly powerful, that it has modern features and it surpasses DX10.1 and SM4.1. So this weak, enhanced 3-core broadway is better at running Havoks engine than a modern, fairly powerful GPU with compute support? So either a 3-core enhanced broadway is not really as bad as people think or its not just a 3-core enhanced broadway. Even if the engine was designed for CPUs how could it run so much better on a 3-core broadway over a modern GPU to warrant it.

So how does using USB increase the 40W for running the console? This is why you don't use the max rating on the PSU. You leave some room for someone who plugs in 4 USB devices for charging. The PSU can supply up to 75w, but again, that would be a terribly designed product that would fail a lot.

Iwata said that WiiU's internal parts use 40w in your average game. Use all USB's and that's 50w in an average game. So the whole 65% efficient 75w PSU (48w) idea simply doesn't add up. Especially when you consider that the average game is never maximum load.

I suppose we'll have to wait until someone like Anandtech tests the power usage. I have no doubt when that happens we'll have the same culprits using that real world data to try to fit the maximum theoretical power draw of certain GPUs and declaring it impossible.

Most of the time the wiiu will use 40w, i think that is a little low so i say between 40-45w. Funny if you look back in june i told you how much power the wiiu was using just by the psu rating. Crazy we still have people who dont believe its true when its straight from the makers of the console.

Alexios

Cores, shaders and BIOS oh my!

His "last part" just says "that does not mean they won't provide a path to make it work on the GPU later", what's there to make up about it? Do you know for a fact they won't do so? He says they may, or may not. Saying either for sure would be making it up...

And you based that on what? Hard to debate in these threads because people just make things up to fit whatever their point is.... Seems like you just made up that last part.

Iwata said that WiiU's internal parts use 40w in your average game. Use all USB's and that's 50w in an average game. So the whole 65% efficient 75w PSU (48w) idea simply doesn't add up. Especially when you consider that the average game is never maximum load.

I suppose we'll have to wait until someone like Anandtech tests the power usage. I have no doubt when that happens we'll have the same culprits using that real world data to try to fit the maximum theoretical power draw of certain GPUs and declaring it impossible.

Well, that's what my thinking was on the last couple of pages. Someone stopped me in my tracks though, saying we already knew ages ago the PSU was rated at 75w (i.e. the PSU draws that from the wall, max). But if that were the case, and it was only 60% efficient (very low estimate imo), then that's 45w max of power going into the console. The '40w normal use' figure would mean 24w is being used by the hardware. Sounds fishy to me *scratches head*

From the translation of what Iwata said, it doesn't sound like that at all though...He makes it sound like the WiiU draws that much power max.

Nightbringer

Don´t hit me for my bad english plase

360Mhz RV770?

And you based that on what? Hard to debate in these threads because people just make things up to fit whatever their point is.... Seems like you just made up that last part.

What am I basing what on? That a middleware developer's priority is to make sure that their software path is in place first? It's just the way it works; if you think that's made up then I really don't know why you're discussing these issues.

As I said, do you also think WiiU's DSP can't process audio well because current WiiU audio middleware has been running on WiiU's CPU instead of the DSP?

bgassassin

Member

I don't quite agree with you, Nintendo's priorities may have been given to power efficiency while keeping overall capabilities of the newer GPU similar to the placeholder, as insane as it sounds. But even if they actually spent money and efforts on getting more FLOPS, you still can't pull conclusions since you don't know the form factor of the dev kit above.

So you do agree with me and don't realize it. The idea of the placeholder, and this is with all consoles, is to give devs an idea of the performance of the final components even though it won't have all the features of the final silicon. And it's not that they spent more money to get more FLOPs. They (heavily) underclocked a part and would only need to raise the clock slightly to reach ~600GFLOPs.
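The clock-versus-FLOPs claim above can be checked with a quick Python sketch. It assumes a stock RV770 layout of 800 stream processors, each doing one multiply-add (2 FLOPs) per clock, and takes the 360 MHz figure from the "360Mhz RV770?" question earlier in the thread. None of these is confirmed for the dev kit or for the final silicon.

```python
def gflops(stream_processors, clock_mhz, flops_per_sp_per_clock=2):
    """Peak single-precision throughput in GFLOPs."""
    return stream_processors * flops_per_sp_per_clock * clock_mhz / 1000.0


def clock_mhz_for(target_gflops, stream_processors, flops_per_sp_per_clock=2):
    """Clock in MHz needed to hit target_gflops with a given shader count."""
    return target_gflops * 1000.0 / (stream_processors * flops_per_sp_per_clock)


RV770_STREAM_PROCESSORS = 800  # assumption: stock RV770 configuration

print(gflops(RV770_STREAM_PROCESSORS, 360))         # underclocked placeholder
print(clock_mhz_for(600, RV770_STREAM_PROCESSORS))  # clock needed for 600 GFLOPs
```

Under those assumptions the ~576 GFLOPs figure matches a 360 MHz clock, and reaching 600 GFLOPs would take only a small bump to about 375 MHz.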

I saw the dev kit a year ago and originally thought it may be on a 28nm process, then changed to 32nm because it was a main node. But then the TSMC info has me leaning back to 28nm (go away AlStrong). And a few months ago VGLeaks released the dev kit pics I saw.

http://www.vgleaks.com/wii-u-first-devkits-cat-dev-v1-v2/

Too much tech talk. Can someone answer this? Wii U is POWAFUL yes / no

"Powaful" is too subjective to give a yes or no answer. In the range of current vs next gen, I consider it around mid-tier. And there's no such things as too much tech talk in a specs thread.

Any pics of the Wii U's UI?

I believe it was said that devs only recently seen it for themselves, so we might not get that till the console launches for all we know.

stupidvillager

Neo Member

The biggest problem with using the GPU to do anything other than gfx work is that it makes the game look worse. It's a trade-off I don't see many people making, plus you add the fact that the R700 is not designed well to run this code.

So you think a weak 3-core enhanced broadway can run the code better than a modern, customized R700?

Barack Lesnar

Banned

Excuse me? You wrongly claimed that 65% efficiency is the most a PSU can supply, I corrected you and your response is "it doesn't matter"? How does it not matter?

We know that the average power usage of WiiU without USB is 40w, we know its PSU is apparently 75w (though I don't recall any proof). But the fact is 75w could allow for as much as 67w for the console, remove USB and that's still 57w.

So with 57w what kind of horsepower can we expect? are there any comparable chips or is it hard to compare due to these chips being customized

bgassassin

Member

^ It was in the WUST while it was in the Community thread.

For me it's the latter. Based on my expectations of the final GPU I think the "best" PC GPU to compare it to for performance would be the 6570.

So with 57w what kind of horsepower can we expect? are there any comparable chips or is it hard to compare due to these chips being customized

For me it's the latter. Based on my expectations of the final GPU I think the "best" PC GPU to compare it to for performance would be the 6570.

"Powaful" is too subjective to give a yes or no answer. In the range of current vs next gen, I consider it around mid-tier. And there's no such things as too much tech talk in a specs thread.

True, well for people with non-tech heads it's all a blur.

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

For the one thousandth time: a PSU is rated by its capability to provide power, i.e. a fixed voltage and up to a certain current (normally, unless it's multiple voltages and multiple currents, but that's not the case here). That's what its 'power' is (in Watts). How efficient it is, i.e. how much it draws from the grid while providing its output, can only be measured by putting a watt-meter between the PSU and the grid socket. But if a PSU is rated at 75W that's what it can deliver (unless it's a scam). Yes, normally a PSU's efficiency will drop as it reaches the max rated output, but that's an entirely different subject from the one of 'how much W a PSU can deliver'.

well that's what my thinking was on the last couple of pages. Someone stopped me in my tracks though saying we already knew ages ago the psu was rated at 75w (ie the psu draws that from he wall, max).

GAF can be a really funny place sometimes.

I don't remember seeing a pic, but we got an eyewitness testimony, and it said 75W.

By the way, did I miss it or did we ever get a pic proving 100% that WiiU's PSU is 75w?

So with 57w what kind of horsepower can we expect? are there any comparable chips or is it hard to compare due to these chips being customized

Its really hard to compare. PC chips include things that a console won't need for instance so part of the modifications Nintendo made could have been to remove some of these features. But at the same time we do know they've added things to the chip they started with. We also don't know the process used, its basically impossible to give anything but a vague guess IMO. I thought 500Gflops a while ago and I'll stick to that kind of range as I think its feasible from even a 40nm custom GPU within a 50w+ console.

What am I basing what on? That a middleware developer's priority is to make sure that their software path is in place first? It's just the way it works; if you think that's made up then I really don't know why you're discussing these issues.

Please post a link to where you got his info from? You just made all that up... Please show me something that say they plan to move this over to the gpu. But of course you wont because no one but you has said anything like that.

I don't remember seeing a pic, but we got an eyewitness testimony, and it said 75W.

That's what I thought, very likely but not 100% definite then.

Havok, like any other multi platform software, has to support both a software and hardware path. Initially they'll get it working on the CPU because that's the priority due to the need for developers to port 360/PS3 games over. But that does not mean they won't provide a path to make it work on the GPU later.

Please post a link to where you got his info from? You just made all that up... Please show me something that say they plan to move this over to the gpu. But of course you wont because no one but you has said anything like that.

No, nobody has said that, me included. I said the fact they currently don't support it does not mean you can conclude that they never will. You've already been corrected on your misconception of what I said, but you continue to pretend I said something that I didn't.

Seriously mods, is this kind of complete ignorance really acceptable?

Absolute Bastard

Member

PSU stands for Power Supply Unit, the rating is what it can supply. For example 600 watt power supply unit supplies up to 600 watts. Efficiency affects what it draws from the wall to achieve that supply.

Also there's always a sizeable safety margin involved; pushing the PSU close to its limits for longer periods can be very bad. Typical usage is generally no more than 60-70% of rated capacity. Meaning if the system needs 50w for typical heavy load it will have a roughly 75w PSU; if the system needs 75w it will have a roughly 110w PSU.
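The sizing rule of thumb above can be sketched in a few lines of Python. The 60-70% utilisation band is the figure quoted in the post, taken here as a working assumption rather than a standard, and the helper name is made up for illustration.

```python
def psu_rating_range(load_watts, min_utilisation=0.60, max_utilisation=0.70):
    """Return (smallest, largest) PSU rating that keeps load_watts inside
    the given fraction of rated capacity."""
    if not 0 < min_utilisation <= max_utilisation <= 1:
        raise ValueError("utilisation bounds must satisfy 0 < min <= max <= 1")
    return load_watts / max_utilisation, load_watts / min_utilisation


for load in (50, 75):
    low, high = psu_rating_range(load)
    print(f"{load} W load -> PSU rated between {low:.0f} W and {high:.0f} W")
```

Both of the post's examples fall inside the computed bands: a 75 W unit for a 50 W load and a 110 W unit for a 75 W load.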

Also there's always a sizeable safety margin involved, pushing the PSU close to it limits for longer periods can be very bad. Typical usage is generally no more than 60-70% of rated capacity. Meaning if the systems needs 50w for typical heavy load it will have a roughly 75w PSU, if the sytems needs 75w it will have roughly 110w PSU.

Please post a link to where you got his info from? You just made all that up... Please show me something that say they plan to move this over to the gpu. But of course you wont because no one but you has said anything like that.

Hey USC, you know what a straw man argument is? ;-)

Woaw, this is a USB cable that goes in? The box looks tiny already. Form factor and power were a non-issue back then; the only problem remaining was/is manufacturing costs. But I wonder, why is Nintendo so anal about form factor? Why is it they wanna make their home consoles so small? It impacts the entire design.

So you do agree with me and don't realize it. The idea of the placeholder, and this is with all consoles, is to give devs an idea of the performance of the final components even though it won't have all the features of the final silicon. And it's not that they spent more money to get more FLOPs. They (heavily) underclocked a part and would only need to raise the clock slightly to reach ~600GFLOPs.

I saw the dev kit a year ago and originally thought it maybe on a 28nm process, then changed to 32nm because it was a main node. But then the TSMC info has me leaning back to 28nm (go away AlStrong). And a few months ago VGLeaks released the dev kit pics I saw.

http://www.vgleaks.com/wii-u-first-devkits-cat-dev-v1-v2/

Woaw, this is a USB cable that goes in? The box looks tiny already. Form factor and power were a non-issue back then; the only problem remaining was/is manufacturing costs. But I wonder, why is Nintendo so anal about form factor? Why is it they wanna make their home consoles so small? It impacts the entire design.

Isn't console size a huge selling point in Japan? I could be wrong.

No, nobody has said that, me included. What are you talking about??

Seriously mods, is this kind of complete ignorance really acceptable?

Where did this come from? I have not seen one statement that backs this up. Nowhere does Havok say they put this on the CPU because it's needed to port PS360 games.

Havok, like any other multi platform software, has to support both a software and hardware path. Initially they'll get it working on the CPU because that's the priority due to the need for developers to port 360/PS3 games over. But that does not mean they won't provide a path to make it work on the GPU later.

You come here saying the reason it's on the CPU was xxx, but you base that on nothing released by Havok.

For the one thousandth time: a PSU is rated by its capability to provide power, i.e. a fixed voltage and up to a certain current (normally, unless it's multiple voltages and multiple currents, but that's not the case here). That's what its 'power' is (in Watts). How efficient it is, i.e. how much it draws from the grid while providing its output can only be measured by putting a watt-meter between the PSU and the grid socket. But if a PSU is rated at 75W that's what it can deliver (unless it's a scam). Yes, normally a PSU's efficiency will drop with reaching the max rated output, but that's and entirely different subject from the one of 'how much W a PSU can deliver'.

GAF can be a really funny place sometimes.

I don't remember seeing a pic, but we got an eyewitness testimony, and it said 75W.

That's what I had originally said

blue, if the PSU is rated 75 W, and Iwata's comments are correct, and the WiiU can actually draw up to 75 W with everything enabled, then your rating doesn't follow the law given by Absolute Bastard:

[...]a PSU's efficiency will drop with reaching the max rated output, but that's an entirely different subject from the one of 'how much W a PSU can deliver'.

I don't remember seeing a pic, but we got an eyewitness testimony, and it said 75W.

The PSU you saw was definitely a scam/adapted to a low power dev kit unit.

PSU stands for Power Supply Unit, the rating is what it can supply. For example 600 watt power supply unit supplies up to 600 watts. Efficiency affects what it draws from the wall to achieve that supply.

Typical usage is generally no more than 60-70% of rated capacity. Meaning if the systems needs 50w for typical heavy load it will have a roughly 75w PSU, if the sytems needs 75w it will have roughly 110w PSU.

I Stalk Alone

Member

Well that "small" kits had a big issue with heat problems, basically the gpu was limited to 50% of performance because if you try to get more then it freezes.

"WiiU, when it gets hot it freezes"

PSU stands for Power Supply Unit, the rating is what it can supply. For example 600 watt power supply unit supplies up to 600 watts. Efficiency affects what it draws from the wall to achieve that supply.

Also there's always a sizeable safety margin involved, pushing the PSU close to it limits for longer periods can be very bad. Typical usage is generally no more than 60-70% of rated capacity. Meaning if the systems needs 50w for typical heavy load it will have a roughly 75w PSU, if the sytems needs 75w it will have roughly 110w PSU.

Right. So to me, if Iwata was saying the system requires 75w max, the psu would need to be drawing 120w (assuming 60% efficiency). That's the question though: is that what he was saying.

Seems to confirm that Nintendo had a clear view of the form factor and how much power it wanted for the Wii U and then tried to reduce heat exhaust and manufacturing costs.

Well that "small" kits had a big issue with heat problems, basically the gpu was limited to 50% of performance because if you try to get more then it freezes.

Nostremitus

Member

Ok, i've seen a lot of people referencing Iwata. Here is what he said.

The Wii U is rated at 75 watts of electrical consumption.

Please understand that this electrical consumption rating is measured at the maximum utilization of all functionality, not just of the Wii U console itself, but also the power provided to accessories connected via USB ports.

However, during normal gameplay, that electrical consumption rating won't be reached.

Depending on the game being played and the accessories connected, roughly 40 watts of electrical consumption could be considered realistic.

This energy-saving specification has made this compact form-factor possible.

Note, in this instance he was touting how efficient the system was, so he was giving the lowest ballpark figure.

If the Wii U is rated at 75W, I don't see why the PSU wouldn't be 110w. I know, I know, the demo units produced about a year and a half or more ago had 75w PSUs, i.e. not indicative of the final design.

I guess what I'm getting at is that we don't know anything other than Iwata saying that the WiiU can consume 75W under full load. He never mentioned the PSU, anyone who says otherwise are fabricating a story to further their agenda. Why someone would feel the necessity to have said agenda is beyond me.

Hell, the Wii U could be able to disable unused cores. That would explain how a multicore system is 99.9% BC. Could also explain how "depending on the game" "40w could be realistic."

My two cents, but they seem lucid to me...

Edit: Thank you Cheesemeister

I'm pointing out that if the "placeholder" was an RV770 and you can't use it at 100%, it is useless to know the model or its theoretical power/FLOPs. You need a stable machine, so maybe you need to lower the specs/clocks, etc. to have it.

(All of this is speculation of course)

Yes that would fit in the power consumption. RV770 is 960 GFLOPs @ 110w. The RV740 is the 40nm version and this is where I got the performance per watt of 12 GFLOPs/w. If the dev kits ran at half this because of overheating then you have a good target.

25w = 300 GFLOPs

30w = 360 GFLOPs

35w = 420 GFLOPs

300-420 GFLOPs is in the ballpark for the Wii U GPU.

But this does explain a lot about how we got the sky-high GFLOP rating for the GPU. People took the RV740 at 80w and then said, well, if they move it from 40nm to 32nm then we can get over 1 TFLOP at 80w. When the whole time this RV770 was running at half speed in the dev kits.

edited for correct part numbers
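The wattage table in the post above follows from a single ratio. A minimal Python sketch, assuming the poster's 12 GFLOPs per watt figure (the 25-35 W GPU budgets are likewise the poster's guesses, not measured numbers):

```python
GFLOPS_PER_WATT = 12  # assumption: the poster's RV740-derived ratio


def gflops_at(gpu_watts, gflops_per_watt=GFLOPS_PER_WATT):
    """Estimated peak GFLOPs for a given GPU power budget."""
    return gpu_watts * gflops_per_watt


for watts in (25, 30, 35):
    print(f"{watts} W = {gflops_at(watts)} GFLOPs")
```

This reproduces the 300, 360 and 420 GFLOPs rows; the estimate is only as good as the assumed ratio and power budget.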

THE:MILKMAN

Member

blue, if the PSU is rated 75 W and that Iwata's comments are correct and that WiiU can actually draw up to 75 W when everything enabled, then your rating doesn't follow the law given by Absolute Bastard:

The PSU you saw was definitely a scam/adapted to a low power dev kit unit.

The problem as I see it is that Nintendo and Sony like to have PSUs that run at their most efficient ~50% load when playing a game. Which explains the 75W/40W for Nintendo and 190W/70W for Sony.

Not every company does the same and it becomes really confusing.
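For reference, the load fractions implied by the two pairs quoted above can be worked out directly. This Python sketch uses only the numbers in the post and treats the first figure of each pair as the PSU rating and the second as typical gameplay draw, which is an assumption about what the poster meant.

```python
def load_fraction(typical_watts, rated_watts):
    """Typical draw as a fraction of the PSU's rated output."""
    return typical_watts / rated_watts


# (rated W, typical W) pairs as quoted in the post; labels are assumptions
pairs = {"Nintendo": (75, 40), "Sony": (190, 70)}

for maker, (rated, typical) in pairs.items():
    print(f"{maker}: {load_fraction(typical, rated):.0%} of rated output")
```

The Nintendo pair lands near 53%, while the Sony pair comes out closer to 37%, so the "~50%" description fits the first pair better than the second.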

plagiarize

Banned

Where did this come from? I have not seen one statement that backs this up. No where does havok said they put this on the cpu because its needed to port ps360 games.

You come here saying well the reason was xxx why it on the cpu but based that on nothing released by havok.

is there a compute shader version of havok yet? i honestly don't know the answer to this, but i do know for a fact that havok has been running on power pc processors for quite a while in software.

there is probably hardly any work required to port havok to the Wii U's CPU, if there is any work at all. porting havok to use compute shaders is going to be a lot of work. it hasn't even happened on PCs yet, to my knowledge.

my graphics card could eat havok alive, yet all those game developers choose to run it on my processor. it must be because my graphics card is crap right?

or, you know, the CPU runs it without any trouble, so why completely rewrite the thing to run using compute shaders.

Ok, i've seen a lot of people referencing Iwata. Here is what he said.

Note, in this instance he was touting how efficient the system was, so he was giving the lowest ballpark figure.

If the Wii U is rated at 75W, I don't see why the PSU wouldn't be 110w. I know, I know, the demo units produced about a year and a half or more ago had 75w PSU's. ei, not indicative of the final design.

I guess what I'm getting at is that we don't know anything other than Iwata saying that the WiiU can consume 75W under full load. He never mentioned the PSU, anyone who says otherwise are fabricating a story to further their agenda. Why someone would feel the necessity to have said agenda is beyond me.

Hell, the Wii U could be able to disable unused cores. That would explain how a multicore system is 99.9% BC. Could also explain how "depending on the game" "40w could be realistic."

My two cents, but they seem lucid to me...

I like you.

So how does using USB increase the 40W for running the console? This is why you dont use the max rating on the psu. You leave some room for someone that plugs in 4 usb port for charging. The psu can supply up to 75w but again that would be a terrible design product that would fail a lot.

Because Iwata said that 40w is the internal hardware, which does not include USB. That's why using the USB ports would increase the power usage that he mentioned.

Cosmonaut X

Member

So, reading what Iwata said in that quote, why is everyone talking about a 75W PSU (aside from the pic/witness report from a while back)?

He seems to be clearly stating that the Wii U will draw 75W max - under load, with all USB slots in use - but that 40W would be average, depending upon game being played and accessories used.

So, unless I'm misreading, we're not talking about it running off a 75W PSU, but rather a larger PSU and drawing up to 75W max (with that being in the 60-ish% range "safe" draw people have been talking about).

Or am I completely misreading?

EDIT:

So, if the 75W is the max draw from the Wii U, the PSU would be rated at something more like 110W, surely? That gives your safety margin and supplies the machine with its max draw under load and with all USB ports powered.

Let me correct you: Iwata said that the Wii U can draw up to 75 W. A PSU rated at 75 W would have to be 100% efficient at peak power usage, and this is not possible.

The problem as I see it is that Nintendo and Sony like to have PSUs that run at their most efficient ~50% load when playing a game. Which explains the 75W/40W for Nintendo and 190W/70W for Sony.

Not every company does the same and it becomes really confusing.

Thus, the PSU must be rated higher in any case. But let's say that for the Wii U, 60 W is the normal power draw. In agreement with your argument, the PSU should then be rated at 120 W (60 W being 50% of 120 W).
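For anyone following the arithmetic in this exchange, here is a rough sketch of the sizing rule being argued about. It assumes the rating is the PSU's output rating and that the "target load" is simply draw divided by rating. The function names are made up for illustration, and none of this is a confirmed Wii U spec.

```python
def psu_rating_for(draw_w, target_load):
    """Output rating (W) at which `draw_w` sits at `target_load` of the rating."""
    return draw_w / target_load


def load_fraction(draw_w, rating_w):
    """Fraction of the PSU's output rating that `draw_w` represents."""
    return draw_w / rating_w


# 60 W of normal draw at a 50% target load implies a 120 W rating.
print(psu_rating_for(60, 0.5))

# 40 W of normal draw at 50% implies 80 W, close to the 75 W figure.
print(psu_rating_for(40, 0.5))

# A 75 W max draw on a 110 W PSU is roughly the "60-ish%" range.
print(round(load_fraction(75, 110), 2))
```

Whether Nintendo actually sizes its PSUs this way is exactly what the thread is guessing at; the sketch only shows that the 120 W and 110 W figures follow from the stated assumptions.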

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

The problem with PSUs being used close to their max ratings is that due to their efficiency dropping, the amount of heat generated as a byproduct increases. Heat shortens the lifespan of electronic/electrical parts, so you can safely claim that a PSU that is kept closer to its max rating will not last as long as one kept at 50% of its max rating. But how long a PSU can last while at its max rating is entirely dependent on a bunch of factors: what currents? what voltages? what components? Theoretically, nothing stops me from manufacturing a PSU that is physically capable of 100W, but artificially limiting it to 50W (by limiting its max current). Hey look, I just made an ultra durable PSU that can be pushed to its max for as long as I please!

blu, if the PSU is rated at 75 W, and Iwata's comments are correct and the Wii U can actually draw up to 75 W with everything enabled, then your rating doesn't follow the law given by Absolute Bastard.

bgassassin

Member

Yes, that would fit in the power consumption. RV770 is 960 GFLOPS @ 80W. This is where I got the performance per watt of 12 GFLOPS/W. If the dev kits ran at half this because of overheating, then you have a good target.

25W = 300 GFLOPS

30W = 360 GFLOPS

35W = 420 GFLOPS

300-420 GFLOPS is in the ballpark for the Wii U GPU.
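The numbers above come from a straight linear scaling of the RV770 figure, which can be sketched like this. It assumes performance per watt stays constant across power budgets and process nodes, which is the poster's assumption and not an established fact; `estimate_gflops` is a name made up for illustration.

```python
RV770_GFLOPS = 960  # figure quoted in the post
RV770_WATTS = 80    # figure quoted in the post

# 960 GFLOPS / 80 W = 12 GFLOPS per watt
GFLOPS_PER_WATT = RV770_GFLOPS / RV770_WATTS


def estimate_gflops(budget_w, gflops_per_w=GFLOPS_PER_WATT):
    """Linear estimate: assumes perf/W does not change with the power budget."""
    return budget_w * gflops_per_w


for watts in (25, 30, 35):
    print(f"{watts}W = {estimate_gflops(watts):.0f} GFLOPS")
```

A newer process or a different architecture would change `gflops_per_w`, so the estimate is only as good as that one constant.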

But this does explain a lot about how we got the sky-high GFLOPS rating for the GPU. People took the RV770 at 80W and then said, well, if they move it from 40nm to 32nm then we can get over 1 TFLOP at 80W. When the whole time this RV770 was running at half speed in the dev kits.

Do you see the problem with this? You're doing this based on a 55nm part. We've known for a while now that it was underclocked. We have an idea of what it was downclocked to. Why do you think I've been talking about 600 GFLOPS for the final? No one said it would be 1TF if they just used a smaller process. You keep harping on the R700 and treat it like all the limitations won't be addressed. Like I said back in that post I linked, you treat things in absolutes. And it's hypocritical to sit there and say "people just make things up to fit whatever there point is...." when you've been one of, if not the worst at doing this.
