64bitmodels

Reverse groomer.

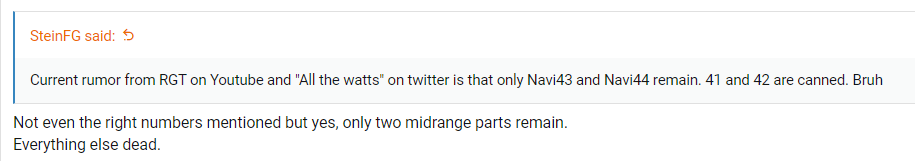

Limiting your cards to midrange doesn't mean shit when Intel also has midrange cards at cheaper prices and with better tech. Even Apple is doing upscaling and ray tracing better than AMD, and it simply comes down to the lack of AI acceleration in AMD's cards. Intel will be dangerous in the midrange market, and AMD has more market share to lose to Intel than Nvidia does.

High-end cards are niche, roughly 1 to 2% of the userbase. That's not how Nvidia takes its chunk of the market share either; everything plays out in the xx60 and xx70 range.

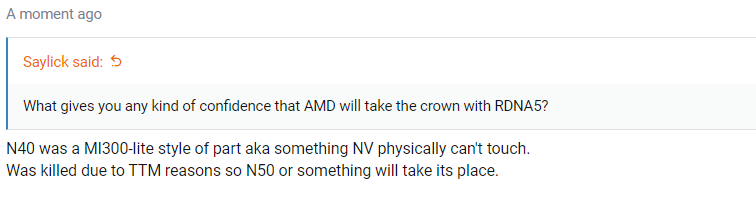

They probably can’t compete against Nvidia's high end. Next gen, with all the AI chip design, AI foundry optimization, MCM and so on, Nvidia probably has a monster, but an expensive one. For all the R&D it would cost AMD to compete against it, the result would barely break 1%. Just look at the 7900 XTX: it only just now broke into the Steam Hardware Survey after all this time.

So concentrate on a midrange killer. That’s also what Intel is doing, strategy-wise.

What AMD needs to do is simply what Nvidia has done for the past 5 years: lean into software more and add more AI cores to their products. Then and only then will they be competitive.