Aizu_Itsuko

Member

I want to thank Chipworks as well for helping us shed some light on the Wii U's hardware.

Really appreciated.

So it's a "gimped" OoOE design in Broadway?

Does Espresso use the same OoOE design? If we can even figure this out somehow...

There's nothing gimped about it; it's just small-scale. The 750 does not have as many units as the 970, so there's no point in it resolving 5 ops for dispatch, with all the associated workload. But the 750's design performs notably better than an in-order design, at a minimal transistor premium.
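To make the in-order vs. out-of-order point concrete, here is a toy Python scheduler. It is a hypothetical illustration, not a model of the 750's actual pipeline: it issues one instruction per cycle, gives the out-of-order mode an idealized unlimited window, and describes each instruction only as (dependencies, latency). It shows how an out-of-order core can keep issuing independent work while a slow load is outstanding, where an in-order core stalls behind it.

```python
from typing import List, Sequence, Tuple

# Each instruction: (indices of earlier instructions it depends on, result latency in cycles).
Instr = Tuple[Sequence[int], int]


def total_cycles(instrs: List[Instr], out_of_order: bool) -> int:
    """Single-issue toy scheduler. Dependencies must point to earlier instructions.

    In-order: only the oldest unissued instruction may issue; if it is waiting on
    an operand, everything behind it waits too. Out-of-order (idealized, unlimited
    window): any unissued instruction whose operands are ready may issue.
    Returns the cycle at which the last result becomes available.
    """
    ready_at = {}                       # instruction index -> cycle its result is ready
    pending = list(range(len(instrs)))  # unissued instructions, in program order
    cycle = 0
    while pending:
        candidates = pending if out_of_order else pending[:1]
        for i in candidates:
            deps, latency = instrs[i]
            if all(d in ready_at and ready_at[d] <= cycle for d in deps):
                ready_at[i] = cycle + latency
                pending.remove(i)       # safe: we stop scanning right after
                break
        cycle += 1
    return max(ready_at.values(), default=0)


# Hypothetical program: a slow load, one op that needs its result, two independent ops.
program: List[Instr] = [
    ([], 5),   # 0: load with an assumed 5-cycle latency
    ([0], 1),  # 1: uses the load result
    ([], 1),   # 2: independent
    ([], 1),   # 3: independent
]

if __name__ == "__main__":
    print("in-order:", total_cycles(program, out_of_order=False))     # 8
    print("out-of-order:", total_cycles(program, out_of_order=True))  # 6
```

The whole gap comes from instructions 2 and 3 slipping into the load's shadow. A real core's window size, issue width, and completion rules would change the numbers.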

If the CPU is truly an FX and the middle core is a more boosted core, does that mean it's dedicated to games? Definitely better than the Xbox 360.

The 360 uses 1 core (2 threads) for games, 1 core for sound and 1 core for the OS. I am satisfied with the sound of that... A better GPU and a better CPU; it only needs different coding and more attention from developers. The Wii U CPU (750 family) may have single-threaded cores (no hardware multithreading), but they perform more instructions per clock than any other... I think the 360 is about 5 instructions per clock, but Broadway is about 7+????

edit: How many instructions per clock does a 750FX give? Broadway gives 7+ (7.2, if I am right).

Depends on what your definition of instructions per clock is. By the common convention for IPC, both Xenon and Broadway are 2 IPC (per core).
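For reference, the usual IPC convention is retired instructions divided by elapsed clock cycles, and it is bounded by how many instructions a core can dispatch and complete per cycle. A minimal Python sketch of that arithmetic follows; the clock value in the usage line is a hypothetical placeholder, not a measured Wii U or Xbox 360 figure.

```python
def ipc(instructions_retired: int, cycles: int) -> float:
    """Instructions per clock as usually defined: retired instructions / elapsed cycles."""
    if cycles <= 0:
        raise ValueError("cycles must be positive")
    return instructions_retired / cycles


def peak_instructions_per_second(issue_width: int, clock_hz: float, cores: int = 1) -> float:
    """Upper bound on throughput if every core sustains its full issue width every cycle."""
    return issue_width * clock_hz * cores


if __name__ == "__main__":
    print(ipc(2_000, 1_000))  # a core retiring 2000 instructions in 1000 cycles
    # Hypothetical 1 GHz clock, 2-wide cores, 3 cores: an upper bound, never sustained in practice.
    print(peak_instructions_per_second(2, 1e9, cores=3))
```

Because real code stalls on cache misses and dependencies, measured IPC sits below the issue width, and comparing two CPUs also needs their clock speeds.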

I'm not doubting you, but you should doubt that story.

I transferred the opinion of a Wii U member. It's not mine, but he also said that the CPU was ready for Apple, but Apple abandoned it, allied with Intel, and created the "i Core".

IBM was planning a PPC750 to replace the disastrous 64-bit PPC970 (G4s/G5s, what would eventually be the basis of both Xenon and Cell).

Also entertaining: he thinks Intel's *supposed* alliance with Apple created the iCore (i3/i5/i7)?

Vx never saw the light of day because Apple ended their partnership with IBM over the piss-poor performance of the 970 and forged an alliance with Intel, creating the iCore.

I've read in an article that even Skyrim uses 1 core. I know that because it took almost 6 months until they patched it to perform well on PC. Also, if you measure 360 game ports on PC, they always use 1 core.

The Xbox 360 can use the OS during gaming by pressing the middle green button. Also, it's not only the game sound but also cross-game chat, etc. It has many features, like downloading in the background.

This is from AnandTech: http://www.anandtech.com/show/1719/6

With Microsoft themselves telling us not to expect more than one or two threads of execution to be dedicated to game code, will the remaining two cores of the Xenon go unused for the first year or two of the Xbox 360's existence? While the remaining cores won't directly be used for game performance acceleration, they won't remain idle: enter the Xbox 360's helper threads.

edit: I hope this helps. It also says that, due to the low DVD capacity, the Xbox uses 1 thread for decompressing data.

So far, besides pointing out the probable cache locations, all I've seen on this thread is people trying to defend the 360 CPU, insist the Wii U's is just 3 Broadway CPUs taped together, and dismiss any positive performance allotted to the PPC750 architecture.

When are we going to actually start doing the Espresso analysis?

Also, I've been wondering about the performance benefit of the CPU and GPU being on the same die. How does that work? What exactly is the benefit compared to having them separate and independent?

It's a good question, though to clarify, you mean the CPU and GPU on the same MCM (multi-chip module package); they're not on the same die (which would be the same piece of silicon). Apple's A[x] series, on the other hand, has the CPU and GPU on the same die.

Seems there are far fewer unknowns here. It isn't some hyper-customized PPC750, aside from the cache customizations.

Seems like a very good fit for what Nintendo was trying to do. Along with the well-designed memory subsystem and modern GPU, the system should, as Criterion said, punch above its weight. That doesn't mean we should expect miracles, but we should get some very impressive-looking games.

Now, it's just up to Nintendo to reverse the staggering loss in sales momentum. We need games and a new marketing plan ASAP, otherwise it could be a while before Nintendo returns to profitability.

This is completely unrelated, but how weird is it to be a fan of an industry where the two platforms that lost a combined 8 billion dollars on hardware over the last 8 years are considered to have the most positive momentum? Gaming as an industry could be in for major tremors over the next few years.

Could be, I thought about it before writing. But... I don't think so.

My reasoning was that Broadway (90 nm) is manufactured on a process half the size of Gekko's 180 nm, yet it sits at roughly half the die area instead of a fourth. But... it's also less than half by 2 mm² or so and seems to have added things (and the size proportions of the cache banks changed in there).

I was probably oversimplifying it, but if anyone knows how to go about it, please do. All in all, though, it feels fishy: if each of those cores is the equivalent of a Gekko/Broadway (or even just the middle one), features 4 times the cache and has that left-side block thing going on, I reckon it's kind of small.

Hey, do you know which one is the video decoder core?

I work on the software for PowerVR VXD and it'd be cool to see it actually on-die.

I don't know for sure, but here's where it is on the Tegra 2. That obviously doesn't use a PowerVR GPU, but the video decoder is a separate block there.

In theory, going from 90 nm to 45 nm would make it a quarter of its original size. But in reality dies never shrink perfectly, especially the logic parts (memory shrinks much more linearly and not too far off the theory, I think). Gekko was 43 mm², so a perfect shrink from 180 nm to 90 nm should have meant 10.75 mm² for Broadway, when in reality it's 18.9 mm². Though, as you say, Broadway wasn't just Gekko on a 90 nm process; it did have some extra transistors in there.

I see.
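The ideal-shrink arithmetic above (area scales with the square of the feature size) can be checked in a few lines of Python. The 43 mm² and 18.9 mm² figures are the ones quoted in the thread; the function is a textbook idealization that real logic rarely reaches.

```python
def ideal_shrink_area(area_mm2: float, old_nm: float, new_nm: float) -> float:
    """Ideal die area after a process shrink: area scales with (new/old) feature size squared."""
    return area_mm2 * (new_nm / old_nm) ** 2


GEKKO_MM2 = 43.0     # figure quoted in the thread (180 nm)
BROADWAY_MM2 = 18.9  # figure quoted in the thread (90 nm)

ideal_broadway = ideal_shrink_area(GEKKO_MM2, 180, 90)  # a quarter of the area
actual_fraction = BROADWAY_MM2 / GEKKO_MM2              # what was actually achieved

if __name__ == "__main__":
    print(f"ideal shrink: {ideal_broadway:.2f} mm^2")
    print(f"actual Broadway/Gekko area ratio: {actual_fraction:.2f} (ideal would be 0.25)")
```

The gap between the two ratios is the combined effect of imperfect logic scaling and the extra transistors Broadway added.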

While Espresso does have 12x as much L2 cache as Broadway (4x per core on average, as you say), it's using eDRAM, which takes far less space than the SRAM used for Broadway's L2 cache. So you're only actually looking at about 4x as much silicon for the 3 MB in Espresso as was used for the 256 KB cache in Broadway. Since memory shrinks very close to 4x going from 90 nm to 45 nm, the L2 caches of both CPUs should take up a very similar die area.

Yes, the change in sizes really confused me at first; I thought the L2 tags were the SRAM and the left-side blocks were something else. Definitely overcomplicated things.
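The cache-area argument is a chain of ratios, sketched below in Python. The 256 KB and 3 MB capacities come from the thread. The roughly 3x density advantage of eDRAM over SRAM is an assumption (it is what the "about 4x" figure implies), and the 4x memory shrink from 90 nm to 45 nm is the idealized value used in the post.

```python
BROADWAY_L2_KB = 256       # Broadway L2, as quoted in the thread
ESPRESSO_L2_KB = 3 * 1024  # Espresso L2 total across three cores, as quoted in the thread


def relative_l2_area(edram_density_vs_sram: float, shrink_factor: float) -> float:
    """Espresso L2 area divided by Broadway L2 area.

    edram_density_vs_sram: assumed bits-per-area advantage of eDRAM over SRAM.
    shrink_factor: assumed area scaling of memory arrays from 90 nm to 45 nm.
    """
    capacity_ratio = ESPRESSO_L2_KB / BROADWAY_L2_KB         # 12x the bits
    same_node_area = capacity_ratio / edram_density_vs_sram  # about 4x if eDRAM is ~3x denser
    return same_node_area * shrink_factor


if __name__ == "__main__":
    # With the assumed 3x density and an ideal (45/90)^2 shrink, the ratio comes out near 1.
    print(relative_l2_area(3.0, (45 / 90) ** 2))
```

Changing either assumed factor moves the result proportionally, which is why a real eDRAM density figure would settle the question better than the die shot alone.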

Héctor Martín @marcan42

Sigh, another tweet-to-neogaf: lostinblue, your gekko die shot isn't a gekko, it's an old PPC750. External L2 cache, no paired singles.

What's the orientation of the CPU relative to the GPU?

I'm thinking the CPU has direct access to the weird block of GPU eRAM (which is why it's shut off for developers to use: it's acting as an extension of the CPU).

I believe marcan when he says that it's currently completely off-limits to developers. It's definitely in there primarily for BC (backwards compatibility). Perhaps Nintendo is also using it as a cache for their own system features? It's always possible they'll open it up to devs in the future.

I hope it is opened up later, but I suppose it's possible it's only physically wired to the backwards compatibility bits. Extra bandwidth/cache levels for the GPU or CPU to work with can't be a bad thing, and the two pools that are unavailable are presumably faster than the 32MB (one for being SRAM, one for being more tightly packed eDRAM which means lower latency).

I'm actually more interested in knowing what the Wii can do. Maybe we can finally get some real specs, like polygon count and CPU speed, for Broadway and Hollywood.

Thanks, credit for that one goes to marcan.

You are making some broad generalizations that really have no foundations.

First, you cannot use PC processor usage as any indication of what the Xbox cores are doing.

Second, you are confusing a core's hardware "thread" with a task thread. You can have many times more task threads than hardware threads. In fact, hit Ctrl+Shift+Esc and look at all the threads your Windows box has going at any given time. It's no different on the 360 or any modern operating system (embedded or not). The CPU's threads are just what the CPU can physically execute at a given time; a scheduler multiplexes the many software threads onto the physical ones. If you have something critical, you *may* want to dedicate a physical thread/core to its duties, but it's not a requirement. MS, I believe, does not require the developer to give up a core to the OS; Sony does keep one SPE for OS duties, though.

*edit* I just scanned the attached article. I don't know how relevant it is to 360 games of today. It was written at the launch of the 360, before even PCs were heavily multithreaded. An interesting read, though.
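The point about software threads versus hardware threads can be illustrated with a short Python sketch: it starts many more threads than the machine has logical CPUs, and the OS scheduler runs them all to completion. This is ordinary desktop Python, not Xbox 360 code, and CPython's global interpreter lock means these threads don't execute Python bytecode in parallel anyway; the only point is that the thread count isn't limited by the hardware thread count.

```python
import os
import threading
from typing import List


def run_many_threads(n_tasks: int) -> List[int]:
    """Start n_tasks software threads and let the OS scheduler multiplex them
    onto however many hardware threads this machine has."""
    results = [0] * n_tasks

    def work(i: int) -> None:
        # Trivial busy work; each thread writes only to its own slot.
        results[i] = sum(range(i * 1000))

    threads = [threading.Thread(target=work, args=(i,)) for i in range(n_tasks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    hw = os.cpu_count() or 1
    tasks = hw * 8  # deliberately far more software threads than hardware threads
    run_many_threads(tasks)
    print(f"{tasks} software threads finished on {hw} logical CPUs")
```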

I am not comparing any PC with the 360! It's old news that the 360 uses 1 core (2 threads) for its games; why don't you search? Maybe new releases like Crysis 3 use 3 threads, but I find that extreme. Here is also a small example with the Xbox 720: we have all read the rumors that it comes with 8 cores, but the new Kinect will use most of those cores!!! So the 360 has lots of things to maintain at the same time; it has Kinect too, and that drains resources. That's an example.

Let's focus on the Wii U CPU now; I will wait to see the analysis.

The Xbox 360's operating system does not manage processor affinity. All threads run on the same processor as their parent unless otherwise stated. This basically means that you, the programmer, are responsible for managing the processor affinity of your threads, and therefore your application's overall performance.
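For comparison, Linux shares one piece of that behavior: a new thread starts with its creator's CPU affinity mask, and the program can change it explicitly. The sketch below uses Python's `os.sched_setaffinity`/`os.sched_getaffinity` (Linux only), not the Xbox 360 SDK, whose API is not shown here. Unlike the quoted 360 description, Linux still load-balances threads across whatever CPUs the mask allows; pinning is opt-in.

```python
import os
import threading


def child_inherits_affinity() -> bool:
    """Pin the calling thread to a single CPU, start a child thread, and check
    that the child begins with the same affinity mask. Linux-only sketch."""
    if not hasattr(os, "sched_setaffinity"):
        raise RuntimeError("os.sched_setaffinity is not available on this platform")
    original = os.sched_getaffinity(0)
    target = {min(original)}  # a CPU this process is already allowed to use
    seen = {}

    def child() -> None:
        # On Linux, pid 0 refers to the calling thread, so this reads the child's own mask.
        seen["mask"] = os.sched_getaffinity(0)

    try:
        os.sched_setaffinity(0, target)  # pin only the calling (parent) thread
        t = threading.Thread(target=child)
        t.start()
        t.join()
    finally:
        os.sched_setaffinity(0, original)  # restore the parent's original mask
    return seen["mask"] == target


if __name__ == "__main__" and hasattr(os, "sched_setaffinity"):
    print("child inherited parent's affinity:", child_inherits_affinity())
```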

So you're saying that Xbox games use about 3 cores, because the sound is real-time and it's not only the sound that runs alongside the game? I said that about the most modern games, but it's not a certain thing. And you know Microsoft did that from the beginning because the CPU uses in-order execution, which is much slower. So a Wii U core (let's say an FX-model core; I will wait for the GAF analysis) can easily perform like 2+ threads of the 360, with the appropriate coding.

If I am wrong, please explain why.

edit: I am asking because I am reading and learning; I am not a spec guy.

Actually, I suspected as much; it's one of the reasons why I left the source linked in.

Watch out man, he's gonna come in and sarcasm your ass!

I think he just means he's sick of having to tweet things to GAF instead of being able to actually post here.

Yeah, I replied there (first time posting on Twitter), and the character limit sure isn't funny.

Where exactly are the processor's 3 cores?

Also, are you certain that the entirety of the squared area to the right in the photo is pure cache?

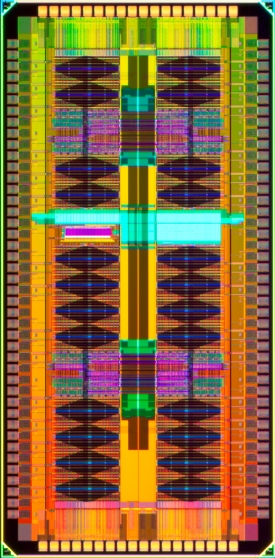

https://lh5.googleusercontent.com/-...JI/AAAAAAAAAdw/FyuvSjP1Phk/s640/Espresso2.png

If you're talking about the SRAM arrays on the right-most part of the die, those are all L1: 32 KB data + 32 KB instruction caches, per core.

Here's a quick diagram of the die layout. I'll do a more detailed one for one of the cores now.

Edit: Of course, the core numbers are my own assumptions, but we know Core 1 has the extra cache, and Cores 0 and 2 are identical, so it shouldn't matter much.

Is that right? I thought the solidly colored smaller blocks to the right of each core and to the left of the middle core were eDRAM. I don't know what the far right is, but it doesn't look like any eDRAM I've seen before.

Then again, the whole shot is kind of weird-looking, almost as if it were taken through a layer; it seems hazier than every other Chipworks shot.