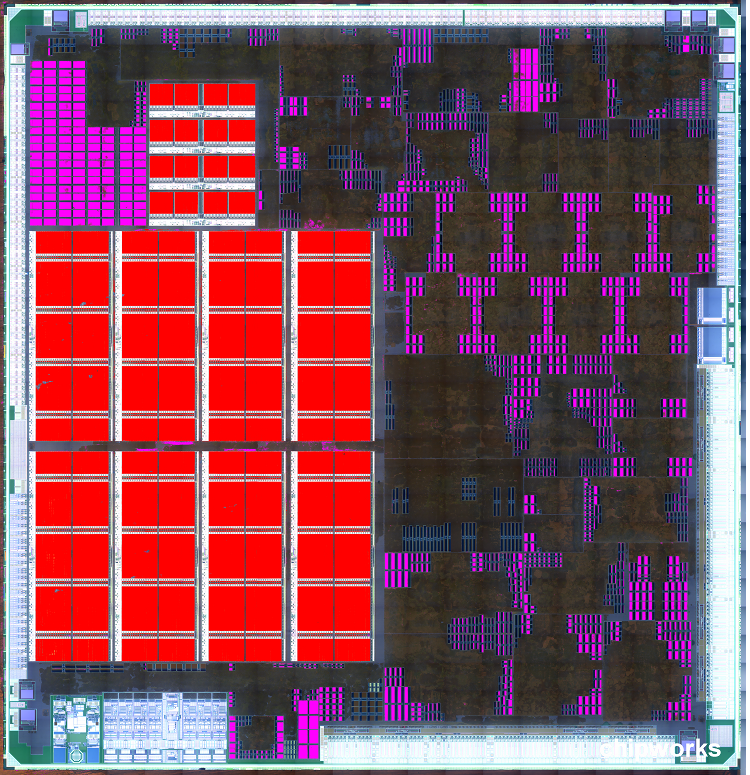

WiiU "Latte" GPU Die Photo - GPU Feature Set And Power Analysis

- Thread starter Thraktor

- Start date

- Status

- Not open for further replies.

I agree that this should be added to the OP. Looks like ScepticMatt put some time into it.

Also, what is the orientation of this GPU image with respect to the CPU?

my attempt at coloring:

red = eDRAM

pink = L1/L2 cache

black = logic

light blue = Audio DSP

white = IO

blue = power connectors?

green = ARM core / PCB

So, are we sure about the 32+4 eDRAM configuration? When I saw it I thought that it would be more like 28+4 because I thought it was confirmed that there is 32 MBs of eDRAM in total on that die.

The larger pool of eDRAM is probably the primary pool (probably what the 32 MB number was referring to) and the smaller pool of eDRAM along with the SRAM are probably secondary or 'helper' pools, used both for emulation and further bandwidth assistance (just guessing here).

In regards to the Wii U's max power draw, is it not possible that system can draw a higher constant wattage but no game has yet demanded it?

Eurogamer used FIFA 13 and Mass Effect 3 for their power consumption benchmarking. I own FIFA and it's not a demanding game by any stretch; I doubt it even pushes the Xbox 360 or PS3. As for Mass Effect, while certainly more demanding, I'd have to wonder how well optimised the port was and whether it was taking full advantage of the shaders and TMUs and fully loading up the GPU.

Would it be out of the question to say the entire system could pull a constant 40W or even 45W if there was a game that loaded it?

Looking at the way modern GPUs scale power, there's a massive difference in power consumption between a fully loaded ATI 4850 and one under only moderate load.

gamethriller

Banned

COOL, I'm from 954 too... Can everyone calm down until we get a definitive count on the flops? (zombie's did this thread no favors :/)

In regards to the Wii U's max power draw, is it not possible that system can draw a higher constant wattage but no game has yet demanded it?

i'm curious as well. it feels odd that nintendo would target a power usage so low. 45w is low, but 30~?

Chronos24

Member

I'm having a real hard time wrapping my head around that kind of performance at that power draw.

Is it honestly that surprising now though? I know this is a totally far-fetched comparison, but take a look at cars today: higher horsepower but better gas mileage. Electronic devices, including the Wii U, get more and more efficient and less power hungry all the time. I just find it surprising how many people find it so hard to believe that we have a decent machine here that sips electricity. Whether the Wii U is just barely "better" than PS360 or way better, if it's drawing a small amount of power then kudos to Nintendo for at least doing that.

all of this and we STILL have no idea what the gpu is and what it can do, i think the reality is this GPU is VERY custom.

And that fact, which has now been proven, is important enough.

Now we need to find out how custom the CPU is.

JohnnySasaki86

Member

Yeah you're right, the GS is just a rasterizer, still I thought the Wii U CPU was similar to one of the cores in the 360 CPU....if it's 12 Gigaflops it's much weaker...although of course there's a different design philosophy behind the whole system (GPGPU).

I thought Wii U couldn't do GPGPU?

Zoramon089

Banned

just a rasterizer, still I thought the Wii U CPU was similar to one of the cores in the 360 CPU....if it's 12 Gigaflops it's much weaker...although of course there's a different design philosophy behind the whole system (GPGPU).

Much weaker? A Xenon core is only 20% faster than a completely unmodified Broadway, and the Wii U is using 3 cores derived from it that, if the GPU is any indication, are surely heavily modified.

shinra-bansho

Member

Xenos can do GPGPU iirc.

Much weaker? A xenon core is only 20% faster than a completely unmodified Broadway and the Wii U is using 3 derivative cores of it that, if the GPU is any indication, are surely heavily modified

Using FLOPS as a metric, Xenon was something like 90 GFLOPS iirc.

In regards to the Wii U's max power draw, is it not possible that system can draw a higher constant wattage but no game has yet demanded it?

They actually ran their library of software - not just FIFA and Mass Effect. They seemed to think it wasn't likely to change in future. Besides, taking the 33W for gaming and then adding peripheral charging etc. brings us close to the max anyway iirc.

Although I can't remember what the conclusion of those discussions was - i.e. 70W PSU = ? max power draw?

There is very likely a window of up to 10W extra that games could potentially push. It's not unusual either, as it happens on titles that really push systems to the metal.

I thought Wii U couldn't do GPGPU?

It was confirmed in September that the Wii U has a GPGPU.

There is very likely a window of up to 10W extra that games could potentially push. It's not unusual either, as it happens on titles that really push systems to the metal.

Yeah i'd share that view Antonz.

With the Xbox 360 and PS3 we certainly saw games that consumed more power than others.

However, all of the tested launch games drew exactly as much, be it Mass Effect, SMBWU, ZombiU, or even the dashboard (which was 1W less, I think). I remember reading a conversation in the other thread last week saying that the difference between 360 games was never beyond +/-10%.

Adding 10W to 30W would be a 33% increase (or 25% of the new 40W total, depending on how you look at it).
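For what it's worth, both percentages are right; they just use different bases. A quick Python sketch, using the round 30W and 10W figures from the posts above (round numbers from the discussion, not measurements). `percent_of` is just an illustrative helper name:

```python
def percent_of(part_w, base_w):
    """Return part_w expressed as a percentage of base_w."""
    return 100.0 * part_w / base_w


baseline_w = 30.0  # round figure for typical draw, as used in the thread
headroom_w = 10.0  # the speculated extra window, not a measured value

# Base = current draw: how much bigger the new figure is than the old one.
increase_over_baseline = percent_of(headroom_w, baseline_w)

# Base = new total: how much of the new 40W figure is the extra 10W.
share_of_new_total = percent_of(headroom_w, baseline_w + headroom_w)

print(f"increase over baseline: {increase_over_baseline:.1f}%")
print(f"share of new total:     {share_of_new_total:.1f}%")
```

Either way, it is well outside the +/-10% spread mentioned for 360 games.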

Hey, I could be wrong, but it'd be very difficult to lock a system down at 32-33W max under every single game.

Also, ZombiU and FIFA, both of which I own, I would not consider demanding games.

As for Mass Effect, I'd again question whether the port was optimised to max out the potential of the Wii U's GPU feature set.

Again, I could be completely wrong, but I wouldn't be surprised if its max was around 40W.

JohnnySasaki86

Member

The ram isn't a bottleneck anymore (actually never was) so try again (you can only make a case for the cpu being a bottleneck of sorts).

DF has speculated that on every port it's the CPU that's doing the bottlenecking. It's got to be: Wii U games' framerates get hit hard whenever there are a lot of enemies on screen compared to PS360. It makes total sense because PS360 are much more CPU-centric consoles compared to Wii U, and therefore they're programmed that way. That causes some port problems for Wii U: they're made to take advantage of PS360's strengths, not Wii U's.

Does anyone know why Proelite was banned?

Thunder Monkey

Banned

It was confirmed in September that the Wii U has a GPGPU.

It's the viability of its usage that is in question.

DreadPirateRoberts

Member

With such a low power draw, is overclocking with a system update plausible (a la PSP)?

Do we have any idea how hot it runs?

Man beyond3d members are on their own planet when it comes to Wii U.

lol everyone can't be in nintendoland.

It's the viability of its usage that is in question.

That was always the question. Tech members knew the Wii U had compute shader support from day one, since we had the base GPU core and DX 10.1 support.

I have yet to hear one dev say they are taking advantage of the GPGPU functions in the GPU.

JohnnySasaki86

Member

Man beyond3d members are on their own planet when it comes to Wii U.

What do you mean? What are they saying about all this new info?

nothing particularly damning it seems, just that we shouldn't put too much hope into the idea of fixed functions bolstering the gpu performance.

Sounds like a good idea. Very reasonable. People should have learned their lesson already. GAF is always hoping for the "special sauce". If it comes, great, but don't count on it; you're setting yourself up for disappointment. Just like when everyone was expecting a 600 GFLOP Wii U GPU. Same goes for Durango special sauce.

Chronos24

Member

I love nintendoland, one of the most honest places you can go. I have ONLY taken Nintendo's word for this console and what it will be able to do. Like I've said before, Iwata is one of the most honest CEOs you will find in the gaming industry. So yeah, I know Wii U will be fine and will create beautiful games. Just can't wait for E3, so all of the numbers stuff will be irrelevant when we see the games the console will be running... just the way Nintendo wants it to be.

This^^. I know that the Durango and orbis are coming as well but unless they pull something out that just blows me away (beyond better graphics and improvements to move and kinect which is pretty much a certainty) then I think nintendo stays a very strong competitor in innovation and games.

Truthfully though... I won't be satisfied with any console or any company until someone gets me to where I'm walking onto the holodeck of the enterprise.

nothing particularly damning it seems, just that we shouldn't put too much hope into the idea of fixed functions bolstering the gpu performance.

Worked Nicely for the GameCube

Wish factor 5 still existed to give WiiU a go.

meaning there are no fixed functions on the chip. This is an SoC, so it houses a lot more than just a GPU.

Basically what they have been saying since the beginning. There is a way to say the console isn't super powerful, but basically they are now saying it's AT BEST 1.5x a 360. Many still believe it's a 360 and nothing better, saying the console overall is a big bottleneck. When is the last console Nintendo made from the ground up that had a significant bottleneck in architecture and design? Basically they are trying to take one aspect of the system and doom the system as a whole due to that one thing. What Nintendo stated in their last Q4 earnings report really put my mind at ease.

You are painting with a pretty broad brush, seeing as a lot of the members in this thread also post on B3D. Pretty sure the guys that started this thread post over there also.

Trevelyan9999

Banned

Well seeing that the Wii U GPU even after this reveal is still much of a mystery (until Fourth Storm returns with more inside info)

At least we know the Wii U can already do this in real time:

StreetsAhead

Member

yeah bayonetta has a chance to be the FIRST game to show what the finalized dev kit is capable of. i mean if thats real time on Wii U thats REALLY good for a so called gimped bottleneck system... according to beyond3d

Both the director and producer confirmed that to be running in real time on Wii U hardware, but it's too dark, too low-quality, and too short to make any definitive statements about the quality of the entire game.

yeah bayonetta has a chance to be the FIRST game to show what the finalized dev kit is capable of. i mean if thats real time on Wii U thats REALLY good for a so called gimped bottleneck system... according to beyond3d

What? You can't see anything in that clip, it's almost completely blacked-out.

Both the director and producer confirmed that to be running in real time on Wii U hardware, but it's too dark, too low-quality, and too short to make any definitive statements about the quality of the entire game.

People keep saying that. Yes, it's a given that I can't sum up the whole game off of that clip. I can, though, look at that clip in a vacuum and say that's REALLY damn good. If the full game is on this level, we really have nothing to worry about.

I do not see any drool from the beast's mouth in the gif. Nor do I see any custom "drool" shader on the GPU in the OP. I want a refund, Iwata.

What? You can't see anything in that clip, it's almost completely blacked-out.

They need to stop rendering realistic Superdome environments. Ray Lewis looks scary in that gif.

What? You can't see anything in that clip, it's almost completely blacked-out.

it looks pretty awesome, though. that's all you need to sell games. it's that easy to fool the consumer.

This is a tech thread, not a defense force thread. Please stop posting GIFs.

What? Stop posting gifs related to the tech being debated...? I think it's relevant. I would rather see these gifs than the "clever" movie gifs.

it's supposed to be dark... I'm looking at the way the scene is rendered and the texture of the monster. I guess I see something most people don't.

I see it as well.

Considering what we are seeing in relation to the supposed "weak cpu", I think we should also factor in whether we think Takeda is a liar or not when he said that "There are people saying that the CPU is weak but that is not true."

Fourth Storm

Member

Man, it's late.

-40nm process confirmed.

Speculation:

I was looking at the geographical location of the blocks and interfaces on the die and then compared against pictures of the naked MCM on the motherboard. It kind of put things in a new light. Currently thinking this:

GP I/O's = interface to CPU.

SERDES/MIPI = interface to HDMI out (the specific use of HDMI for this interface was suggested to me by Chipworks.)

Other high speed interface w/ tank oscillator = interface to Gamepad out (is the oscillator helping to maintain the low latency 60 Hz refresh rate perhaps?)

meaning there is no fixed functions on the chip.

The fuck are you smoking. This is what the one guy said on B3D:

Exophase said: It's fun watching the NeoGAF thread... a lot of people seem to be clinging to this idea that there's all this fixed function graphics magic to make up for the relatively small amount of die area that's dedicated to shaders. What they're forgetting is that this isn't just a GPU, but it's an entire SoC sans CPU. So for instance it needs various peripheral interfaces (SD, USB, NAND) and may include fixed video decoders as well.

If we're going to be talking about this potential I feel it's worth asking - what added fixed function hardware can help a remotely modern GPU design? I figure anything in the critical path for shaders is going to be a problem to go out of the shader array for, or will need additional hardware/software solutions to decouple it like TMUs are.

He's challenging the notion but doesn't say anything in depth or concrete whatsoever, while still acknowledging it as a possibility, albeit one he doesn't support. He also admits later that he doesn't fully understand what's going on here either. So please, almighty tech god from the stars, explain to him and the rest of us, in excruciating technical detail, what's going on in this chip. Please. Sir. I beseech you.

why does that dude think no one understands that stuff, it's all in the OP

edit:

If we're going to be talking about this potential I feel it's worth asking - what added fixed function hardware can help a remotely modern GPU design? I figure anything in the critical path for shaders is going to be a problem to go out of the shader array for, or will need additional hardware/software solutions to decouple it like TMUs are.

it might have more to do with what nintendo developers are currently comfortable with rather than what is feasible in the modern age

Well seeing that the Wii U GPU even after this reveal is still much of a mystery (until Fourth Storm returns with more inside info)

At least we know the Wii U can already do this in real time:

wonderful 101 looks so amazing

I see it as well.

Considering what we are seeing in relation to the supposed "weak cpu", I think we should also factor in whether we think Takeda is a liar or not when he said that "There are people saying that the CPU is weak but that is not true."

It's weak relative to what's available right now. It's not weak compared to Wii.

VIDEOGAMESYAY

Banned

yeah bayonetta has a chance to be the FIRST game to show what the finalized dev kit is capable of. i mean if thats real time on Wii U thats REALLY good for a so called gimped bottleneck system... according to beyond3d

all I see is 95% blackness with some wet highlights. IMHO totally unimpressive. show me what a game will look like at some time other than midnight with a clear view of that monster.

reminds me of a purely FMV game being used to showcase a systems graphical prowess. yawn.

Well seeing that the Wii U GPU even after this reveal is still much of a mystery (until Fourth Storm returns with more inside info)

At least we know the Wii U can already do this in real time:

Please don't start posting gifs in a thread about the gpu die photo.

Please don't start posting gifs in a thread about the gpu die photo.

could u edit your quote so there aren't gifs on the new page.

edit: thx

could u edit your quote so there aren't gifs on the new page.

Sorry, I was doing just that the moment I realized it.

I'll post this here as it seems to have been forgotten due to being at the bottom of the page.

It's a higher speed for its size, not overall. But it should be extremely low latency even relative to the 32 MB MEM1.

Then why does the diagram from Chipworks refer to the larger pool as "slower"? Same reason (for its size, not overall)? I don't mean to sound antagonistic; I'm genuinely curious.

Fourth Storm

Member

I'll post this here as it seems to have been forgotten due to being at the bottom of the page.

Apologies. IMO he meant the nature of the macro is slower (lower bandwidth) but you've got enough of it that the bandwidth adds up to more than that of the smaller pool. It's still great to have that extra smaller pool, and the latency should be pretty fantastic.
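To make that concrete, here is a small Python sketch of how a pool built from "slower" macros can still come out ahead on total bandwidth. Every number below is a made-up placeholder chosen only to show the arithmetic; none of it is a measured or confirmed figure for Latte, and the `Pool` class is purely illustrative:

```python
from dataclasses import dataclass


@dataclass
class Pool:
    name: str
    macros: int            # number of eDRAM macros in the pool (placeholder)
    gbps_per_macro: float  # bandwidth of a single macro in GB/s (placeholder)

    def total_gbps(self):
        """Aggregate bandwidth if all macros can be accessed in parallel."""
        return self.macros * self.gbps_per_macro


# Hypothetical values: the large pool uses slower macros, but more of them.
large = Pool("large pool", macros=8, gbps_per_macro=4.0)
small = Pool("small pool", macros=2, gbps_per_macro=8.0)

for pool in (large, small):
    print(f"{pool.name}: {pool.macros} x {pool.gbps_per_macro} GB/s "
          f"= {pool.total_gbps()} GB/s")
```

The sketch assumes all macros in a pool are accessed in parallel, and it says nothing about latency, which is where the smaller pool is expected to shine.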

Apologies. IMO he meant the nature of the macro is slower (lower bandwidth) but you've got enough of it that the bandwidth adds up to more than that of the smaller pool. It's still great to have that extra smaller pool, and the latency should be pretty fantastic.

It missing from the supposed Wii U spec sheet might mean it's there only for BC, though.

Apologies. IMO he meant the nature of the macro is slower (lower bandwidth) but you've got enough of it that the bandwidth adds up to more than that of the smaller pool. It's still great to have that extra smaller pool, and the latency should be pretty fantastic.

Thanks a bunch. How do we go about finding out the number of shaders per cluster? And the fixed-function pipelines are separate from these on the die, yes? The rest of the people here seem to have a hook on 40 shaders per block, which seems reasonable.

And sorry to bother you, it is late as hell over here, too :/ (2:31 a.m.).
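One way to sanity-check guesses like 40 shaders per block is to turn them into a theoretical peak figure. A rough Python sketch, assuming each shader ALU does one multiply-add (2 flops) per clock. The block count and clock speed below are assumptions plugged in for illustration, not something established in this part of the thread, and `peak_gflops` is just an illustrative helper:

```python
def peak_gflops(shaders_per_block, blocks, clock_mhz, flops_per_shader_per_clock=2):
    """Theoretical peak in GFLOPS: shader count x flops per clock x clock rate."""
    total_shaders = shaders_per_block * blocks
    return total_shaders * flops_per_shader_per_clock * clock_mhz / 1000.0


ASSUMED_BLOCKS = 8       # assumption: number of shader blocks on the die
ASSUMED_CLOCK_MHZ = 550  # assumption: GPU clock, not confirmed in this thread

# Compare the 40-per-block guess against a more conservative 20-per-block guess.
for per_block in (20, 40):
    gflops = peak_gflops(per_block, ASSUMED_BLOCKS, ASSUMED_CLOCK_MHZ)
    print(f"{per_block} shaders/block -> {gflops} GFLOPS theoretical peak")
```

This is only the paper number; it says nothing about what fixed-function hardware or memory bandwidth does to real-world performance.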

It missing from the supposed Wii U spec sheet might mean it's there only for BC, though.

That's the thing. Why would it be locked from Wii U development (if it's even locked, in the first place)?
