ScepticMatt

Member

Road to VR live blogs:

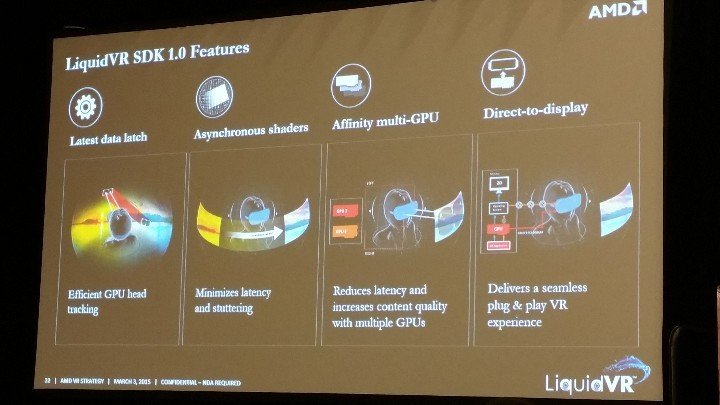

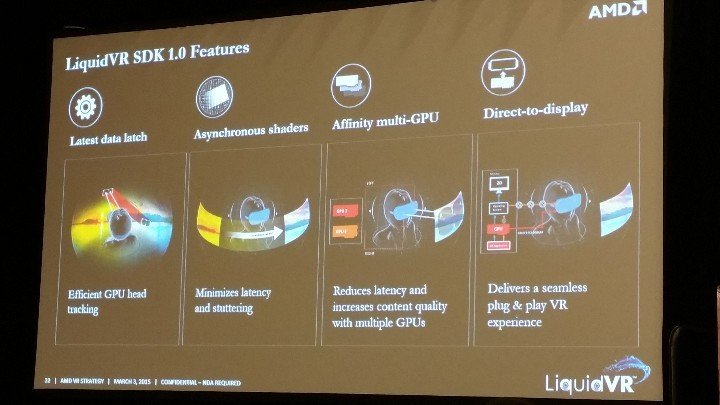

AMD

http://www.roadtovr.com/amd-on-low-...vr-and-graphics-applications-live-blog-330pm/

LiquidVR SDK

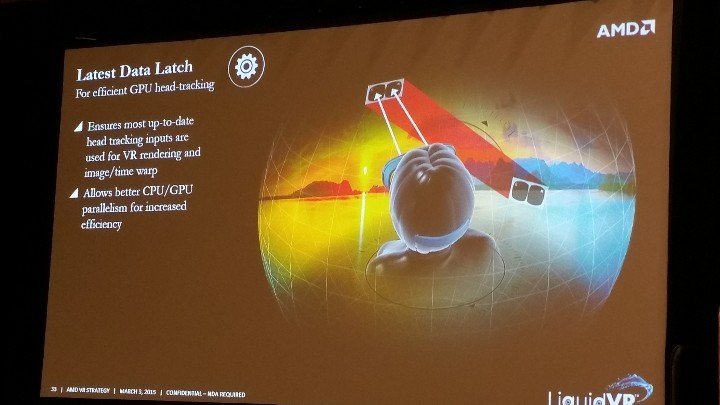

-Latest Data Latch (latency reduction, better parallelism and performance)

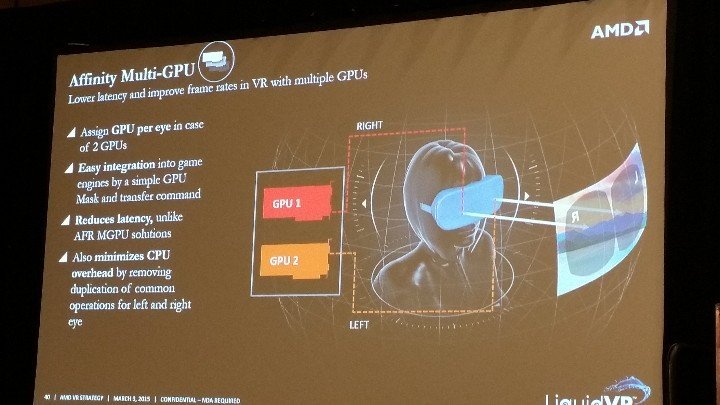

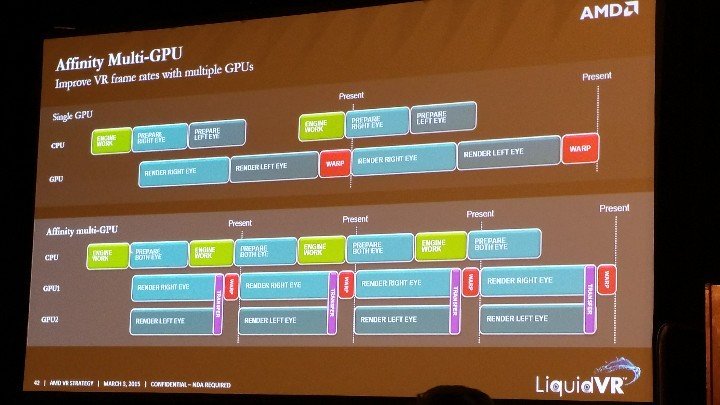

-Affinity Multi-GPU (latency reduction, better CPU performance)

-Direct to Display

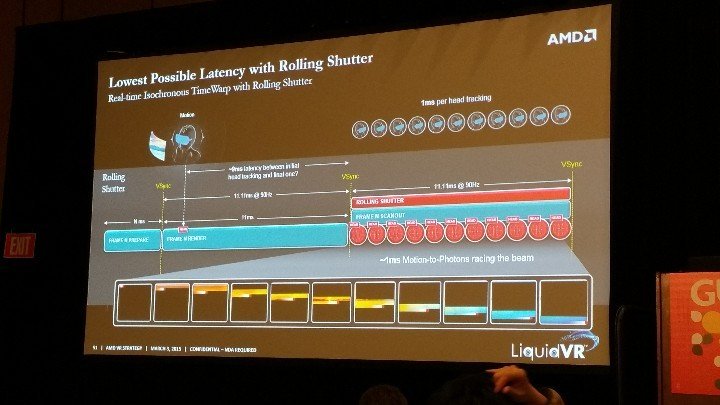

-"Racing the beam" during rolling shutter for 1ms motion-to-photon latency

-Working with Oculus/Valve

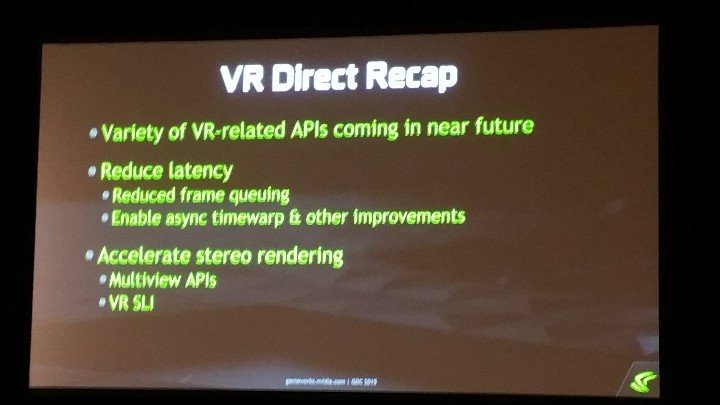

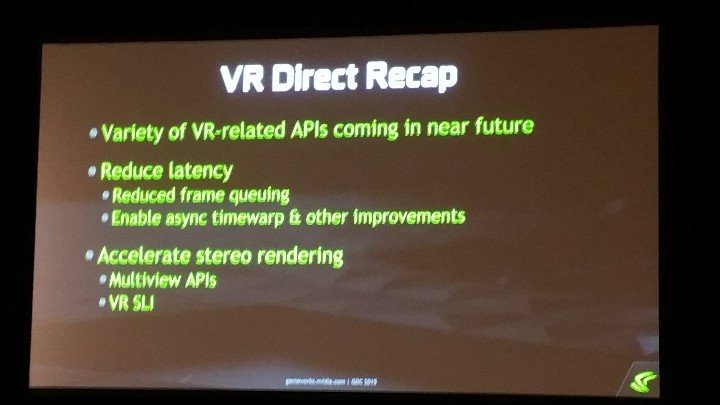

NVIDIA

http://www.roadtovr.com/vr-direct-h...mproving-the-vr-experience-live-blog-2pm-pst/

VR Direct

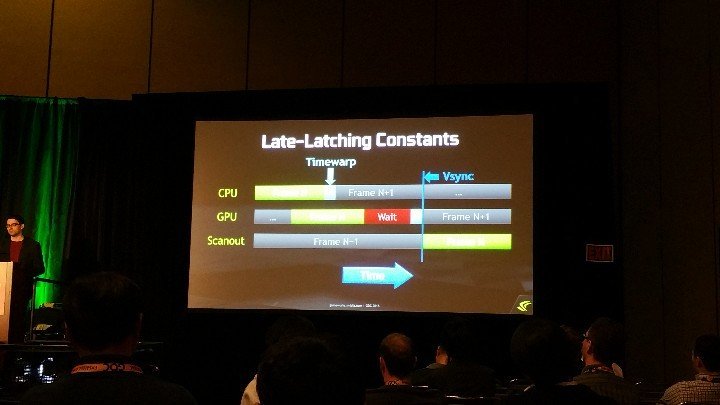

-Late Latching constants

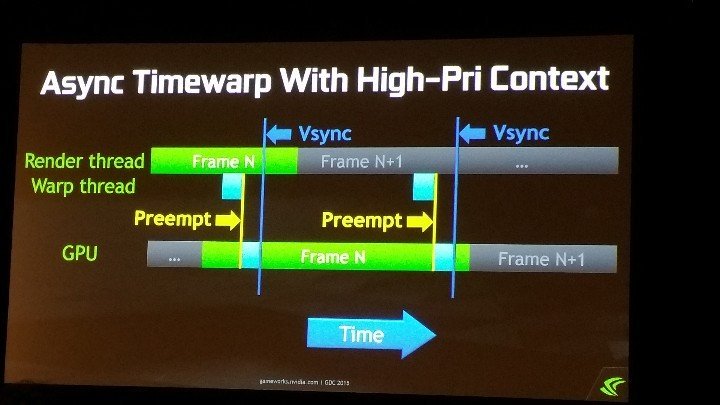

-Driver-level asynchronous time warp, priority over "normal" rendering

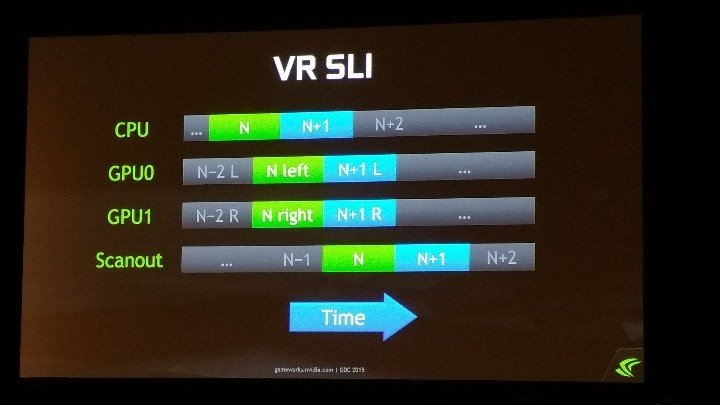

-VR SLI (lower latency)

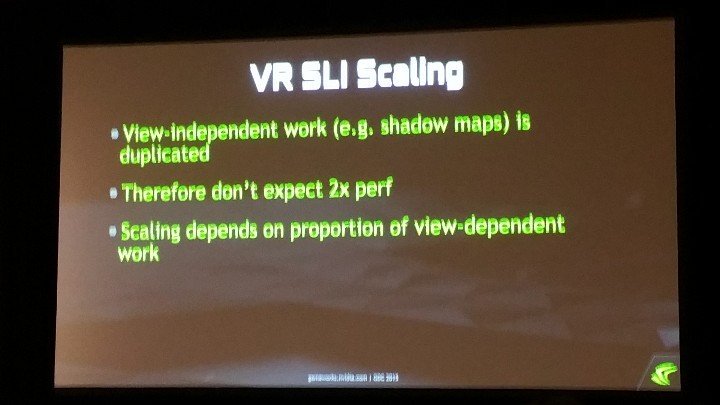

-"can't expect to see perfect 2x scaling here because it depends on the overlap of rendering between frames. If you see 40-50% increase in performance with SLI, you're doing well"

-lower latency GPU distortion shader, future compatibility with Oculus SDK

-"hot out of the oven", "will be in flux for awhile"
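To put the quoted SLI numbers in perspective, here is a rough back-of-the-envelope sketch. It assumes "increase in performance" means an increase in framerate; the 15 ms single-GPU frame time is a made-up example value, not something from the talk.

```python
def frame_time_ms(single_gpu_ms: float, fps_gain: float) -> float:
    """Frame time after a fractional framerate gain (0.5 means +50% fps)."""
    return single_gpu_ms / (1.0 + fps_gain)


if __name__ == "__main__":
    single_gpu_ms = 15.0  # hypothetical single-GPU frame time, not from the talk
    # 0.4 and 0.5 are the quoted "doing well" range; 1.0 is perfect 2x scaling.
    for gain in (0.4, 0.5, 1.0):
        ms = frame_time_ms(single_gpu_ms, gain)
        print(f"+{gain:.0%} fps: {ms:.2f} ms per frame")
```

So under these assumptions a +50% gain takes a 15 ms frame to 10 ms, where perfect scaling would give 7.5 ms.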

Valve

http://www.roadtovr.com/valve-talks-advanced-vr-rendering-live-blog-5pm-pst/

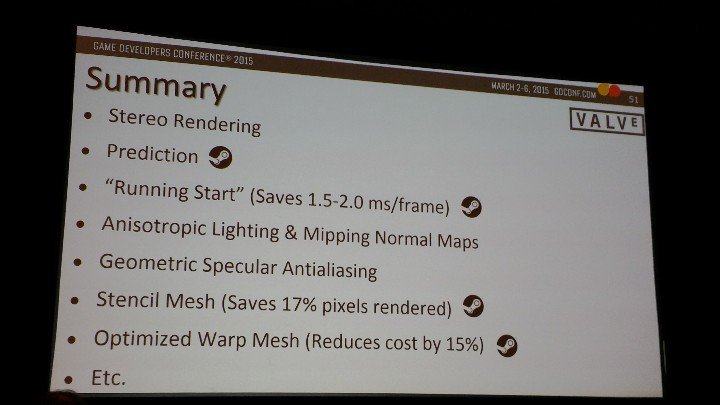

Advanced VR rendering

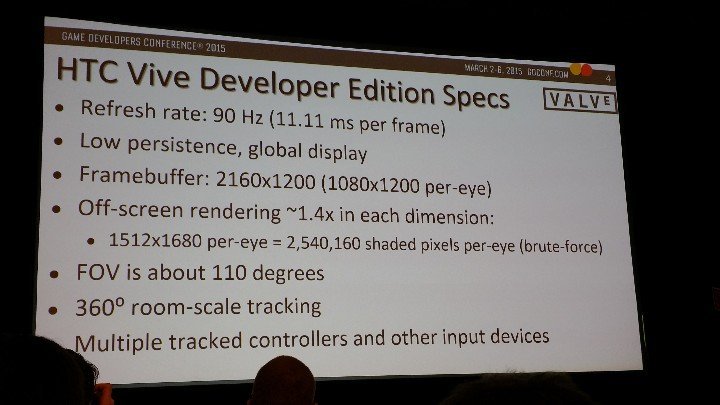

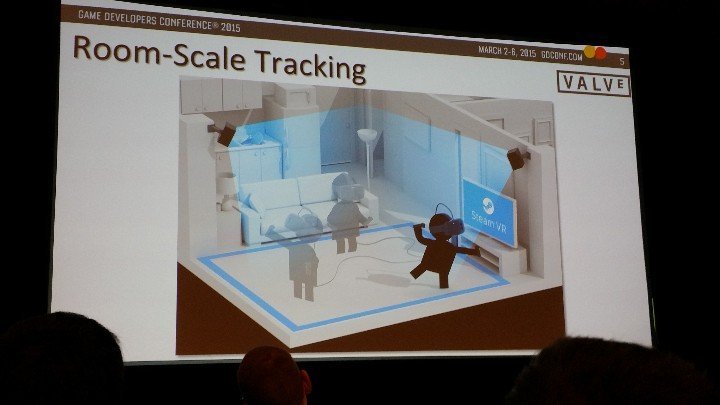

-HTC Vive specs (90Hz, 110° FoV, 1080x1200 per eye, room-scale tracking)

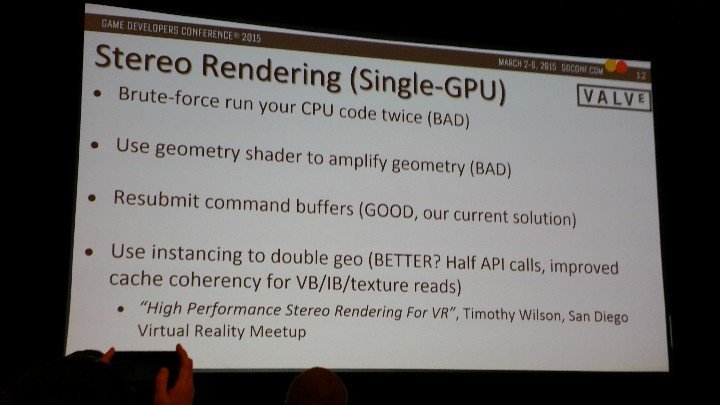
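For a sense of scale, the Vive specs above translate into a frame budget and a raw panel pixel rate. This is just arithmetic on the quoted numbers; it counts panel pixels only and assumes nothing about the actual render target size.

```python
REFRESH_HZ = 90
WIDTH, HEIGHT = 1080, 1200  # per eye, as quoted above
EYES = 2


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame at the given refresh rate."""
    return 1000.0 / refresh_hz


def pixels_per_second(width: int, height: int, eyes: int, refresh_hz: int) -> int:
    """Panel pixels that must be delivered every second."""
    return width * height * eyes * refresh_hz


if __name__ == "__main__":
    print(f"frame budget: {frame_budget_ms(REFRESH_HZ):.2f} ms")
    print(f"pixels per frame: {WIDTH * HEIGHT * EYES:,}")
    print(f"pixels per second: {pixels_per_second(WIDTH, HEIGHT, EYES, REFRESH_HZ):,}")
```

That is roughly 11.1 ms per frame and about 233 million panel pixels per second.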

-Instancing to double the geometry so both eyes are drawn together (half the API calls, improved cache coherency)

-DX11 Multi-GPU extensions: nearly doubled framerate on AMD, have yet to test NVIDIA implementation but will soon.

-Low persistence global display, panel only lit for 2ms after raster scan finished

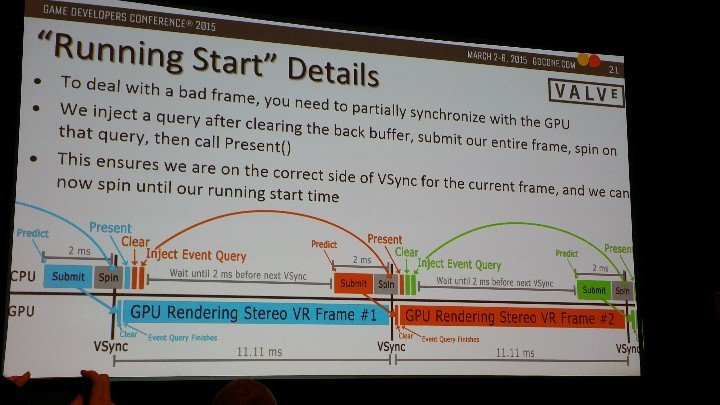
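A quick sketch of what "lit for 2ms" means as a duty cycle, assuming the 90Hz refresh rate from the Vive specs (the function name is just for illustration):

```python
def lit_fraction(lit_ms: float, refresh_hz: float) -> float:
    """Fraction of each refresh interval during which the panel is lit."""
    frame_ms = 1000.0 / refresh_hz
    return lit_ms / frame_ms


if __name__ == "__main__":
    # 2 ms lit per frame at 90 Hz
    print(f"lit for {lit_fraction(2.0, 90.0):.0%} of each frame")
```

At 90Hz that works out to the panel being lit for 18% of each frame and dark for the rest.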

-"running start" for lower latency and better parallelism

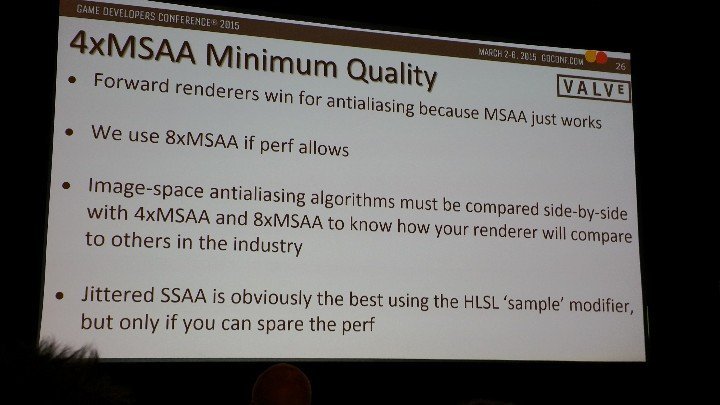

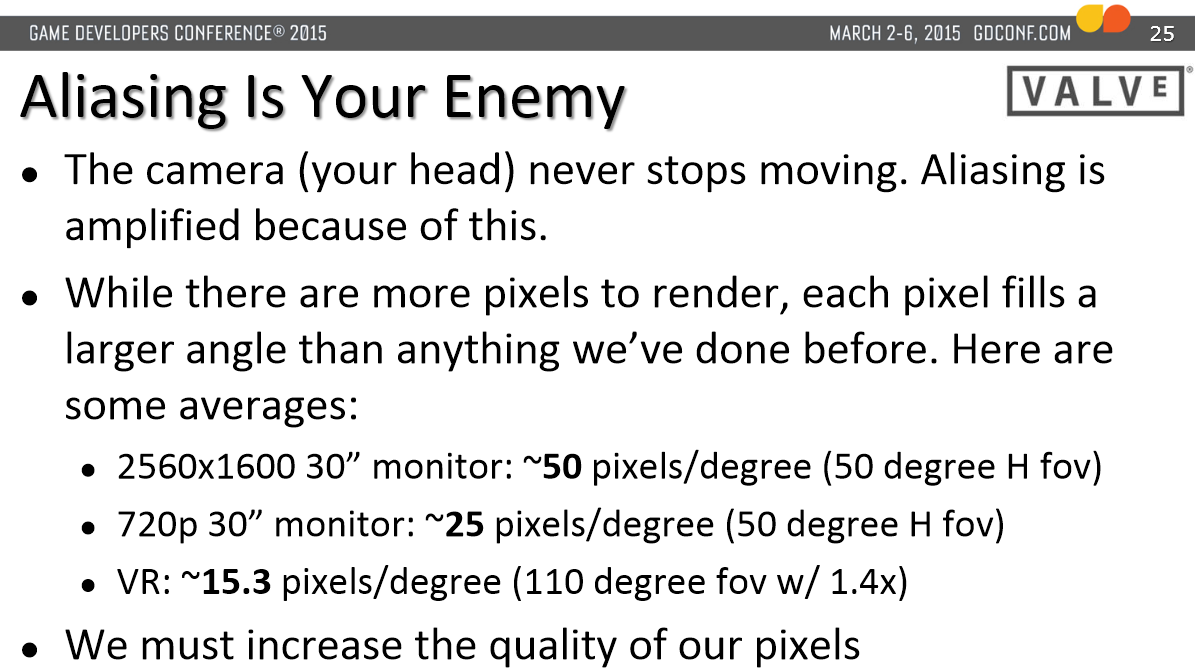

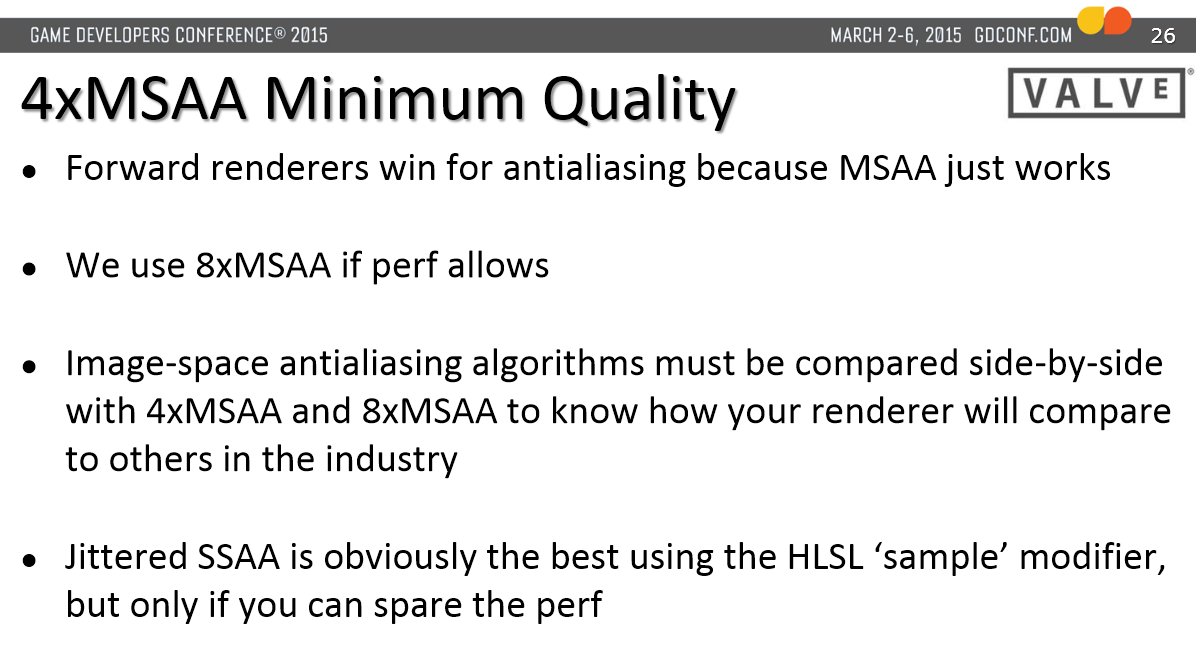

-"Aliasing is your enemy". 4xMSAA minimum, Valve use 8xMSAA. Jittered SSAA is the best

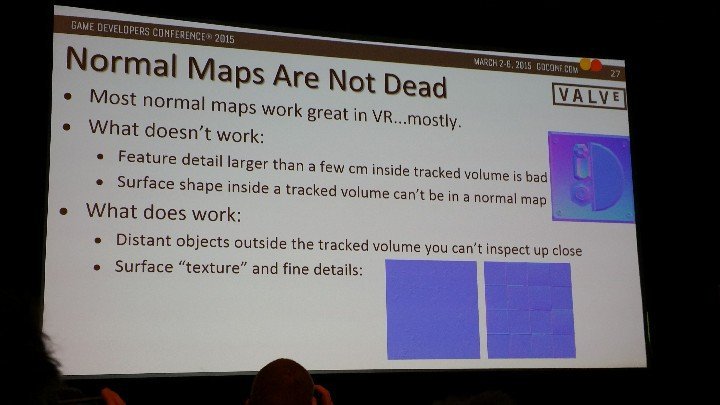

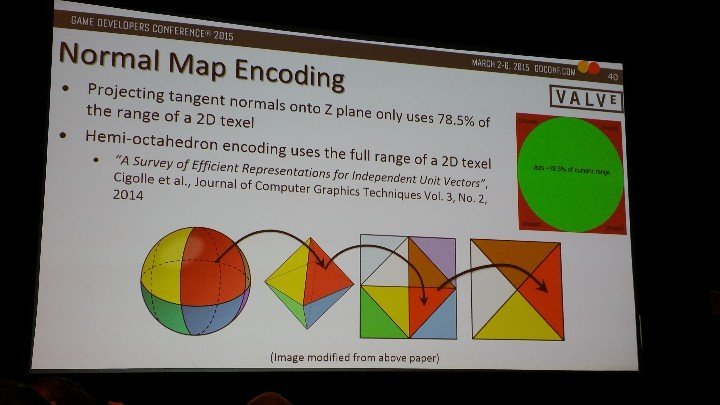
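The live blog doesn't spell out what "jittered SSAA" means in Valve's case. One common reading is a jittered-grid sample pattern: split each pixel into an n x n grid and place one sample at a random position inside each cell. A minimal sketch under that assumption (`jittered_offsets` is a hypothetical helper, not Valve's code):

```python
import random


def jittered_offsets(n: int, rng: random.Random) -> list[tuple[float, float]]:
    """Return n*n sub-pixel sample offsets in [0, 1], one per grid cell.

    Each sample is placed at a random position inside its own cell, which
    keeps the samples spread out while breaking up the regular grid.
    """
    cell = 1.0 / n
    return [
        ((i + rng.random()) * cell, (j + rng.random()) * cell)
        for j in range(n)
        for i in range(n)
    ]


if __name__ == "__main__":
    for x, y in jittered_offsets(2, random.Random(0)):
        print(f"({x:.3f}, {y:.3f})")
```

A renderer would shade the scene once per offset and average the results; how the offsets vary per pixel or per frame is a separate design choice.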

-New method for filtering normal maps.

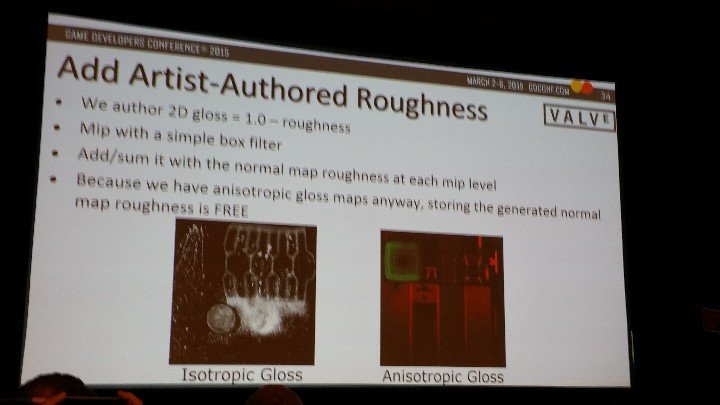

-Roughness mip maps

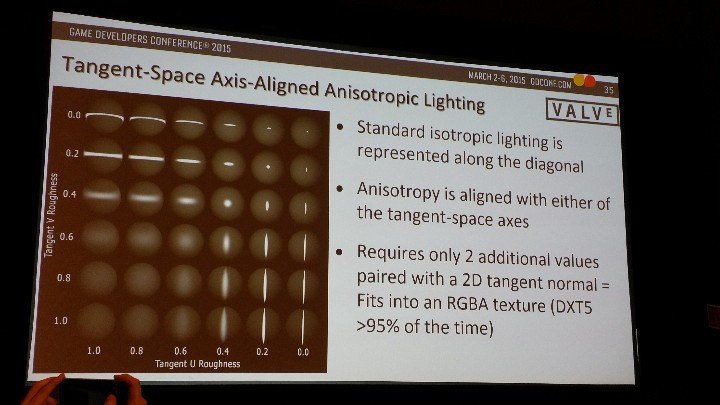

-Tangent-space axis-aligned anisotropic lighting, "It's so critical that you have good specular in VR", "We think AA specular in VR looks so good that we want to make sure everyone knows."

-Geometric specular anti-aliasing "hacky math"

-More efficient mip map encoding using a hemi-octahedron

-"Noise is your friend." Gradients are awful in VR and they pop, banding is horrible. Every pixel should have noise. Absolutely do this.

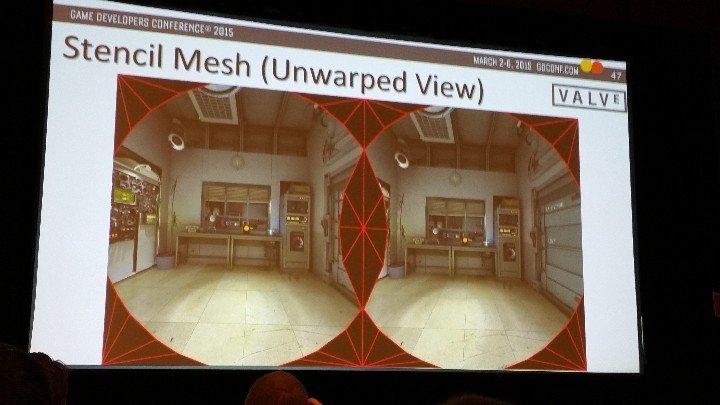
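To illustrate why per-pixel noise helps with banding, here is a minimal sketch of dithering before 8-bit quantization. The talk's exact noise function isn't given in the live blog, so this assumes plain uniform noise of one quantization step; the function names are made up for the example.

```python
import math
import random


def quantize(value: float, levels: int = 256) -> int:
    """Round a [0, 1] value to the nearest of `levels` output codes."""
    code = math.floor(value * (levels - 1) + 0.5)
    return min(levels - 1, max(0, code))


def quantize_dithered(value: float, rng: random.Random, levels: int = 256) -> int:
    """Same as quantize, but add uniform noise in [-0.5, 0.5) of a step first."""
    noise = rng.random() - 0.5
    code = math.floor(value * (levels - 1) + 0.5 + noise)
    return min(levels - 1, max(0, code))


if __name__ == "__main__":
    rng = random.Random(0)
    value = 0.1031  # sits between two 8-bit codes
    exact = value * 255
    samples = [quantize_dithered(value, rng) for _ in range(20000)]
    print("exact (scaled):", exact)
    print("plain quantize:", quantize(value))
    print("dithered mean: ", sum(samples) / len(samples))
```

Without noise every pixel in a slow gradient snaps to the same code and you get visible bands. With noise, neighbouring pixels flip between the two nearest codes and their average tracks the true value.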

-Stencil out areas that you can't see through the lens. 15% performance improvement

-Warp mesh, only warp rendered area (15% performance cost reduction)
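Of the items above, the hemi-octahedron encoding is compact enough to sketch. This assumes the bullet refers to storing unit normals with z >= 0 (e.g. tangent-space normals) in two channels, and uses the standard hemi-octahedral mapping rather than Valve's exact shader code, which isn't in the live blog.

```python
import math


def hemi_oct_encode(n: tuple[float, float, float]) -> tuple[float, float]:
    """Map a unit vector with z >= 0 to two values in [-1, 1]."""
    x, y, z = n
    s = abs(x) + abs(y) + z  # project onto the plane |x| + |y| + z = 1
    px, py = x / s, y / s
    # Rotate the diamond-shaped footprint by 45 degrees so it fills the square.
    return (px + py, px - py)


def hemi_oct_decode(e: tuple[float, float]) -> tuple[float, float, float]:
    """Inverse of hemi_oct_encode; returns a unit vector with z >= 0."""
    u, v = e
    x = (u + v) * 0.5
    y = (u - v) * 0.5
    z = 1.0 - abs(x) - abs(y)
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)


if __name__ == "__main__":
    raw = (0.3, -0.5, 0.8)
    length = math.sqrt(sum(c * c for c in raw))
    normal = tuple(c / length for c in raw)
    encoded = hemi_oct_encode(normal)
    print("encoded:", encoded)
    print("decoded:", hemi_oct_decode(encoded))
```

The appeal is that the encoded values cover the whole [-1, 1] square, so no texel range is wasted on directions that can never occur.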
