jreinlie4:

System Specs:

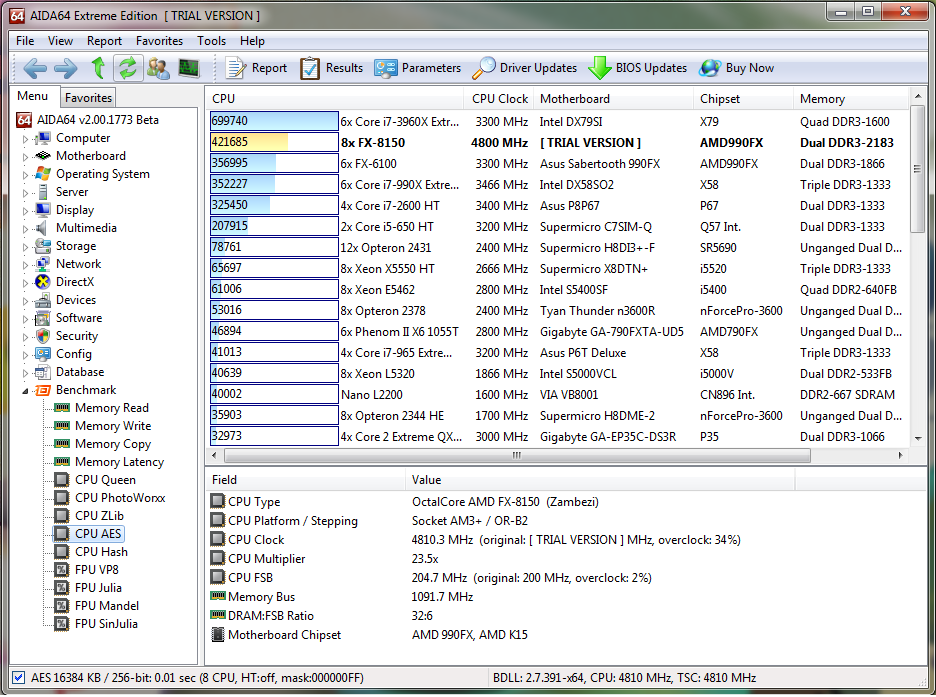

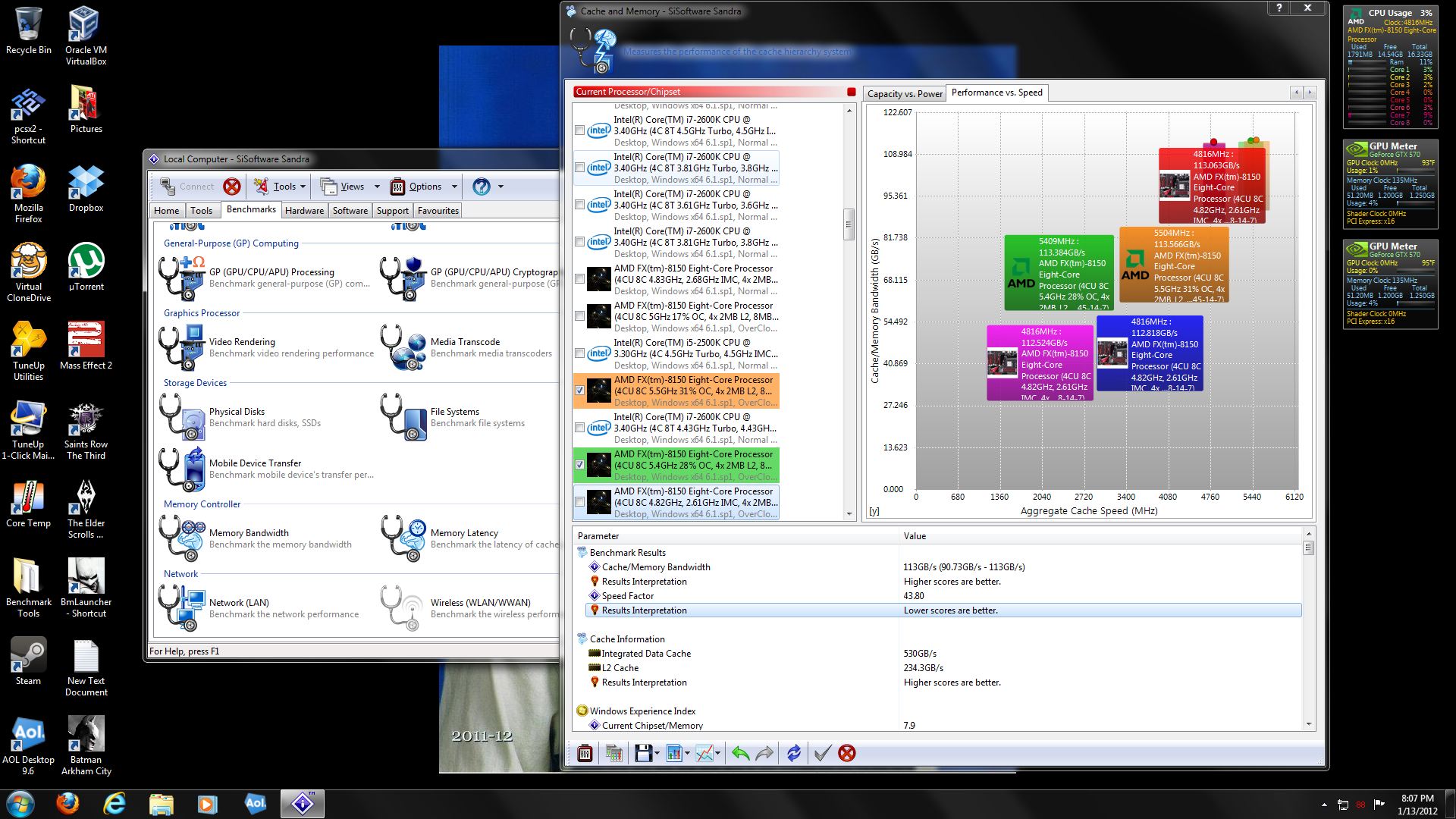

CPU: AMD FX-8150 (OC1: 4.515GHz @ 1.46V) (OC2: 4.620GHz @ 1.5V)

GPU: 2x AMD Radeon HD 6970 CFX @ 950/1500MHz (core/memory)

RAM: 2x 4GB Corsair XMS DDR3 @ 1632MHz

MoB: MSI 890FXA-GD70 BIOS 1.12 (1.1C)

HDD: 2x WD3000HLFS 300GB VelociRaptor

PSU: Corsair AX1200

GPU Driver: Catalyst 11.11b + 12.1 CAP

The benchmark utility I am using is FRAPS.

(BD HF) = run with the Bulldozer hotfix installed; runs without this tag are without the hotfix

FarCry 2 (OC1 @ 4.5GHz) (950/1500)

Benchmark (Ranch Long)

Settings: 1920x1080 @ 120Hz, 8xAA, DX10

Min: 71.96

Max: 231.09

Avg: 115.57

FarCry 2 (OC1 @ 4.5GHz) (950/1500)

(BD HF)

Benchmark (Ranch Long)

Settings: 1920x1080 @ 120Hz, 8xAA, DX10

Min: 74.36

Max: 258.81

Avg: 125.92

FarCry 2 (OC2 @ 4.6GHz) (950/1500)

Benchmark (Ranch Long)

Settings: 1920x1080 @ 120Hz, 8xAA, DX10

Min: 73.61

Max: 247.40

Avg: 122.74

FarCry 2 (OC2 @ 4.6GHz) (950/1500)

(BD HF)

Benchmark (Ranch Long)

Settings: 1920x1080 @ 120Hz, 8xAA, DX10

Min: 74.47

Max: 267.92

Avg: 128.73

Batman Arkham Asylum (OC1 @ 4.5GHz) (950/1500)

Benchmark

Settings: 1920x1080 @ 120Hz, 8xAA, PhysX Off

Min: 78

Max: 338

Avg: 209

Batman Arkham Asylum (OC1 @ 4.5GHz) (950/1500)

(BD HF)

Benchmark

Settings: 1920x1080 @ 120Hz, 8xAA, PhysX Off

Min: 76

Max: 344

Avg: 210.5

Batman Arkham Asylum (OC2 @ 4.6GHz) (950/1500)

Benchmark

Settings: 1920x1080 @ 120Hz, 8xAA, PhysX Off

Min: 80

Max: 339

Avg: 211

Batman Arkham Asylum (OC2 @ 4.6GHz) (950/1500)

(BD HF)

Benchmark

Settings: 1920x1080 @ 120Hz, 8xAA, PhysX Off

Min: 81

Max: 348

Avg: 214

ArmA 2 (OC2 @ 4.6GHz) (950/1500)

Benchmark A

Settings: 1920x1080 @ 120Hz, AA Normal, View Distance 1500m, All Details Very High

Benchmark 01

Avg: 51

Benchmark 02

Avg: 22

ArmA 2 (OC2 @ 4.6GHz) (950/1500)

(BD HF)

Benchmark A

Settings: 1920x1080 @ 120Hz, AA Normal, View Distance 1500m, All Details Very High

Benchmark 01

Avg: 56

Benchmark 02

Avg: 23
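
For anyone who wants the hotfix difference as a percentage rather than eyeballing the raw numbers, here is a quick Python sketch. The average FPS values are copied from the runs above; the `results` dictionary, its labels, and the `percent_gain` helper are just names made up for this illustration, not anything FRAPS produces.

```python
# Average FPS copied from the runs above: (without hotfix, with BD HF).
# The labels and the percent_gain helper are illustrative names only.
results = {
    "FarCry 2 OC1": (115.57, 125.92),
    "FarCry 2 OC2": (122.74, 128.73),
    "Batman AA OC1": (209.0, 210.5),
    "Batman AA OC2": (211.0, 214.0),
    "ArmA 2 OC2 Benchmark 01": (51.0, 56.0),
    "ArmA 2 OC2 Benchmark 02": (22.0, 23.0),
}


def percent_gain(before, after):
    """Relative change from 'before' to 'after', in percent."""
    return (after - before) / before * 100.0


# Percentage change in average FPS for each game/overclock pair.
gains = {name: percent_gain(b, a) for name, (b, a) in results.items()}

for name, gain in gains.items():
    print(f"{name}: {gain:+.1f}%")
```

On these numbers the average FPS change with the hotfix works out to roughly 9% and 5% in FarCry 2, about 1% in Batman, and about 10% and 5% in ArmA 2. Each figure comes from a single run per configuration, so the smaller differences may well be within run-to-run variation.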