EDIT: Looks like the demo has been pulled.

There's a playable path-traced game that uses the Brigade 2 engine.

Direct download: http://igad.nhtv.nl/~bikker/files/AboutTime R1.zip

Project site: http://igad.nhtv.nl/~bikker/

To be honest, it's not that "photorealistic" on my machine: there's heavy noise because I only have one GPU (a GTX 570) and a 6-year-old CPU. Still, the feeling I get out of this is that we're now closer than ever to playing ray-traced games. It's About Time, indeed.

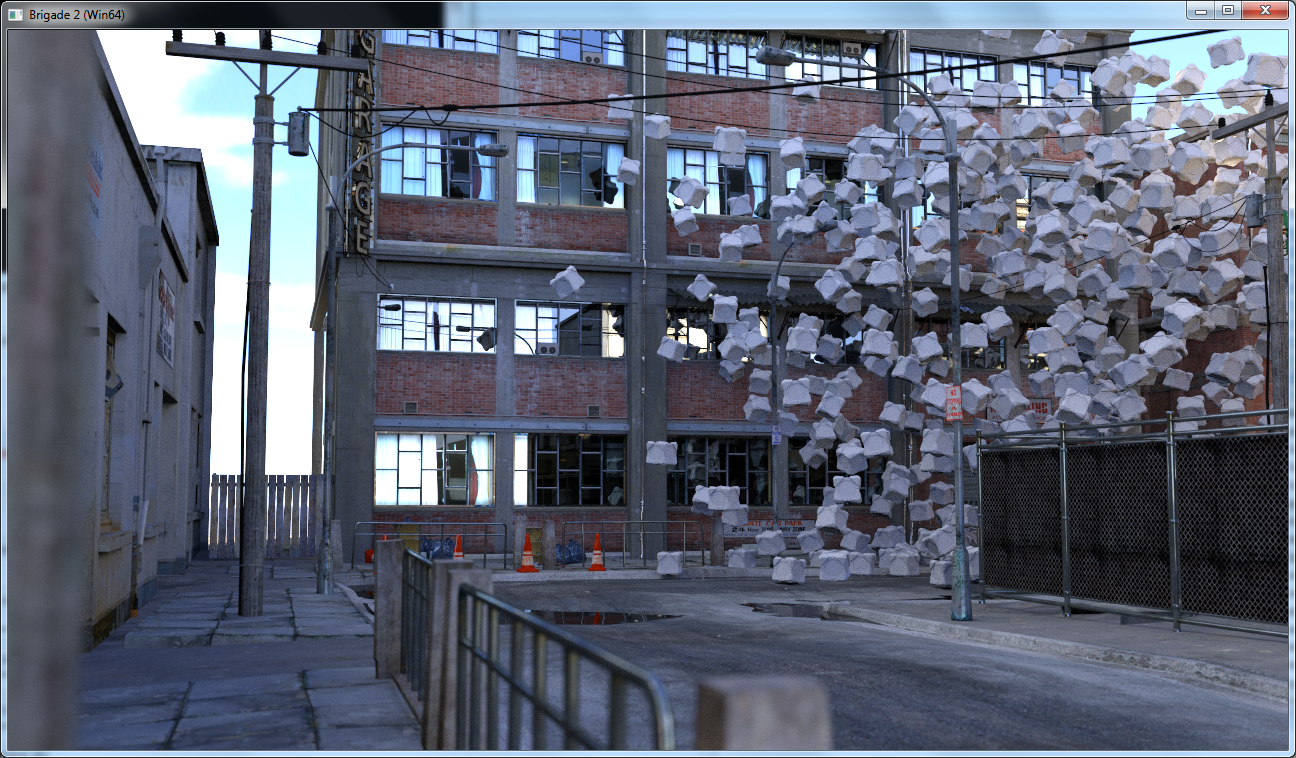

This is how it's supposed to look if you've got enough GPU power:

Siggraph 2012 video: http://www.youtube.com/watch?v=n0vHdMmp2_c (using cloud computing)

Screens: http://raytracey.blogspot.co.nz/2012/08/real-time-path-traced-brigade-demo-at.html

There's also a downloadable version of the Brigade 2 engine, if you want to build your own stuff with it.

http://igad.nhtv.nl/~bikker/downloads.htm

The game is playable on NVIDIA hardware only, and will use all your GPUs if you have more than one installed. A single high-end GPU should run the game quite well; more GPUs will reduce the noise.
