ScepticMatt

Member

Why we need it:

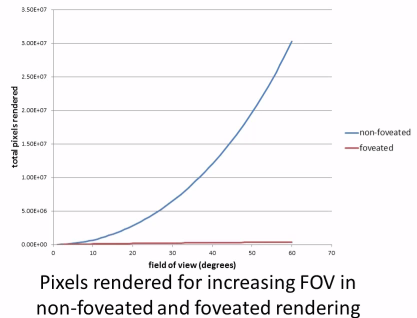

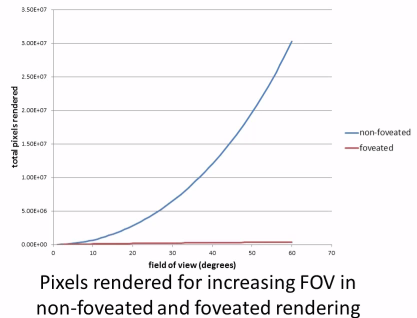

* Foveated Rendering

To achieve presence, high resolution and a wide field of view are necessary.

But the resolution of our eyes is only high for a tiny portion of the view (2°).

Foveated rendering dramatically reduces the rendering cost, making 16k VR easily feasible.

(e.g. a 100x reduction in rendering cost at a 70° FOV)

Image: Microsoft Research
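To get a feel for where savings of this order come from, here is a rough sketch. It assumes (my assumption, not something stated above) that the minimum angle of resolution grows linearly with eccentricity, that the required sample density scales with 1/MAR², and that the view is a flat disc in angle space. `foveated_cost_reduction`, `mar0_deg` and `slope` are hypothetical names, and the default values are placeholders, so the result will not necessarily match the 100x figure quoted above.

```python
import math

def foveated_cost_reduction(fov_deg, mar0_deg=1 / 48, slope=0.022):
    """Ratio of uniform-resolution samples to foveated samples.

    Assumed model (illustrative only): MAR(e) = mar0_deg + slope * e,
    where e is eccentricity in degrees, and sample density ~ 1 / MAR(e)^2.
    """
    if fov_deg <= 0 or mar0_deg <= 0 or slope <= 0:
        raise ValueError("fov_deg, mar0_deg and slope must be positive")
    radius = fov_deg / 2.0
    a = slope / mar0_deg  # relative growth of MAR per degree of eccentricity
    x = 1.0 + a * radius
    # Closed form of 2*pi * integral_0^R e / (1 + a*e)^2 de:
    # samples needed when density falls off with eccentricity.
    foveated = 2.0 * math.pi * (math.log(x) + 1.0 / x - 1.0) / a ** 2
    # Samples needed when the whole disc is rendered at foveal density.
    uniform = math.pi * radius ** 2
    return uniform / foveated

if __name__ == "__main__":
    for fov in (30, 70, 110):
        print(fov, round(foveated_cost_reduction(fov), 1))
```

The ratio grows with the field of view because the uniform cost scales with the area while the foveated cost grows only logarithmically. The exact factor depends heavily on the falloff parameters you assume.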

* Eye-relative motion blur

While our eyes have a limited framerate, temporal aliasing artifacts are visible even at 10,000 Hz (e.g. strobing, the wagon wheel effect, chronostasis, ...).

Eye-relative motion blur removes temporal aliasing without decreasing real detail, making 120Hz indistinguishable from the real world.

Motion blur vs wagon wheel effect: https://www.youtube.com/watch?v=iXg_7Ckv_io
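As a minimal sketch of what "eye-relative" means here: the blur streak for an object can be taken from its angular velocity relative to the gaze rather than relative to the screen. The function name and the simple linear model (relative velocity times exposure time) are my own assumptions for illustration, not a description of any particular renderer.

```python
def eye_relative_blur(object_velocity, eye_velocity, exposure_s):
    """Blur streak in degrees for one frame.

    object_velocity, eye_velocity: (x, y) angular velocities in deg/s.
    exposure_s: time the frame is integrated over, in seconds.
    Assumed model: blur = (object velocity - eye velocity) * exposure.
    """
    return tuple((o - e) * exposure_s
                 for o, e in zip(object_velocity, eye_velocity))

if __name__ == "__main__":
    frame = 1 / 120
    # An object the eye is tracking stays sharp.
    print(eye_relative_blur((30.0, 0.0), (30.0, 0.0), frame))
    # A static background smears while the eye pursues at 30 deg/s.
    print(eye_relative_blur((0.0, 0.0), (30.0, 0.0), frame))
```

An object followed by smooth pursuit gets zero blur, while everything moving relative to the gaze gets smeared, which is why detail on the tracked object is not lost.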

* Depth of field modulation (with virtual retinal display)

Disparity between scene depth and focus depth causes discomfort and can reduce presence (for very close objects).

Eye tracking with virtual retinal displays removes this disparity.

Virtual retinal displays also eliminate the need for glasses and remove the screen-door effect.

(light field rendering isn't necessary)

* New interactivity

The game can now react to your gaze, opening up new possibilities.

Examples: NPC reactions (smiling, annoyance, etc.), new types of user interface and control.

Infamous Second Son demo: https://www.youtube.com/watch?v=kKYr9MaZw3I&hd=1

What we need:

The eye has two principal modes of motion: smooth pursuit at up to 30°/sec and saccades at up to 800°/sec.

The goal is to have a blurry screen during big saccades (saccadic masking) and a sharp screen during smooth pursuit.

With foveated rendering, any mismatch between the real gaze point and the rendered gaze point provides enough blur, so the tracker only needs to be fast enough for smooth pursuit.
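One simple way to tell the two modes apart is a velocity threshold on consecutive gaze samples. The sketch below is only an illustration: `classify_gaze` is a hypothetical helper, and the 100°/sec default threshold is an arbitrary value I picked between the 30°/sec and 800°/sec figures above.

```python
import math

def classify_gaze(samples_deg, sample_rate_hz, saccade_threshold_deg_s=100.0):
    """Label each step between consecutive gaze samples.

    samples_deg: list of (x, y) gaze angles in degrees.
    Returns one label per pair of neighbouring samples.
    """
    labels = []
    for (x0, y0), (x1, y1) in zip(samples_deg, samples_deg[1:]):
        # Angular speed between two samples, in deg/s.
        speed = math.hypot(x1 - x0, y1 - y0) * sample_rate_hz
        if speed > saccade_threshold_deg_s:
            labels.append("saccade")
        else:
            labels.append("pursuit_or_fixation")
    return labels

if __name__ == "__main__":
    gaze = [(0.0, 0.0), (0.05, 0.0), (1.05, 0.0)]
    print(classify_gaze(gaze, sample_rate_hz=500))
```

A real tracker would need noise filtering on top of this, but it shows why a high sampling rate helps: the faster the samples arrive, the sooner a saccade can be noticed.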

Latency and inaccuracy decrease the effectiveness for input, but everything else still works reasonably well (e.g. the 2° region increases by 4° with each frame of latency @ 120 Hz).

The SensoMotoric eye tracking system used in the Infamous Second Son demo is more than sufficient (it even enables saccade tracking), with:

0.03° resolution

0.4° accuracy

500 Hz sampling rate

4 ms latency (about 1/2 frame @ 120 Hz)

Link: http://www.smivision.com/en/gaze-and-eye-tracking-systems/products/red-red250-red-500.html
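The numbers above can be put into per-frame terms with some quick arithmetic. This is just a sketch: `tracker_budget` is a made-up helper, and the gaze drift assumes the eye moves at a constant speed for the whole tracker latency.

```python
def tracker_budget(sample_rate_hz, latency_ms, display_hz, eye_speed_deg_s):
    """Express tracker specs relative to the display frame time."""
    frame_ms = 1000.0 / display_hz
    return {
        # How many gaze samples arrive per displayed frame.
        "samples_per_frame": sample_rate_hz / display_hz,
        # Tracker latency as a fraction of one frame.
        "latency_frames": latency_ms / frame_ms,
        # How far the gaze moves while the sample is in flight.
        "gaze_drift_deg": eye_speed_deg_s * latency_ms / 1000.0,
    }

if __name__ == "__main__":
    print(tracker_budget(500, 4, 120, eye_speed_deg_s=30))   # smooth pursuit
    print(tracker_budget(500, 4, 120, eye_speed_deg_s=800))  # fast saccade
```

With the quoted specs that is roughly 4 samples per frame and 0.48 frames of latency. During smooth pursuit at 30°/sec the gaze drifts about 0.12° in 4 ms, which is below the 0.4° accuracy, while a saccade at 800°/sec would drift about 3.2°.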

Now to fit it inside a head-mounted display...

Update 1: A working solution
