Thank you, I was waiting for someone to bring this up.

This is a common misconception (at least for the PS4). Some more background:

Most people assume the PS4 GPU was a scaled-down desktop Radeon HD 7870, which released in March 2012 and was not AMD's fastest card (the 7970 was). In fact, the PS4 GPU was based on the mobile variant, the Radeon HD 7970M, which launched on PC in April 2012. That card was the fastest mobile GPU AMD produced, and it used the full 7870 desktop silicon. If you look at the specs, you will see that it is very close to the known PS4 GPU: the PS4 version has 18 active compute units instead of 20 and is underclocked further, from 850 MHz to 800 MHz. Even though the PS4 did not release until November 2013, the 7970M was still the fastest mobile GPU AMD manufactured at the time of the PS4 launch! In fact, if you look at the history, AMD did not make a faster mobile GPU until the R9 M295X, which didn't release until November 2014. That chip (Tonga) has the same 2,048 shaders as the desktop 7970, making it roughly a mobile counterpart to that card, but it came too late to be included in the PS4 (and was also far too power hungry for a console).
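To put the spec comparison in numbers, here is a small sketch of the usual peak single-precision throughput formula for AMD's GCN GPUs (shaders × 2 FLOPs per clock, since each shader can issue one fused multiply-add per cycle, × clock). The shader counts and the 1000 MHz desktop 7870 clock are commonly published figures, not numbers from this post, and peak FLOPS is only a rough proxy for real gaming performance.

```python
# Peak FP32 throughput for GCN-era AMD GPUs (rough comparison only).
# Shader counts and clocks are commonly published specs (assumed, not from the post).
GPUS = {
    "Radeon HD 7870 (desktop)": (1280, 1000),  # (shaders, clock in MHz)
    "Radeon HD 7970M (mobile)": (1280, 850),
    "PS4 GPU": (1152, 800),                    # 18 active CUs x 64 shaders
}


def peak_gflops(shaders: int, clock_mhz: int) -> float:
    """Peak GFLOPS = shaders * 2 FLOPs per clock (one FMA) * clock in GHz."""
    return shaders * 2 * clock_mhz / 1000


if __name__ == "__main__":
    for name, (shaders, mhz) in GPUS.items():
        gflops = peak_gflops(shaders, mhz)
        print(f"{name:26s} {shaders:5d} shaders @ {mhz} MHz -> {gflops:7.1f} GFLOPS")

    # How much of the mobile part's peak the PS4 GPU retains.
    ratio = peak_gflops(1152, 800) / peak_gflops(1280, 850)
    print(f"PS4 GPU / 7970M peak ratio: {ratio:.2f}")
```

On these figures the PS4 GPU lands at roughly 85% of the 7970M's peak, which is what "very close, but cut down a bit" looks like in practice.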

So contrary to popular belief, Sony included the best and fastest GPU available at that time, given AMD's roadmap, the PS4 release schedule, and the console's thermal constraints. In fact, if you know about computer hardware and thermals, then you will know why the PS4 HAD to use the mobile version. In short, AMD GPUs at that time were not very power efficient. A console GPU typically needs to be under 100W TDP to be practical, given the thermal and cooling limitations of a console. The desktop 7970 released with a TDP of 250W, which is absolutely not practical for a console. Even the desktop 7870 had a TDP of 175W, which again is impractical given that the total power budget for the whole console is under 150W. However, AMD was able to make a mobile variant of the 7870 with a TDP of only 75W, perfect for a console. Again, this was the fastest feasible GPU right up until the PS4 released in November 2013.
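The power argument above boils down to a simple budget check. Here is a minimal sketch using only the TDP figures quoted in this post (250W, 175W, 75W) and its rough budgets (under 100W for the GPU, under 150W for the whole console); the `fits_*` helper names are purely illustrative.

```python
# TDP figures (watts) as quoted in the post; budgets are the post's rough limits.
GPU_TDP_W = {
    "Radeon HD 7970 (desktop)": 250,
    "Radeon HD 7870 (desktop)": 175,
    "Radeon HD 7970M (mobile)": 75,
}

CONSOLE_TOTAL_W = 150  # whole-console budget ("less than 150W")
GPU_BUDGET_W = 100     # practical GPU share of that budget ("< 100W")


def fits_gpu_budget(tdp_w: int, budget_w: int = GPU_BUDGET_W) -> bool:
    """True if the GPU alone stays under the practical console GPU budget."""
    return tdp_w < budget_w


def fits_whole_console(tdp_w: int, total_w: int = CONSOLE_TOTAL_W) -> bool:
    """True if the GPU alone would not already exceed the entire console budget."""
    return tdp_w < total_w


feasible = [name for name, tdp in GPU_TDP_W.items() if fits_gpu_budget(tdp)]

if __name__ == "__main__":
    for name, tdp in GPU_TDP_W.items():
        print(f"{name:26s} {tdp:3d}W  gpu_budget_ok={fits_gpu_budget(tdp)}  "
              f"under_console_total={fits_whole_console(tdp)}")
    print("Feasible:", feasible)
```

Only the 7970M passes, and both desktop cards would blow past the entire console's power budget on their own.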

People always want to talk about the PS4 being so "underpowered" at launch, but if you know the facts and what it takes to build a console from a hardware standpoint, Sony did the best they could with what AMD had available. The focus for the PS4 (and Xbox One) wasn't on pure power anyway; it was on developer ease and convenience. The real change was the move to the x86 architecture on the CPU, and again, Jaguar was the only thing AMD had available that could "fit" in a console form factor at that time (AMD's desktop parts were far too big and power hungry).

However, AMD is NOT the same company today that it was in 2012/2013. It has a wide range of highly competitive CPUs and will be releasing a wide range of highly competitive GPUs in the coming year, not just in raw performance but, more importantly, in efficiency.

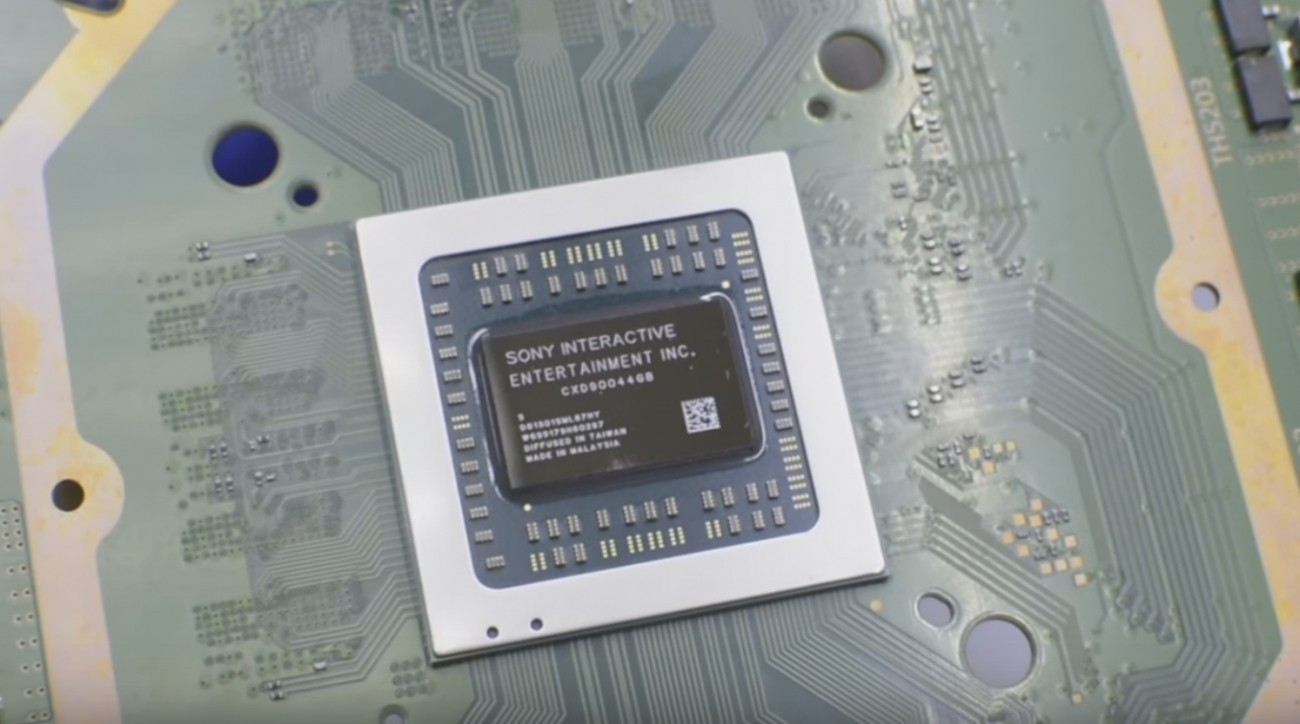

Also, the priorities of the console manufacturers are different this time around. While the PS4 and Xbox One were mostly about removing barriers for developers, both Sony and Microsoft have made it clear that PS5 and Scarlett will be about pushing the boundaries of gaming. Announcing GPUs with 8K, 120fps, and ray-tracing support signals that they will be significantly more powerful than the current RX 5700. Looking back, the PS4 and Xbox One GPUs didn't really offer anything "new" at the time (except for the extra ACE units on PS4). There were no marketing buzzwords to highlight advanced features; in fact, all Sony could say was that it was a "supercharged" PC.

Microsoft initially focused on software and UX features for the Xbox One as part of a shift away from gaming. They paid the price and are hell-bent on making sure Scarlett is technically competitive from day one. The difference in power between the two will be extremely small, probably smaller than in any previous generation.

So again, if you think the next consoles will ship with AMD Navi GPUs equivalent to the current RX 5700, you are surely mistaken, and you will see in due time!