Talking about a "bad memory decision" on XSX is just ridiculous.

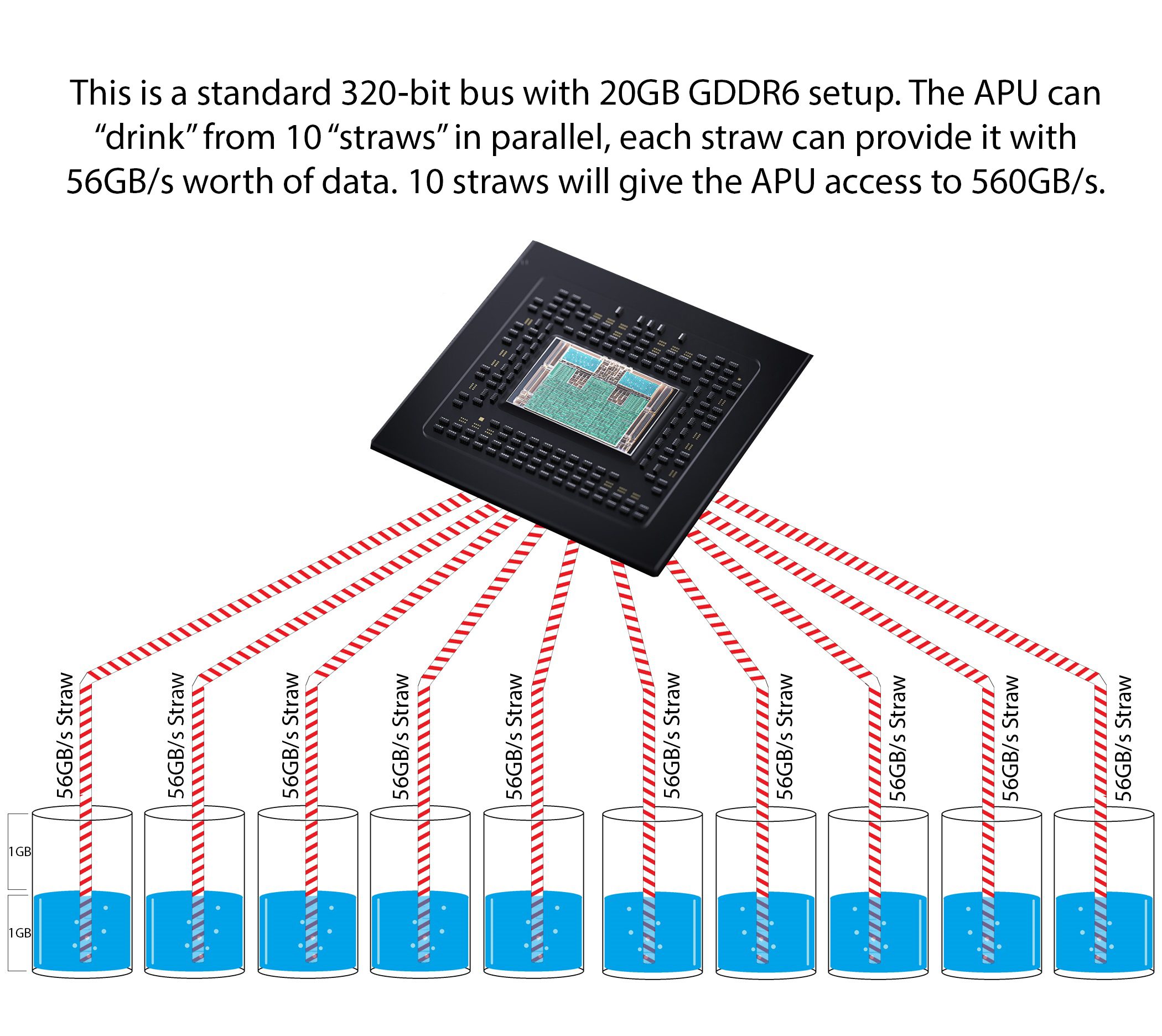

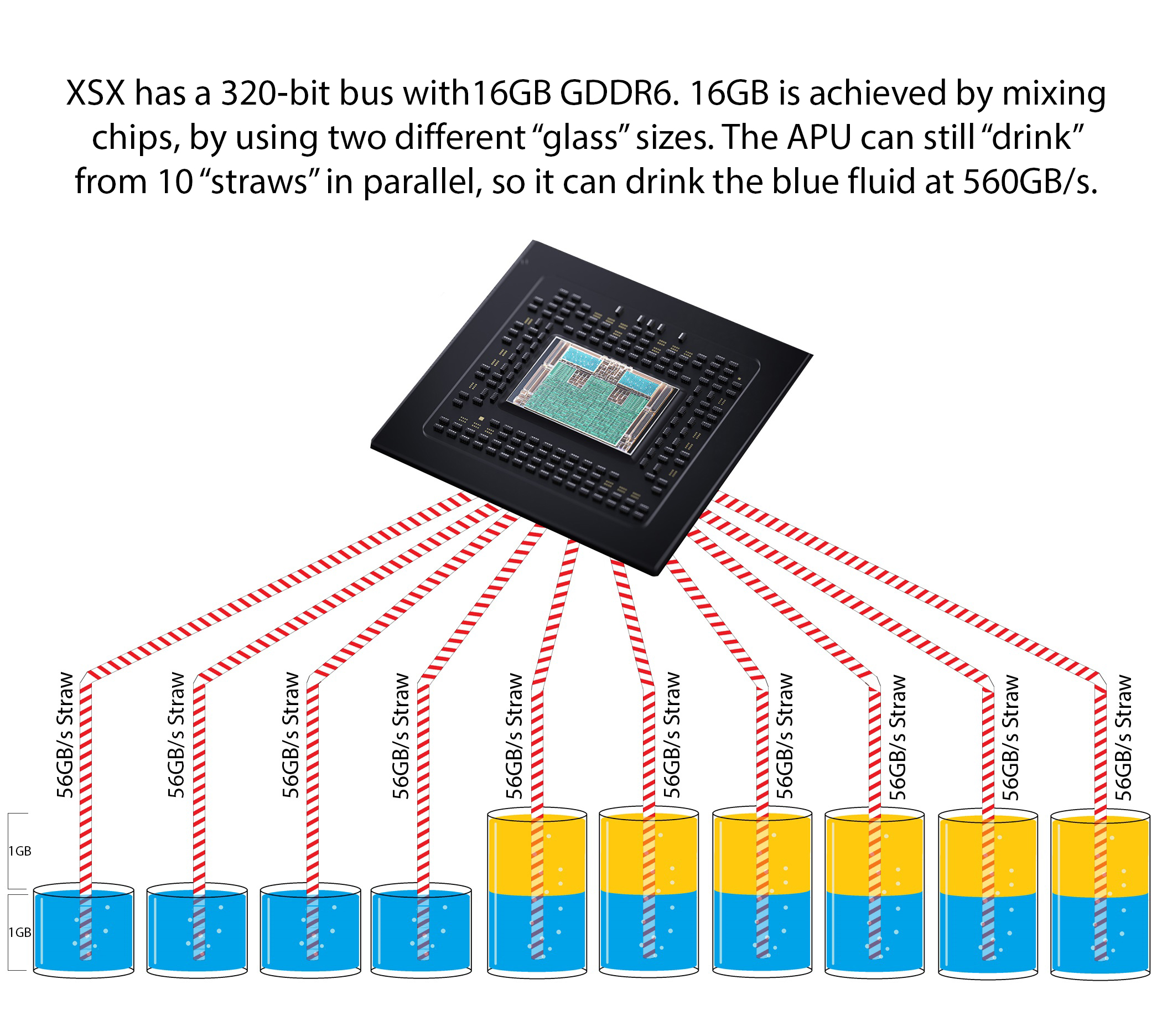

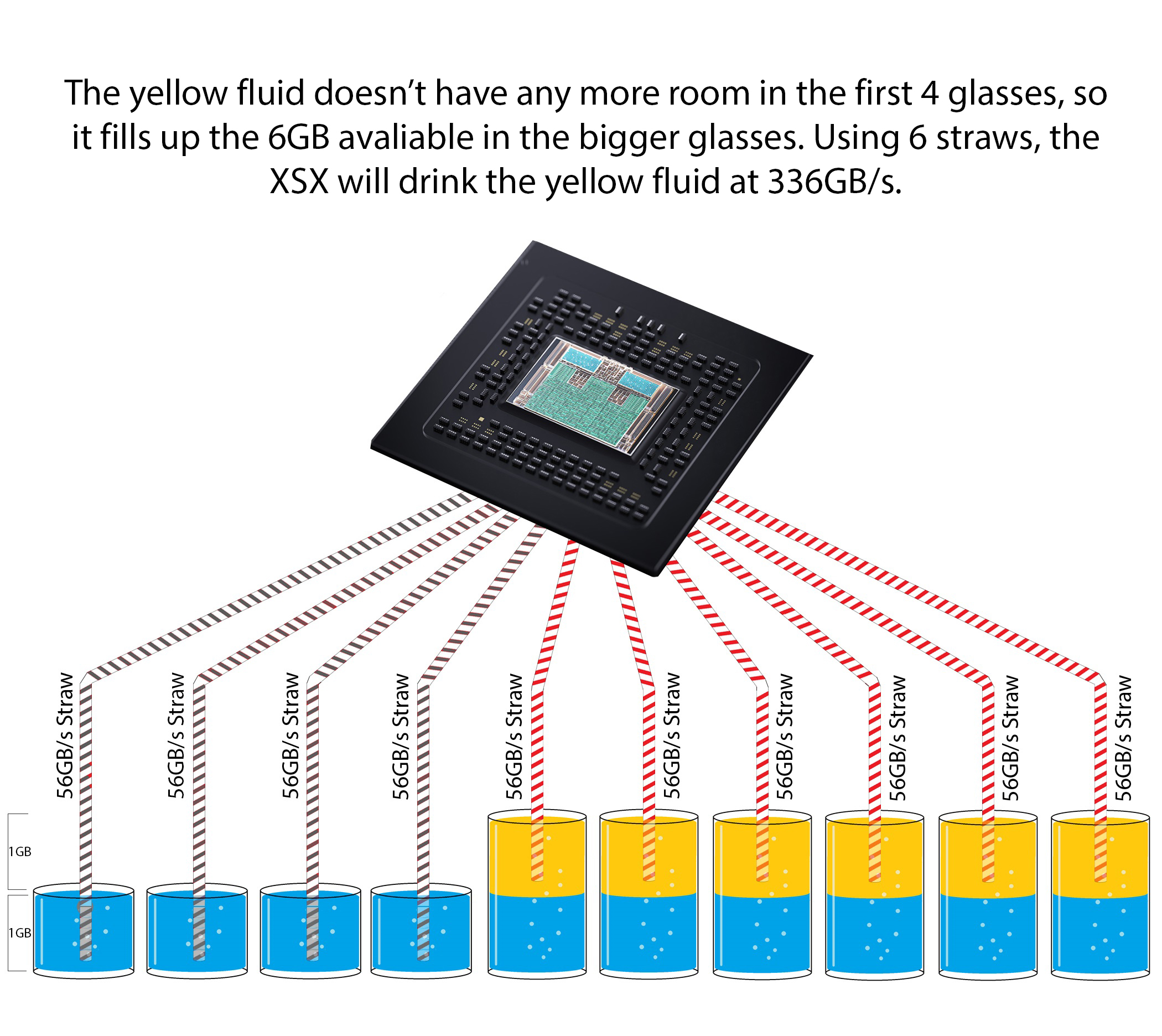

320-bit, 560 GB/s will beat 256-bit, 448 GB/s in any scenario!
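For anyone who wants to check where those two figures come from, here is a rough sketch of the usual peak-bandwidth arithmetic (bus width times per-pin data rate, divided by 8 bits per byte). The 14 Gbps per-pin rate is my assumption for the GDDR6 involved and is not stated above; the function name is just illustrative.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s for a given bus width and per-pin rate."""
    # Each pin moves data_rate_gbps gigabits per second; 8 bits make a byte.
    return bus_width_bits * data_rate_gbps / 8


# Assumed per-pin data rate (Gbps); not taken from the post itself.
ASSUMED_DATA_RATE_GBPS = 14.0

wide = peak_bandwidth_gbs(320, ASSUMED_DATA_RATE_GBPS)
narrow = peak_bandwidth_gbs(256, ASSUMED_DATA_RATE_GBPS)

print(f"320-bit bus: {wide:.0f} GB/s")
print(f"256-bit bus: {narrow:.0f} GB/s")
print(f"ratio: {wide / narrow:.2f}x")
```

At the same per-pin rate, the wider bus is ahead by exactly the ratio of the bus widths, which is the whole point.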

XSX does not split its memory at the physical level. A second 192-bit bus does not exist either.

Games have 13.5 GB of VRAM available: 10 GB of GPU-optimal memory and 3.5 GB of standard memory.

PS4 actually has a pretty similar separation.

"The actual true distinction is that:

"Direct Memory" is memory allocated under the traditional video game model, so the game controls all aspects of its allocation

"Flexible Memory" is memory managed by the PS4 OS on the game's behalf, and allows games to use some very nice FreeBSD virtual memory functionality. However this memory is 100 per cent the game's memory, and is never used by the OS, and as it is the game's memory it should be easy for every developer to use it. "

https://www.eurogamer.net/articles/digitalfoundry-ps3-system-software-memory

This division is also abstract.

A good example of a poor implementation is the GTX 970.

The slow section of the GTX 970's memory has a bandwidth of only 28 GB/s (more than 6 times lower than the PS4's memory bandwidth of 176 GB/s, and more than 12 times lower than the XSX's).
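A quick sanity check of those ratios, using only the bandwidth figures already quoted in this post (28, 176 and 560 GB/s). The variable names are mine, and I am taking the 560 GB/s figure as the XSX number for the comparison.

```python
# Bandwidth figures quoted in this post, in GB/s.
GTX970_SLOW_SEGMENT = 28.0
PS4_BANDWIDTH = 176.0
XSX_BANDWIDTH = 560.0  # the 320-bit figure quoted earlier in the post


def times_slower(slow: float, fast: float) -> float:
    """How many times lower 'slow' is compared with 'fast'."""
    return fast / slow


ps4_ratio = times_slower(GTX970_SLOW_SEGMENT, PS4_BANDWIDTH)
xsx_ratio = times_slower(GTX970_SLOW_SEGMENT, XSX_BANDWIDTH)

print(f"PS4 vs GTX 970 slow segment: {ps4_ratio:.2f}x")
print(f"XSX vs GTX 970 slow segment: {xsx_ratio:.2f}x")
```

Both ratios come out above the "more than 6 times" and "more than 12 times" marks mentioned above.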

At the same time, when the buffer is full, the average drop in performance is only 4-6 percent.