I think X3D packaging is spot on for the PS5 SoC. In fact, it could be a whole SIP, which is something the Moore's Law Is Dead YouTuber heard from one of his sources, who said of the PS5 chip, "Think Vita." That makes perfect sense once you also factor the cooling patent into the picture.

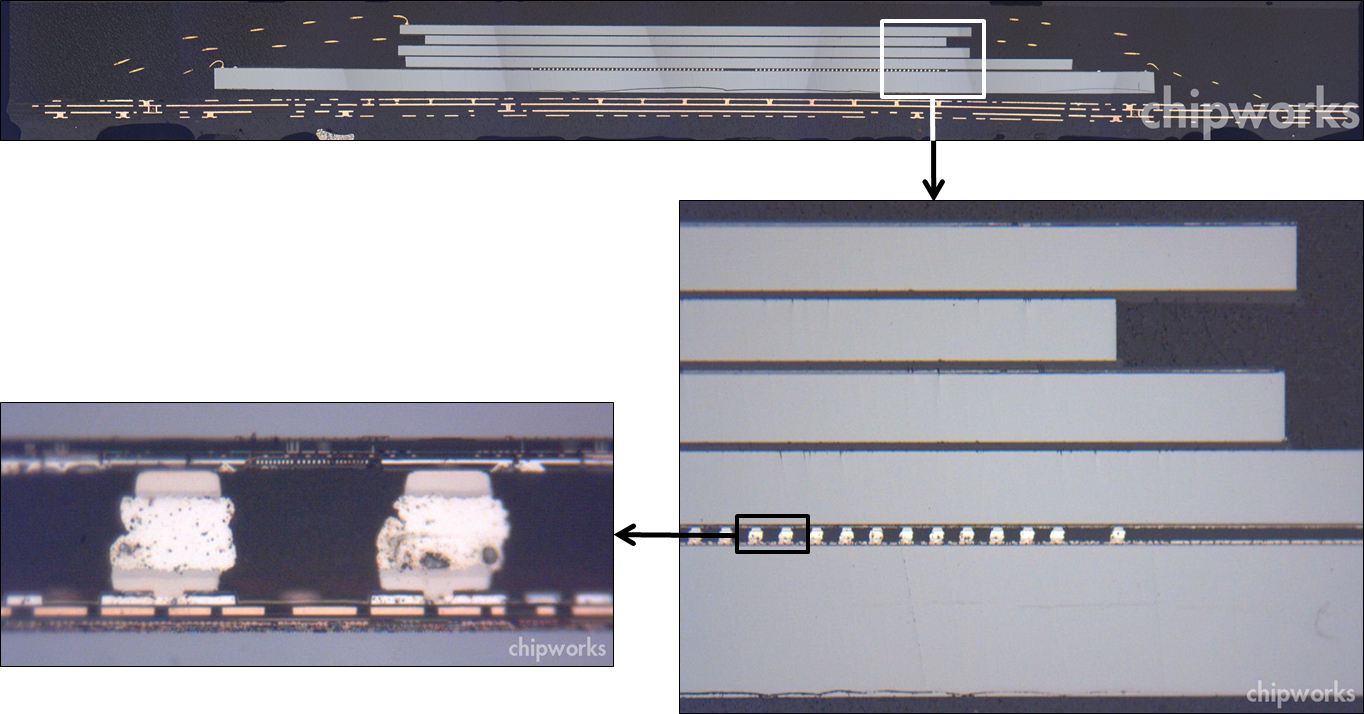

Article explaining PS Vita chip

Now remember that Sweeney mentioned the NAND chips sit so close to the main processor that access latency almost disappears. So it is quite possible those chips are stacked around the main die in a single package: the APU in the middle, NAND chips on all 4 sides, stacked 3 high to make 12 total, all connected by Infinity Fabric and a secondary storage controller chip. They would be so close that their latency would compare to that of the VRAM chips, which are still placed conventionally all around the SIP.

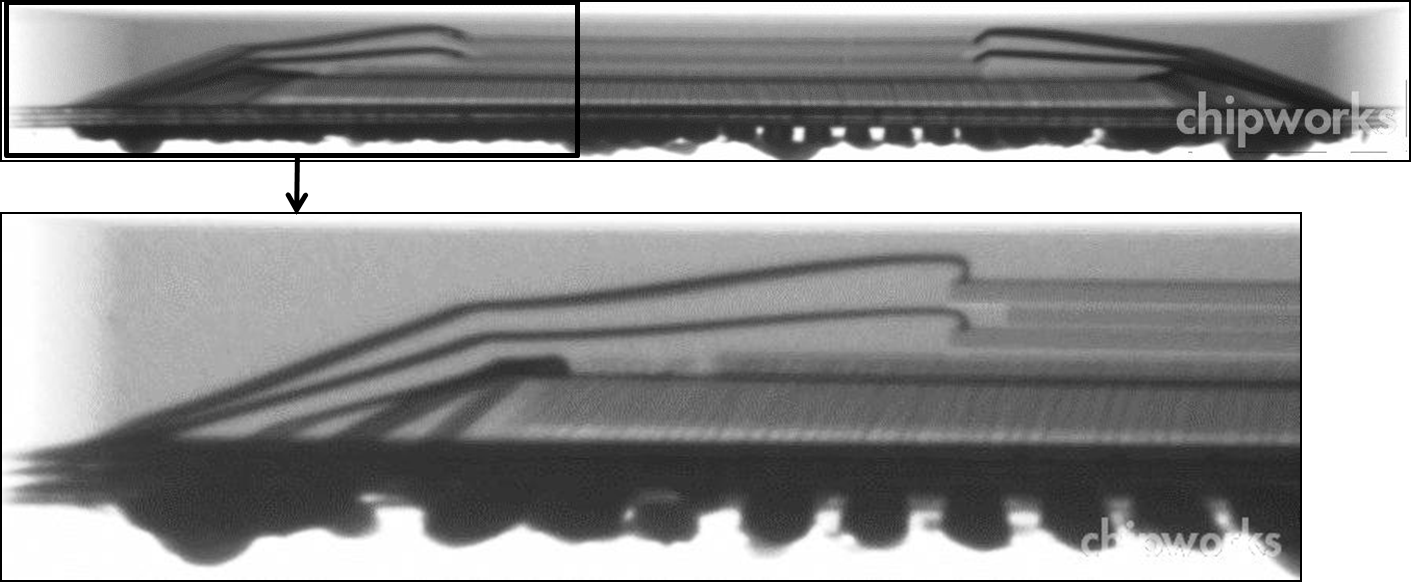

Just look at the monstrous package size in that image: it is almost as big as the heatsink. In the middle is the PCB, which the heat dissipation rods pass through. The thing labeled '5' is the giant SIP with the main APU, storage controller and stacked NAND chips, all connected with Infinity Fabric. That gives you >10x bandwidth, and it makes perfect sense for a custom-engineered piece of hardware like the PS5. Now we know why the console and cooling design is being kept so secret and hyped up beyond anything for the reveal.

"A heatsink (21) is disposed on a lower surface of a circuit board (10). The circuit board (10) has through holes (h1) that penetrate the circuit board (10) in an area (A) where an integrated circuit apparatus (5) is disposed. Heat conduction paths (11) are provided in the through holes (h1). The heat conduction paths (11) connect the integrated circuit apparatus (5) and the heatsink (21).

This structure allows for disposition of a component different from the heatsink (21) on the same side as the integrated circuit apparatus (5), thus ensuring a higher degree of freedom in a component layout."