Leonidas

Member

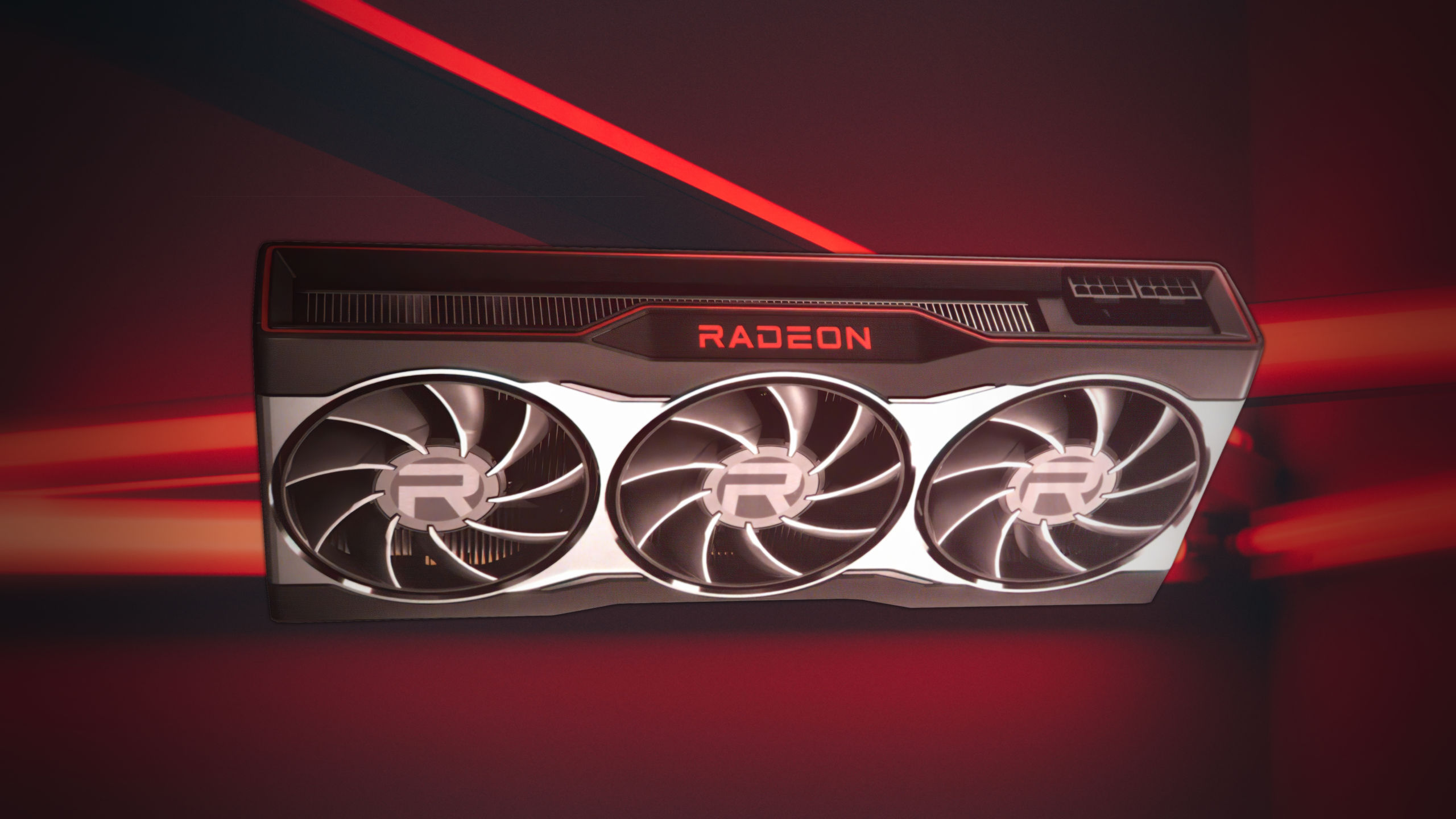

AMD Radeon RX 6900XT to feature Navi 21 XTX GPU with 80 CUs - VideoCardz.com

AMD Radeon RX 6000 series, what we know so far: AMD Radeon RX 6000 series specifications have been known to us since Monday. This week AMD provided a lot of details to AIBs, including new (working) drivers, final SKU names, and so on. We have been able to cross-check parts of the specs and we […]

6900XT (AMD exclusive)

80 CU (5120 Stream Processors)

2040MHz Game Clock (2330MHz Boost Clock)

16GB GDDR6 @ 16Gbps

6800XT

72 CU (4608 Stream Processors)

2015MHz Game Clock (2250MHz Boost Clock)

16GB GDDR6 @ 16Gbps

6800

64 CU (4096 Stream Processors)

1815MHz Game Clock (2150MHz Boost Clock)

16GB GDDR6 @ 16Gbps
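As a quick sanity check on the leaked list, here is a minimal Python sketch that recomputes the stream processor counts from the CU counts. The 64-stream-processors-per-CU ratio is an assumption inferred from the numbers above (5120 / 80 = 64), and the `LEAKED_SPECS` table and `stream_processors` helper are just illustrative names, not anything from the article.

```python
# Leaked specs as listed above: (compute units, game clock MHz, boost clock MHz).
# These are rumored figures from the post, not confirmed specifications.
LEAKED_SPECS = {
    "6900XT": (80, 2040, 2330),
    "6800XT": (72, 2015, 2250),
    "6800": (64, 1815, 2150),
}

# Assumption: 64 stream processors per CU, which matches every row in the list.
SP_PER_CU = 64


def stream_processors(compute_units: int) -> int:
    """Return the stream processor count implied by a CU count."""
    return compute_units * SP_PER_CU


for name, (cus, game_mhz, boost_mhz) in LEAKED_SPECS.items():
    print(f"{name}: {cus} CU -> {stream_processors(cus)} stream processors")
```

All three rows in the list come out consistent with that ratio.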

Interested to see where price and performance land. Since even the 6800 gets 16 GB of GDDR6 at the same 16 Gbps as the 6900XT, the 6800 looks like the best option for right now. Its reference clocks are lower than the consoles' though... Interested to see if the 6800 can OC to a 2 GHz game clock like the others. Could be an interesting card if so.
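For a rough idea of how much overclock that would take, here is a small Python sketch. It assumes "2 GHz" means the game clock (the 6800's leaked boost clock is already 2150MHz), and `uplift_percent` is just a helper name made up for this example.

```python
def uplift_percent(base_mhz: float, target_mhz: float) -> float:
    """Percentage increase needed to go from base_mhz to target_mhz."""
    return (target_mhz / base_mhz - 1) * 100


# Leaked 6800 game clock from the list above; 2000 MHz is the assumed OC target.
RX6800_GAME_MHZ = 1815
TARGET_MHZ = 2000

needed = uplift_percent(RX6800_GAME_MHZ, TARGET_MHZ)
print(f"6800 needs about {needed:.1f}% more game clock to reach {TARGET_MHZ} MHz")
```

That works out to roughly a 10% bump over the leaked game clock, assuming the leaked numbers hold.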

Power consumption is concerning though, the same issue I had with the RTX 3080/3090.

If igorsLAB's power consumption numbers are correct, the 6900XT is ~350 watts, the 6800XT is ~320 watts, and the 6800 is ~290 watts.

OC models of 6800 could be over 300 watts too.
