longdi

Banned

I think the SX design has more to offer. But by locking it at only 1.8 GHz, it seems like MS is selling it short.

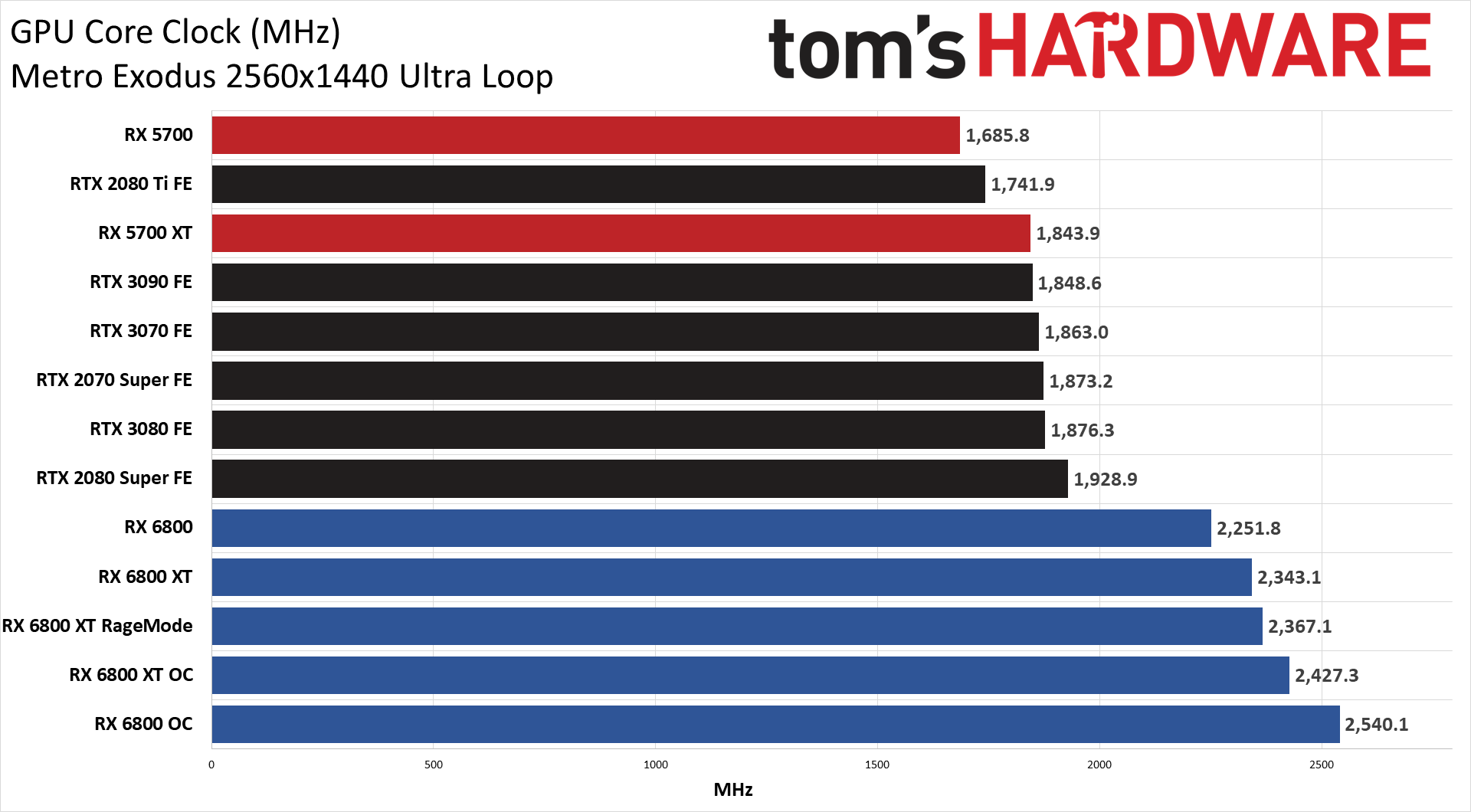

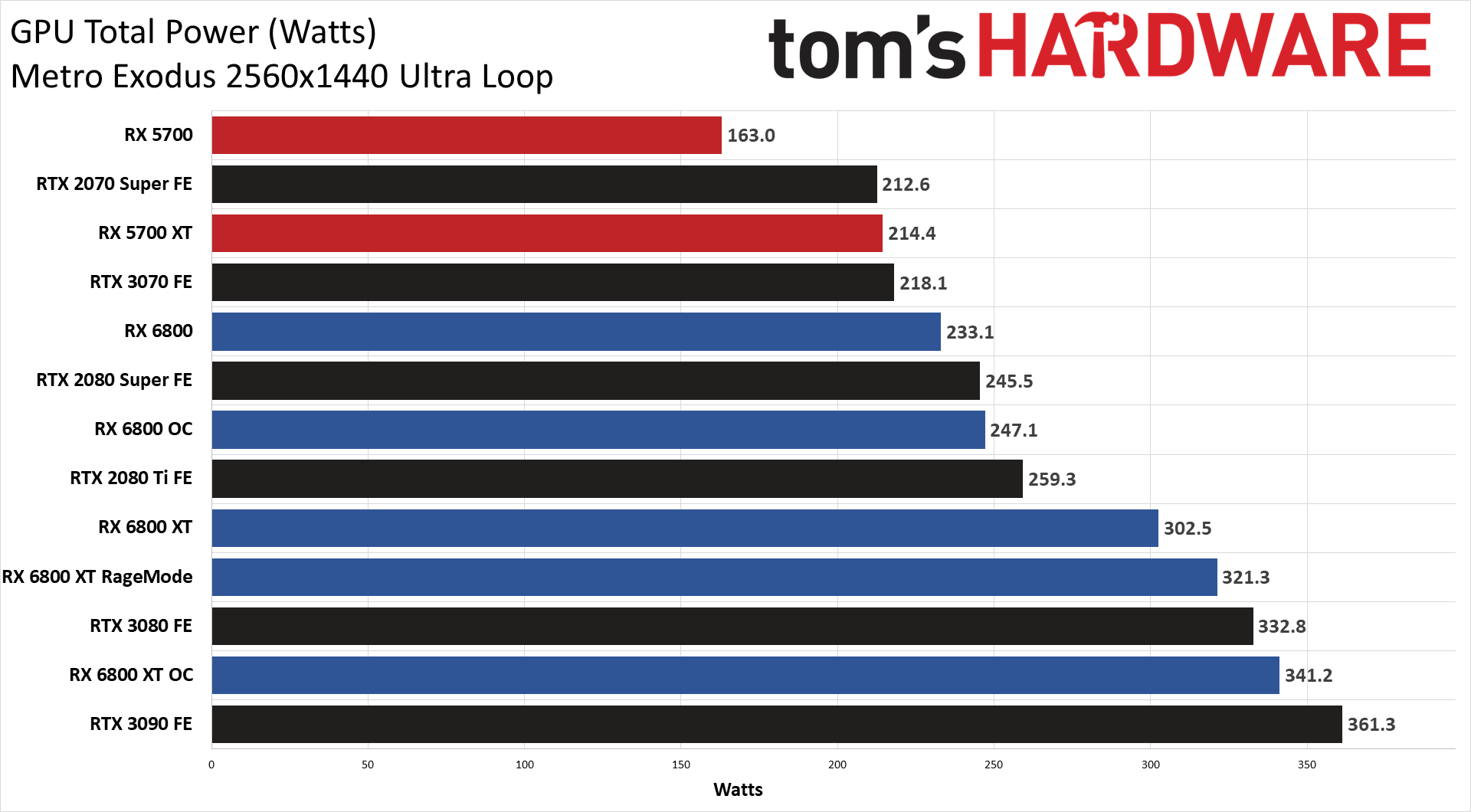

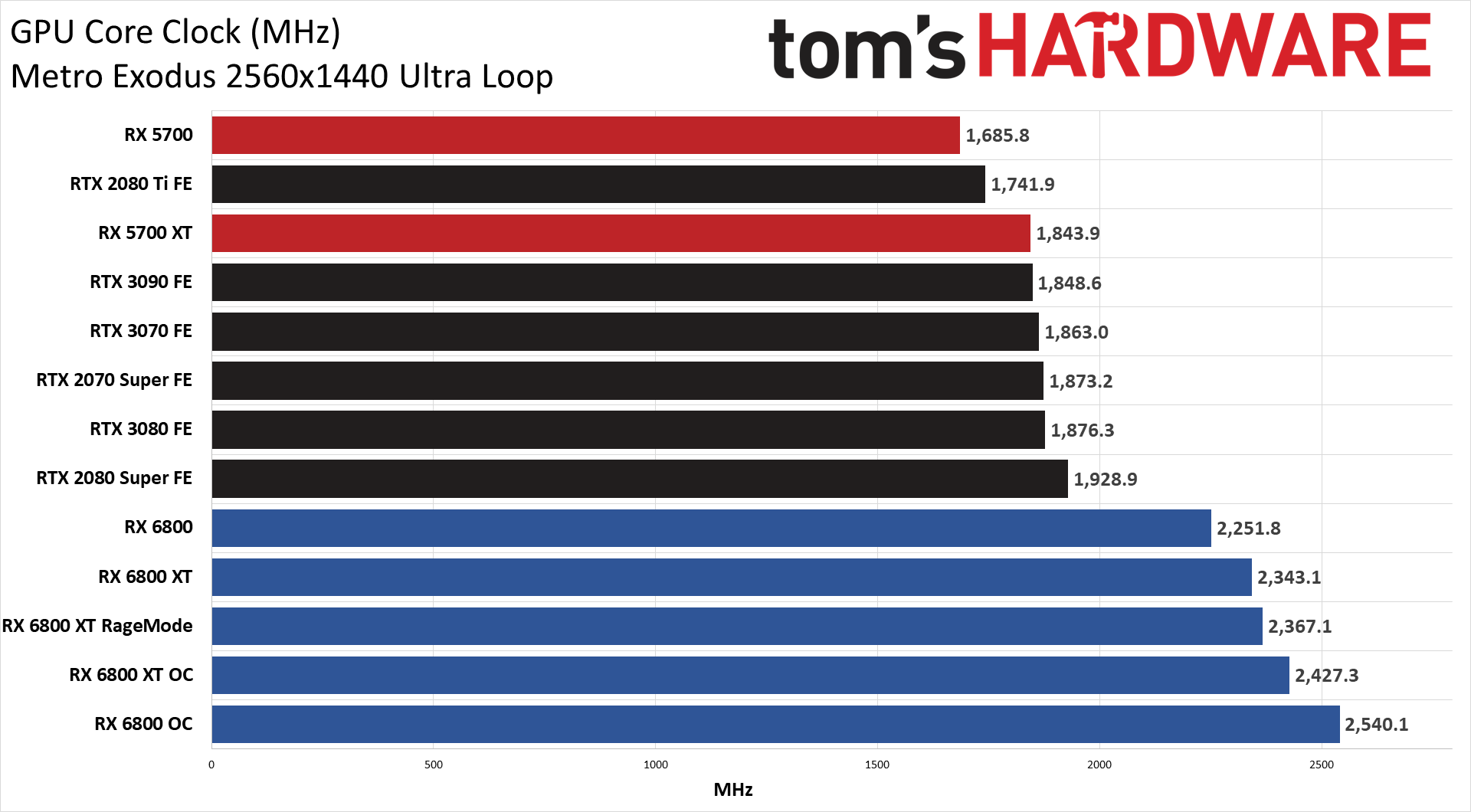

Look at the RX 6800, the closest card to the SX.

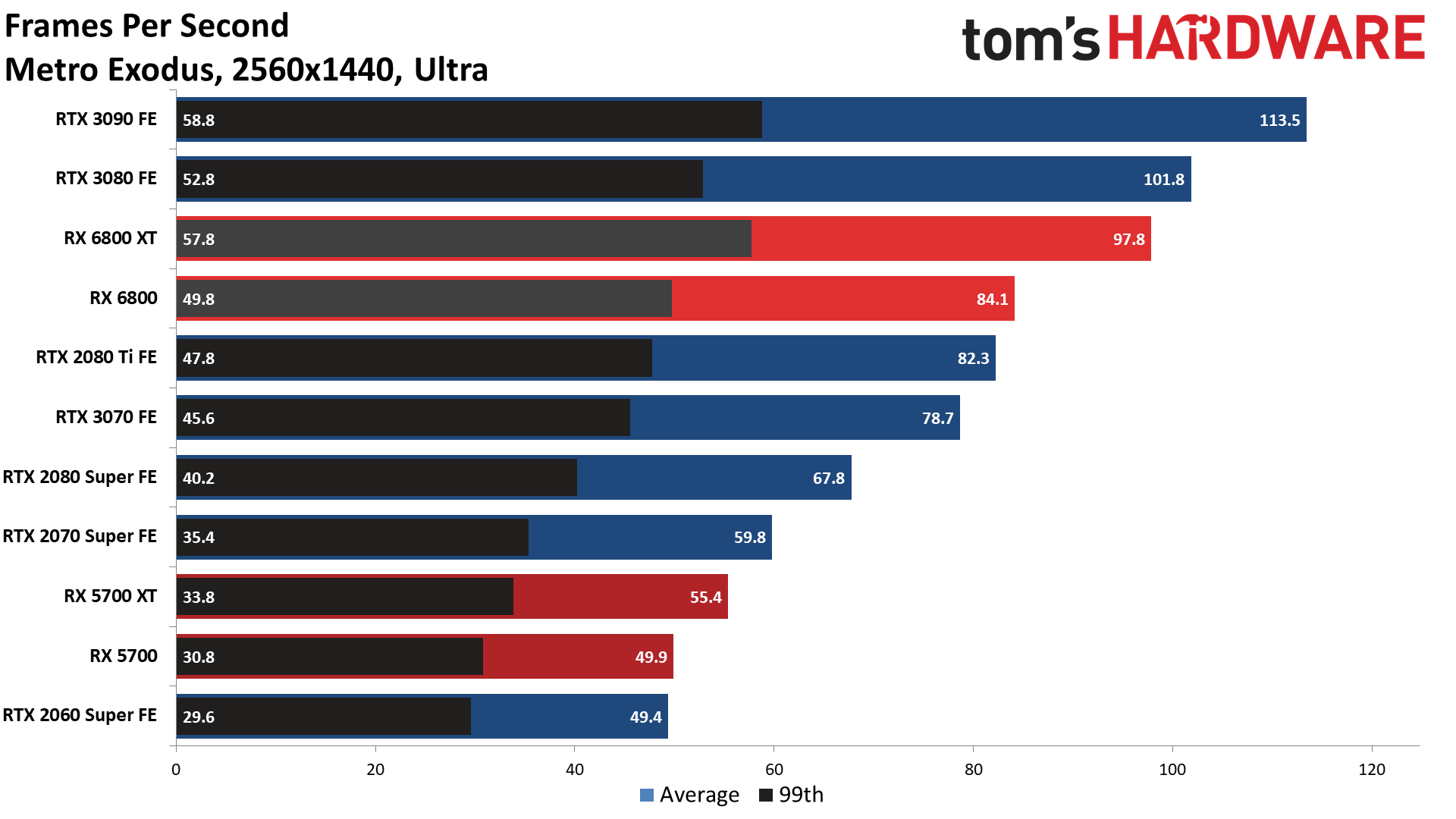

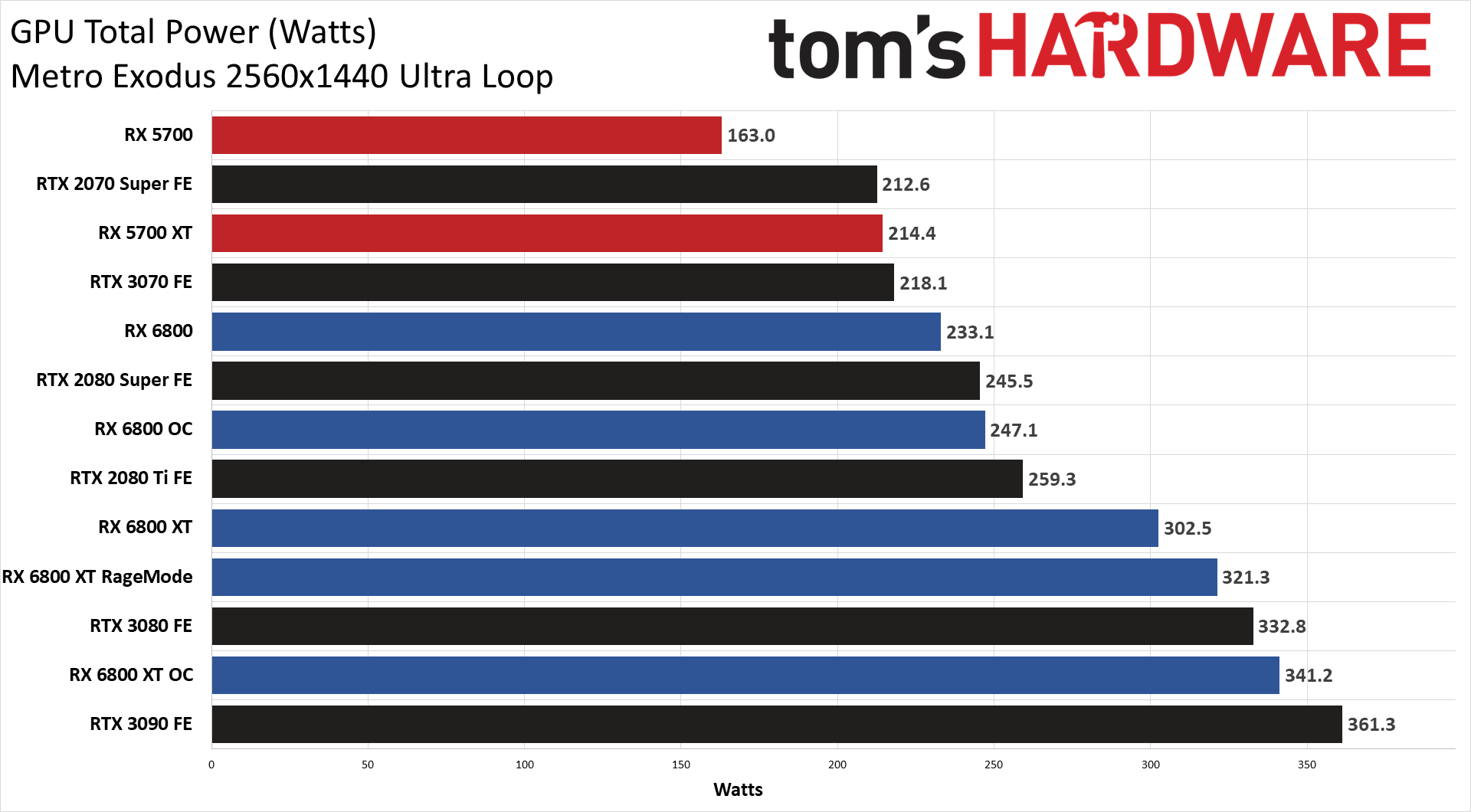

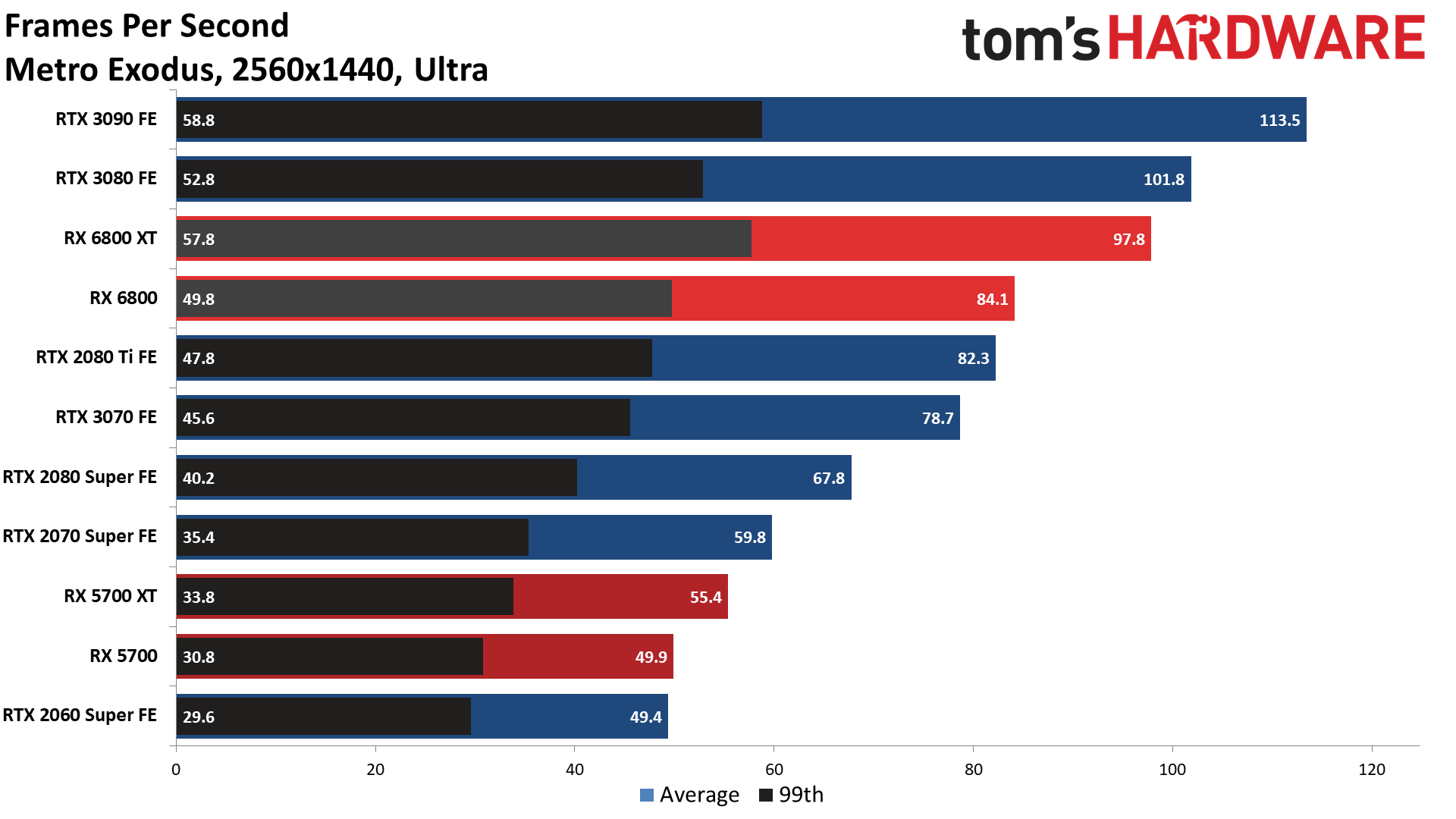

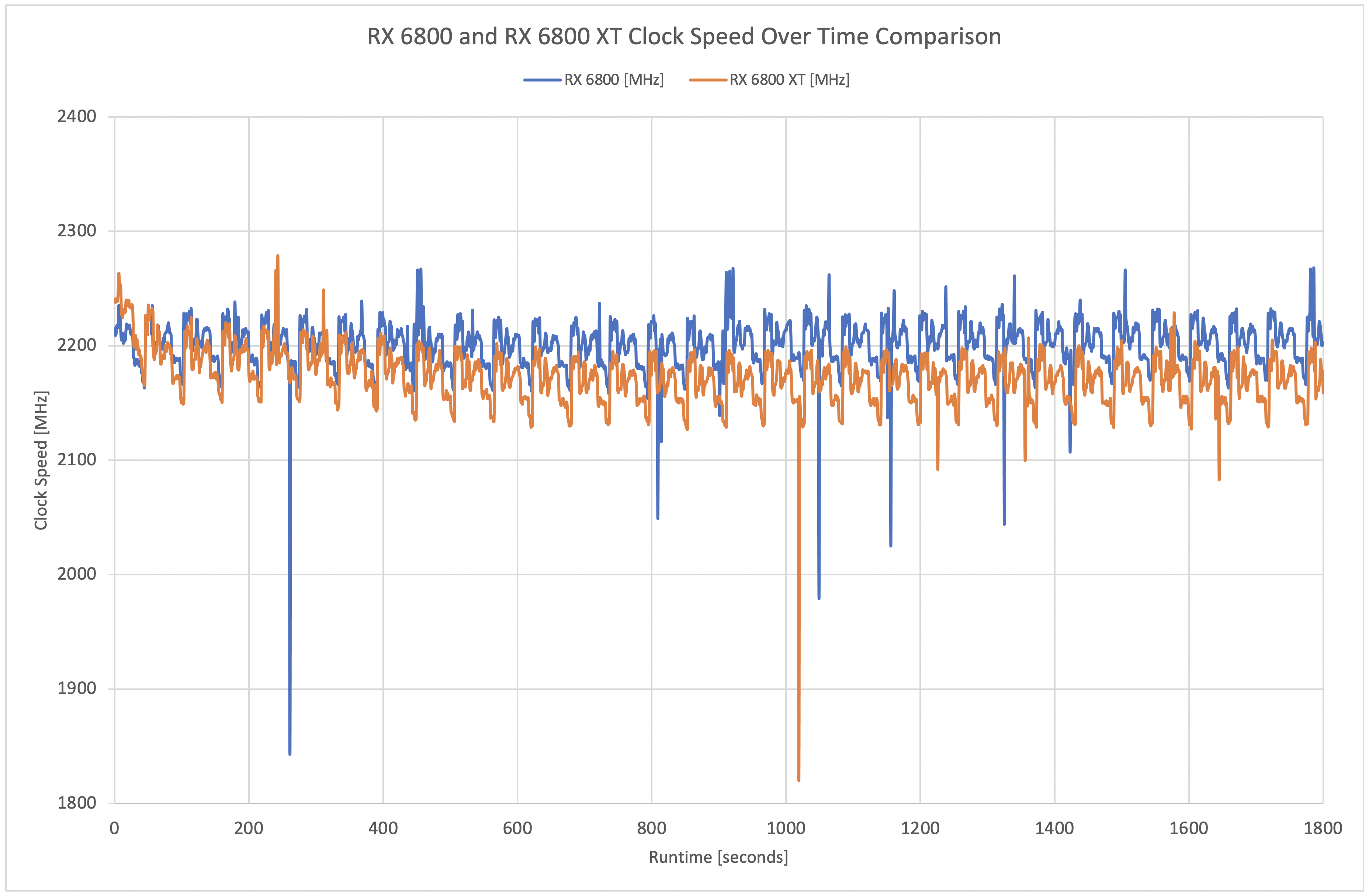

The RX 6800 runs Metro at 1440p ultra at around 2.25 GHz and draws only 230 W. It produces 85 fps, beating the 2080 Ti FE.

From reviews, a 7% overclock on the 6800 gives about a 3% fps improvement.

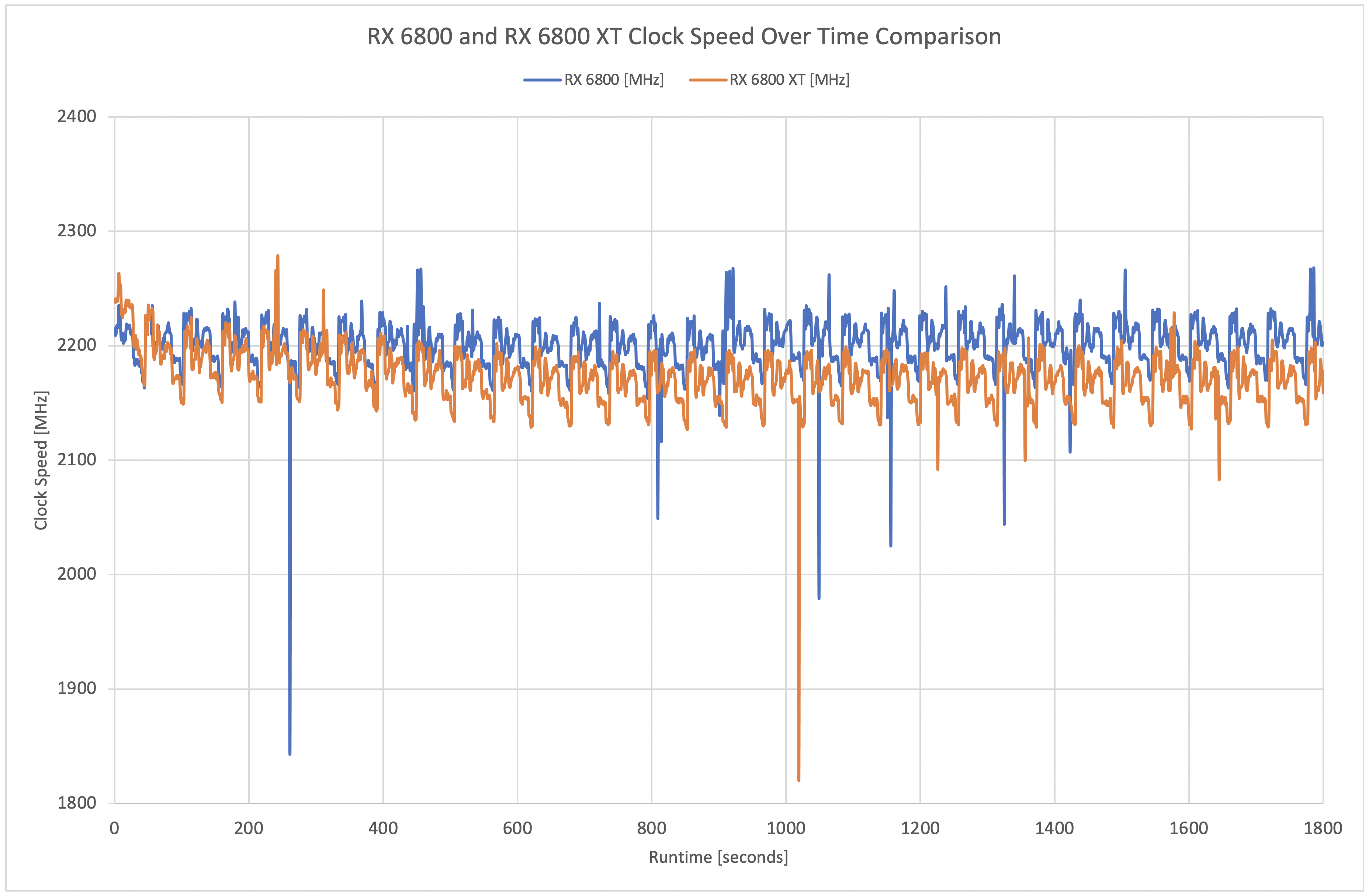

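Working backwards, going from 1.8 GHz to 2.25 GHz is a 25% increase. If fps scales at the same rate as in the overclocking results (roughly 3% fps per 7% clock), capping the RX 6800 at 1.8 GHz would cost about 10~12% in performance, so Metro at 1440p may lose around 10 fps.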
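Here is a quick sketch of that back-of-the-envelope math, in case anyone wants to plug in other numbers. It assumes fps scales linearly with clock at the ratio seen in the overclocking reviews, which is a rough guess and may not hold that far below stock clocks. The function name and parameters are made up for illustration.

```python
def estimate_capped_fps(base_fps, base_clock_ghz, capped_clock_ghz,
                        oc_clock_gain=0.07, oc_fps_gain=0.03):
    """Rough estimate of fps after capping the GPU clock.

    Assumption: fps changes linearly with clock, at the ratio observed
    when overclocking (about 3% fps per 7% clock). Extrapolating that
    ratio downward is a guess, not a measurement.
    """
    # fps gained per unit of relative clock gain, about 0.43 here
    scaling = oc_fps_gain / oc_clock_gain
    # relative clock gap, measured from the capped clock (2.25 / 1.8 - 1 = 25%)
    clock_gap = base_clock_ghz / capped_clock_ghz - 1.0
    # estimated fraction of fps lost by capping
    fps_loss_fraction = scaling * clock_gap
    capped_fps = base_fps * (1.0 - fps_loss_fraction)
    return fps_loss_fraction, capped_fps


loss, fps = estimate_capped_fps(base_fps=85.0, base_clock_ghz=2.25,
                                capped_clock_ghz=1.8)
print(f"estimated loss: {loss:.1%}, capped fps: {fps:.1f}")
```

With the numbers from this post it lands at roughly an 11% loss, or about 9 fps off the 85 fps result, which is in the same ballpark as the estimate above.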

Let's see if any reviewers test the 6800/6800 XT with capped clocks. Please share if you come across any.

