Freelance Yakuza

Unlock the damn clock

I don't think so. MS fangirls have been far more vocal in spreading FUD, especially the 12 teraflops nonsense.

You can't compare these values from Xbox One X games to Xbox Series X compatibility. Xbox One X games are optimized for GCN. Those optimizations are counterproductive with RDNA 1 or 2. RDNA chips will still be faster, but they have to run code that was not optimized for RDNA.

BTW, the CU IPC gain from Xbox One X to Xbox Series X is 25%, which exactly matches the GCN to RDNA 1 IPC increase... The RDNA 2 CU IPC increase is something around 10-15% over RDNA 1 CU IPC.

MS said the IPC increase from Xbox One X to Xbox Series X is 25%.

You can see this with newer games on PC, where RDNA based GPUs are much faster than Vega based GPUs. Only the Radeon VII can sometimes keep up, thanks to its much higher bandwidth and memory capacity.

Source on sdk?

They designed a box whose internals are pretty much squished together. To run at those kinds of speeds, they would need a much more expensive cooling system.

Considering how far behind they already are, SDK wise, any improvements to the clocks will probably have to wait until the mid generation refresh.

They weren't ready to launch this year.

I think the SX design has more to offer. But by locking it at only 1.8 GHz, it seems like MS is selling it short.

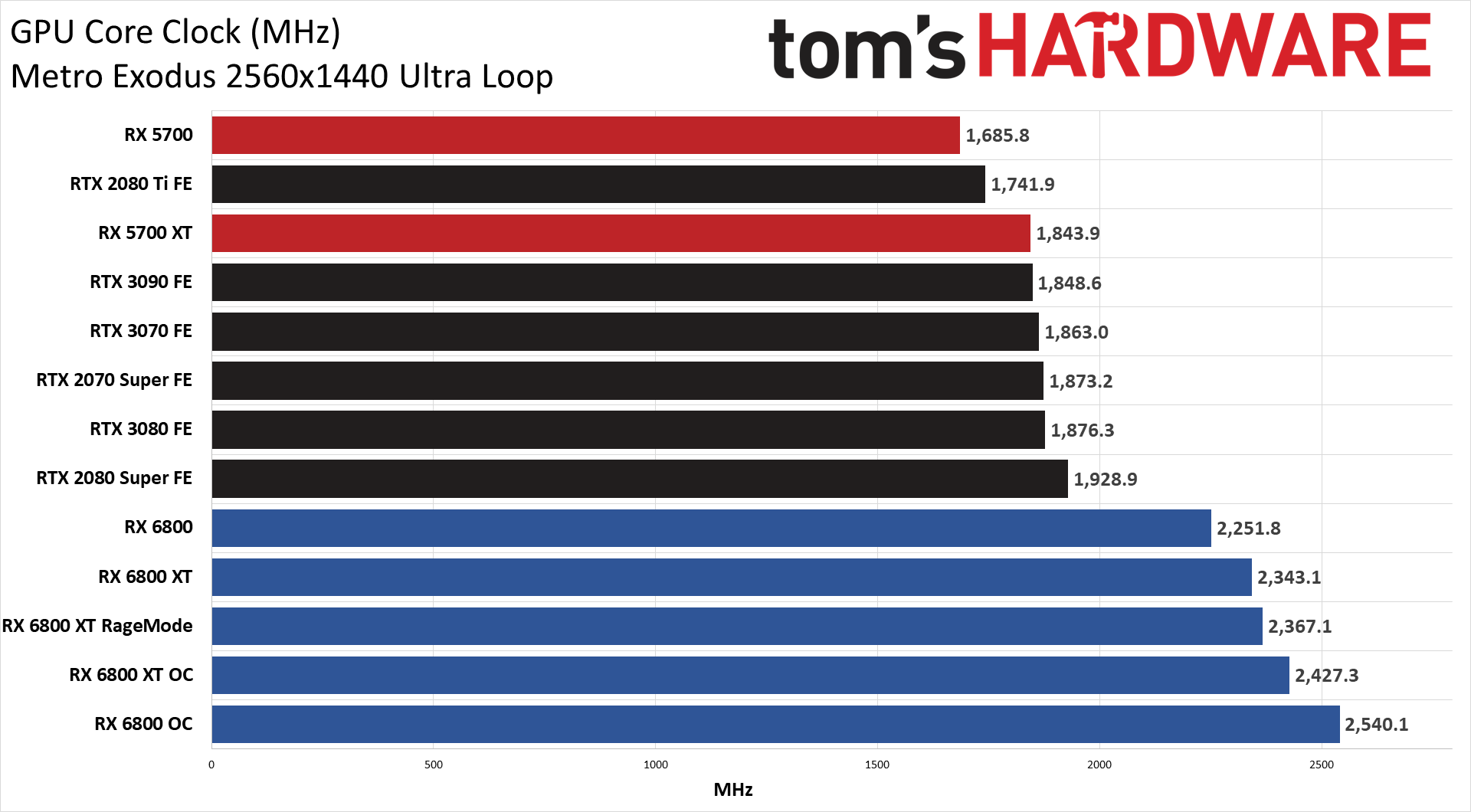

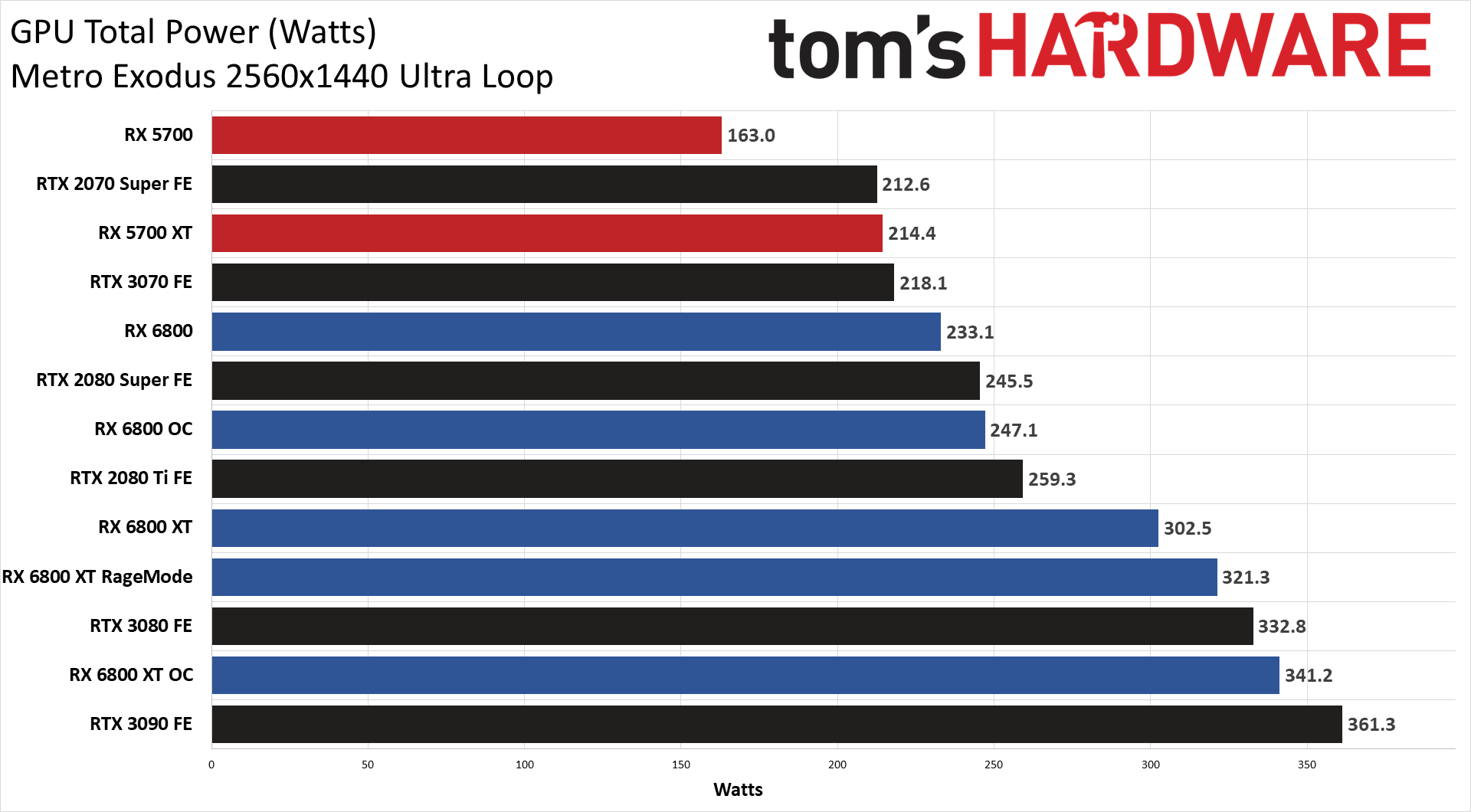

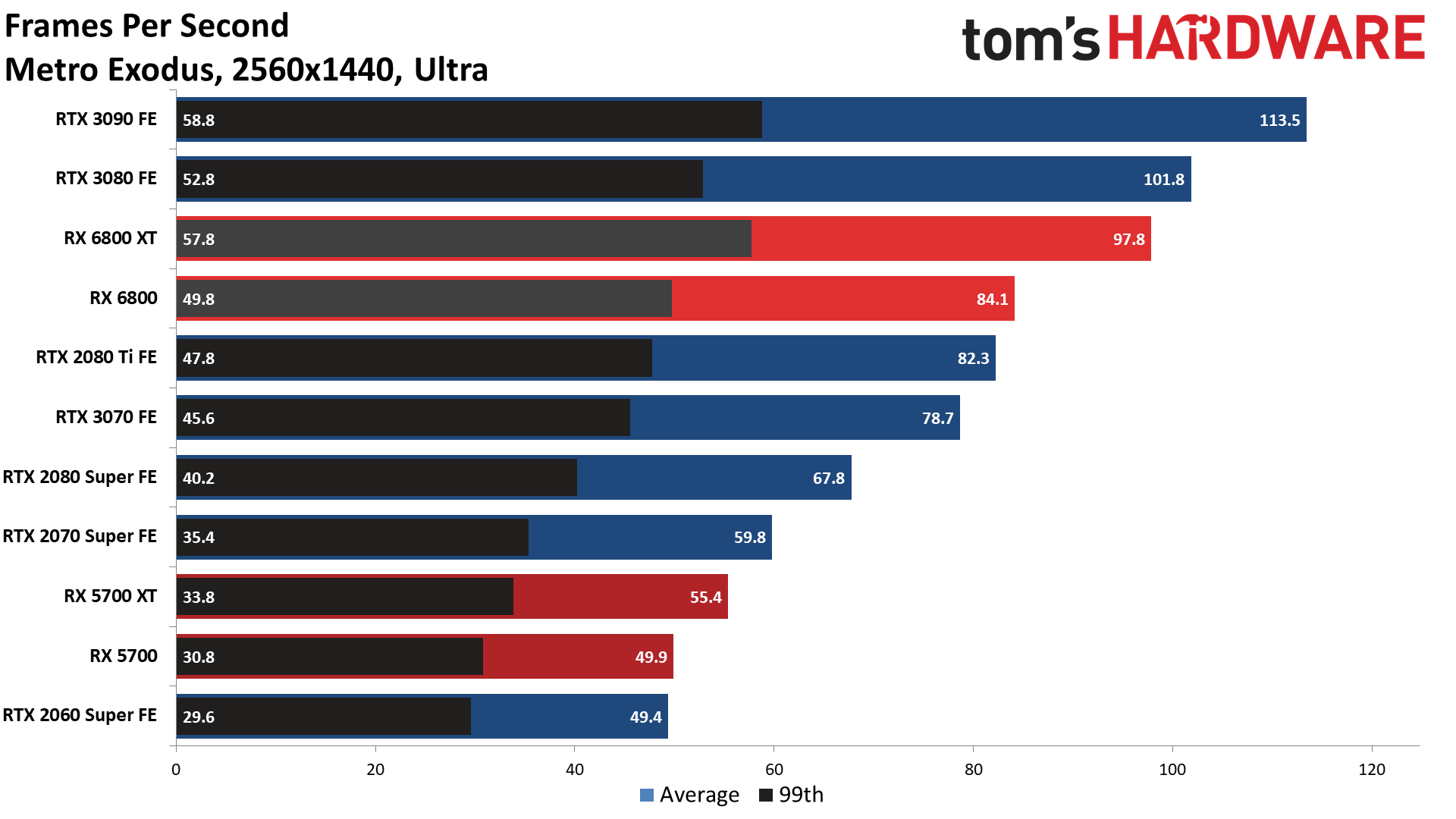

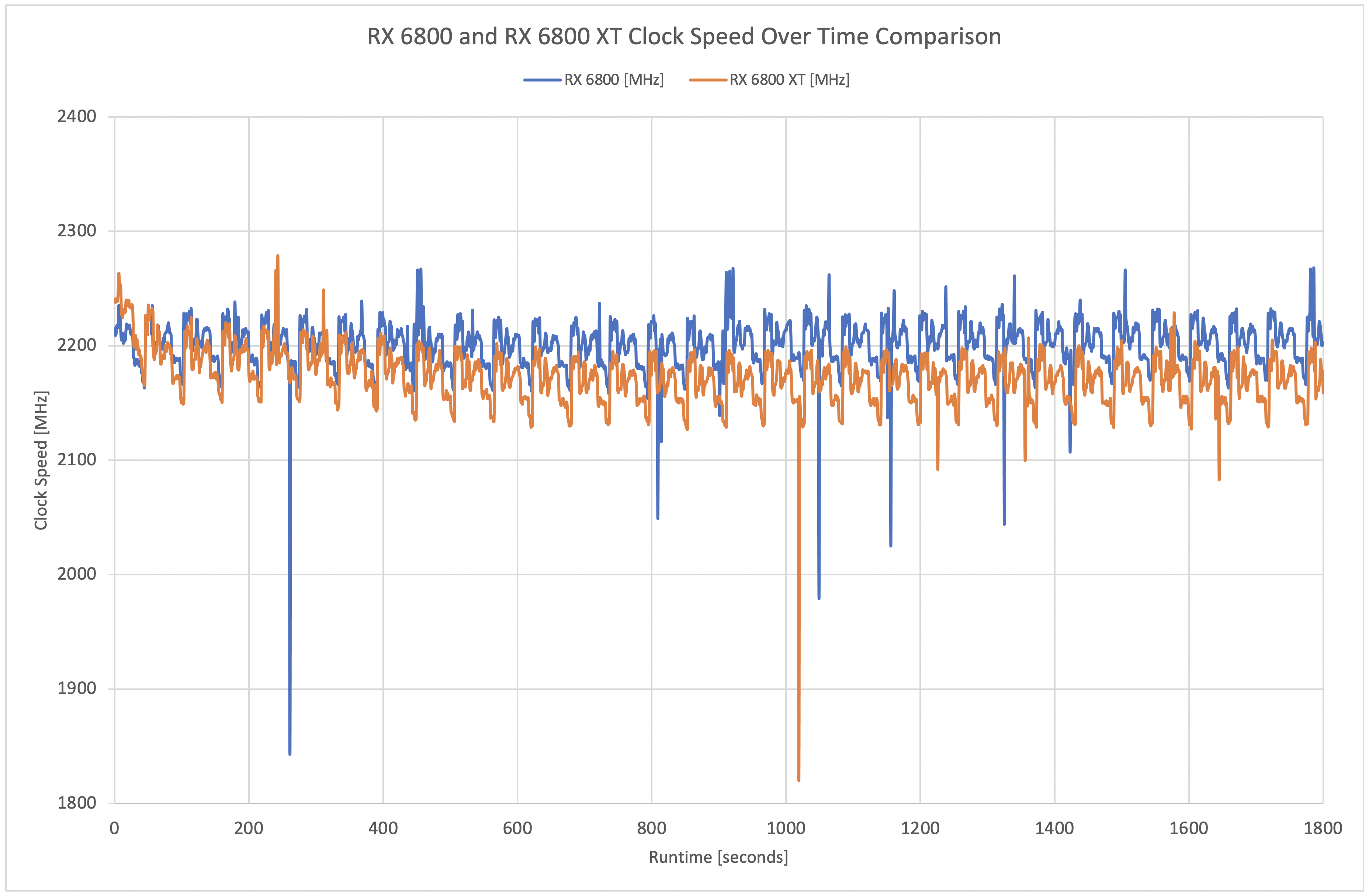

Look at the RX 6800, the closest to the SX.

The RX 6800 runs Metro at 1440p ultra at around 2.25 GHz and draws only 230 W. It generates 85 fps, beating the 2080 Ti FE.

From reviews, a 7% overclock on the 6800 gives about a 3% fps improvement.

Working backwards, 1.8 GHz to 2.25 GHz is a 25% increase. So if we capped the RX 6800 to 1.8 GHz, it would lose 10~12% in performance, so Metro at 1440p might lose around 10 fps.

Let's see if any reviewers test capped 6800/6800 XT clocks. Please share if you come across them.
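
As a rough sanity check on that estimate, here is a minimal Python sketch that linearly extrapolates the review figure (a 7% overclock giving about 3% more fps) down to a 1.8 GHz cap. The function name and the assumption that fps keeps scaling linearly across a 25% clock range are mine, not from any review; real cards will likely not scale this cleanly.

```python
def estimate_capped_fps(base_fps, base_clock_ghz, capped_clock_ghz,
                        fps_gain_per_clock_gain):
    """Estimate fps at a lower clock, assuming fps scales linearly with clock.

    fps_gain_per_clock_gain is the observed ratio of fps gain to clock gain
    (hypothetical input: 3% fps for a 7% overclock gives 3 / 7).
    """
    # Relative clock increase going from the capped clock up to the base clock.
    clock_gain = base_clock_ghz / capped_clock_ghz - 1.0
    # Expected relative fps increase over that same clock range.
    fps_gain = clock_gain * fps_gain_per_clock_gain
    return base_fps / (1.0 + fps_gain)


# Figures quoted in the post: 85 fps at about 2.25 GHz, capped to 1.8 GHz.
capped = estimate_capped_fps(85.0, 2.25, 1.8, 3.0 / 7.0)
loss_pct = (85.0 - capped) / 85.0 * 100.0
print(f"estimated fps at 1.8 GHz: {capped:.1f} (about {loss_pct:.1f}% lower)")
```

Under these assumptions the loss comes out a little under 10%, so in the same ballpark as the 10~12% guess, but only capped-clock benchmarks would settle it.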

I totally forgot about that.

But it was not a typical overclock, they just unlocked CPU/GPU speeds, which were there since the release.

If you owned a hacked one, you could already access them much earlier.

I think it is even the same for the Switch.

They underclocked the already underclocked SoC for more battery life.

Of course, this could apply to the PS5 and XSX too: theoretically, some modes may not be unlocked for various reasons.

It is not a GAFer leaker... it is an AMD leaker.

Xbox indeed is the only GPU that supports all new Advanced RDNA 2.0 features with DirectX 12U.

That doesn't mean the hardware is fully RDNA 2.0 (it is not... even if you ignore the CUs and front-end, it has no Infinity Cache, for example).

I agree with your post in theory. If they were just making a console chip.

But clearly that's not their key business priority anymore, so they built a compromise chip to serve multiple functions and also meet their marketing target.

The biggest flaw in Xbox console design over the last 5 years is obsessing over TFLOPS. The One X was 6, the SX is 12! Nice big round numbers sure, but totally arbitrary. Who cares.

The architect and engineers should be allowed to design the system for the ideal performance, unrestricted by marketing pressure. Like the PS5 was.

They wanted to build a chip for XCloud to run 4 Xbox One S games at once.

Phil also said they wanted to double the One X's 6 TFLOPs. So 12 TFLOPs was the marketing target.

Xbox One S has 14 CUs (12 active) and uses around 5 GB of RAM.

14x4=56 CUs. 5x4=20 GB. And 20 GB of RAM uses a 320 bit bus.

So they built a die with 56 CUs and a 320 bit bus, and so the XCloud chip was built.

But how to use it as a $500 console? Well, 20 GB RAM cost too much, so they went down to 16 GB and made it work with the same die. (Thus the split bandwidth.)

Then consoles need to disable 4 CUs for yields. So 52 active CUs for the XSX.

Plus, they save money and get better yields because they only need 48 active usable CUs for their server chips, so they can salvage some of the chips that don't yield the 52 CU min needed for the console supply.

What clock speed does 52 CUs need to be set at to hit 12 TFLOPs?

52 CUs x 64 shaders per CU x 2 FLOPs per clock x 1800 MHz = 11.98 TFLOPs

So at 1.8 GHz they'd only have 11.98 TFLOPs, not quite the round 12 they wanted for marketing, so 1.825 GHz got them to their goal.
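
The arithmetic above can be sketched in a few lines of Python. This is just the standard peak-throughput formula (CUs x shaders per CU x 2 FLOPs per clock x clock speed); the function names are made up for illustration, and the numbers are theoretical peaks, not measured performance.

```python
def peak_tflops(active_cus, clock_mhz, shaders_per_cu=64, flops_per_clock=2):
    """Theoretical peak FP32 throughput in TFLOPs."""
    # CUs * shaders * FLOPs per clock * MHz gives MFLOPs; 1e6 MFLOPs = 1 TFLOP.
    return active_cus * shaders_per_cu * flops_per_clock * clock_mhz / 1e6


def min_clock_mhz(target_tflops, active_cus, shaders_per_cu=64,
                  flops_per_clock=2):
    """Lowest clock (MHz) at which the given CU count reaches the target."""
    return target_tflops * 1e6 / (active_cus * shaders_per_cu * flops_per_clock)


at_1800 = peak_tflops(52, 1800)       # just under 12 TFLOPs
at_1825 = peak_tflops(52, 1825)       # the shipped Series X clock
needed = min_clock_mhz(12.0, 52)      # clock needed for exactly 12 TFLOPs

print(f"52 CUs @ 1800 MHz: {at_1800:.2f} TFLOPs")
print(f"52 CUs @ 1825 MHz: {at_1825:.2f} TFLOPs")
print(f"clock needed for 12 TFLOPs: {needed:.0f} MHz")
```

So anything from roughly 1803 MHz upward clears the 12 TFLOPs mark with 52 CUs, and 1825 MHz lands at about 12.15.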

The Xbox Series X SoC was not made to beat the PS5. It was a dual design chip built for XCloud servers to run Xbox One games to be streamed to phones via Game Pass, but also serves as their $500 Xbox One X replacement with 2X the floppies.

Maybe this is the truth?

I mean he is 100% in all AMD data he shares.

Yeah he's a legit leaker.

Tomorrow's rumor: "Microsoft will be unlocking the clock rate of Xbox Series S & X & giving it more power to crush PS5 they was just testing Sony this isn't even their final form"

There’s a workaround for using multi-threading even before the update.

"While developers can now use the June 2020 release for games that will head to retail, features like “Multi-process games in Game Core” will not arrive until after launch."

The Latest on Xbox Game Core - Thurrott.com

That's one example.

Didn't they up the clocks of the Xbox One's CPU with an update?

Or was that fake?

You guys miss the part where XSX has already been confirmed to have "full RDNA2" GPU...

I think people suggesting that the sub-2GHz clock is related with the fact that the chip is also being used for xCloud are onto something, most of us completely forgot about it.

Let's relax a bit, guys. The console just came out and MS's tools are not up to date yet. It's way too early to start changing clock speeds.

Let them improve the tools and let devs get used to RDNA 2. It's not gonna happen overnight.

I feel like they learned with the 360. It felt like a more purposeful design.

They have always tried to make a powerful PC you can just use while on the couch. The OG Xbox was basically that. Same with the Xbox 360.

I think the big disconnect is that one is ready with its RT implementation because they are using a non-DirectX API. On top of that, their development kits are much further along, which doesn't make sense when you consider that Xbox was showing their system off last December.

So in the first half of 2021 we have first party titles by Sony that show off ray traced effects and instant loading, while we will still be waiting to see what first party titles from MS look like with ray tracing and the advancements from the DirectStorage update.

I know that even DF are saying that right now, the Xbox tools that devs use are the problem. Once those get improved, we should all start seeing improvements. I think most launch games used the Xbox One tools because the Series tools just aren't finished.

Is this about PS5 vs XSX?

The 2080 Ti has a lower clock speed and a bigger core count. And it destroys the 2080, which has fewer cores and higher clocks.

Nobody is missing that... The Series X chip does indeed support the full RDNA 2 feature set.

Yes. But the innards they used were more friendly to PC developers, hence the better multiplats on 360 during that gen. And the 360 was a great option for a lot of PC gamers to play online when, hardware-wise, DirectX was figuring its shit out with the 8800 line.

I agree. But therein lies the issue. They are behind, and it's impacted more by covid since they are waiting on the Windows team that writes the API. The DirectX 12 Ultimate rewrite is still not 100% finished or fully tested. DirectStorage for PC is not even going to be done till closer to the end of summer 2021. And that impacts any optimization for storage solutions on Xbox Series. Right now they have raw speed being used, without the DirectStorage optimizations that would improve install and load times and possibly allow faster caching.

Not sure if you've considered the power side - it's just going to be a fact that clocking 52 CUs as high as 36 would come with significantly more power draw, and Sony had to do the whole variable clock sharing deal to get here.

Interestingly, both get to about the same power draw figures in the end. I don't think it's being conservative so much as two different approaches to get to a similar spot.

300W is the entire SX power supply - efficiency, provisioning for capacitor aging, etc. It draws 150-200W, same as the PS5 does. 1/3rd to 50% more power draw is no trivial thing in consoles already pushing the size boundaries.

You know what the other name for a "workaround" is? A hack. Hacks in code mean problems, usually performance ones. If the workaround worked as a final solution, then it would be the final solution.

It is what it is. Consoles never take full advantage of the hardware at launch regardless.

We have Sony first party titles that show ray traced effects and instant loading *right now*. Despite what people have tried to say, the two launches are not equivalent, Sony has next-gen titles that show off what the system can do, right at the start, even if they are down-ported to older consoles. Like I said before, instead of hoping for secret sauce, people should just support the system that is doing the stuff you want.

The PS5 is, and it's seen in a majority of their titles; even Little Big Planet shows some of this.

What's the narrative going to be like when Ratchet, GT7, and Returnal release in the next 4-6 months?

Is this your first console? Welcome to gaming.

This literally happens every generation. Games look much better down the road than they do at launch. Literally happens every single time.

I get that. But that also has to do with where the tools are. And that has more merit when you're coming to a new architecture. The architecture is not new, it's the same as last gen. So Sony's developers don't have to redo their tools. Their current tools and engines scale. And Sony's optimizations being further along shows that they seem far ahead. We're not getting a Ratchet and Clank that looks a little better and cleaner. We are literally getting a native 4K looking image from a game that looks better than most CGI films. That's pretty crazy, given they have been making the game since 2019.

That tells me tool and development maturity is pretty much already there. Sure, maybe in ray tracing and other techniques you might see more, like ray traced shadows. But in terms of getting more out of the system, we are seeing a giant jump. Microsoft hasn't shown that, and it shows how far behind they are.

Sony seems to have a more streamlined development environment that was optimized much earlier on and uses existing tools/engines.

I expect things to improve for sure, but I THINK, seeing what's coming and what is released, it's obvious Sony has no software development issues in their kits like MS currently has. And that is showing.

Oh yeah, I agree. Not sure why MS is so behind on software, but considering the PS5 was supposedly going to launch in 2019, it would explain why they are ahead right now. Xbox was always waiting for the full RDNA 2 feature set, so it's hard to build tools if you don't even have the full feature set yet.

Feature set? Like what? If anything they are waiting on DirectX, which they make. RDNA 2 features are all there in both consoles; the difference is in the individual engines that need to be updated. The feature set is there; it's the API that Microsoft uses, along with their software instructions, that is behind. RDNA 2 features have no bearing on software development on a hardware level. Microsoft supposedly has had final hardware since early last year. When those get shipped, the features are there hardware-wise. The software for Windows drivers is the issue. But Sony uses their own API, so right off the bat they are using RDNA 2 to its fullest hardware-wise.

factschronicle.com

That's true, but 25% more IPC doesn't mean that the new architecture can't gain more. IPC only says that a set of calculations needs fewer clocks. But the other gains are where GCN had a real problem (IPC wasn't really a problem): saturating the CUs. The CUs were never really the problem, but getting the work to the CUs was. This changed with RDNA, and it is now much easier to saturate the CUs and therefore much easier to get all the performance out of them.

That is not a performance comparison but IPC comparison... optimization takes no role in IPC comparisons.

From HotChips.

"09:21PM EDT - CUs have 25% better perf/clock compared to last gen"

www.anandtech.com/show/15994/hot-chips-2020-live-blog-microsoft-xbox-series-x-system-architecture-600pm-pt

That is RDNA IPC over GCN.
