Gaiff

SBI’s Resident Gaslighter

The PS5 matches the 3080 in Rift Apart, though tbf it's the only game I've seen where it does; usually the 3070 is the best case.

1. It doesn't. In Fidelity Mode, the 3080 averages 60fps and the PS5 48fps. That's a 25% performance advantage for the 3080. In Performance Mode, the 3080 is 17% faster.

2. NxGamer argues that these differences aren't due to the GPU but to the PS5's better IO, which prevents the massive temporary drops seen on the 3080. The 3080 has much higher highs but also much lower lows. Nixxes has also issued several patches to address the streaming issues.

3. The DRS in this game doesn't work the same on PC vs console. That's coming straight from Nixxes:

DRS on PS5 functions differently than it does on PC. DRS on PS5 works from a list of preconfigured resolutions to choose from, with limits on the top and bottom res and coarse adjustments (aka 1440p down to 1296p). PC DRS is free-floating and fine-grained. If you turn on IGTI with DRS set to 60, it will essentially max your GPU at the highest and most fine-grained res possible.
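A rough sketch of the difference Nixxes is describing, to make it concrete. This is not Nixxes' or Insomniac's actual logic. Everything here is hypothetical except the 1440p/1296p pair from the quote: the function names, the 720p to 2160p clamp, and the assumption that GPU frame time scales with pixel count are all mine.

```python
TARGET_MS = 1000 / 60  # frame-time budget for a 60 fps target


def estimated_frame_ms(height, ms_at_1440):
    """Assumption: frame time scales with pixel count (height squared at a fixed aspect ratio)."""
    return ms_at_1440 * (height / 1440) ** 2


def stepped_drs(ms_at_1440, steps=(1440, 1296)):
    """Console-style DRS: pick the highest preset that fits the budget, else the lowest preset."""
    for height in sorted(steps, reverse=True):
        if estimated_frame_ms(height, ms_at_1440) <= TARGET_MS:
            return height
    return min(steps)


def free_drs(ms_at_1440, lo=720, hi=2160):
    """PC-style DRS: solve for the largest height that fits the budget, then clamp (hypothetical limits)."""
    height = 1440 * (TARGET_MS / ms_at_1440) ** 0.5
    return int(max(lo, min(hi, height)))


if __name__ == "__main__":
    # A scene that would take 18 ms per frame at 1440p, i.e. slightly over budget.
    print("stepped:", stepped_drs(18.0))
    print("free-floating:", free_drs(18.0))
```

With the same GPU load, the stepped version has to fall all the way to the next preset, while the free-floating version lands somewhere in between. That would be consistent with PC resolving at a higher resolution in the same scene.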

And we can actually see that in NxGamer's own footage. Not only is the image obviously blurrier on PS5, but the plants are a dead giveaway that PC operates at a higher resolution.
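For point 1, the Fidelity Mode percentage can be checked with a couple of lines, using only the 60fps and 48fps averages quoted above. The helper names are just for illustration. Note that "the 3080 is 25% faster" and "the PS5 is 20% slower" describe the same gap from different baselines.

```python
def advantage_pct(fast_fps, slow_fps):
    """How much faster the faster GPU is, relative to the slower one."""
    return (fast_fps / slow_fps - 1) * 100


def deficit_pct(fast_fps, slow_fps):
    """How much slower the slower GPU is, relative to the faster one."""
    return (1 - slow_fps / fast_fps) * 100


if __name__ == "__main__":
    # Fidelity Mode averages from the post: 3080 at 60fps, PS5 at 48fps.
    print(f"3080 advantage: {advantage_pct(60, 48):.0f}%")
    print(f"PS5 deficit: {deficit_pct(60, 48):.0f}%")
```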

Here?

I also never said the PS5 performed like a 4080. Literally, where did I say that?

Based on how The Last of Us, for example, runs worse on a 4080 than on a PS5, is it really that unrealistic for the Pro to outperform the 4080 there? Same with Ratchet in pure raster.

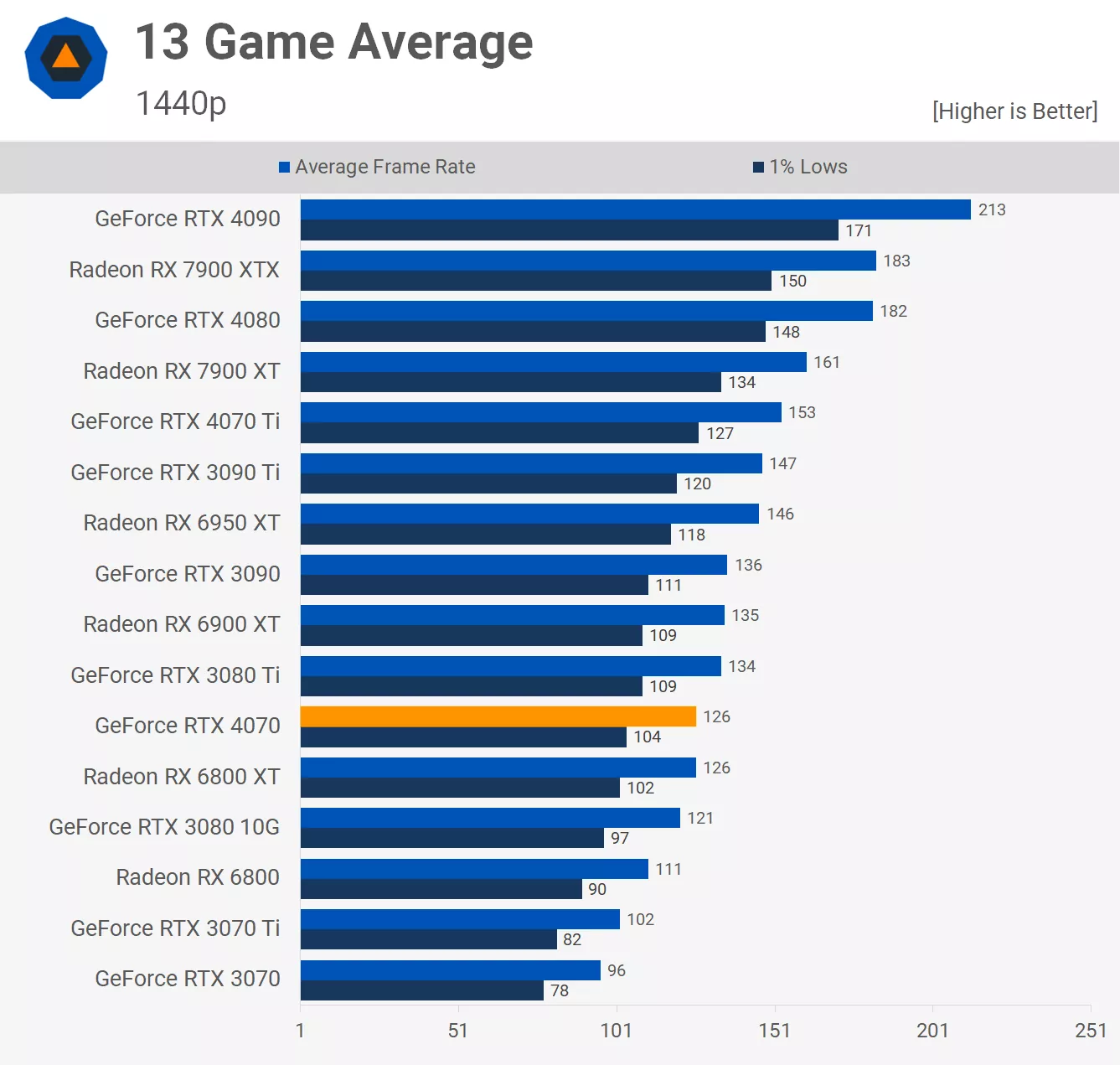

The 3080 is something like 5% faster than the 4070. There isn't a single game where the PS5 performs like the 4070, and you haven't shown one. The best it can do is be in the league of a 2080 Ti/3070. The 3080/4070 are way out of reach.

I said there are several games, like The Last of Us, Rift Apart, and some Ubisoft games, where it already clearly outperforms the 4060 and matches a 4070, so why would a Pro matching or, dare I say, edging the 4080 be some crazy idea? This isn't for every game, for reference.

I think the PS5's relative GPU power is even lower than a 2080 Ti's, as it gives very similar native 4K performance to the 2070S in benchmarks around the web. The 2080 Ti benchmarks had it over 60 fps at 4K most of the time, at least in 2020. Since we know the PS5 can do at least 40 fps (mode) at 4K but can't do 60 at this resolution, it probably hovers in the 45 fps range, tops. If the PS5 Pro is a 2x increase, it will be comparable to a 4070 Super (TBD), I suppose.

I'm talking about PS5 exclusives that run particularly well on PS5. Generally, the 2080 Ti is quite a bit faster than the PS5. And yeah, agreed with the 4070/4070S-ish tier of performance.

It has FSR, which usually performs within 5% of DLSS. Their performance is typically identical. Same for XeSS on Intel hardware. No upscaling solution is much more performant than the others if they're using the same internal resolution.

Base PS5 has no DLSS equivalent.

Without knowing what Sony has in store, it's impossible to tell. Based on how DLSS, FSR, and XeSS perform though, I would expect the same performance as those 3 given the same resolution but with IQ closer to DLSS and XeSS than FSR. Better image stability, better resolve of fine details, more temporal stability, etc.

With PS5 Pro, if you were to get it to produce the same resolution and framerate as PS5 Pro, how much LESS could you get away with in terms of TFLOP utilization?