Eurogamer: Chat with Wii U developer about the general performance of the system

- Thread starter Vic

- Start date

Anyway, I'm actually pretty happy with a current gen system that has a bit better gpu. Hell, just finally going HD is a big improvement over the Wii. I'd say the best of current gen visuals will age a lot better than the last gen games, though some of those games still look good when ported into HD collections. I also don't see Sony and Microsoft making huge leaps in the visuals dept. while also hitting a good price point for the modern economy.

I sort of am, but I'll probably hold final judgement until after I see the next XBOX. If that thing is a super, super jump over what we have and the Wii U does not get down ports then I'll possibly swap systems at that point.

For now though, it's Wii U ordered and I'm looking forward to seeing how it all works and how the tablet browser etc goes.

Of course if Nintendo reveal Zelda before the 720 is released, they'll win me over

Well, if the Wii U gets a good number of exclusives I doubt it'll be a big problem. But then I AM crazy and like to own each of the relevant consoles, plus I'll reliably want Nintendo games anyway.

I sort of am, but I'll probably hold final judgement until after I see the next XBOX. If that thing is a super, super jump over what we have and the Wii U does not get down ports then I'll possibly swap systems at that point.

For now though, it's Wii U ordered and I'm looking forward to seeing how it all works and how the tablet browser etc goes.

longdi

Banned

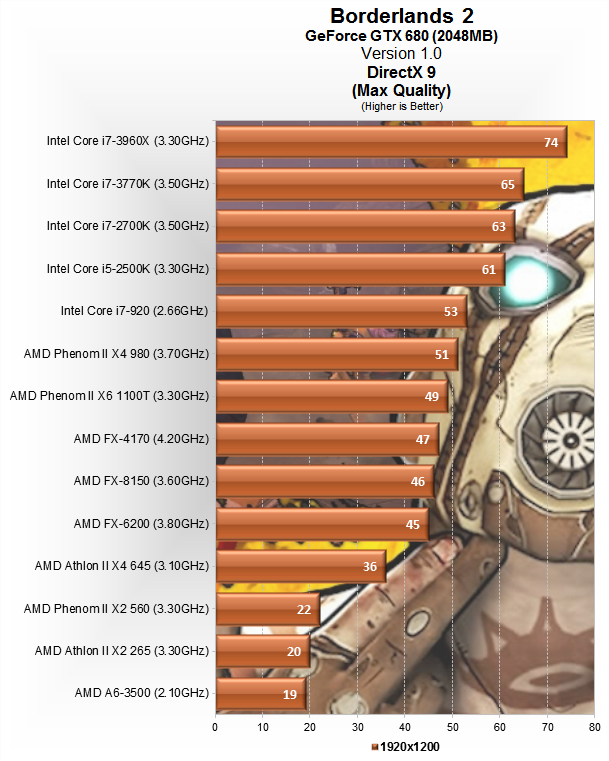

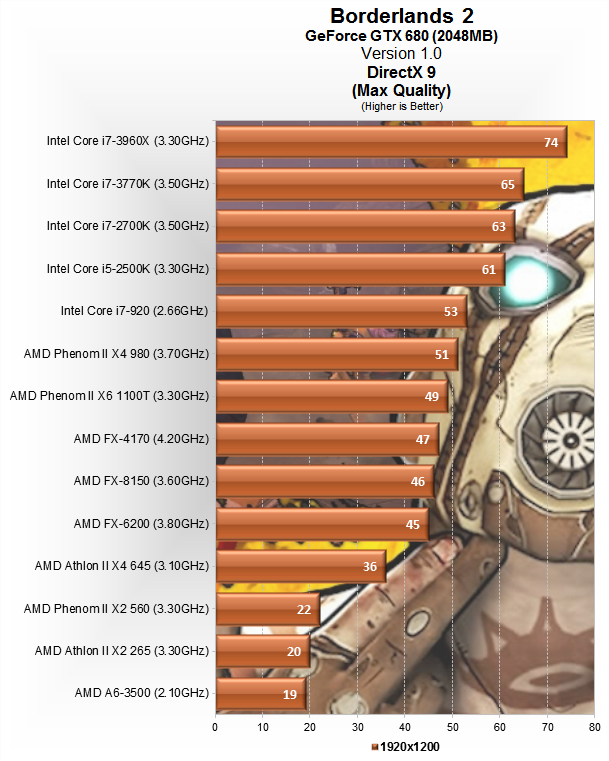

Ouch at this:

A slow/weak cpu will gimp (bottleneck) a good GPU no matter how powerful it is.

Right!

Wii U sounds like what i have in my old laptop only with a much weaker cpu (i7 2630qm + 6750m)

JoshuaJSlone

Member

Haha. I know the values in there are meaningless, but it could've been worse; my alternate idea for visually presenting ratio differences was scaled pictures of superheroes with different powers.

Anyone else find that the meaningless bar graph makes this post hilarious? :-D

Smurfman256

Member

Wii U runs an out-of-order CPU with lower clockspeed than the in-order 360/PS3 chips. An out-of-order CPU will beat the piss out of an in-order CPU. However, code built for the 360/PS3 CPU won't port straight on unless it is optimised.

This is what I have been trying to tell people. The CPU is fine; the devs just think that they can port Xbox 360 code straight onto Espresso, get half-way decent results (which happens to be the case) and just release the game like that. Wait until 2014, then we shall see how the CPU actually stacks up.

An out-of-order cpu doesn't need special code to get good results. The in-order cpu's on 360 and PS3 need cleaner code to run efficiently and those optimizations are completely transferable and beneficial to an out-of-order cpu like the WiiU's.

nordique

Member

I still don't buy the "weaker" CPU claim

I think, and honestly believe, that it is a misunderstanding of context regarding a lower clock (and thus reporters unknowingly stating it's weaker, when the clock is lower and thus "slower" but not necessarily weaker)

More so, I think the fact that the architecture (OoOE) is completely different from the 360/PS3 CPUs, coupled with the GPGPU functionality (likely the idea, by design, is to take certain tasks away from traditional CPU code), points to a CPU that is very underappreciated

I do not doubt it has a slower clock, but I feel in a few years developers will be saying the opposite, such as "oh the CPU wasn't as weak as we thought...we just had to get used to it..."

it's a new platform

nordique

Member

I sort of am, but I'll probably hold final judgement until after I see the next XBOX. If that thing is a super, super jump over what we have and the Wii U does not get down ports then I'll possibly swap systems at that point.

For now though, it's Wii U ordered and I'm looking forward to seeing how it all works and how the tablet browser etc goes.

Of course if Nintendo reveal Zelda before the 720 is released, they'll win me over

And if they release it after, you know you're gonna hold on to your Wii U

This is what I have been trying to tell people. The CPU is fine; the devs just think that they can port Xbox 360 code straight onto Espresso, get half-way decent results (which happens to be the case) and just release the game like that. Wait until 2014, then we shall see how the CPU actually stacks up.

err....Code that has been optimised for in-order CPU's should run the same or better on an OOE CPU.

Stop looking at out-of-order as an architecture and start looking at it as an optimization. Think of it as you would a feature on a gpu. It isn't a new architecture that needs special care to get optimal results out of it.

I still don't buy the "weaker" CPU claim

Everything we've seen, with the gpu helping with cpu tasks, audio being handled elsewhere etc., points to the cpu being weaker and Nintendo planning accordingly to compensate for it.

amstradcpc

Member

An out-of-order cpu doesn't need special code to get good results. The in-order cpu's on 360 and PS3 need cleaner code to run efficiently and those optimizations are completely transferable and beneficial to an out-of-order cpu like the WiiU's.

Exactly. An out of order cpu is only more efficient than an in order cpu if the code is not correctly sorted. But PS360 code is very efficiently sorted to iron out the in order nature of their cpus, so the Wii U cpu won't run it better because of being out of order.

Panajev2001a

GAF's Pleasant Genius

Exactly. An out of order cpu is only more efficient than an in order cpu if the code is not correctly sorted. But PS360 code is very efficiently sorted to iron out the in order nature of their cpus, so the Wii U cpu won't run it better because of being out of order.

If the compiler and the original coder did a perfect job and if the dataset of the problem at hand fits completely inside the L1 cache (which is the kind of latency OOOE cores are generally designed to hide without stalling the CPU: finding enough work to do to cover L1 data cache accesses, but not L1 cache misses for example), then yes there might be little work for the CPU to do at runtime.

Still,

1.) we still do not have such perfect compilers and programmers.

2.) some data is not fully known by the compiler at compile time and the CPU might have much more information at runtime than the compiler could offline (ok, there is a huge debate on this too). With multiple threads being active and the possibility to feed the CPU's execution units from multiple threads at the same time, the amount of work the CPU can gather at runtime is potentially quite high.

3.) awfully written code will not run much faster with an OOOE core than an in-order one (code constantly thrashing the cache and requiring main RAM accesses goes way beyond the amount of work the OOOE side can find to cover data dependencies).

4.) there are other parts of the CPU you should look at that influence performance, but we have no data on those (are they multi-threaded? how is the cache hierarchy? how is their branch predictor).

It might very well be that the WiiU CPU is overall slower than what both Xenon and CELL can deliver; both of those are very big chips with a considerably higher power consumption target. Wii U is not beyond the laws of physics. It is designed to consume a very low amount of power and require simpler heat dissipation technology than those other two monsters. Also, it is designed to be profitable while packing a controller that should be more expensive than the DS3 or Xbox 360 pad are (although I do not believe its manufacturing cost is anywhere near its JPN price). Also, Nintendo will want to make a profit on each WiiU sold day 1, which means that the actual budget for the WiiU console is even lower.

With this in mind, it is fully possible that their overall design goal/system balance considered GPU, RAM, faster disc drive, and cheaper and secure HW BC to be more important and that the CPU is indeed weaker if measured in isolation. Carefully redesigning their games and the technology behind them, some developers might find some area that could be optimized more (but that would run fast enough on Xbox 360 cores for example...), compilers might improve over time, they might shift more work onto the GPU (which would then have less headroom purely for graphics calculations).

I think it is reasonable to expect games which push CPU-side calculations very hard on Xbox 360 and PS3 to be ported to WiiU, but maybe not to push graphics too much beyond those systems (besides higher resolution textures and better AA).

Stop looking at out-of-order as an architecture and start looking at it as an optimization.

It is an architecture: instruction sets, processor design, logic, all vastly different from IoE.

An optimisation would be SMT

Think of it as you would a feature on a gpu. It isn't a new architecture that needs special care to get optimal results out of it.

Running in order routines on an out of order CPU will lead to a lot of wasted clock cycles. You need to code in a distinctly different manner for OoE than you do for in order. To say OoE is not an architecture is laughable, the processor is fundamentally so different it's not funny.

SMT on the other hand, code the same way, just get a few extra threads to feed the CPU. Again highlighting it's simply an extension.

Everything we've seen, with the gpu helping with cpu tasks, audio being handled elsewhere etc., points to the cpu being weaker and Nintendo planning accordingly to compensate for it.

Please. You sound like someone who has no idea of programming or machine code, let alone hardware.

Dedicated DSP makes perfect sense. Especially when you see Xbox 360 game audio regularly taking up 1/3rd or more of an entire SMT, then I/O consuming near that again. Absolutely stupid having this 3.2GHz CPU core spending time and tying up resources processing something a 200MHz DSP and I/O controller could do just as well and without half the wattage.

No doubt the Wii U won't be a powerhouse, but really don't put much faith in the comments of developers who openly admit they're porting from the HD Twins, as well as working on a limited time frame with architecture they've had zero exposure to before. That to me doesn't scream 'weak cpu', but rather tight ass, half assed, and cheap effort. You get out what you put in.

err....Code that has been optimised for in-order CPU's should run the same or better on an OOE CPU.

Point of discussion: What about code that ISN'T fully and completely optimized?

walking fiend

Member

*Posts a random benchmark to prove that a particular case can be generalized into how Wii U will perform.*

Right!

Wii U sounds like what i have in my old laptop only with a much weaker cpu (i7 2630qm + 6750m)

CPU not important at all confirmed:

It is an architecture: instruction sets, processor design, logic, all vastly different from IoE.

Don't talk about something you know nothing about!

A compiler might be designed to make code that tries to minimize stalling for an IOE CPU, but that is not likely to harm performance on an OoOE CPU and the big point some "people" miss is THEY ARE NOT USING THE SAME COMPILERS FOR A DIFFERENT CONSOLE AND THUS WILL NOT EVEN BE USING THE SAME BINARY CODE THAT HAS BEEN OPTIMIZED FOR IOE!

OoOE means that a CPU can handle code that is non optimal better by working around stalls in the code it is being fed, not the other way around!!!!

SMT is also not just "simple"!

The fact that you said that xbox 360 "game audio regularly taking up 1/3rd or more of an entire SMT"

Shows that you can not even use terms like SMT correctly!

I am sure you are clueless when you claim that anything to do with I/O takes up large amounts of time and needs to be offloaded, without understanding that just about all systems have chips that handle I/O; on PCs it is commonly known as a SOUTHBRIDGE!

Most consoles have them integrated on the same die as the GPU!

Panajev2001a

GAF's Pleasant Genius

It is an architecture: instruction sets, processor design, logic, all vastly different from IoE.

An optimisation would be SMT

How is the instruction set different? Were there huge fundamental changes in the x86 ISA in the Pentium MMX to Pentium Pro transition due to the shift to OOOE (we agree that the processor design changes)?

Instruction fetch and decode is usually still in-order as the retire phase is, what we are allowing to execute out of program order is essentially the issue/dispatch and execute phase.

SMT is not just an optimization without side-effects, especially on the HW side. In order for multiple threads to share the same execution HW you need to increase the resources available (not execution units, but what you need to distinguish each task's context) according to the number of HW threads you want to support, and you need to waste some space and bandwidth to tag all your instructions with a threadId to track them. SMT on Xenon cores requires duplication of the VMX-128 register set for example.

Sure, at the end you get better utilization for like 5% of die area penalty per core, but on multi-core set-ups it is not such a laughable amount (it is a tradeoff you have to take into account).

Running in order routines on an out of order CPU will lead to a lot of wasted clock cycles. You need to code in a distinctly different manner for OoE than you do for in order. To say OoE is not an architecture is laughable, the processor is fundamentally so different it's not funny.

I would not say that the best coding practices should change THAT much. In both kind of cores you would do best to avoid excessive cache misses (on an OOOE core you usually want to do an even better job at keeping data inside the L1 cache as much as possible as that will allow you to reap the most benefits of OOOE, but I am not sure why you would not want to do the same for in order cores which would just sit there idling).

For an in order core you want to pay more attention to scheduling (you or the compiler) as many math/logic ops as possible between a load and the instruction depending on it, so that the CPU does not stall. OOOE goes ahead in the instruction stream (one of the various mechanisms to do that) and finds non-dependent instructions that are ready to issue. Some cores evolved to re-organize loads and stores in the instruction stream to better fit their needs, and to fuse instructions in the decoding phase and in the issue phase.
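To make the scheduling point a bit more concrete, here is a toy model in Python. Everything in it is an assumption made up for illustration (a single-issue core, a 4-entry window, a 4-cycle "L1 hit" load, a 200-cycle "cache miss" load, the `Instr`, `run` and `make_program` names); it is not a model of Espresso, Xenon or CELL. It only sketches why hand-scheduled code loses nothing on an OOOE core, why naive code gains from it, and why a long miss swamps both.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    name: str
    deps: tuple      # names of instructions whose results this one needs
    latency: int     # cycles until the result becomes available


def run(program, out_of_order, window=4):
    """Cycles until every result is available on a toy single-issue core."""
    ready_at = {}            # name -> cycle at which its result is available
    pending = list(program)
    cycle = 0
    while pending:
        # In-order: only the oldest instruction may issue.
        # Out-of-order: the oldest *ready* instruction inside the window may issue.
        candidates = pending[:window] if out_of_order else pending[:1]
        for instr in candidates:
            if all(d in ready_at and ready_at[d] <= cycle for d in instr.deps):
                ready_at[instr.name] = cycle + instr.latency
                pending.remove(instr)
                break
        cycle += 1
    return max(ready_at.values())


def make_program(load_latency, hand_scheduled):
    """One load, one dependent op, three independent adds (hypothetical workload)."""
    load = Instr("load_a", (), load_latency)
    use = Instr("use_a", ("load_a",), 1)
    adds = [Instr(f"add_{i}", (), 1) for i in range(1, 4)]
    # Hand-scheduled code puts the independent adds between the load and its use.
    return [load, *adds, use] if hand_scheduled else [load, use, *adds]


naive_hit = make_program(load_latency=4, hand_scheduled=False)
tuned_hit = make_program(load_latency=4, hand_scheduled=True)
naive_miss = make_program(load_latency=200, hand_scheduled=False)

results = {
    "in-order, naive": run(naive_hit, out_of_order=False),
    "in-order, hand-scheduled": run(tuned_hit, out_of_order=False),
    "OOOE, naive": run(naive_hit, out_of_order=True),
    "OOOE, hand-scheduled": run(tuned_hit, out_of_order=True),
    "in-order, naive, miss": run(naive_miss, out_of_order=False),
    "OOOE, naive, miss": run(naive_miss, out_of_order=True),
}
for label, cycles in results.items():
    print(f"{label}: {cycles} cycles")
```

In this toy model the in-order core needs 8 cycles for the naive ordering and 5 for the hand-scheduled one, while the OOOE core needs 5 for both. With the 200-cycle load the two cores end up within a few cycles of each other, since there is simply not enough independent work to cover the miss.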

Where OOOE can really mess up, and the reason why some OOOE cores do not do it there, is floating point execution in which re-ordering math ops could really mess things up if you care about accuracy.
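As a small aside on why order matters for floating point at all: addition on floats is not associative, so evaluating the same sum in a different order can give a different result. The Python sketch below only shows that arithmetic fact with made-up values. It says nothing about what any particular core does, and hardware that merely picks independent ops to issue early leaves each op's operands unchanged; the risk is in anything that regroups the math itself.

```python
# Same three values, two groupings.
left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)
print(left == right)

# A larger magnitude gap makes the effect obvious: the 1.0 is absorbed
# when it is added to 1e16 first, and survives when it is added last.
lost = (1e16 + 1.0) - 1e16
kept = (1e16 - 1e16) + 1.0
print(lost, kept)
```

This is the reason compilers normally refuse to regroup floating point math unless you explicitly opt in.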

The "Golden Era" of programming, think about x86 on the desktop, was essentially named that way because it was an era in which your code would essentially get faster as time went on because CPU's were getting faster and faster each year... the in order to out of order transition occurred in that "period" as well.

Should run much better on the OoOE CPU, which is why Cell and Xenon would be considered crappy if they were used for regular computer use. I guess they have a bunch of potential power, but it'd be harder to utilize that power.

Point of discussion: What about code that ISN'T fully and completely optimized?

The problem with the whole OoOE CPU always being faster argument is that you can't just ignore the raw CPU throughput. An equivalent (clock speed, features, etc) OoOE CPU will be faster, but as far as we know these aren't equivalent CPUs. Like whatever code might run twice as fast per clock on whatever OoOE CPU, but if the in order CPU is clocked three times faster it'll be a net loss in performance.
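Putting the "twice per clock, three times the clock" example into numbers, as a rough Python sketch. The values are the hypothetical ones from the paragraph above, not measured figures for any console, and `relative_throughput` is just an illustrative helper.

```python
def relative_throughput(work_per_clock: float, clock_ghz: float) -> float:
    """Work per second in arbitrary units: per-clock work times clock rate."""
    return work_per_clock * clock_ghz


# Hypothetical: the OoOE core does 2x the work per clock,
# but the in-order core is clocked 3x higher.
in_order = relative_throughput(work_per_clock=1.0, clock_ghz=3.0)
out_of_order = relative_throughput(work_per_clock=2.0, clock_ghz=1.0)

ratio = out_of_order / in_order
print(f"OoOE / in-order throughput: {ratio:.2f}")
```

With those numbers the OoOE core ends up at about two thirds of the in-order core's throughput, so per-clock efficiency alone doesn't settle it.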

Otherwise what we might be seeing in this particular case, is if code is tailored specifically to the in order CPU, then the OoOE CPU might not be able to eke out the usual gains. Like if the software itself is already designed around avoiding the bottlenecks/weaknesses of the in order CPU, running on an OoOE CPU wouldn't necessarily be a huge automatic win.

I didn't say the architecture wasn't different, I said to stop looking at it as some major difference like Xenon compared to Cell as far as running code is concerned. The rest of your post shows why a little knowledge can be a dangerous thing. You know just enough to sound like you actually know what you're talking about.

It is an architecture: instruction sets, processor design, logic, all vastly different from IoE.

m.i.s.

Banned

it goes back to "Nintendo, build a bigger console!". But they believe that delivering a console with such a low profile is a worthy goal in itself.

...

the power draw for the entire system will be between 1/3rd and 1/6th that of current gen consoles... Even the new PS3 Slim-slim version will be drawing 190 watts on average compared to Wii U's 45.

Nintendo's conception of what a videogames console is supposed to be all about is quite different from MS and Sony. For Nintendo, a console should be small and unobtrusive, loading times on games minimal (ie games should be almost immediately accessible or as accessible as tech and game design allows).

Many purchasing decisions are also made by women, particularly where the console is to be situated in the main family room. "Big" consoles like PS3 and 360 are off-putting to this [and a subset of the casual] demographic.

but that is not likely to harm performance on an OoOE CPU

It does when you're running process priorities and work orders geared for IoE. So too when you're porting code that has been created around the small L1 and L2 cache sizes of the Xenon and Cell processors.

THEY ARE NOT USING THE SAME COMPILERS FOR A DIFFERENT CONSOLE AND THUS WILL NOT EVEN BE USING THE SAME BINARY CODE THAT HAS BEEN OPTIMIZED FOR IOE!

CAPSLOCK! and yes obvious.

OoOE means that a CPU can handle code that is non optimal better by working around stalls in the code it is being fed, not the other way around!!!!

OoE CPUs can assess all the instructions in their cache, identify ones for which they already have the instruction sets loaded, and also assess whether an instruction is locked by memory, dependent on another process, or blocked by another piece of hardware. An OoE CPU can also assess workloads and identify the most optimal manner to process them. If a high priority instruction is sent to the CPU once it's already into a clock cycle, OoE CPUs have the ability to cease processing their current low priority instruction, store the work they've done on this low priority task into cache, start processing the high priority instruction, then pick up and resume the low priority task.

It's not as simple as you make it out to be

SMT is also not just "simple"!

Compared to OoE, it is.

The fact that you said that xbox 360 "game audio regularly taking up 1/3rd or more of an entire SMT"

Shows that you can not even use terms like SMT correctly!

Single thread. Happy?

Either way the point is clear.

I am sure you are clueless when you claim that anything to do with I/O takes up large amounts of time and needs to be offloaded, without understanding that just about all systems have chips that handle I/O; on PCs it is commonly known as a SOUTHBRIDGE!

Most consoles have them integrated on the same die as the GPU!

Both the Xbox 360 and even the PS3 processors do some I/O and memory controlling tasks. While both consoles feature bus controllers, it's almost the equivalent of Intel's ICH RAID. Yeah it's RAID, but it's also utilizing the cpu

Also modern AMD and Intel processors tend to have integrated memory controllers, PCIe lanes etc, negating the need for north bridges. South bridges are typically used for HDDs, USB, and other lower speed components.

SquiddyCracker

Banned

Wuu specs so far:

CPU: 3-core CPU, with each core being an upgraded Broadway CPU

GPU: AMD Radeon e6760 equivalent or better graphic processing unit

Memory: 32MB eDRAM (accessible by both GPU/CPU?), and 2GB of DDR3 general ram (of which 1GB is currently accessible to developers)

Misc: ARM-chip for OS(?), DSP for sound.

All in all, it looks like a 3-4x upgrade over the current generation.
