16 bit computation is generally not very useful outside of a small subset of problems.

The precision loss is just too great. For example, the calculation (10.0 + 1.0 / 500.0) cannot be computed accurately with 16bit floating point precision: the result will be 10.0. Near 10.0, adjacent 16bit values are 1/128 (about 0.0078) apart, so the 0.002 is simply rounded away. Now imagine performing 100 such calculations one after the other: the accumulated precision loss can become quite dramatic.
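The rounding described above is easy to reproduce. Here is a minimal Python sketch. It assumes that emulating fp16 by rounding every input and result through the standard `struct` module's `'e'` (IEEE 754 half precision) format is close enough to what the hardware does; real GPUs may differ in details such as denormal handling. The helper names are hypothetical.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    # struct's 'e' format packs binary16 (round to nearest even); unpacking returns it as a float
    return struct.unpack('<e', struct.pack('<e', x))[0]

def add_fp16(a: float, b: float) -> float:
    """Emulate a 16bit add: inputs and result are all rounded to half precision."""
    return to_fp16(to_fp16(a) + to_fp16(b))

# A single add: 10.0 + 1.0 / 500.0
print(add_fp16(10.0, 1.0 / 500.0))  # 10.0, the small term is rounded away

# 100 such adds in a row, compared with a 64bit running total
step = 1.0 / 500.0
acc16 = 10.0
acc64 = 10.0
for _ in range(100):
    acc16 = add_fp16(acc16, step)  # never moves off 10.0
    acc64 += step                  # ends up at about 10.2
print(acc16, acc64)
```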

Precision loss can sometimes be a problem even with 32bit calculations, and 16bit is *dramatically* worse: with 10 mantissa bits instead of 23, its relative precision is about 8,000 times coarser.
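To put a number on that ratio, this sketch measures the gap between 1.0 and the next representable value (machine epsilon) in each format by bumping the bit pattern of 1.0 by one. It uses only Python's `struct` module; the helper name `ulp_at_one` is just for illustration.

```python
import struct

def ulp_at_one(fmt: str) -> float:
    """Gap between 1.0 and the next representable value for struct format 'e' or 'f'."""
    int_fmt = {'e': '<H', 'f': '<I'}[fmt]  # unsigned int of the same width
    # Reinterpret 1.0's bits as an integer, add one, and reinterpret back as a float
    bits = struct.unpack(int_fmt, struct.pack('<' + fmt, 1.0))[0]
    next_up = struct.unpack('<' + fmt, struct.pack(int_fmt, bits + 1))[0]
    return next_up - 1.0

eps16 = ulp_at_one('e')  # 2**-10: binary16 stores 10 mantissa bits
eps32 = ulp_at_one('f')  # 2**-23: binary32 stores 23 mantissa bits
print(eps16 / eps32)     # 8192.0, i.e. 2**13
```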

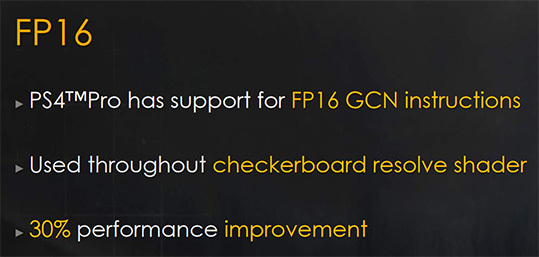

As such, the number of places in a game where 16bit computation can be used is generally quite limited, but it's still a useful tool in those places. You won't see it used for things that require high precision (lighting, animation, etc.), but it may be practical where you are already dealing with low-ish precision data that doesn't require heavy processing (e.g. post processing, antialiasing). From personal experience, even in these obvious cases you have to be very careful.

However, even when you have a valid use case, that doesn't guarantee a performance boost (sometimes it can be slower!). Almost all modern processors (including mobile parts) are limited by bandwidth and latency (either internal or external), as shunting data around draws a lot of power. As such, using 16bit precision to double your theoretical FLOPs typically just leaves you bottlenecked elsewhere in the system.
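A simple roofline model shows why a doubled FLOP peak often buys nothing. The GPU and workload numbers below are hypothetical, picked only to make the arithmetic obvious; real hardware has more limits than just ALU throughput and memory bandwidth.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float, flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by the ALU peak or by memory traffic, whichever is lower."""
    return min(peak_gflops, bandwidth_gbs * flops_per_byte)

# Hypothetical GPU: 4000 GFLOP/s fp32 peak, 200 GB/s memory bandwidth
PEAK_FP32 = 4000.0
PEAK_FP16 = 2 * PEAK_FP32  # the "doubled" 16bit peak
BANDWIDTH = 200.0

# Hypothetical pass doing 10 FLOPs per byte of memory traffic
intensity = 10.0
print(attainable_gflops(PEAK_FP32, BANDWIDTH, intensity))  # 2000.0
print(attainable_gflops(PEAK_FP16, BANDWIDTH, intensity))  # 2000.0, no gain from faster math

# Storing the data as 16bit halves the bytes moved, doubling FLOPs per byte
print(attainable_gflops(PEAK_FP32, BANDWIDTH, 2 * intensity))  # 4000.0
```

The last line hints at where 16bit tends to pay off: fewer bytes moved, rather than faster math.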

But ironically, this is where 16bit can be most useful.

The primary advantage of hardware 16bit computation is that the registers are half the size of 32bit ones, not that the math is twice as fast. So you can sometimes more easily reduce register pressure in a shader by using 16bit computation, which can allow a greater number of wavefronts to be active, which can improve *latency* hiding (assuming that is your bottleneck), and thus sometimes improve performance. Lots of assumptions there, though.
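The register-pressure argument can be sketched as occupancy arithmetic. This assumes GCN-like limits (256 vector registers per SIMD lane, allocated in blocks of 4, at most 10 resident waves per SIMD) and a hypothetical shader whose values can be packed two per 32bit register as fp16. Other architectures and compilers behave differently, so treat it as an illustration of the chain of assumptions, not a predictor.

```python
import math

# Assumed GCN-like limits (illustrative only; check your target's documentation)
VGPRS_PER_LANE = 256  # vector registers available per SIMD lane
VGPR_GRANULE = 4      # registers are allocated in blocks of this size
MAX_WAVES = 10        # hardware cap on resident waves per SIMD

def waves_per_simd(vgprs_used: int) -> int:
    """How many waves can be resident given a shader's per-lane register count."""
    allocated = math.ceil(vgprs_used / VGPR_GRANULE) * VGPR_GRANULE
    return min(MAX_WAVES, VGPRS_PER_LANE // allocated)

def vgprs_with_fp16(total_vgprs: int, packable_values: int) -> int:
    """Registers needed if `packable_values` 32bit values are packed two per register as fp16."""
    return total_vgprs - packable_values // 2

# Hypothetical shader: 96 registers, 40 of which hold values that tolerate fp16
before = waves_per_simd(96)
after = waves_per_simd(vgprs_with_fp16(96, 40))
print(before, after)  # 2 3
```

Going from 2 to 3 waves only matters if the shader was actually latency bound; if it is bandwidth bound, the extra wave changes little.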

Thus, generally, the benefit of 16bit computation is not the doubled theoretical FLOPs, but the smaller data size and the potential for improved latency hiding that results (Cerny actually mentioned this). But as with any modern, highly complex hardware, it's never black and white.

Where 16bit is really useful is as a storage format. 16bit render targets, vertex data, etc. have been supported for a very, very long time (Vita had full support for 16bit render targets with blending, for example). It's great here because you do the calculations in 32bit, then encode the higher-precision result to 16bit or less.
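A minimal sketch of the "compute at high precision, store at 16bit" pattern, using Python's `struct` `'e'` format as the 16bit encoding. Python floats are 64bit, standing in here for the 32bit shader math; the gradient data and helper names are hypothetical.

```python
import struct

def encode_fp16(values):
    """Store already-computed high-precision values as packed IEEE binary16 (2 bytes each)."""
    return struct.pack(f'<{len(values)}e', *values)

def decode_fp16(blob):
    """Read packed binary16 values back as Python floats."""
    return list(struct.unpack(f'<{len(blob) // 2}e', blob))

# Do the math at full precision first (hypothetical 8-step gradient in [0, 1])...
gradient = [i / 7.0 for i in range(8)]
# ...then encode only the finished results for storage
half_blob = encode_fp16(gradient)
full_blob = struct.pack(f'<{len(gradient)}f', *gradient)  # same data as 32bit floats

print(len(half_blob), len(full_blob))  # 16 32
# Rounding error stays within half an fp16 step, at most 2**-12 for values in [0, 1]
max_err = max(abs(a - b) for a, b in zip(gradient, decode_fp16(half_blob)))
print(max_err)
```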

This reduces memory usage, cache thrashing, etc (which is what the Killzone presentation mentioned above was referencing - NOT 16bit calculations).

Basically:

- 16bit storage: awesome, and supported everywhere.
- 16bit computation: very limited use, can often be bottlenecked elsewhere, but in certain limited situations can be very useful.

In no way whatsoever does supporting FP16 double the performance of a machine, but it does provide a useful tool to help micro-optimize certain parts of a game.

TFLOPs are still a fairly useful metric, because they are often balanced against other bottlenecks within the system (it's a waste of silicon to add more ALU if you are 95% latency bound). The catch, however, is that this only applies to 32bit ops. The 16bit FLOP figures vendors commonly quote for mobile parts are best ignored completely (*cough* nvidia *cough*).
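For reference, headline TFLOP figures usually come from a simple formula: units × lanes × 2 FLOPs per fused multiply-add × clock. The sketch below uses a hypothetical GPU and assumes packed fp16 simply doubles the per-lane rate, which is exactly why a 16bit figure comes out at 2x the 32bit one without saying anything about the bottlenecks above.

```python
def peak_tflops(compute_units: int, lanes_per_cu: int, clock_ghz: float, fp16_packed: bool = False) -> float:
    """Theoretical peak: every lane retires one fused multiply-add (2 FLOPs) per clock."""
    flops_per_lane_per_clock = 2       # FMA counts as a multiply plus an add
    if fp16_packed:
        flops_per_lane_per_clock *= 2  # assumed: two fp16 values packed into each 32bit lane
    # GFLOP/s divided by 1000 gives TFLOP/s
    return compute_units * lanes_per_cu * flops_per_lane_per_clock * clock_ghz / 1000.0

# Hypothetical GPU: 32 compute units, 64 lanes each, 1.0 GHz
print(peak_tflops(32, 64, 1.0))                    # 4.096
print(peak_tflops(32, 64, 1.0, fp16_packed=True))  # 8.192
```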