So I've been investigating the claims about Vega's mining efficiency, with the following results:

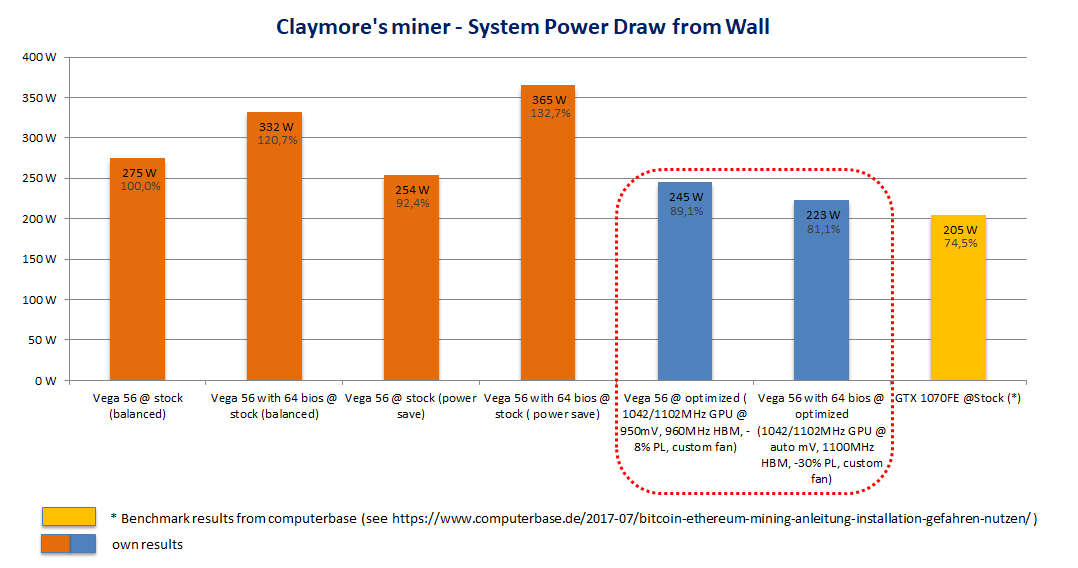

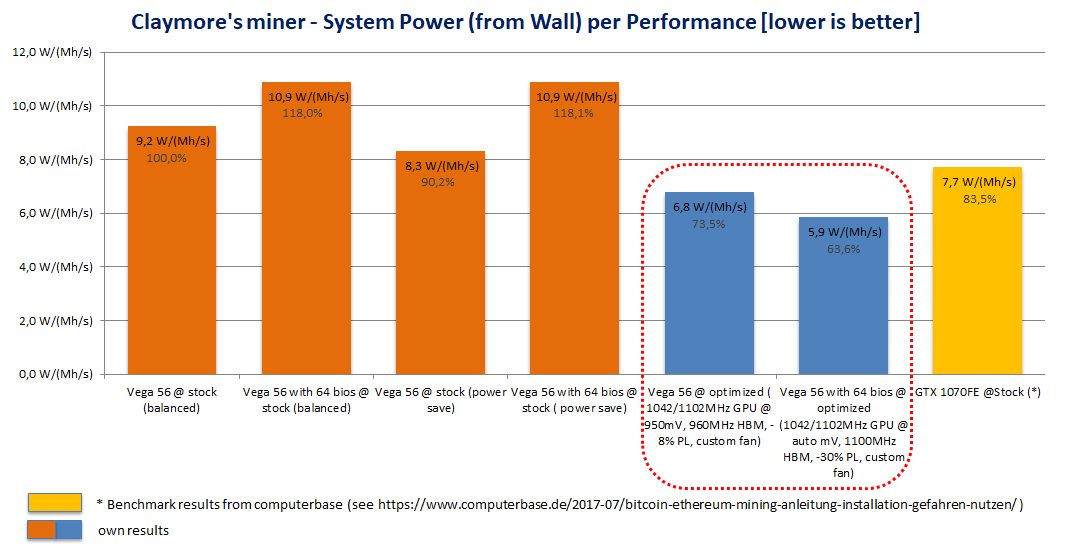

For the power numbers, keep in mind that I'm using a less efficient PSU than computerbase.de. With an equally efficient PSU, the optimized Vega 56 with the Vega 64 BIOS should draw basically the same as the GTX 1070 system. Doing the math, the optimized 56 with the 64 BIOS should draw around 120 W on the DC side of the PSU. Therefore I think the numbers posted by the Reddit user for the 64, with all 64 CUs available and optimized settings, should be accurate.
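To make the "doing the math" step explicit: a wall meter measures AC draw, and only a fraction of that (the PSU's efficiency) reaches the components on the DC side. Converting both systems to DC power (or converting a DC figure back through a different PSU's efficiency) is what makes readings from different PSUs comparable. Below is a minimal sketch of that conversion. The function names are hypothetical, and the efficiency values in the usage example are placeholders, not measurements of my PSU or computerbase.de's.

```python
def dc_power(ac_watts: float, psu_efficiency: float) -> float:
    """DC-side power reaching the components, given wall (AC) draw and PSU efficiency in (0, 1]."""
    if not 0 < psu_efficiency <= 1:
        raise ValueError("psu_efficiency must be in (0, 1]")
    return ac_watts * psu_efficiency


def ac_power(dc_watts: float, psu_efficiency: float) -> float:
    """Wall (AC) draw needed to deliver a given DC load through a PSU of the given efficiency."""
    if not 0 < psu_efficiency <= 1:
        raise ValueError("psu_efficiency must be in (0, 1]")
    return dc_watts / psu_efficiency


if __name__ == "__main__":
    # Placeholder efficiencies: the same 120 W DC load read through two different PSUs.
    for eff in (0.80, 0.90):
        print(f"{eff:.0%} efficient PSU: {ac_power(120.0, eff):.1f} W at the wall")
```

The same DC load shows up as a noticeably different wall reading depending on the PSU, which is why comparing raw wall numbers across different test setups can be misleading.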

Please note: I don't want to promote mining here. Really, I think mining and cryptocurrencies are kinda stupid when you consider their environmental and macroeconomic impact. I just wanted to help clear up some disinformation lurking around the net and here.