For people who don't like or recommend the RTX 4070, it's best to show them the Hardware Unboxed cost-per-frame charts, both at MSRP and at current street prices. It's a pretty decent deal vs. any other Nvidia card and should be considered the go-to card for people with a $600 budget. It has some fair advantages (and some disadvantages too) even vs. the much-prized RX 6950 XT.

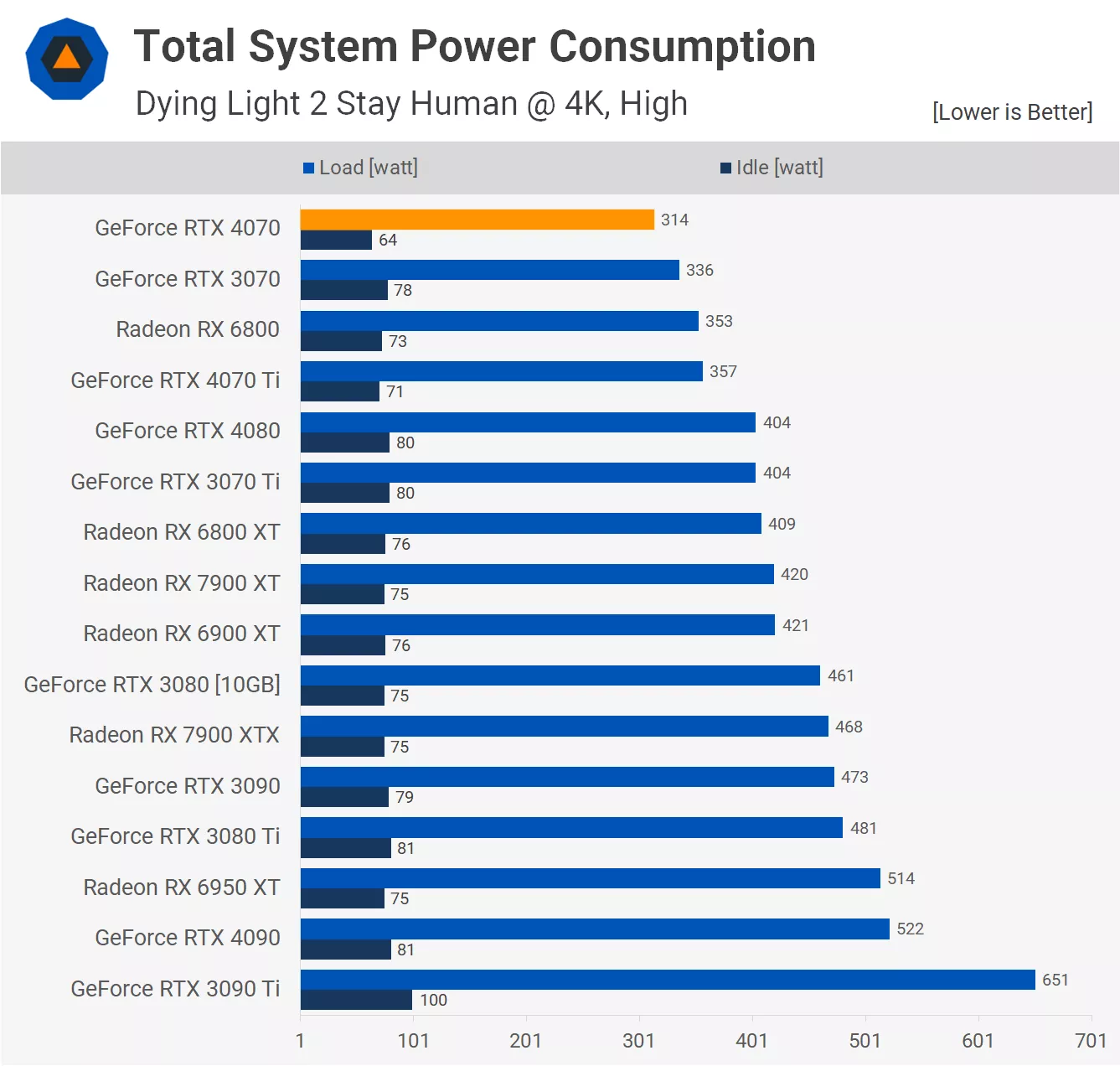

And one thing you can't accuse Hardware Unboxed of is being Nvidia shills. Even they put the 4070 among the top 4 best-value cards on the current market, and as the number 1 Nvidia card, since AMD cards have worse features and power efficiency.

For all of you who don't like the card: that's perfectly fine, but just tell me this. Why haven't we gotten a $600 RDNA3 GPU from AMD by now? Hell, why did they try to sell us a cut-down RX 7900 XT for $900, and only quietly/unofficially lower the MSRP to $800 once they noticed the nonexistent sales?

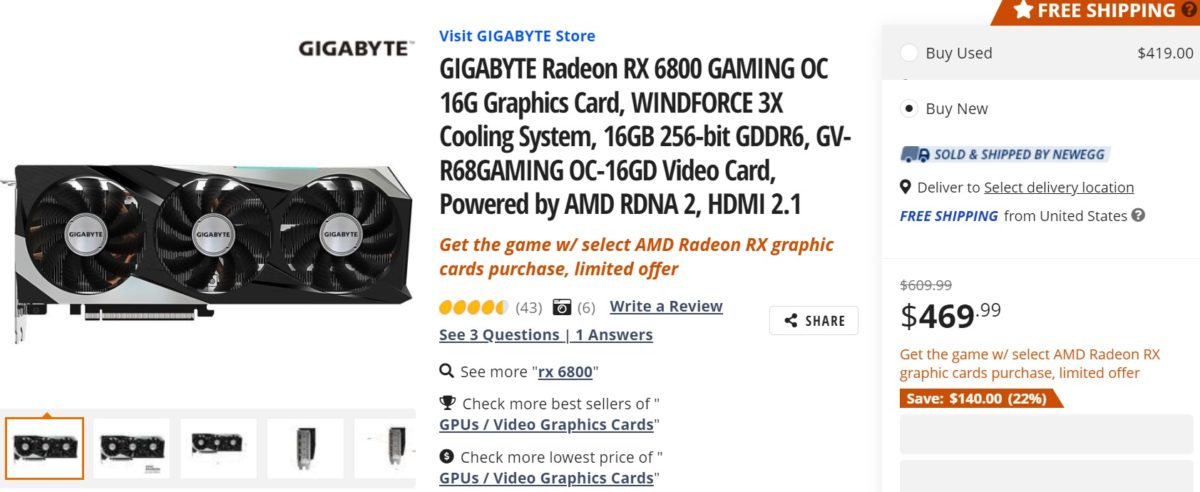

Shouldn't AMD be selling much better value cards by now? I don't even mean RT/DLSS/FG features, just a 16 GB 6950 XT equivalent for $600 or even $550, maybe even $500. If the 4070 is such a bad deal for us, there should be options from AMD by now, and yet nothing...

And no, the 6950 XT isn't good enough. It has a much higher TDP (335 W vs. 200 W for the 4070) and is much hotter and louder than a true RDNA3 card at $500-550 should be. It's the top-end RDNA2 card after all, heavily discounted of course, from its $1,100 launch price to the current $650.

You can make a valid argument that AMD wants to, and can, milk its customers on the CPU side, but on the GPU side they are under 11% market share now. Hell, Intel is above 6% now and Nvidia is above 82%. It should be AMD's biggest priority to at least get back to 35-40% market share ASAP, or game devs will start treating their GPUs as the black sheep, meaning worse optimization (less time and budget spent on it, since it's not worth it for such a small slice of the market).