Starfield is one of the most significant game releases of 2023, and Intel recognizes the need to give gamers a good experience with it. Regrettably, Intel’s GPU drivers fell short of delivering smooth gameplay during the early access phase. However, the company promptly addressed this by releasing its first driver specifically optimized for Starfield.

Nonetheless, that release did not constitute the full “Game On” driver, nor does the latest driver, recently unveiled. While Intel has resolved certain bugs and introduced further optimizations, the list of known issues remains longer than the list of fixes. Even so, Intel’s steady stream of driver updates demonstrates its commitment to improving the gaming experience.

Regrettably, Intel was unable to deliver a fully optimized driver for the game’s launch on September 6th, so owners of Alchemist-series GPUs may need to be patient.

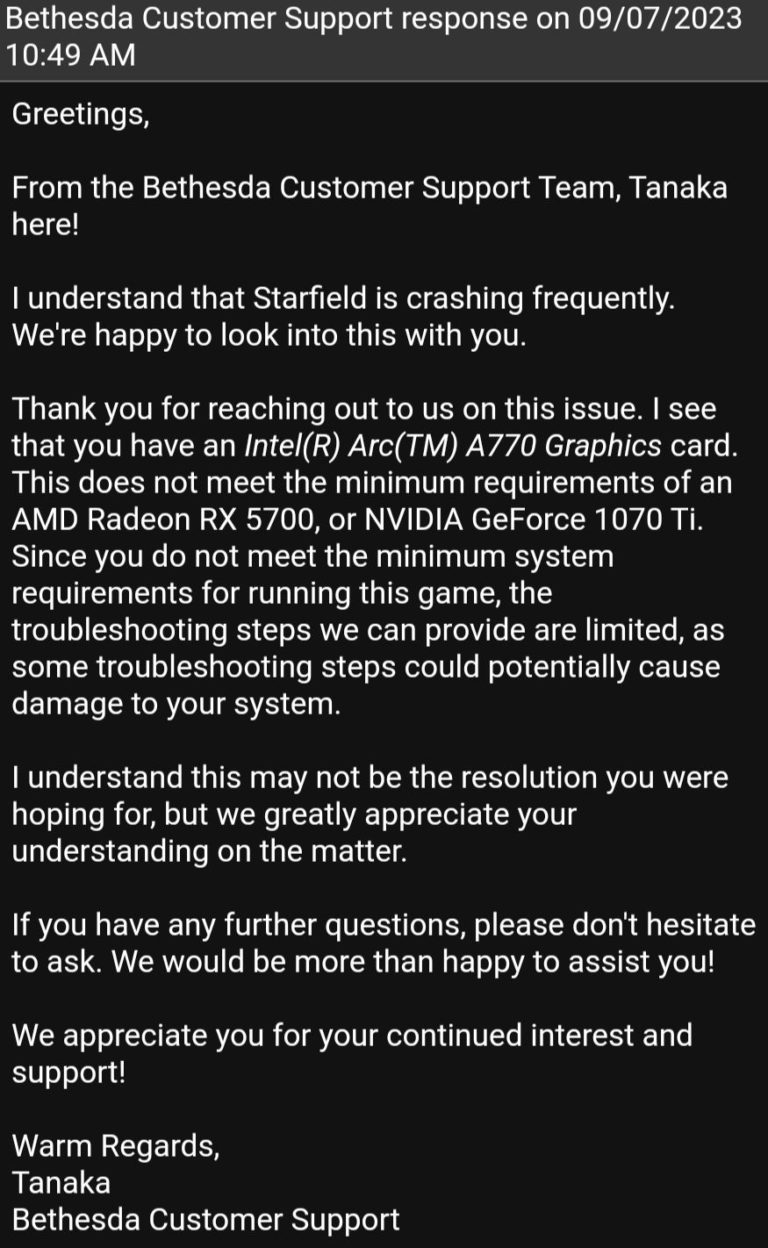

But some gamers are losing patience. One who sought assistance from Bethesda’s customer support was informed that the Arc A770 GPU does not meet the game’s minimum requirements, which specify a Radeon RX 5700 or GTX 1070 Ti GPU.

Although the Arc A770 performs better than the aforementioned cards, Bethesda’s generic response offers no real guidance on how to address problems or optimize the game on Arc hardware. Consequently, Arc gamers may have to rely on Intel to resolve issues that Bethesda appears unwilling to tackle on its own. One can only hope that Intel and Bethesda are working on Starfield optimizations together and that Intel will have its fully optimized “Game On” driver for Starfield soon.