DonJuanSchlong

Banned

why you're holding so much hatred in your heart

Leave him alone. I'm pretty sure a pc killed his dog once and now he's out for revenge.

yamaci17 instead of digging yourself a deeper hole, just tell us that you are jealous of PC gamers, for taking all of your exclusives away, and for touching you when you were younger. Cause I can't understand where you come up with some of the most nefarious lies, and blatant bullshit. What honestly entices you to write all this bullshit, that just about anyone with single-digit IQ can refute, easily. There's a reason why so many people jumped on your post, and quoted you, because you have the most blatant bullshit posted on GAF today.

you can find a lot of criticism coming from me regarding series s

And where does the Series S fit in to this equation of yours genius?

I only played the demo but I never saw any of that. Is it related to ray-tracing or specific resolutions or graphics cards? Only have a 1080ti, played in 1080p without RT. I once noticed a drop to 130fps but otherwise it was 144fps with occasional dips to 143fps, only noticeable with an fps counter.

DF highlighting perf issues (timestamped):

I finished it on 3080/3700x. Did not have 24fps drops. Ran good with gsync. Some minor stutters here and there. Nothing bad

you can find a lot of criticism coming from me regarding series s

but it has at least respectable 8 core 16 threads zen 2 cpu accompanied with 500 gb/s ssd

by omitting ray tracing out, its memory can survive

for its low tflops, series s users are always welcoming 540p, so there's no problem on that front

It should not have been released but IMO it is still way easier to adjust a game to run on S, if You release it on X, than it is on pc.

And where does the Series S fit in to this equation of yours genius?

i hope it dies

imagine being forced to optimize games to run on decrepit 4-6 core cpus and gtx 1060s/1070s (sx, ps5 and even series has 8 core 16 threads rofl)

imagine being forced to design games around slow hdds or slow sata ssds (even the sx can reach 5 gb/s bandwidth. most "pcmr" users still have 400-550 mb/s sata ssds which is inferior to what series x/s/ps5 has)

imagine being forced to design textures, data streaming around the majority of 8 gb vram gpus (sx will be able to provide 13.5 gb total memory available to games)

i hope microsoft changes their stance on pc and make xbox exclusive games. imagine a game taking the full power of 4.8 gb/s ssd bandwidth, 13.5 gb vram + sampler feedback streaming (which no hardware has yet to have on PC. no, sampler feedback alone does not count, because sampler feedback streaming is one step forward) and of course, 12 tflops.

sx by its gpu power alone is stronger than maybe 70-80% of pc users

decrepit pc hardware will hold back multiplatform games for quite a while sadly.

hopefully ps5 exclusives will overcome that. blame is on microsoft for giving their exclusive games to PC lmao

So the 3080 is now a gimped card, because it doesn't have 16gb of vram? Do you even understand the basics of how computers work?

you can find a lot of criticism coming from me regarding series s

but it has at least respectable 8 core 16 threads zen 2 cpu accompanied with 500 gb/s ssd

its ssd and cpu spec is still better than 90% of pc userbase XD

by omitting ray tracing out, its memory can survive

for its low tflops, series s users are always welcoming 540p, so there's no problem on that front

but developers will have to accommodate for gimped cards that are 2070, 2080, 2080s, 3070 and 3080 for a time now until nvidia graces "pc" gamers with 16 gb mainstream gpus (3060 has 12 gb rofl)

then we shall see truly generational ray tracing enabled games with actual high quality textures, unlike the hideosity that are cyberpunk (extreme texture and lod culling) and re village (textures breaking down 2 meters away from camera)

Lol... Imagine having consoles dragging PC gaming back since the beginning of time and now that consoles finally managed to match or exceed the average PC spec, you want it dead... because it drags your brand new console back?

i hope it dies

imagine being forced to optimize games to run on decrepit 4-6 core cpus and gtx 1060s/1070s (sx, ps5 and even series has 8 core 16 threads rofl)

imagine being forced to design games around slow hdds or slow sata ssds (even the sx can reach 5 gb/s bandwidth. most "pcmr" users still have 400-550 mb/s sata ssds which is inferior to what series x/s/ps5 has)

imagine being forced to design textures, data streaming around the majority of 8 gb vram gpus (sx will be able to provide 13.5 gb total memory available to games)

i hope microsoft changes their stance on pc and make xbox exclusive games. imagine a game taking the full power of 4.8 gb/s ssd bandwidth, 13.5 gb vram + sampler feedback streaming (which no hardware has yet to have on PC. no, sampler feedback alone does not count, because sampler feedback streaming is one step forward) and of course, 12 tflops.

sx by its gpu power alone is stronger than maybe 70-80% of pc users

decrepit pc hardware will hold back multiplatform games for quite a while sadly.

hopefully ps5 exclusives will overcome that. blame is on microsoft for giving their exclusive games to PC lmao

So let me get this straight, the series S is fine but an equally powerful 1060 and more powerful 1070 are a problem?

So do you not think owners of 1060/1070 graphics cards can pair them with a modern 6-8 core desktop CPU and an SSD? Do you not think 1060/1070 owners will likely be gaming at resolutions of 1080p or 1440p?

Sorry, but I'm not understanding your logic here. Unlike consoles, PCs are not set in stone, the parts are easily interchangeable and can be upgraded.

It should not have been released but IMO it is still way easier to adjust a game to run on S, if You release it on X, than it is on pc.

S and X are very similar. Most of the time you can probably lower resolution and/or framerate

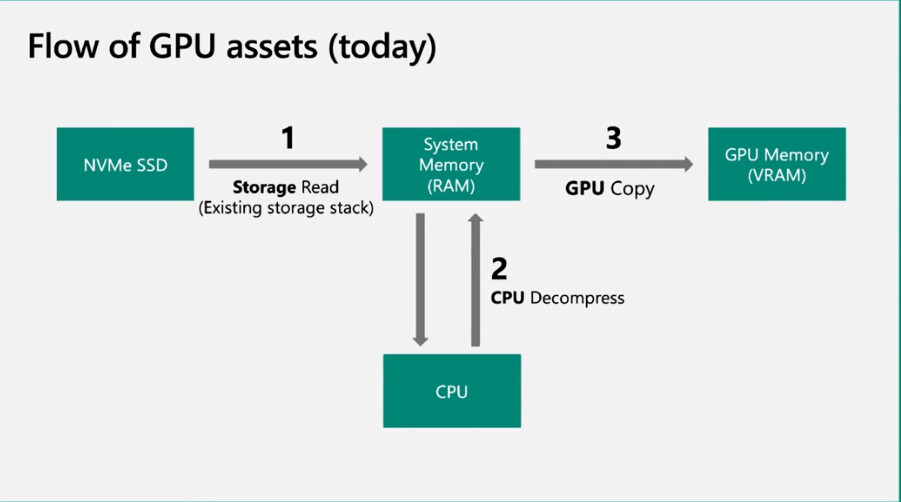

Simply show me one game that is held back because of the lack of direct storage or RTX I/O? All you need is one example, and your argument might actually hold more than a feather of weight. If you can't do this, you might as well shut the fuck up and stand down, as you're only digging yourself a deeper hole like I said.

because of directstorage, rtx io, and other technologies.

series s/x has the directstorage and sophisticated advanced technologies that can take advantage of high speed nvme ssds.

1060/1070 simply have not. nvidia specifically built rtx io to make directstorage work.

series s can still run games at 648p, that's no problem for the "casual" player base it has, apparently.

That means that console gaming is for casuals?

PC gaming is for the enthusiast crowd only.

he's a heretic! GET HIM!!!!!

That means that console gaming is for casuals?

it's the technologies that series s and x supports that puts them front over 1060/1070

Look, it's no secret that I'm not a fan of the series S, that's well documented here but if people are going to start propping it up as something good when comparing it to PC hardware then quite frankly they have lost the plot.

The bolded sentence - what do you think the first thing is that mid/lower spec PC gamers tend to do?

Console gaming is for the average person who likes to play video games.

That means that console gaming is for casuals?

Name a single example, game, or even a demo! UE5 is coming to PC more than likely before directstorage or RTX I/O, and will run much better. I'll be waiting for any possible example.

it's the technologies that series s and x supports that puts them front over 1060/1070

you can't have;

- sampler feedback

- sampler feedback streaming (not even on rtx 3000 series)

- dx12_ultimate

- variable rate shading

- proper texture streaming via the directstorage

with old hardware. slamming a high speed nvme ssd and calling it a day is not enough. there's also the custom decompression block on xboxes for those ssds, that you don't have on PCs. this is why rtx io is important

if a hypothetical game were designed to use all these technologies exclusively on an xbox console, it would look truly phenomenal. but we will have to wait a couple more years so that pc users can catch up.

what's there not to understand?

Funny enough, the console fanbase seems to be the armchair tech experts on this site...

Console gaming is for the average person who likes to play video games.

PC gaming is for the enthusiast gamer who has to be on the cutting edge of performance and graphics.

And seem more interested in hardware than the actual games.

Funny enough, the console fanbase seems to be the armchair tech experts on this site...

because of directstorage, rtx io, and other technologies.

series s/x has the directstorage and sophisticated advanced technologies that can take advantage of high speed nvme ssds.

1060/1070 simply have not. nvidia specifically built rtx io to make directstorage work.

series s can still run games at 648p, that's no problem for the "casual" player base it has, apparently.

you forgot to mention console gamer's shriveled genitals. i expect more from you, be better!

Console gaming is for the average person who likes to play video games.

PC gaming is for the enthusiast gamer who has to be on the cutting edge of performance and graphics.

This is getting laughable.

Directstorage is a DirectX technology and will work with any DirectX 12 GPU.

DirectStorage API Works Even with PCIe Gen3 NVMe SSDs

Microsoft on Tuesday, in a developer presentation, confirmed that the DirectStorage API, designed to speed up the storage sub-system, is compatible even with NVMe SSDs that use the PCI-Express Gen 3 host interface. It also confirmed that all GPUs compatible with DirectX 12 support the feature. A...

www.techpowerup.com

The Series S/X use standard specification pcie gen 3 nvme SSDs.

For games like The Medium that the series S has to run at 648p, GPUs like the 1070 can run at 1080p at high settings and could probably achieve even more if the settings were dropped to whatever the Series S is running the game at:

And that's an example with a 6 core 1600 CPU.

Please educate yourself before you embarrass yourself further.

accusing other people of lying while you yourself literally lied in a previous post in this thread is kind of sad.

yamaci17 instead of digging yourself a deeper hole, just tell us that you are jealous of PC gamers, for taking all of your exclusives away, and for touching you when you were younger. Cause I can't understand where you come up with some of the most nefarious lies, and blatant bullshit. What honestly entices you to write all this bullshit, that just about anyone with single-digit IQ can refute, easily. There's a reason why so many people jumped on your post, and quoted you, because you have the most blatant bullshit posted on GAF today.

What are you smoking my man? Did you just appear in the gaming scene out of nowhere in the last couple of months or something?

but developers will have to accommodate for gimped cards that are 2070, 2080, 2080s, 3070 and 3080 for a time now until nvidia graces "pc" gamers with 16 gb mainstream gpus (3060 has 12 gb rofl)

lol 1070 drops below 30 fps in certain scenes in this game. and medium to high settings do not matter. i played that game myself, you can't argue with that game rofl. even with medium settings, a 1070 will drop below 30 fps regardless (besides, there are even heavier scenes in the game, and you practically picked the start of the game, which is the lightest)

and pc equivalent directstorage will never work as efficiently as it does on series s/x. they have special hardware that pcs don't have. get over it. accept it and move on.

rtx io is the equivalent hardware chip that is similar to what series s/x/ps5 have. but then again, it will take years for pc users to catch up with these technologies, so these techs will only be used partially and not to their full extent, until all the hardware is capable.

just like tessellation, ambient occlusion. remember that in the first years these techs were introduced, they were subpar in-game because only limited hardware supported them. it was a novelty, but a weak one. with more and more gpus supporting them, we've started to see their true capabilities. rdr 2 is a fine example of what fully fledged tessellation can do.

by the way:

ryzen 1600 can't hold 60 fps in cyberpunk

series x can.

you can keep ignoring the truth

and with that being in mind, i shall ignore you. those who are ignorant shall be ignored

they have special hardware that pcs don't have.

and with that being in mind, i shall ignore you. those who are ignorant shall be ignored

yeah I am not arguing

Look, it's no secret that I'm not a fan of the series S, that's well documented here but if people are going to start propping it up as something good when comparing it to PC hardware then quite frankly they have lost the plot.

The bolded sentence - what do you think the first thing is that mid/lower spec PC gamers tend to do?

I thought Cyberpunk runs at 30fps on series x?

ryzen 1600 can't hold 60 fps in cyberpunk

series x can.

So a 2017 cheap CPU can't hold 60 fps on graphically demanding 2020-2021 game? Colour me shocked

by the way:

ryzen 1600 can't hold 60 fps in cyberpunk

series x can.

It has both quality and performance modes, in quality mode it targets 1800p+ and higher settings, with performance mode it targets 1440p and 60 FPS

I thought Cyberpunk runs at 30fps on series x?

Yeah, let me colour you shocked then, with a counter argument

So a 2017 cheap CPU can't hold 60 fps on graphically demanding 2020-2021 game? Colour me shocked

My pc from 2014 ran its first year nonstop 24/7 with no issues; after that year gta5 came out and it actually needed a new driver, updated, didn't reboot, no issues. It's still going strong today.

Lol you wish. Pcs never change.

I did, here is my entire playthrough, stutter free and never dropping below 60:

Yeah check RE: Village out.

Runs perfect on consoles. Stutters big time on a 3090 and 10900k.

Wrong way to analyse this data pal. You don't look at the percentage but the actual numbers.

Yeah, let me colour you shocked then, with a counter argument

- Majority of PC gamers have GTX 1060, 1650S, 1050Ti, 1070, 970, RX 580, RX 570 i.e. midrange GPUs

- Majority of PC gamers have ryzen 1600, i5 7400, 8400, 9400f, ryzen 2600, ryzen 3600 (still can't hold 60 fps in cyberpunk) i.e midrange cheap CPUs

This is where the problems start, Series X trumps these specs.

Series X trumps what a RTX 2070+3700x gaming machine can deliver. This is huge.

You realise that is because Cyberpunk is a last gen game for Xbone?

Series X can't hold 60fps on Cyberpunk either. On perf mode it runs at 50 something even when you just free roam and not doing combat or anything demanding.

I had to do this back in the day for a 980 ti...

I'm sorry your PC has issues. Mine is perfect. It helps that i know what i'm doing though.

Lol you wish. Pcs never change. X570 and ryzen 3700x caused me so many issues... It still does fuck with USB. My keyboard input sticks sometimes due to usb issues... Many other problems

Huh?

You realise that is because Cyberpunk is a last gen game for Xbone?

Then only use it as an argument when nextgen patches are actually out.

Framedrops will be ironed out for nextgen consoles with nextgen patch.

John Wick guy was the one using that as an example, so take complaints to him

You realise that is because Cyberpunk is a last gen game for Xbone?

I also know what I am doing.

I'm sorry your PC has issues. Mine is perfect. It helps that i know what i'm doing though.

Also my XBOX 360 red ringed.

Huh?

Shouldn't a last gen game run much faster on a much more powerful, next gen console?

I think you are confused. Also, i was answering to the guy who claimed the Series X can hold 60fps on that game.

There's a meme for your post just now, you know

i'm having great laughs over how pc people triggered so easily lol

People engaging you in a debate now constitutes them getting "triggered"?

i'm having great laughs over how pc people triggered so easily lol

keep them comments rolling ppl

Ah, so you were trolling after all.

i'm having great laughs over how pc people triggered so easily lol

keep them comments rolling ppl

This. It's an amazing time to play on PC but only if you are already in the ecosystem.

The saddest part with the gpu situation is that even when you do find parts in stores, they're still double the price while consoles will be at msrp. I don't understand this.

If you already have a PC yes. If you're looking to get one, I don't think it's ever been worse.