freezamite

Banned

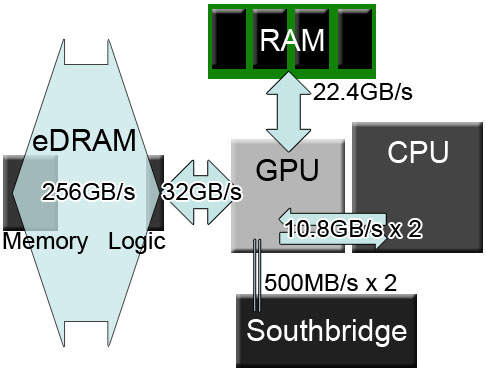

Really simple to understand. Current engines were made with PS360 in mind, which means 22-40 GB/s of bandwidth to the bigger pool of memory and, in the 360's case, a small amount of eDRAM that can't be read directly by the GPU.

Read my last post. The question I am asking is because the performance we are seeing can in no shape or form be justified by what we know about the memory setup, even if it were worse. The fact that so many games suffer from it so excessively makes me think there has to be another explanation.

Even though memory management is one of the easiest parts to adapt, dev kits weren't even finalized, games were totally rushed, and the maximum bandwidth of the Wii U's big pool of RAM is only 12.8 GB/s, so it's easy to see where these "artificial" bottlenecks appear.
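To put those bandwidth figures side by side, here's a quick back-of-the-envelope sketch. It only uses the numbers quoted above (12.8 GB/s for the Wii U, 22-40 GB/s for PS360). The 30 and 60 fps targets and the helper name `per_frame_budget_mb` are my own assumptions for illustration, and this is peak theoretical bandwidth, not what a game actually gets.

```python
# Peak main-memory bandwidth figures quoted in the post, in GB/s.
# The frame rates used below are illustrative assumptions, not measurements.
BANDWIDTH_GBPS = {
    "PS360 (low end)": 22.0,
    "PS360 (high end)": 40.0,
    "Wii U main RAM": 12.8,
}


def per_frame_budget_mb(bandwidth_gbps: float, fps: int) -> float:
    """Peak data (in MB, 1 GB = 1000 MB) that could cross the bus in one frame."""
    return bandwidth_gbps * 1000.0 / fps


for name, bandwidth in BANDWIDTH_GBPS.items():
    for fps in (30, 60):
        budget = per_frame_budget_mb(bandwidth, fps)
        print(f"{name}: {budget:.0f} MB per frame at {fps} fps")
```

An engine tuned to stream its per-frame data through the PS360 budget would overshoot the Wii U's main RAM budget unless it moved more of that traffic elsewhere, which is the kind of "artificial" bottleneck I mean.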

The CPU is also totally different, with a much more modest SIMD design but much better general-purpose performance*.

So until engines are adapted to a friendlier design (and both PS4 and 720 will have that design, by the way), we won't be able to talk about the console's flaws based on the games currently on sale.

*Hell, even the "1 thread of the 360 CPU was only 20% faster than Broadway in a general-case scenario" claim is not a fair comparison, because the 360 had a shared L2 cache, which means that if only 1 thread was used, that thread had the whole 1 MB of L2 cache to itself.

So the correct sentence would be "1 thread of the 360 CPU with the whole 1 MB of L2 cache available to it was only 20% faster than Broadway". With more threads in use, and since we know that cache misses were EXPENSIVE on the 360 (500 cycles waiting for data XD), it's obvious that the Wii U CPU, which at the very LEAST is three Wii cores overclocked to 1.24 GHz (70% more clock), with two of them having their L2 cache DOUBLED and the other one having it multiplied by EIGHT, will be in another league in real-world scenarios. Ah, and this is not even considering that the Wii U has a proper DSP and some other processors to do what the 360 had to use its CPU for, which meant even more accesses to main memory and so less real-world performance...
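For anyone who wants to check the arithmetic, here's a rough sketch. The 1.24 GHz clock and the 500-cycle miss penalty come from the post. The 729 MHz Broadway clock is a figure I'm adding to reproduce the "70% more clock" number, and the miss rates are purely hypothetical, picked only to show how fast a 500-cycle penalty adds up.

```python
# 1.24 GHz and the 500-cycle penalty are from the post.
# 729 MHz for the Wii's Broadway is an assumption added for this sketch.
BROADWAY_MHZ = 729.0
WIIU_CORE_MHZ = 1240.0
MISS_PENALTY_CYCLES = 500


def clock_gain_percent(old_mhz: float, new_mhz: float) -> float:
    """Percentage clock increase going from old_mhz to new_mhz."""
    return (new_mhz / old_mhz - 1.0) * 100.0


def stall_cycles_per_1000_instr(misses_per_1000_instr: float,
                                miss_penalty_cycles: int) -> float:
    """Cycles spent waiting on memory for every 1000 instructions executed."""
    return misses_per_1000_instr * miss_penalty_cycles


gain = clock_gain_percent(BROADWAY_MHZ, WIIU_CORE_MHZ)
print(f"Clock gain over Broadway: {gain:.0f}%")

# Hypothetical miss rates, NOT measured on any console.
for misses in (1, 5, 10):
    stalls = stall_cycles_per_1000_instr(misses, MISS_PENALTY_CYCLES)
    print(f"{misses} misses per 1000 instructions -> {stalls:.0f} stall cycles")
```

The point of the second function is just that the stall cost scales linearly with the miss rate, so a thread that loses part of a shared L2 to other threads pays for every extra miss at the full penalty.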