Devs have been developing for the Xbox 360 for the past 8 years. Don't think the ESRAM will be that complicated.

Sure, but not having to think about it at all still makes life easier.

Well yes, because without it, you're talking monstrous deficiencies in bandwidth...

So possibly November 8 for Xbox One?

http://www.kotaku.com.au/2013/08/the-xbox-one-might-be-out-on-november-8/

From the start, this upcoming generation is just some sort of bizarro world/twilight zone... where Sony is making things easy, and everyone else is overcomplicating things... it's just so fun to watch/be a part of...

really at the end of the day on a purely hardware front this generation hinged on Cerny's ballsy gamble to go with GDDR5 and being able to secure the right size chips to give them 8GB....

4 x 256bit Read/Write on the eSRAM according to MS.

So why isn't the theoretical peak 218GB/s?

Devs have also been developing for PS3 for the past 8 years, Sony should have gone with a more complicated CELL.

The ESRAM bandwidth issue indeed remains weird, especially since they "discovered" the new peak bandwidth rather late in the development process.

Especially since 4 * 256 bit * 853 MHz = 873.472 Gbit/s = 109.184 GB/s

There must be some way to read/write on the rising and falling edges of the clock. However, it is still weird that the theoretical maximum BW is not doubled by that.

Apparently, there are spare processing cycle "holes" that can be utilised for additional operations. Theoretical peak performance is one thing, but in real-life scenarios it's believed that 133GB/s throughput has been achieved with alpha transparency blending operations (FP16 x4).
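The arithmetic in the posts above is easy to check. A minimal Python sketch, assuming the figures quoted in this thread (4 lanes of 256 bits, an 853MHz clock, the 204GB/s peak, the 133GB/s measured figure). The function name is made up for illustration, and the 800MHz clock is the pre-upclock figure, which is not stated in this thread:

```python
def peak_bandwidth_gb_s(lanes: int, bits_per_lane: int, clock_mhz: float) -> float:
    """Peak bandwidth in GB/s, assuming one transfer per lane per clock cycle."""
    bytes_per_cycle = lanes * bits_per_lane / 8
    return bytes_per_cycle * clock_mhz * 1e6 / 1e9


one_way = peak_bandwidth_gb_s(4, 256, 853)    # read OR write each cycle
both_ways = 2 * one_way                       # naive: read AND write every cycle
old_clock = peak_bandwidth_gb_s(4, 256, 800)  # assumption: 800MHz pre-upclock

QUOTED_PEAK = 204.0     # GB/s, peak figure quoted in the thread
QUOTED_BLENDING = 133.0 # GB/s, alpha blending figure quoted in the thread

print(f"one direction @ 853MHz : {one_way:.3f} GB/s")
print(f"naive doubling         : {both_ways:.3f} GB/s")
print(f"one direction @ 800MHz : {old_clock:.1f} GB/s")
print(f"quoted peak / doubled  : {QUOTED_PEAK / both_ways:.1%}")
print(f"blending / doubled     : {QUOTED_BLENDING / both_ways:.1%}")
```

So the quoted 204GB/s is roughly 93% of the naive doubled figure, and the 133GB/s blending number is about 61% of it. The sketch does not explain where the missing 7% goes, it only shows the gap people are asking about.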

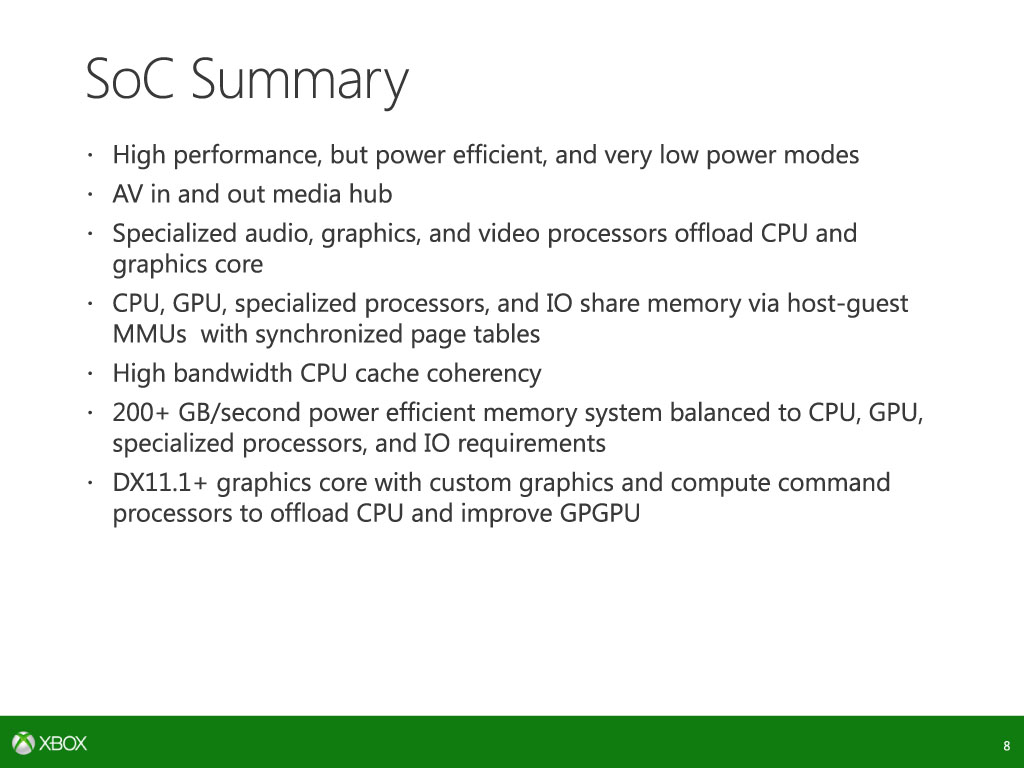

I can't shake the feeling it's just a bit too complicated, like they started out with an easy design and ended up just adding more and more layers where they saw fit to achieve their goal.

Think about what the initial demands from higher ups meant for X1's design:

Must be virtually silent: Huge box with large heatsink & fan, limited power draw, external power brick, etc.

Must have lots of RAM to multitask: 8GB RAM from the start, which meant DDR3+ESRAM, limiting room on the SoC for better GPU.

Kinect mandatory: Extra budget/hardware resources dedicated to processing Kinect.

3 OSes, HDMI input overlay, etc.: Lots of budget poured into non-gaming software features.

It's a miracle it performs as well as it does given all the non-gaming restrictions and budget allocations.

Pretty much echoes what I've heard from a friend in development, not regarding HSA and ESRAM and the comparative benefits of one over the other, but regarding the performance implications of ESRAM.

Can you ask your dev buddy why the bandwidth is 204GB/s instead of 218GB/s?

I don't think they found it very late, because the exact operation example suggested in the DF article about the increase in the XB1's memory performance was included right there in an Xbox One development document from back in early 2012.

It says word for word "Full speed FP16x4 writes are possible in limited circumstances."

This was from January or February 2012, and is a Microsoft document.

And now from the DF article.

The same exact thing mentioned in Microsoft's own document on the Xbox One from early 2012 on what the implications would be of having 32MB of ESRAM on the Xbox One. The DF article makes it sound like it's something that's only possible under certain conditions, and the 2012 MS document seems to anticipate that full speed writes of that nature would be possible in limited circumstances, so Microsoft literally knew about this as far back as early last year, which probably lends credibility to the increased ESRAM performance.

32 + 4MB of L2 cache on CPU + L1 cache + whatever the fuck the rest of it is. ~1MB is on the audio chip... Maybe 4 or so MB on the GPU... don't know where the rest is.

Also, not hUMA, but a solution to accommodate for it.

32MB eSRAM

4MB L2 (CPU)

512KB L1 (CPU)

1MB SHAPE

512KB L2 (GPU)

768KB LDS (GPU)

96KB Scalar Data cache (GPU)

3120KB (GPU - 260KB per CU)

~ 42MB + Buffers + 2 Geometry engine caches + Redundancy. Redundancy of ~10% for eSRAM and you arrive close to 47

The 47MB on-chip storage figure is as meaningless a figure as the 5 billion transistors.

Seems like it. Thanks for counting though.
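For anyone who wants to redo the count, here is a quick Python tally of the list above. The sizes are exactly the ones posted; the dictionary keys are just labels, and the 10% redundancy is the poster's guess, not a confirmed number:

```python
KB_PER_MB = 1024

# Sizes in KB, taken from the list posted above.
on_chip_kb = {
    "eSRAM": 32 * KB_PER_MB,
    "CPU L2": 4 * KB_PER_MB,
    "CPU L1": 512,
    "SHAPE": 1 * KB_PER_MB,
    "GPU L2": 512,
    "GPU LDS": 768,
    "GPU scalar data cache": 96,
    "GPU per-CU storage": 12 * 260,  # 3120KB at 260KB per CU implies 12 CUs
}

total_kb = sum(on_chip_kb.values())
total_mb = total_kb / KB_PER_MB

# The ~10% eSRAM redundancy is a guess from the post, not a known figure.
esram_redundancy_mb = 0.10 * on_chip_kb["eSRAM"] / KB_PER_MB

print(f"listed blocks     : {total_mb:.2f} MB")
print(f"+ 10% eSRAM spare : {total_mb + esram_redundancy_mb:.2f} MB")
```

The listed blocks come to a bit under 42MB, and the guessed redundancy adds 3.2MB, so the remaining couple of MB up to 47 would have to come from the buffers and geometry engine caches nobody has numbers for.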

Sorry but this isa is pretty horrible compared to the ps4 and smacks of ps3. Sure, sure these devs are going to port your game over to the xbone... And then make some of these calls work on the audio chip because of flops. Sure, they won't just turn down the res. just like they did on the ps3.

What a bunch of jokers. All of this because they wanted to not have to source for gddr5 and were stuck on this multi OS kick. Jokers the lot of them.

Thanks for the rundown. Quite a big L1 cache, it seems.

The EDRAM in the 360 could only do one thing, and it was much faster, relative to the GPU power, than the One's ESRAM is. And you still ended up with lots of sub-HD games from devs who just wanted their buffers to fit in 10MB.

That wasn't the only limiting factor, i.e. the number of sub-HD games on PS3 was even greater despite not having to deal with it. Same goes for sub-SD games on OG Xbox (I wanted to add Wii/GC/PS2 but then realized they had embedded RAM).
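Whether buffers fit in 10MB of EDRAM or 32MB of ESRAM is simple arithmetic. A rough Python sketch, assuming one color target plus one 32-bit depth/stencil buffer and no tiling tricks; the pixel formats and the helper names are my own picks for illustration, not anything a specific game used:

```python
def render_target_mb(width, height, bytes_per_pixel, msaa_samples=1):
    """Size in MB (1MB = 1024 * 1024 bytes) of a render target setup."""
    return width * height * bytes_per_pixel * msaa_samples / (1024 * 1024)


def fits(size_mb, pool_mb):
    return size_mb <= pool_mb


# Assumed formats, in bytes per pixel.
COLOR_RGBA8 = 4
COLOR_FP16X4 = 8
DEPTH_32 = 4

cases = {
    "720p RGBA8 + depth": render_target_mb(1280, 720, COLOR_RGBA8 + DEPTH_32),
    "720p RGBA8 + depth, 2x MSAA": render_target_mb(1280, 720, COLOR_RGBA8 + DEPTH_32, 2),
    "1080p RGBA8 + depth": render_target_mb(1920, 1080, COLOR_RGBA8 + DEPTH_32),
    "1080p FP16x4 + depth": render_target_mb(1920, 1080, COLOR_FP16X4 + DEPTH_32),
}

for name, size in cases.items():
    print(f"{name}: {size:.2f} MB | 10MB: {fits(size, 10)} | 32MB: {fits(size, 32)}")
```

Under those assumptions plain 720p is about 7MB and fits in 10MB, while 2x MSAA pushes it to about 14MB, which does not fit without splitting the frame up. The 1080p cases land at roughly 16MB and 24MB, both inside 32MB, but a deferred renderer with several targets would blow past that quickly.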

Can a fellow Japanese Gaffer translate this article?

http://pc.watch.impress.co.jp/docs/column/kaigai/20130827_612762.html

Goto thinks (or has seen?) that the PS4 APU is 250-300mm². That corresponds to what I heard a couple of months back - http://www.neogaf.com/forum/showpost.php?p=61566193&postcount=3668

Is this real?

So the 15 special purpose processors are as follows?

1) av out / resize compositor

2) av in

3) video encode

4) video decode

5) swizzle copy lz encode

6) swizzle copy lz/mjpeg decode

7+8) swizzle copy x 2

audio :

9) C something something dsp (top blue box in audio processor)

10) scalar dsp core

11+12) vector dsp core x 2

13) sample rate converter

14) audio dma

15) the last orange box covered by the guy's head

Can you ask your dev buddy why the bandwidth is 204GB/s instead of 218GB/s?

SenjutsuSage, if you are going to make some claims, at least link WTF you are talking about. Some vague reference to a 2012 doc doesn't help anyone.

I fully intend to do just that, so I can get a better understanding of how they arrive at the figure, although I won't be the least bit surprised if we have our answer in the next 4 days or so.

I obviously can't link it, because I don't want to get anyone in trouble, but it's a Durango Developer Summit PowerPoint presentation called Graphics on Durango. It's an official MS document on the Xbox One from early 2012.

There's a page on ESRAM. This is everything that it says on that particular page.

ESRAM

-- General parameters

32MB

102GB/s

lower latency access

No contention (Front Buffer is in DRAM)

And It's Generic

Durango

Rendering into ESRAM - YES, rendering into DRAM - YES

Texturing from ESRAM - YES, texturing from DRAM - YES

Resolving into ESRAM - YES, resolving into DRAM - YES

Xbox 360

Rendering into EDRAM - YES, rendering into DRAM - NO

Texturing from EDRAM - NO, texturing from DRAM - YES

Resolving into EDRAM - NO, resolving into DRAM - YES

-- Implications

No need to move data into System RAM in order to read.

Full speed FP16x4 writes are possible in limited circumstances.

And something pretty interesting that I've literally been skipping over this entire time, mostly because it didn't strike me as that big a deal, is the page that comes right before the ESRAM page, the Memory Management page, and the following is everything that's said on that page.

1TB of Virtual Address space via own MMU

--- A page in ESRAM, DRAM or unmapped

Some Implications

--- Well-defined page miss behavior

--- Memory fragmentation becomes less important

--- Architecturally possible to pass pointers between CPU and GPU

--- Portions of resources could be using ESRAM

Is that passing of pointers between the CPU and GPU a big deal, as I think I remember reading that being one of the big things for hUMA?
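To picture what the Memory Management page quoted above seems to describe (a page is in ESRAM, DRAM or unmapped, and portions of a resource could be using ESRAM), here is a toy Python model. Everything in it is invented for illustration: the 64KB page size, the class names and the placement policy are assumptions, not anything from the MS document.

```python
from enum import Enum


class Pool(Enum):
    ESRAM = "ESRAM"
    DRAM = "DRAM"
    UNMAPPED = "unmapped"


PAGE_SIZE = 64 * 1024                        # hypothetical page size
ESRAM_PAGES = 32 * 1024 * 1024 // PAGE_SIZE  # 32MB of physical ESRAM


class PageTable:
    """Toy virtual page table: virtual page number -> backing pool."""

    def __init__(self):
        self.pages = {}
        self.esram_used = 0

    def map_page(self, vpn, pool):
        # Release the ESRAM slot if this page was ESRAM-backed before.
        if self.pages.get(vpn) is Pool.ESRAM:
            self.esram_used -= 1
        if pool is Pool.ESRAM:
            if self.esram_used >= ESRAM_PAGES:
                raise MemoryError("physical ESRAM is full")
            self.esram_used += 1
        self.pages[vpn] = pool

    def lookup(self, address):
        # Anything never mapped has well-defined behavior: it is UNMAPPED.
        return self.pages.get(address // PAGE_SIZE, Pool.UNMAPPED)


# One 48MB resource: the first 16MB placed in ESRAM, the rest in DRAM.
table = PageTable()
base_vpn = 1000
resource_pages = 48 * 1024 * 1024 // PAGE_SIZE
hot_pages = 16 * 1024 * 1024 // PAGE_SIZE
for i in range(resource_pages):
    table.map_page(base_vpn + i, Pool.ESRAM if i < hot_pages else Pool.DRAM)

print(table.lookup(base_vpn * PAGE_SIZE))                # first page of the resource
print(table.lookup((base_vpn + hot_pages) * PAGE_SIZE))  # first DRAM-backed page
print(table.lookup(0))                                   # never mapped
```

In this model a single resource spans both pools behind one contiguous virtual range, which is one reading of "portions of resources could be using ESRAM". Note that only 32MB worth of pages can be ESRAM-backed at any one time, no matter how big the virtual address space is.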

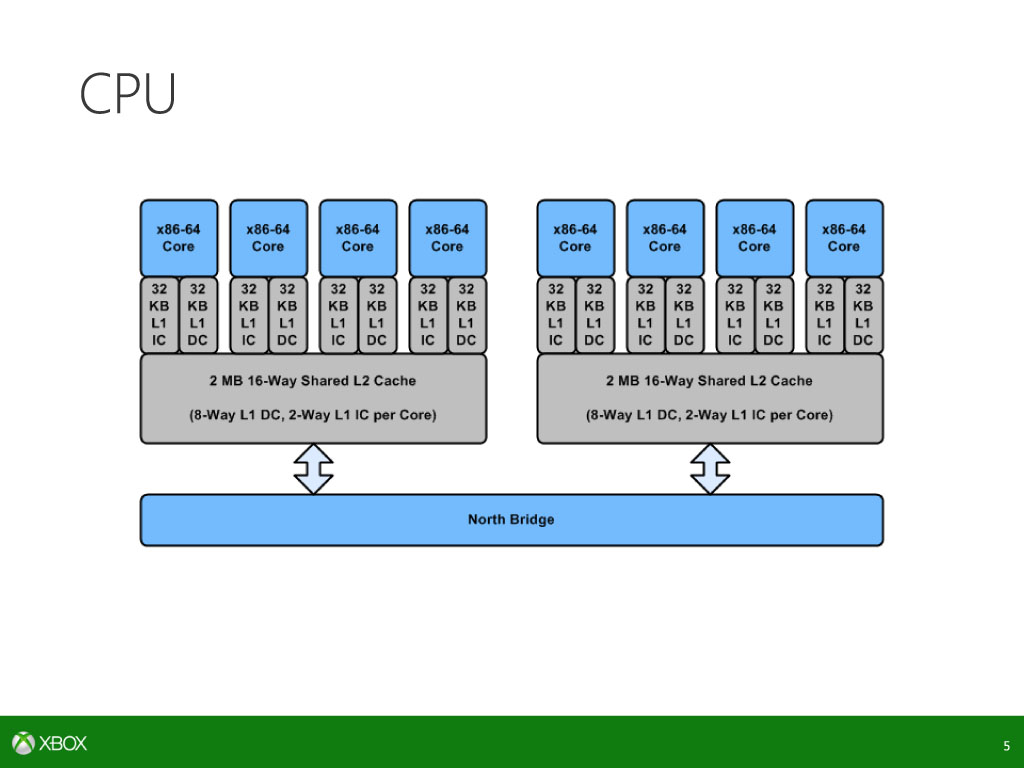

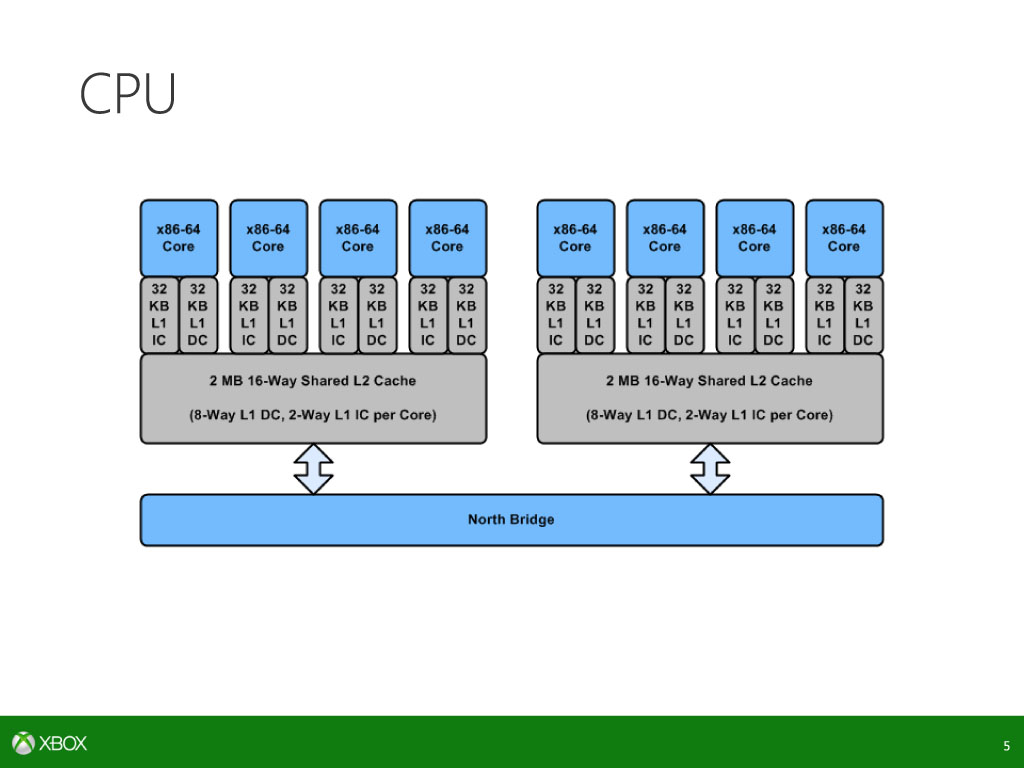

Interesting, was the cpu pic posted?

So they did not even go for an 8 core CPU and instead got pretty much 2 quad core CPUs and connected them via the NB.

That's the only way you can get 8 cores with Jaguar, PS4 achieves it the same way.

And now that I'm thinking about it, that portions of resources could be using ESRAM thing is pretty interesting now, as it sounds like it's trying to say that, thanks to the 1TB of virtual address space via the MMU, a lot more of a game's resources could actually be using ESRAM than what the physical memory size (32MB) would suggest is possible..

Anyway, pretty tired. Good night all.

No.

While googling around, I found a nice quote from 2009.

Seems like the tables have turned, with the PS4 being the (presumably) "easier to program for" console.

Really? Even for the OS? I thought flash storage was quick. Hence why so many use SSDs for their OS partitions.

I know the PS4 is also like that, I just do not know why.

If you go to all this trouble to make a custom SoC why not make sure the internal bus that connects the cores is large enough to have 8 of them.

Faster than conventional mechanical hard drives by a lot, slower than RAM by a lot.

It's based only on alpha transparency blending. So it's just contrived math.

It's like saying my car can do 250 MPH*

*When dropped off a cliff.

It doesn't have real-world performance like that.

If I'm not wrong AMD didn't have a native 8 core Jaguar CPU solution... so for 8 cores you need to go with 2 quad-core Jaguars.

Looking at it, I can see they will treat it like a turbo charged 360. ESRAM used as framebuffer, and for compute temp storage.

The one advantage the ESRAM has over the 360's EDRAM is that it's addressable, which means your post processing is going to be slicker than the PS4's by about 30GB/s, without stalls. That's a fair boost.

Man this is going to be so much more even than raw numbers can show I think.

This is interesting:

http://venturebeat.com/2013/08/26/m...se-details-are-critical-for-the-kind-of-expe/

Looks like the processing is what will be causing most of the latency with Kinect now.

I agree that the hUMA term is not very helpful since none of us seem to know the exact feature set that constitutes a hUMA architecture. In general, the slides align nicely with the leaked documents (after adapting the numbers to the new 853MHz clock). My take is:

- In both XB1/PS4 the GPU can access main memory by probing the CPU's L2 caches (cache-coherency)

- In both XB1/PS4 the GPU can (and must to achieve peak bandwidth) bypass the CPU's caches at will (no cache-coherency)

- ESRAM seems to be a GPU-only scratchpad with a dedicated address space and DMA support via the 4 move engines

- The PS4 seems to have a finegrained mechanism to bypass GPU cache lines (volatile tag) while the XB1 needs to flush the entire GPU cache

- XB1 has 2 GFX and 2 ACE processors, the PS4 has 2 GFX and 8 ACE processors which, in combination with the above-mentioned volatile tag, shows a bigger emphasis on GPGPU/HSA in general.

Big difference seems to be

- Xbox One has 30GB/s when probing caches, 68GB/s when not

- PS4 has 10GB/s when probing caches (using onion and onion+), 176GB/s when not.
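To put those four numbers side by side, here is a small Python sketch that turns them into transfer times for one buffer. It takes the bandwidth figures in the list above at face value as peak rates. The 16MB buffer size and the function name are arbitrary choices for illustration, and real transfers will not hit peak:

```python
# Peak rates in GB/s, as listed above.
BANDWIDTH_GB_S = {
    ("Xbox One", "probing CPU caches"): 30,
    ("Xbox One", "bypassing CPU caches"): 68,
    ("PS4", "probing CPU caches"): 10,
    ("PS4", "bypassing CPU caches"): 176,
}


def transfer_ms(size_bytes, gb_per_s):
    """Best-case time in milliseconds to move size_bytes at a peak rate."""
    return size_bytes / (gb_per_s * 1e9) * 1e3


BUFFER_BYTES = 16 * 1024 * 1024  # arbitrary example buffer

for (console, path), rate in BANDWIDTH_GB_S.items():
    ms = transfer_ms(BUFFER_BYTES, rate)
    print(f"{console:8s} {path:21s} {rate:4d} GB/s -> {ms:.3f} ms")
```

The gap between the coherent and non-coherent paths is much wider on PS4 (17.6x) than on Xbox One (about 2.3x), which fits the idea that on both machines the coherent path is meant for small shared data and not for bulk traffic.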