I read the information below in an article on RedGamingTech and have quoted from it.

The new data may shed some interesting light for the more technically minded GAF'ers speculating about "paritygate" on Assassin's Creed: Unity (1080p vs 900p resolution, and framerate).

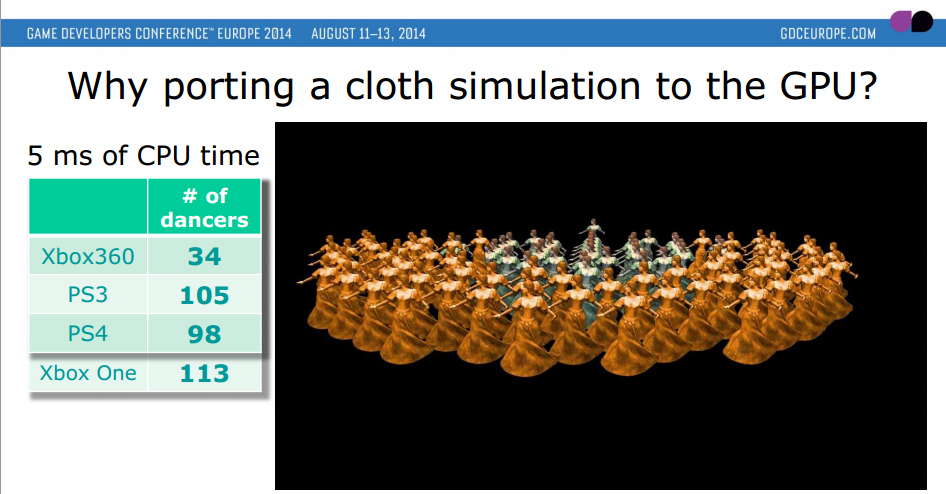

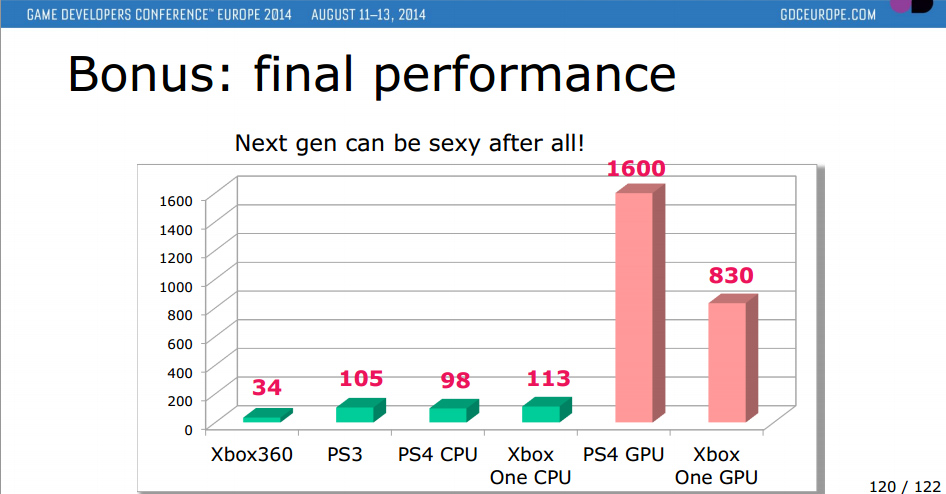

In summary, Ubisoft's cloth simulation benchmarks on both systems' CPUs and GPUs (graphs and data in the article) showed:

- X1: marginally faster CPU (due to its higher clock speed, 1.75GHz vs 1.6GHz)

- PS4: a significantly bigger GPU lead over the Xbox One than expected, nearly 100% faster in this benchmark.

- CPU + GPGPU management is essential and seems nowhere near optimal this early in the generation.

I highly recommend reading the article in its entirety, or checking out their video on the technical analysis, as there is a vast amount of information and data that can't be presented here without the graphs for proper context (I've probably left out some important info too!). Notably, this includes optimizing shaders and Compute Units in the GCN architecture, likely the lifeblood of next-gen development for offloading CPU tasks to the GPU in the coming years (a lot of which is over my head).

In the article, RedGamingTech discusses the presentation by Alexis Vaisse (Lead Programmer at Ubisoft Montpellier), in which he details CPU vs GPU performance on PS4 and X1, throwing in the previous generation for good measure.

RGT:

During this conference, Alexis pushed home the importance of reducing the workload on the new systems’ CPUs. Not only do Ubisoft show the relative GPU performance of the PS4 vs the Xbox One (which we cover at the end), but they also highlight the rather obvious performance differential between the AMD Jaguars that power both the PS4 and X1 and the previous-generation consoles (the PS3’s SPUs in particular were noted). So, with the preface out of the way, let’s get on with the show.

RGT:

In the above image, we can see the results of running cloth simulation on the system’s CPU. The previous generation, Microsoft’s Xbox 360 and Sony’s PlayStation 3, had very robust CPUs. Naughty Dog (along with many other developers) has often praised the PS3’s SPUs (Synergistic Processing Units), as they were in effect vector processors. They were in some ways a precursor to the PlayStation 4’s GPGPU structure. So the Jaguars lag behind: running the cloth simulation on them is too expensive.

You’ll notice the PlayStation 4’s CPU performs slightly worse than the Xbox One’s; this difference can be attributed to the raw clock speed difference between the two machines. Microsoft, as you might recall, bumped up the clock speed of its CPU (an AMD Jaguar in the same configuration as Sony’s: four cores per module, two modules total) from 1.6GHz to 1.75GHz. Sony didn’t do this, and despite plenty of speculation about the PS4’s CPU clock frequency, Sony eventually confirmed it had been left at 1.6GHz, giving Microsoft a slight advantage.
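The size of that advantage is easy to work out. A minimal Python sketch, assuming (as the article does) identical eight-core Jaguar configurations so that throughput scales linearly with clock speed; `clock_advantage` is a hypothetical helper, and real workloads rarely scale this cleanly:

```python
# CPU clock speeds quoted in the article, in GHz.
PS4_CPU_GHZ = 1.6
XB1_CPU_GHZ = 1.75


def clock_advantage(fast_ghz: float, slow_ghz: float) -> float:
    """Percentage by which fast_ghz exceeds slow_ghz.

    Assumption: same core count and microarchitecture on both sides,
    so peak throughput scales linearly with clock speed.
    """
    return (fast_ghz / slow_ghz - 1.0) * 100.0


if __name__ == "__main__":
    print(f"X1 CPU clock advantage: {clock_advantage(XB1_CPU_GHZ, PS4_CPU_GHZ):.1f}%")
```

That works out to roughly a 9.4 percent clock advantage for the X1, which fits the "marginally faster" result.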

The CPUs in both machines are relatively weak compared to their GPUs, so offloading work to the GPU remains the only logical choice. Ubisoft (like many other developers) are starting to offload a lot of work to the GPU. Its high shader count allows for SIMD (Single Instruction, Multiple Data) nirvana. Quite simply put, many of the processors inside the system’s GPU can be assigned to the same task, and they’ll work at it until it’s complete.

In essence, because of the massive discrepancy between CPU and GPU performance, if this isn’t done efficiently the CPU can still become bogged down just telling the GPU what to do.
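To make the SIMD idea concrete, here is a minimal Python sketch of a data-parallel cloth step. It assumes simple position Verlet integration along one axis, with no constraint solving; the article doesn't describe Ubisoft's actual solver, and `verlet_kernel` and `dispatch` are hypothetical names. The point is that every lane runs the same instructions on its own particle, which is what lets a GPU spread the work across hundreds of shaders:

```python
from typing import List


def verlet_kernel(lane: int, pos: List[float], prev: List[float],
                  accel: List[float], dt: float) -> float:
    """Work done by one lane: advance a single particle by one step.

    Position Verlet: x_next = 2 * x - x_prev + a * dt^2
    """
    return 2.0 * pos[lane] - prev[lane] + accel[lane] * dt * dt


def dispatch(pos: List[float], prev: List[float],
             accel: List[float], dt: float) -> List[float]:
    """Run the same kernel for every lane, as a compute dispatch would.

    Each lane only reads its own inputs and writes its own output, so on
    real hardware the lanes could all run at once; here they run in a loop.
    """
    return [verlet_kernel(i, pos, prev, accel, dt) for i in range(len(pos))]
```

Because no lane depends on another, the loop in `dispatch` is exactly the part a GPU replaces with parallel hardware.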

Their new approach came about after a lot of initial trouble. A single compute shader was used, rather than the CPU needing to dispatch to multiple different shaders. This cut down on both CPU use and memory problems. Up to 32 cloth items could now be handled with a single dispatch, which is clearly much more efficient than throwing fifty dispatches at the problem. CPU time is still very valuable, and in turn so is GPU time.
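The saving from batching is simple arithmetic. A rough Python sketch: the 32-items-per-dispatch limit comes from the article, while the per-dispatch CPU cost passed as `cost_per_dispatch_us` is a made-up placeholder, since the article gives no such figure:

```python
MAX_CLOTHS_PER_DISPATCH = 32  # limit quoted in the article


def dispatches_needed(cloth_items: int, per_dispatch: int) -> int:
    """Number of compute dispatches the CPU must issue (ceiling division)."""
    return (cloth_items + per_dispatch - 1) // per_dispatch


def cpu_dispatch_cost_us(cloth_items: int, per_dispatch: int,
                         cost_per_dispatch_us: float) -> float:
    """CPU time spent issuing dispatches, given a hypothetical fixed cost each."""
    return dispatches_needed(cloth_items, per_dispatch) * cost_per_dispatch_us


if __name__ == "__main__":
    for per_dispatch in (1, MAX_CLOTHS_PER_DISPATCH):
        n = dispatches_needed(50, per_dispatch)
        print(f"{per_dispatch} cloth item(s) per dispatch -> {n} dispatches")
```

Fifty cloth items drop from 50 dispatches to 2, so whatever the real per-dispatch overhead is, the CPU pays it 25 times less often.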

If you’ve been following the next generation since the initial launch rumors, you might recall much being made of the memory bandwidth situation in both consoles, with Sony clearly having the advantage. Although both consoles use the same memory bus width (256-bit), Sony opted for GDDR5 running at 5500MHz (effective), instead of Microsoft’s slower DDR3 running at 2133MHz. This gives the Xbox One about 68GB/s of bandwidth (counting the DDR3 alone) and the PS4 176GB/s.
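Those bandwidth figures follow directly from bus width and data rate: bytes per transfer times transfers per second. A short Python check, assuming the quoted 5500MHz and 2133MHz are effective transfer rates (MT/s), which is how GDDR5 and DDR3 speeds are usually quoted; it ignores the Xbox One's eSRAM:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mtps: float) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = data_rate_mtps * 1e6
    return bytes_per_transfer * transfers_per_second / 1e9


PS4_GBS = peak_bandwidth_gbs(256, 5500)  # GDDR5
XB1_GBS = peak_bandwidth_gbs(256, 2133)  # DDR3 only, eSRAM not counted

if __name__ == "__main__":
    print(f"PS4: {PS4_GBS:.1f} GB/s, Xbox One (DDR3): {XB1_GBS:.1f} GB/s")
```

This gives 176.0 GB/s and about 68.3 GB/s, matching the figures above.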

This isn’t so bad when you consider that Microsoft’s console has a third fewer shaders (768 vs 1152; put the other way, the PS4 has 50 percent more), which helps lessen its bandwidth requirement, although they do run at a slightly higher clock speed. Aside from this, the Xbox One uses the rather infamous eSRAM to make up for the deficit. But despite all of this, the PlayStation 4’s memory bandwidth isn’t endless. We’ve also since found out that the PS4’s memory latency, suspected to be an issue because of a myth about GDDR5 RAM, won’t be a problem.

I’m sure the above is what you’re all waiting for, and it makes a lot of sense. At the same time, though, the performance difference between the Xbox One’s GPU and the PlayStation 4’s GPU is actually larger than you might think. What Ubisoft do say is “PS4 – 2 ms of GPU time – 640 dancers”, but they give no equivalent metric for the Xbox One, which is a bit of a shame. It’s clear, however, that for the benchmark Ubisoft used here, the PS4’s GPU is virtually double the speed.
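Ubisoft's one hard number can be turned into a throughput figure. A hedged Python sketch: it assumes cost scales linearly with dancer count, and the `relative_speed=0.5` case simply plugs in the article's "virtually double" estimate. Ubisoft published no Xbox One figure, so that result is illustrative only, and `dancers_in_budget` is a hypothetical helper:

```python
# Ubisoft slide: PS4, 2 ms of GPU time, 640 dancers.
PS4_BUDGET_MS = 2.0
PS4_DANCERS = 640

PS4_DANCERS_PER_MS = PS4_DANCERS / PS4_BUDGET_MS  # 320 dancers per ms


def dancers_in_budget(budget_ms: float, relative_speed: float = 1.0) -> int:
    """Dancers that fit in a GPU time budget, rounded down.

    relative_speed scales throughput against the PS4 (0.5 = half as fast).
    Assumes cost grows linearly with the number of dancers.
    """
    return int(budget_ms * PS4_DANCERS_PER_MS * relative_speed)
```

At the PS4's rate each millisecond buys 320 dancers; a GPU running at half that speed would fit about 320 in the same 2 ms budget.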

With a little bit of guesswork, it’s likely down to a few reasons. The first is pure shader power: 1.84 TFLOPS vs 1.31 TFLOPS. The second is the memory bandwidth equation, and the third is the more robust compute structure of the PlayStation 4. The additional ACEs (Asynchronous Compute Engines) inside the PS4’s GPU help a lot with task scheduling and generally speed up the compute and graphics commands issued to the shaders. The improvement in the ACEs is mostly because Sony (so the story goes) knew the future of this console generation was compute and requested changes to the GPU, so there are many similarities between the PS4’s GPU and AMD’s Volcanic Islands.
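The TFLOPS figures come from shader count times clock speed times two, because each GCN shader ALU can issue one fused multiply-add (two floating-point operations) per clock. A small Python sketch; the compute-unit counts and GPU clocks (18 CUs at 800MHz for PS4, 12 CUs at 853MHz for Xbox One, 64 ALUs per CU) are the consoles' publicly stated specs rather than figures from the article:

```python
ALUS_PER_CU = 64         # GCN compute unit: 4 SIMD16 units
FLOPS_PER_ALU_CLOCK = 2  # one fused multiply-add per clock


def peak_tflops(compute_units: int, clock_mhz: float) -> float:
    """Theoretical peak single-precision TFLOPS for a GCN GPU."""
    alus = compute_units * ALUS_PER_CU
    return alus * FLOPS_PER_ALU_CLOCK * clock_mhz * 1e6 / 1e12


PS4_TFLOPS = peak_tflops(18, 800)  # 1152 ALUs
XB1_TFLOPS = peak_tflops(12, 853)  # 768 ALUs

if __name__ == "__main__":
    print(f"PS4: {PS4_TFLOPS:.2f} TFLOPS, Xbox One: {XB1_TFLOPS:.2f} TFLOPS")
    print(f"PS4 advantage: {(PS4_TFLOPS / XB1_TFLOPS - 1) * 100:.0f}%")
```

On paper that is roughly a 41 percent shader advantage, which on its own falls short of a near-2x gap; hence the other suspects listed above.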

Another possible contributor is the PS4’s robust bus structure, or finally the so-called ‘Volatile Bit’.

Either way, it’s clearly a performance win on the GPU side for Sony. So parity could in some ways be a PR effort, or in some cases, perhaps the relatively weak CPU in both machines is holding everything back.

It’s unfortunate Ubisoft were rather quiet on the Xbox One front. We do have a little information, for example from Microsoft and AMD’s Developer Day Conference, but other than that we’re left with precious little info. It remains to be seen how much DX12 will change the formula. But for right now, gamers are still going to want answers about the reasons for ‘parity’.

Full article here, with many more infographics from Ubisoft:

http://www.redgamingtech.com/ubisoft-gdc-presentation-of-ps4-x1-gpu-cpu-performance/

Recent interview with Ubi:

"Technically we're CPU-bound. The GPUs are really powerful, obviously the graphics look pretty good, but it's the CPU [that] has to process the AI, the number of NPCs we have on screen, all these systems running in parallel.

"We were quickly bottlenecked by that and it was a bit frustrating," he continued, "because we thought that this was going to be a tenfold improvement over everything AI-wise, and we realised it was going to be pretty hard. It's not the number of polygons that affect the framerate. We could be running at 100fps if it was just graphics, but because of AI, we're still limited to 30 frames per second."
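The quote boils down to a simple rule: when CPU and GPU work are pipelined, the slower of the two sets the frame time. A minimal Python sketch of that model; the 10 ms GPU and roughly 33 ms CPU figures are just the quote's 100fps and 30fps converted to milliseconds, and real engines have more overlap and stalls than this assumes:

```python
def frame_rate(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when CPU and GPU work overlap in a pipeline.

    Simplifying assumption: each frame costs max(cpu_ms, gpu_ms), so
    the slower processor is the bottleneck.
    """
    return 1000.0 / max(cpu_ms, gpu_ms)


GPU_MS = 1000.0 / 100  # "we could be running at 100fps if it was just graphics"
CPU_MS = 1000.0 / 30   # AI, NPCs and other systems running in parallel

if __name__ == "__main__":
    print(f"{frame_rate(CPU_MS, GPU_MS):.0f} fps")  # CPU-bound
```

In this model, making the GPU faster changes nothing until the CPU side drops below the GPU's 10 ms.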

From RGT:

With the PlayStation 4’s compute, much of the work is down to the developer. This means the developer has to control the syncing of data. This has been mentioned a few times previously by other developers and is crucial to ensuring good performance. Quite simply, not only do you need to make sure the correct data is being worked on by either the CPU or GPU, but also that the data is being processed in the correct order.

Careful mapping and usage of memory and caches, and careful management of data, are crucial. Even having one of the CPU cores in module 0 access data in the cache of module 1 can result in a large hit to performance, as discussed in our SINFO analysis (based on a lecture from Naughty Dog).

Sucker Punch themselves have said (regarding running particles on GPGPU) that you could use a “fancy API to prioritize, but we don’t”. Unfortunately, a lot of CPU time is still eaten up by particles and compute, as it’s down to the CPU to set up and dispatch the compute tasks.

Ubisoft actually suggest that you write ten versions of your feature. It’s very hard to know which version is going to work best, due to the way the compiler works on these systems. This is likely due to the more out-of-order nature of the Jaguar processors, as opposed to the in-order execution of the CPUs at the heart of both the PlayStation 3 and Xbox 360. Therefore, much of the time you’re left with little choice but to go through a rather manual testing phase: write ten or so versions of the code, test each of them in turn, and see which one (if any) works best. If you find one that’s acceptable (in terms of performance and compute time), then you have a winner.
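The ordering problem can be sketched as a producer and a consumer. In this minimal Python model, a thread standing in for the CPU finishes writing each frame's data before submitting it, and a worker standing in for the GPU consumes submissions strictly in order. `queue.Queue` plays the role of the synchronisation point; on the consoles this is done with GPU-side fences and command buffers, which this sketch does not attempt to model, and `run_frames` is a hypothetical name:

```python
import queue
import threading
from typing import List, Tuple


def run_frames(num_frames: int) -> List[Tuple[int, int]]:
    """CPU side writes each frame's data, then submits it; the 'GPU'
    worker never sees data before it has been fully written, and
    processes frames in submission order (FIFO)."""
    submitted: queue.Queue = queue.Queue()
    results: List[Tuple[int, int]] = []

    def gpu_worker() -> None:
        while True:
            item = submitted.get()  # blocks until the CPU submits work
            if item is None:        # sentinel: no more frames
                break
            frame, data = item
            results.append((frame, sum(data)))

    worker = threading.Thread(target=gpu_worker)
    worker.start()
    for frame in range(num_frames):
        data = [frame] * 4            # finish writing before submitting
        submitted.put((frame, data))  # hand-off acts as the sync point
    submitted.put(None)
    worker.join()
    return results
```

Submitting data before it is fully written, or letting the consumer run ahead of the producer, is exactly the class of bug the developer has to guard against manually.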
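That write-several-versions-and-measure loop is easy to automate. A minimal Python sketch using the standard `timeit` module: three hypothetical variants of a toy feature (a sum of squares) stand in for Ubisoft's ten, each is first checked against the others for the same result, then timed, keeping the best of several runs to reduce noise. Python timings say nothing about how code fares on a Jaguar; only the selection process carries over:

```python
import timeit
from typing import Callable, Dict, List, Tuple

Variant = Callable[[List[int]], int]


def variant_loop(values: List[int]) -> int:
    total = 0
    for v in values:
        total += v * v
    return total


def variant_generator(values: List[int]) -> int:
    return sum(v * v for v in values)


def variant_map(values: List[int]) -> int:
    return sum(map(lambda v: v * v, values))


VARIANTS: Dict[str, Variant] = {
    "loop": variant_loop,
    "generator": variant_generator,
    "map": variant_map,
}


def pick_fastest(variants: Dict[str, Variant], data: List[int],
                 repeat: int = 5, number: int = 200) -> Tuple[str, Dict[str, float]]:
    """Verify all variants agree, time each, return the fastest name."""
    expected = None
    timings: Dict[str, float] = {}
    for name, fn in variants.items():
        result = fn(data)
        if expected is None:
            expected = result
        elif result != expected:
            raise ValueError(f"variant {name!r} gives a different result")
        # Best of several repeats filters out noise from other processes.
        runs = timeit.repeat(lambda fn=fn: fn(data), repeat=repeat, number=number)
        timings[name] = min(runs)
    return min(timings, key=timings.__getitem__), timings


if __name__ == "__main__":
    winner, timings = pick_fastest(VARIANTS, list(range(10_000)))
    for name, seconds in sorted(timings.items(), key=lambda kv: kv[1]):
        print(f"{name:>10}: {seconds:.4f} s")
    print("winner:", winner)
```

Checking correctness before timing matters: a fast variant that gives the wrong answer should never be picked as the winner.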