Ehhh, kind of. There are a lot of times where GPU utilization, even when just rendering stuff, is actually fairly poor. You can just be transforming and shading triangles and have the GPU working a whole bunch, but in practice only half of the GPU is being used, because of...

reasons.

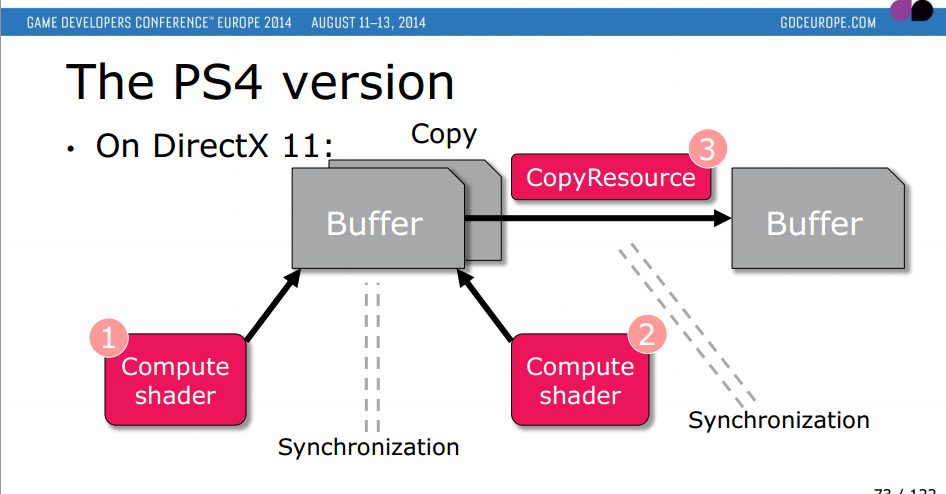

The whole idea behind "asynchronous compute" on the GPU is to fill up that other half with other work while parts of the GPU are underutilized, and up to a point (and in some regards) it actually IS a free lunch. The point of having multiple "async compute contexts" on the PS4 and XB1 (and other GCN-based GPUs, via Mantle) is to give the GPU the opportunity to ignite that dark silicon with some other tasks when it's available.
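To make the "fill up the other half" idea concrete, here's a toy model in Python. It is NOT how any real GPU scheduler works; it assumes (hypothetically) that a frame's graphics work is a list of phases that each keep some number of compute units (CUs) busy, that a compute job is just a pile of CU-milliseconds that splits perfectly across any idle CUs, and that the compute job never slows the graphics work down. That last assumption is exactly the "free lunch" part, and it's only true up to a point (in reality both workloads fight over memory bandwidth, caches, and registers).

```python
from dataclasses import dataclass


@dataclass
class Phase:
    duration_ms: float  # how long this graphics phase lasts
    busy_cus: int       # compute units the graphics work actually keeps busy


def serial_time(phases, total_cus, compute_work):
    """Graphics runs alone, then the compute job gets the whole GPU."""
    graphics_ms = sum(p.duration_ms for p in phases)
    return graphics_ms + compute_work / total_cus


def async_time(phases, total_cus, compute_work):
    """Compute soaks up idle CUs during each graphics phase; leftovers run after.

    compute_work is measured in CU-milliseconds. Assumes graphics timing is
    unaffected by the overlapping compute work (the idealized case).
    """
    remaining = compute_work
    elapsed = 0.0
    for p in phases:
        idle_cus = total_cus - p.busy_cus
        # Idle CUs chew through compute work for the length of this phase.
        remaining = max(0.0, remaining - idle_cus * p.duration_ms)
        elapsed += p.duration_ms
    # Whatever compute work is left gets the full GPU once graphics is done.
    return elapsed + remaining / total_cus


# Hypothetical 18-CU GPU: two phases only half-occupy it, one saturates it.
frame = [Phase(4.0, 9), Phase(2.0, 18), Phase(4.0, 9)]
print(serial_time(frame, 18, 90.0))  # 15.0
print(async_time(frame, 18, 90.0))   # 11.0
```

With these made-up numbers the same frame plus compute job drops from 15 ms to 11 ms, purely because the compute work ran on CUs the graphics work wasn't using anyway.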

These two presentations, on the implementation of tessellation/subdivision in Call of Duty: Ghosts and on voxel cone tracing for The Tomorrow Children, briefly touch on the subject at the end:

http://advances.realtimerendering.c..._2014_tessellation_in_call_of_duty_ghosts.pdf

http://fumufumu.q-games.com/archives/Cascaded_Voxel_Cone_Tracing_final_speaker_notes.pdf

If you're a real tech hound, you're going to hear a lot more about "async compute" in the coming years.