Netherscourge


Nvidia FLOPS and AMD FLOPS are not anywhere near the same. AMD FLOPS are generally much higher for the same performance as Nvidia's.

Well, bring on the benchmarks!

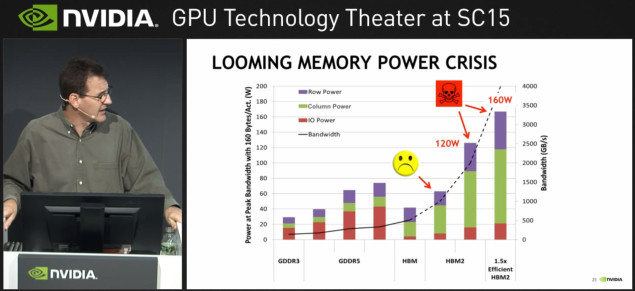

HBM isn't capable of more than 4GB per GPU, so 8GB total is the max for this card. HBM2 allows more memory per GPU, but that tech isn't ready yet.
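
A rough sketch of where the 4GB ceiling comes from. The figures here are assumptions based on first-generation HBM as used on AMD's Fiji GPU: 4-Hi stacks of 2Gb DRAM dies (1GB per stack) and four stacks per GPU.

```python
# Assumed first-generation HBM figures (as on AMD's Fiji GPU):
DIES_PER_STACK = 4   # 4-Hi stack
GBIT_PER_DIE = 2     # 2 Gb DRAM dies
STACKS_PER_GPU = 4   # four stacks placed around the GPU die
GPUS_PER_CARD = 2    # dual-GPU card


def hbm1_capacity_gb(stacks: int = STACKS_PER_GPU) -> float:
    """Capacity in GB for one GPU: dies x Gbit per die / 8 bits per byte, times stacks."""
    per_stack_gb = DIES_PER_STACK * GBIT_PER_DIE / 8
    return per_stack_gb * stacks


per_gpu = hbm1_capacity_gb()
total = per_gpu * GPUS_PER_CARD
print(f"{per_gpu:.0f} GB per GPU, {total:.0f} GB on the card")
```

Note that the 8GB total is split as 4GB attached to each GPU, not one shared 8GB pool.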

Well, bring on the benchmarks!

It's a fact, buddy. Find two equivalent cards from AMD and NVIDIA and check Wikipedia for their FLOPS figures. The difference is usually about 1.2x.

Dual GPU/Crossfire on a single card?

So if games don't support Crossfire, will it still work?

Curious to see how this does at 4K with only 4 gigs.

Also, HBM has overall higher bandwidth than GDDR5, so 4GB of HBM is significantly faster than 4GB of GDDR5, despite being the same amount of memory.
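
The bandwidth gap can be sketched with the usual peak-bandwidth formula (bus width times per-pin data rate, divided by 8). The numbers are assumptions for illustration: first-gen HBM on a 4096-bit interface at 1 Gbit/s per pin, versus a hypothetical 4GB GDDR5 card on a 256-bit bus at 7 Gbit/s per pin.

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * gbps_per_pin / 8


# Assumed figures: wide-and-slow HBM vs. narrow-and-fast GDDR5.
hbm = peak_bandwidth_gbs(4096, 1.0)   # hypothetical first-gen HBM config
gddr5 = peak_bandwidth_gbs(256, 7.0)  # hypothetical 4GB GDDR5 card

print(f"HBM: {hbm:.0f} GB/s, GDDR5: {gddr5:.0f} GB/s, ratio {hbm / gddr5:.2f}x")
```

This is peak bandwidth only; it changes how fast data moves, not how much fits in 4GB.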

In many forums I've read, be it AnandTech or HardOCP, some folks still think 4GB of HBM is one-to-one with 4GB of GDDR5 in terms of performance.

So I kind of don't get it when people go "meh" at 4GB of HBM, especially considering the limits of the first revision of HBM. Now, if this were 4GB of GDDR5, that would be a different matter entirely.

Goddamn, video card prices have spiralled out of control in the last decade.

Nope. Nvidia's SLI is bad enough. Going to Crossfire is just rubbing salt into the wound.

How many GameCubes duct-taped together is this?

Just look at the R7 370 (1024 shading units) vs. the GTX 950 (768), with the 950 being close to 20% faster in most games.
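
A back-of-the-envelope check using the standard theoretical peak formula (shaders x 2 FLOPs per fused multiply-add x clock). The shader counts and the ~20% figure come from the post above; the clocks are assumed reference values (about 975 MHz for the R7 370 and about 1188 MHz boost for the GTX 950).

```python
def peak_fp32_gflops(shader_units: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 GFLOPS: one FMA (2 FLOPs) per shader per clock."""
    return shader_units * 2 * clock_ghz


# Shader counts from the post; clocks are assumed reference values.
r7_370 = peak_fp32_gflops(1024, 0.975)  # assumed ~975 MHz
gtx_950 = peak_fp32_gflops(768, 1.188)  # assumed ~1188 MHz boost

# The post reports the GTX 950 as roughly 20% faster in games.
GAME_SPEEDUP_950 = 1.20

flops_ratio = r7_370 / gtx_950
flops_per_perf_ratio = flops_ratio * GAME_SPEEDUP_950

print(f"R7 370: {r7_370:.0f} GFLOPS, GTX 950: {gtx_950:.0f} GFLOPS")
print(f"Paper FLOPS per unit of game performance, AMD vs Nvidia: {flops_per_perf_ratio:.2f}x")
```

Under these assumptions the AMD card has more paper FLOPS while being slower in games, which is the gap the earlier posts are arguing about.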

I used to think the $300 I spent on my 9700 Pro was ridiculous because that's what a console cost. Then later I figured $500 was the top-of-the-line limit when I got my GTX 680. Now I'm expecting to drop $600-700 this year for the "sorta the best for a few months" card.

My PC build cost me $1500. I guess it's for the VR enthusiast?

No, Crossfire doesn't work for VR

That price is crazy. I'm starting to think I should just go with a mid range card and upgrade every couple of years.

No, Crossfire doesn't work for VR

It's actually perfectly suited to VR. The SteamVR benchmark makes use of LiquidVR MultiGPU rendering tech.

SLI / Crossfire for other stuff though is a garbage fire.

Man, all that overkill hardware and he uses a budget 850 EVO lol

So if 2018 is already bringing some sort of "Next Gen Memory", does that mean we're essentially only gonna get a single generation of HBM2 cards (2017)?

That doesn't sound very cost-effective at all.

It's mostly for people who want CF but only have one slot (one card = 2 GPUs) or two slots (quad-GPU setup). Very few people fit that, so it's made in very limited quantity, and you don't have any choice but to pay the extra cost.

This is exactly like the 295X2 from AMD that was released in 2014 (15?).

Two GPUs on one card, working in Crossfire.

I used to have that card. I sold it and got a 980 Ti. While in general the performance of the two GPUs WHEN they work is much better than the 980 Ti, in most cases it was only working as a single GPU for me.

AMD is very slow and way behind when it comes to updating their Crossfire profiles. I am never gonna buy a Crossfire AMD card ever again.

Also, what's with the stupid price? You can buy two Fury X cards and save like $200 over this.

Standard enthusiast PC case in a few years:

And...? That thing has 500MB/s read/write; even their most modern SSD isn't that much faster unless you go M.2 NVMe.
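
Why SATA drives top out around there, as a quick sketch: SATA III signals at 6 Gbit/s but uses 8b/10b encoding, so only 8 of every 10 line bits carry data. The 500MB/s figure is from the post; treat the rest as a simplified model that ignores protocol overhead beyond the line encoding.

```python
def sata_payload_mb_s(line_rate_gbit: float = 6.0) -> float:
    """Usable SATA bandwidth in MB/s after 8b/10b encoding (8 data bits per 10 line bits)."""
    line_rate_mbit = line_rate_gbit * 1000
    data_mbit = line_rate_mbit * 8 / 10  # strip encoding overhead
    return data_mbit / 8                 # bits -> bytes


ceiling = sata_payload_mb_s()
drive_mb_s = 500  # read/write figure quoted in the post
print(f"SATA III ceiling: {ceiling:.0f} MB/s, drive uses {drive_mb_s / ceiling:.0%} of it")
```

M.2 drives that use NVMe over PCIe aren't bound by this ceiling; M.2 SATA drives still are.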

How much power is it in DBZ terms?

Guys/Gals, this is a workstation GPU. Considering that, $1500 for 16 TFLOPS is pretty damn nuts for the price.
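
A rough price-per-TFLOPS comparison, using the $1500 / 16 TFLOPS figures from this post against the "two Fury X" alternative mentioned earlier in the thread. The Fury X numbers are assumptions: a $649 launch price and about 8.6 TFLOPS each (4096 shaders x 2 FLOPs x 1.05 GHz).

```python
def dollars_per_tflops(price_usd: float, tflops: float) -> float:
    """Price divided by theoretical peak FP32 TFLOPS."""
    return price_usd / tflops


# From the thread: $1500 for ~16 TFLOPS on one dual-GPU card.
dual_gpu_card = dollars_per_tflops(1500, 16)

# Assumed: two Fury X cards at $649 each, 4096 shaders x 2 FLOPs x 1.05 GHz each.
fury_x_tflops = 4096 * 2 * 1.05 / 1000
fury_x_pair = dollars_per_tflops(2 * 649, 2 * fury_x_tflops)

print(f"Dual-GPU card: ${dual_gpu_card:.2f}/TFLOPS, two Fury X: ${fury_x_pair:.2f}/TFLOPS")
```

On paper FLOPS per dollar alone, the two separate cards come out cheaper; what the single card adds is the one-slot dual-GPU form factor discussed above.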