Fucking thank you. The "bigger number = better game" mindset is annoying. You can see it in the complaints that the NX's screen will "only" be 720p, which is apparently terrible, despite it having a dot pitch fine enough that individual pixels will be indistinguishable to the eye at a reasonable viewing distance. Its ppi is slightly higher than the Vita's, and its screen is both larger and higher resolution, so the extra resolution will be used either to make things bigger or to show more than developers could fit on the Vita.
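For anyone who wants to check the ppi claim, here's a quick Python sketch. The panel specs are my assumptions (a 6.2" 1280×720 panel for the NX, the original Vita's 5" 960×544 panel), as is the common ~1 arcminute visual acuity rule of thumb used to estimate how far away you need to be before individual pixels stop being resolvable. The helper names are just for illustration.

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal length in pixels divided by diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


def min_distance_in(ppi_value: float, acuity_arcmin: float = 1.0) -> float:
    """Distance (inches) beyond which one pixel subtends less than `acuity_arcmin`.

    Assumes the ~1 arcminute rule of thumb for 20/20 vision.
    """
    pixel_pitch_in = 1.0 / ppi_value
    angle_rad = math.radians(acuity_arcmin / 60.0)
    return pixel_pitch_in / math.tan(angle_rad)


# Assumed panel specs: (width px, height px, diagonal inches).
screens = {
    "NX (assumed 6.2in 1280x720)": (1280, 720, 6.2),
    "PS Vita (5in 960x544)": (960, 544, 5.0),
}

for name, (w, h, diag) in screens.items():
    density = ppi(w, h, diag)
    print(f"{name}: {density:.0f} ppi, pixels blend beyond ~{min_distance_in(density):.1f} in")
```

With those assumptions it works out to roughly 237 ppi for the NX versus about 221 ppi for the Vita, with pixels blending together beyond roughly 14.5 inches on the NX, which is closer than most people hold a handheld.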

It's the same story with phones. 1440p sounds nice in theory, but 1) the phone ends up rendering extra pixels the eye can't distinguish, and 2) many OLED screens use a PenTile subpixel layout, where each pixel gets its own green subpixel but shares red and blue subpixels with its neighbours, which gives an effective resolution of only around 2/3 to 3/4 of the nominal pixel count. This is what causes the "brick"-like pattern you see on headsets like the HTC Vive and Oculus Rift, which is absent on PS VR even though PS VR has a lower-resolution panel (it uses a full RGB subpixel layout instead).
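A rough subpixel count makes the PenTile point concrete. This uses a simplified model I'm assuming (full RGB stripe = 3 subpixels per pixel, PenTile RGBG = 2), and the per-eye panel resolutions (1080×1200 for the Vive/Rift, 960×1080 for PS VR) are the commonly quoted specs rather than anything stated above. The function names are hypothetical.

```python
def pixel_count(width: int, height: int) -> int:
    return width * height


def subpixels_rgb_stripe(width: int, height: int) -> int:
    # Full RGB stripe: every pixel owns its own red, green and blue subpixel.
    return 3 * width * height


def subpixels_pentile_rgbg(width: int, height: int) -> int:
    # Simplified PenTile RGBG: every pixel has its own green subpixel,
    # but red and blue alternate, so each pixel owns only one of them.
    return 2 * width * height


# Phones: nominal pixel ratio of 1440p vs 1080p.
qhd_vs_fhd = pixel_count(2560, 1440) / pixel_count(1920, 1080)

# VR, per eye (assumed specs): PenTile Vive/Rift vs full-RGB PS VR.
vive_rift_subpixels = subpixels_pentile_rgbg(1080, 1200)
psvr_subpixels = subpixels_rgb_stripe(960, 1080)

print(f"1440p has {qhd_vs_fhd:.2f}x the pixels of 1080p")
print(f"Vive/Rift subpixels per eye: {vive_rift_subpixels:,}")
print(f"PS VR subpixels per eye:     {psvr_subpixels:,}")
```

Under that model a 1440p phone has about 1.78× the pixels of 1080p, and PS VR ends up with more subpixels per eye (3,110,400) than the Vive or Rift (2,592,000) despite having fewer pixels.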

But yeah, the metric seems to be how big the number is rather than how the display performs in practice. Sure, the iPhone 7 might "only" be 750p, but 1) the pixel count is fine for the majority of viewing situations, 2) the device renders at a sensible resolution for performance and battery life, and 3) it's the first phone display to accurately support both the sRGB and the wider DCI-P3 colour gamuts, switching between them automatically depending on the content being shown so that sRGB content isn't oversaturated. But of course that's not worth applauding or buying the device for, because it's not a bigger number like 1440p.
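Two quick numbers behind that paragraph, as a sketch: how many more pixels a 1440p phone has to push per frame compared with the iPhone 7's 1334×750 panel, and how much bigger the DCI-P3 gamut triangle is than sRGB's in the CIE 1931 xy chromaticity diagram. The primaries are the standard published values; treating render cost as simply proportional to pixel count is a simplifying assumption on my part.

```python
def shoelace_area(points: list[tuple[float, float]]) -> float:
    """Area of a polygon from its vertices, using the shoelace formula."""
    n = len(points)
    total = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


# CIE 1931 xy chromaticities of the red, green and blue primaries.
SRGB = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]
DCI_P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]

# Pixels rendered per frame (assumes cost scales with pixel count).
iphone7_pixels = 1334 * 750
qhd_pixels = 2560 * 1440

print(f"1440p renders {qhd_pixels / iphone7_pixels:.1f}x the pixels of 1334x750")
print(f"P3 / sRGB gamut area in xy: {shoelace_area(DCI_P3) / shoelace_area(SRGB):.2f}")
```

That comes out to about 3.7× the pixels per frame, and a P3 triangle roughly 36% larger than sRGB's. The exact gamut percentage depends on which chromaticity diagram you measure in, so treat it as ballpark. It's also why colour management matters: if sRGB values were sent straight to a P3 panel's primaries, they'd be stretched over the larger triangle and look oversaturated.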

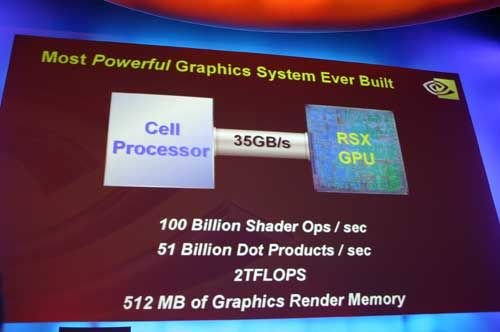

Too many people are jacked up about the numbers, especially resolution. Resolution is only one piece of the puzzle when evaluating hardware, and that's truer of handhelds than anything else.