Nintendo Switch: Powered by Custom Nvidia Tegra Chip (Official)

- Thread starter ekim

Dark Cloud

Member

So are we all assuming that games running while docked will run better than on tablet screen?

Yes.

Either that's the case or you have active cooling all the time and in that case you have the full power on anyhow.

Rogue Agent

Banned

In the future, what kind of power would Switch have if it upgraded to Volta architecture? Any estimates, or is it too early to even speculate?

To be honest, even some Vita games (Killzone!) look better than 99% of games available on mobile today. GPU prowess doesn't really matter on phones, they will never use it to full potential.

This will change now that the Metal and Vulkan APIs are available for iOS and Android respectively.

So are we all assuming that games running while docked will run better than on tablet screen?

It's just like some laptops that both disable their discrete GPU and use a power-saving CPU governor when the battery becomes the main power source. The CPU performance probably won't be sacrificed when the Switch goes into portable mode, but the GPU will probably be limited in some way.

I have my doubts, but Laura Dale has been pretty spot-on so far.

I just think it would be more hassle than it would be worth.

Smiles and Cries

Member

In the future, what kind of power would Switch have if it upgraded to Volta architecture? Any estimates, or is it too early to even speculate?

too early but hope they build in easy hardware upgrades that consider the roadmap

dr_rus

Member

But seriously, what are the odds of 1080P in dock mode for at least first-party games?

Exclusive games should be able to run in 1080p, no reason why they can't. It is a decision of a dev house though if they want a higher resolution or more complex shading.

Smiles and Cries

Member

But seriously, what are the odds of 1080P in dock mode for at least first-party games?

If Nintendo had some 1080P games on Wii U, I would expect more for NS, not less.

In the future, what kind of power would Switch have if it upgraded to Volta architecture? Any estimates, or is it too early to even speculate?

Man the concept of this console is so cool i want them to never drop it, and just upgrade it with better hardware.

Rogue Agent

Banned

too early but hope they build in easy hardware upgrades that considers the roadmap

I hope so too.

Man the concept of this console is so cool i want them to never drop it, and just upgrade it with better hardware.

Yeah, I really hope this is marketed well and sells well - then it just keeps going towards this upgrade path if that's possible.

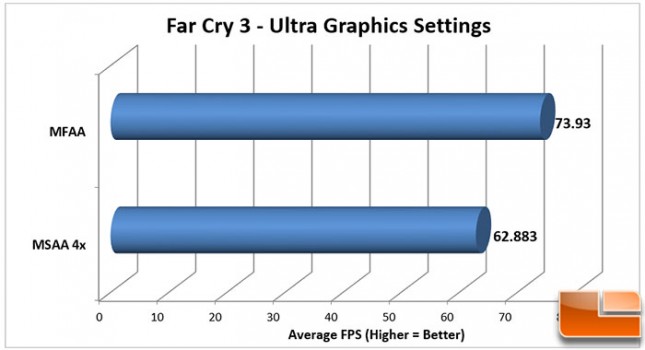

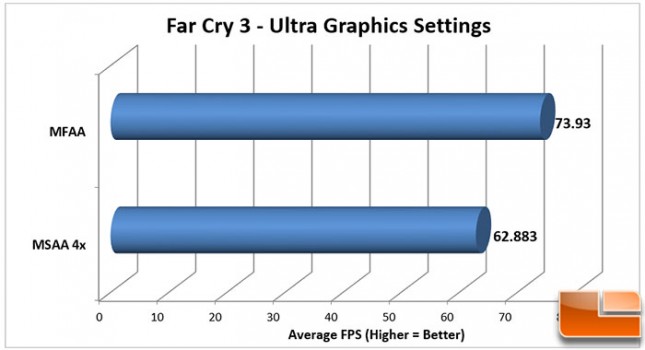

So here's a question for you guys. What are the chances that docking just has the system add 2 passes of Multi-Frame Anti-Aliasing to whatever it is rendered at?

According to Nvidia it seems to be part of the architecture(?) for their newer Tegra GPUs. https://shield.nvidia.com/blog/tegra-x1-processor-and-shield

I've been looking up comparisons and 2 passes of MFAA seem to be equivalent to 4 passes of MSAA at an incredibly marginal performance hit

Which could mean even 720P games would look great when blown up.

^ You don't transparently add any form of sampling-based AA.

If there is a clock bump when docked, then I expect it to be up to developers how to use it, and thus the effect to differ per-game.

So are we all assuming that games running while docked will run better than on tablet screen?

I'm not assuming that. I think it's a possibility.

Good luck gathering families around a PC to play Mario Party PC edition.

Not inherently any more difficult than gathering around any other device.

A "PC" can ship in any form factor a console can (and more), it can use any type of input device a console can (and more), and it can use the same types of output devices (and more).

Nintendo transitioning to PC when going third-party is a funny dream scenario.

At the very least, including PC in their target platforms as a pure software developer makes more sense than including other vendors' consoles. On PC, they keep 100% of their digital revenue, and a significantly larger part of retail revenue.

I don't think that this is a particularly likely scenario, but I also don't see a reason to consider it less likely than them going third party on any other non-smartphone platform.

mckmas8808

Mckmaster uses MasterCard to buy Slave drives

Does anybody know how much RAM will be in the Switch?

A "PC" can ship in any form factor a console can (and more), it can use any type of input device a console can (and more), and it can use the same types of output devices (and more).

At the very least, including PC in their target platforms as a pure software developer makes more sense than including other vendors' consoles. On PC, they keep 100% of their digital revenue, and a significantly larger part of retail revenue.

I don't think that this is a particularly likely scenario, but I also don't see a reason to consider it less likely than them going third party on any other non-smartphone platform.

Agreed. Knowing how Nintendo operates, it's not hard to imagine a scenario where they'd open a Windows eShop storefront and sell USB Classic, Pro and Wii controllers in stores, for example. They want their own platform if at all possible and they could still essentially have that on PC.

Thelonelykoopa

Member

Does anybody know how much RAM will be in the Switch?

No, not at the moment. I'm hoping for more than 4 gigs.

Yes, for such a hypothetical split that would be correct. Of course it all comes down to the split. The assessment of that could be tricky, but let's try to hypothesize:

1. Vertex positions generally need maximum precision - 1-unit errors in viewport coords can cause high-visibility high-freq artifacts - shimmerings, etc. So all vertex pos transformations are better left in fp32.

2. Likewise with geometry shaders - while some base computations could be done in fp16, the eventual MVP transform is best left in fp32 - i.e. all geometry-shaded vertices have to eventually pass a fp32 MVP.

3. Everything that ends up as shading though is very error-resilient - starting from normal transformations for lambertian terms, color blends and factorisations - pretty much everything which is not a tex coord for a dependent texture read could go as fp16. And then even some tex coords could go fp16, given a sufficiently-low tex res.

4. An exception to (3) are shadows - PCF shadows, etc, could exhibit crawling artifacts and/or perforations if stored or carried (read: sampled and/or filtered) in under-precision, particularly along the edges.

5. Then come post-effects, where tone-mapping could be a real wild-card - one might or might not get banding artifacts if those were carried in fp16, depending on the parameters of the scene lighting. But let's say that to be on the safe side fp32 would be the preferred format.

Again, these are all off-the-top-of-my-hat musings. One normally takes a pipeline, sits there with a few shaders in mind and experiments.
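The precision concerns in points 1 and 3 can be illustrated with a minimal sketch. It assumes IEEE 754 half precision for fp16 and uses Python's standard `struct` module (format code `e`) to round values. The helper names `to_fp16` and `fp16_error` and the sample coordinates are made up for illustration and are not taken from any actual shader pipeline.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision value, returned as a float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def fp16_error(x: float) -> float:
    """Absolute rounding error introduced by storing x in fp16."""
    return abs(x - to_fp16(x))


# Hypothetical viewport x coordinates on a 1920-pixel-wide render target.
for x in (100.3, 700.3, 1500.3):
    print(f"viewport x={x}: fp16={to_fp16(x)}, error={fp16_error(x):.4f} px")

# A hypothetical colour/lighting term in [0, 1], compared with one 8-bit output step.
c = 0.7
print(f"colour {c}: fp16 error={fp16_error(c):.6f}, 8-bit step={1 / 255:.6f}")
```

Above 1024 the spacing between representable fp16 values is a full unit, which is the kind of viewport-coordinate error point 1 warns about, while a colour value in [0, 1] is rounded by far less than one 8-bit output step.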

So, the actual boost might be more in the 10-20% range for most applications? Or what do you think? Actual "real-world" benefits of having fp16.

Doczu

Gold Member

Does anybody know how much RAM will be in the Switch?

Older dev units had 4 gigs, retail will surely have more than that.

Older dev units had 4 gigs, retail will surely have more than that.

How is that a sure thing? What's the current nvidia based mobile device that has the highest RAM anyway?

Older dev units had 4 gigs, retail will surely have more than that.

Dev kits typically have MORE ram than the retail not less because they run debugging tools and the like.

Do you have a source on that 4GB?

Dev kits typically have MORE ram than the retail not less because they run debugging tools and the like.

Do you have a source on that 4GB?

There's this one, I've seen it mentioned a couple other places as well. https://mynintendonews.com/2016/10/...-cortex-a57-4gb-ram-32gb-storage-multi-touch/

TwilightPrincess

Member

Older dev units had 4 gigs, retail will surely have more than that.

The "leaked" spec sheet had 4GB; whether it's fake, we don't know. The modder who brought us these specs isn't an insider.

Doczu

Gold Member

Dev kits typically have MORE ram than the retail not less because they run debugging tools and the like.

Do you have a source on that 4GB?

There's this one, I've seen it mentioned a couple other places as well. https://mynintendonews.com/2016/10/...-cortex-a57-4gb-ram-32gb-storage-multi-touch/

Yeah, that's the one. Although everywhere on the web people are either calling fake or really early devkits.

Yeah, that's the one. Although everywhere on the web people are either calling fake or really early devkits.

I'm still curious though, what's the precedent on existing devices with similar chipsets in terms of RAM? I don't think I've ever heard of a mobile device with 8 gb RAM.

Dev kits typically have MORE ram than the retail not less because they run debugging tools and the like.

Do you have a source on that 4GB?

That used to be the case but generally isn't now. Now devkits typically just hand over memory reserved for OS/background functions for debugging purposes. Also pre-launch kits often have less ram than final hardware. Early PS4 kits had 4GB for example, early 360 kits just 256MB, early 3DS kits 64MB, etc.

Doczu

Gold Member

I'm still curious though, what's the precedent on existing devices with similar chipsets in terms of RAM? I don't think I've ever heard of a mobile device with 8 gb RAM.

Well you have phones with 6GB of RAM (or even more? I'm really out of the loop for premium models), so a 6-8GB tablet sized console is not dream level speculation.

That used to be the case but generally isn't now. Now devkits typically just hand over memory reserved for OS/background functions for debugging purposes. Also pre-launch kits often have less ram than final hardware. Early PS4 kits had 4GB for example, early 360 kits just 256MB, early 3DS kits 64MB, etc.

The PS4 case was specifically because there was a last minute price drop in 8gb RAM and Sony seized the opportunity. It wasn't meant to have 8.

Doczu

Gold Member

The PS4 case was specifically because there was a last minute price drop in 8gb RAM and Sony seized the opportunity. It wasn't meant to have 8.

Would be the same with the 360. It was planned to have 256 MB, but got upped to double that.

Not with the price, but with the increase

Well you have phones with 6GB of RAM (or even more? I'm really out of the loop for premium models), so a 6-8GB tablet sized console is not dream level speculation.

After some cursory searching, the most current and upcoming powerhouse phones/phablets/tablets have 4 gb RAM at most.

TwilightPrincess

Member

Yeah, that's the one. Although everywhere on the web people are either calling fake or really early devkits.

NWPlayer123 (who brought us those specs) told me not to read too much into it ("eh, I wouldn't read too much into that"), and a week before she believed it was an AMD console.

Doczu

Gold Member

After some cursory searching, the most current and upcoming powerhouse phones/phablets/tablets have 4 gb RAM at most.

From the offtop side of GAF: http://m.neogaf.com/showthread.php?t=1299630

New Xiaomi powerhouse with 6 gigs on board. Pretty sure there are also other ones already announced or at least rumored/leaked.

6 gigs will be the norm for premium phones next year, so a tablet device could surely have at least the same amount.

Just pure speculation, could be that we end with 4GB tops.

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

So, the actual boost might be more in the 10-20% range for most applications? Or what do you think? Actual "real-world" benefits of having fp16.

Keep in mind fragment shaders are the bulk of the computation for most frames. If I'd have to go out on a limb and pull yet another number out of thin air I'd say anything from 25% to 50% of a frame these days could go fp16. Don't quote me on that though : )

People afraid this won't be powerful, lads it is Nvidia doing the god damn chip, Nvidia is known for absolutely INSANE graphics cards and was the company who blamed PS4 and called it a low end PC, Switch will be powerful, more than most think, Nvidia isn't the type of company that does UP stuff, let alone graphics card

http://www.zdnet.com/article/nvidia-calls-ps4-hardware-low-end/

i mean let's be serious here for a moment, Switch will be the most powered handheld device on the market by HUGE HUGE margins, 720p 60fps on the go, 1080p at home!

sir_bumble_bee

Member

People afraid this won't be powerful, lads it is Nvidia doing the god damn chip, Nvidia is known for absolutely INSANE graphics cards and was the company who blamed PS4 and called it a low end PC, Switch will be powerful, more than most think, Nvidia isn't the type of company that does UP stuff, let alone graphics card

http://www.zdnet.com/article/nvidia-calls-ps4-hardware-low-end/

i mean let's be serious here for a moment, Switch will be the most powered handheld device on the market by HUGE HUGE margins, 720p 60fps on the go, 1080p at home!

Yeah, I'm curious to know just how far nVidia's influence on the Switch extends. Hell, even the physical design of the thing screams nVidia Shield

Doczu

Gold Member

Yeah, I'm curious to know just how far nVidia's influence on the Switch extends. Hell, even the physical design of the things screams nVidia Shield

You could say that Nintendo went third party on their own hardware.

TwilightPrincess

Member

After some cursory searching, the most current and upcoming powerhouse phones/phablets/tablets have 4 gb RAM at most.

There are Chinese phones for 400 dollars with 6GB,

like the LeTV LeEco Le Max 2 Pro/X820 6GB RAM 64GB ROM 5.7inch 2K Screen Android 6.0 OS Smartphone 64-Bit Qualcomm Snapdragon 820 Quad Core 21MP

The 4GB version is about 230 dollars.

TwilightPrincess

Member

This thread is hilarious reminds me of the ps4pro thread. People just throwing up random hopes and dreams. Just accept another gimped console and stop wasting energy until Nintendo releases the official (underwhelming) specs

For a console it will be weak (but modern), that's not news. For what it is (also a handheld) it's not underwhelming.

People afraid this won't be powerful, lads it is Nvidia doing the god damn chip

Nvidia is making a chip for Nintendo, tailored to their requirements. For a portable device.

It would be a different matter if Nvidia was making a chip for, say, Scorpio.

I'm quite confident that it will be a powerful portable, and insanely so by Nintendo standards (manifesting as probably the largest generational jump ever over 3DS), but it won't be powerful in the grand scheme of things compared to stuff which plugs into a power outlet. That's just physics.

AcademicSaucer

Member

People afraid this won't be powerful, lads it is Nvidia doing the god damn chip, Nvidia is known for absolutely INSANE graphics cards and was the company who blamed PS4 and called it a low end PC, Switch will be powerful, more than most think, Nvidia isn't the type of company that does UP stuff, let alone graphics card

http://www.zdnet.com/article/nvidia-calls-ps4-hardware-low-end/

i mean let's be serious here for a moment, Switch will be the most powered handheld device on the market by HUGE HUGE margins, 720p 60fps on the go, 1080p at home!

Nintendo is paying for it tho, I'm sure it's going to be impressive for a portable but there is no point comparing it to current gen consoles

Well you have phones with 6GB of RAM (or even more? I'm really out of the loop for premium models), so a 6-8GB tablet sized console is not dream level speculation.

This year, the mobile market has reached norms of 4GB and there are a few instances of 6GB models (Chinese Note 7, OP3). The expectation is that next year the industry will move to 6GB models for flagships. Some sources are saying the Surface phone may have up to 8GB.

On average, what would the fp16/32 ratio be for graphically demanding games? At fp32, the TX1 is capable of 0.5TF. So if say 1/3rd of instructions runs on fp16, that means an equivalent of 0.66TF, for instance, right?

Yes, for such a hypothetical split that would be correct. Of course it all comes down to the split.

This isn't actually quite correct. The issue is that, if the ratio of FP32 computation to FP16 computation is 2:1 before boosting the FP16 performance, it has to be 2:1 after boosting the FP16 performance as well (as if you're putting out more frames per second, then the FP32 workload is going to increase in line with the FP16 workload, those extra frames aren't going to be calculated purely with FP16).

So if you've got 500 GF of FP32 performance, or double that for FP16, and a 2:1 split of FP32 to FP16, then the following two equations have to be satisfied:

FP32 + (FP16 / 2) = 500

FP32 / FP16 = 2

Which can be calculated to give you 400 GF of FP32 and 200 GF of FP16 for a total of 600 GF.
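The two equations above generalise to any split. A minimal sketch, assuming FP16 runs at exactly twice the FP32 rate as stated in the post; the function name `split_throughput` and its parameters are hypothetical:

```python
def split_throughput(peak_fp32_gflops, fp32_to_fp16_ratio, fp16_speedup=2.0):
    """Solve the pair of equations
         FP32 + FP16 / fp16_speedup = peak_fp32_gflops
         FP32 / FP16 = fp32_to_fp16_ratio
    and return (FP32, FP16, total) in GFLOPS."""
    fp16 = peak_fp32_gflops / (fp32_to_fp16_ratio + 1.0 / fp16_speedup)
    fp32 = fp32_to_fp16_ratio * fp16
    return fp32, fp16, fp32 + fp16


# The case from the post: 500 GF of FP32 peak and a 2:1 FP32-to-FP16 split.
print(split_throughput(500.0, 2.0))  # (400.0, 200.0, 600.0)
```

Under the same assumptions, a 1:1 split would come out at roughly 333 GF of each, for a total of about 667 GF.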

I doubt that the performance of the whole CPU block matches the performance you can get out of the 8 cores on PS4 and Xbox One, let alone the upclocked PS4 Pro version of the CPU block. Is each core on NX potentially more performant on a per-clock-cycle basis? Possibly, likely even. Is it likely for NX to have enough cores at a high enough speed to overcome the sheer difference in core count (unless you want a gulf of performance, big frame rate and visual loss, when switching to handheld mode)? No, I do not believe it is likely they went completely against the industry-common CPU-GPU balancing.

Is there a reason you're discounting the possibility that Switch would use an 8-core CPU? Their current handheld uses a quad-core, and the TX1 dev-kits had an 8-core CPU, so I wouldn't put it outside the realm of possibility. I certainly wouldn't expect 8 A72 cores in there, but 4 A72s plus 4 A53s sounds like a reasonable proposition.

Interfectum

Member

Nvidia is making a chip for Nintendo, tailored to their requirements. For a portable device.

It would be a different matter if Nvidia was making a chip for, say, Scorpio.

I'm quite confident that it will be a powerful portable, and insanely so by Nintendo standards (manifesting as probably the largest generational jump ever over 3DS), but it won't be powerful in the grand scheme of things compared to stuff which plugs into a power outlet. That's just physics.

My hope is they get within 75-80% of Xbox One so we can get some decent, though downgraded, third party ports of current gen. Is that aiming too high in your opinion?

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

This isn't actually quite correct. The issue is that, if the ratio of FP32 computation to FP16 computation is 2:1 before boosting the FP16 performance, it has to be 2:1 after boosting the FP16 performance as well (as if you're putting out more frames per second, then the FP32 workload is going to increase in line with the FP16 workload, those extra frames aren't going to be calculated purely with FP16).

So if you've got 500 GF of FP32 performance, or double that for FP16, and a 2:1 split of FP32 to FP16, then the following two equations have to be satisfied:

FP32 + (FP16 / 2) = 500

FP32 / FP16 = 2

Which can be calculated to give you 400 GF of FP32 and 200 GF of FP16 for a total of 600 GF.

That's right. But if you're not outputting more fps, but instead you're targeting a fixed framerate, and another console uses 0.66TF of fp32 for that, then you can safely say that you'd be hitting that with your 0.66TF of fp32 + fp16 mixed precision (for the hypothetical scenario ozfunghi was discussing).

Yeah I know but still... all I see is good enough PS3 level games, and that is very worrying by itself.

Have you seen how the tegra version of doom 3 compares to the 360 and PS3 versions?

It runs at 2.25x the resolution while holding 60fps more consistently.

Darkangel

Member

I hope there isn't a major gap between docked and handheld mode. I feel like one side is going to have a crappy time if developers have to design their games around both modes.

I wonder if dropping the resolution to 720p is enough to have the same game performance at a lower clockspeed?

I wonder if dropping the resolution to 720p is enough to have the same game performance at a lower clockspeed?
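For scale, a rough sketch of the pixel-count arithmetic behind that question. It assumes, optimistically, that GPU cost scales only with the number of pixels shaded, which ignores vertex work, CPU load and memory bandwidth; the function names are made up for illustration:

```python
def pixel_count(width, height):
    """Number of pixels in a render target."""
    return width * height


def pixel_ratio(hi_res, lo_res):
    """How many times more pixels hi_res has than lo_res."""
    return pixel_count(*hi_res) / pixel_count(*lo_res)


ratio = pixel_ratio((1920, 1080), (1280, 720))
# Assumption: purely pixel-bound rendering, so required GPU clock scales with pixel count.
clock_fraction = 1.0 / ratio

print(ratio)                     # 2.25
print(round(clock_fraction, 3))  # 0.444
```

Under that simplification, a 720p image would need a bit under half the GPU throughput of a 1080p one; real games are unlikely to scale that cleanly.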

That's right. But if you're not outputting more fps, but instead you're targeting a fixed framerate, and another console uses 0.66TF of fp32 for that, then you can soundly say that you'd be hitting that with your 0.66TF of fp32 + fp16 mixed precision (for the hypothetical scenario ozfunghi was discussing).

Even in this case you would be talking about a 1:1 split between FP32 and FP16 (as that's 330 GF FP32 + 330 GF FP16), not a 2:1 split. If you take an existing game and switch one third of the shader workload to FP16, then that portion of your workload is going to perform the same job in half the time, not perform twice the work in the same time. If you can find some way to do more of whatever your FP16 shader code does, and that's how you decide to use the spare computational resources, then yes you could fill the remainder of your 16.7ms with FP16 calculations and get the speedup you're talking about, but at that point you're really just talking about adjusting your FP32/FP16 split to 1:1.
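The fixed-framerate argument can be sketched as a frame-time budget. This is a minimal illustration, assuming a 60 fps target, FP16 at exactly twice the FP32 rate, and a frame that is entirely shader-bound; the function names and the one-third fraction are taken from the hypothetical scenario in the post, not from measurements:

```python
FRAME_MS = 1000.0 / 60.0  # about 16.7 ms per frame at 60 fps


def frame_time_after_fp16(frame_ms, fp16_fraction, fp16_speedup=2.0):
    """Frame time once fp16_fraction of the original shader time runs fp16_speedup times faster."""
    return frame_ms * ((1.0 - fp16_fraction) + fp16_fraction / fp16_speedup)


def refilled_split(frame_ms, fp16_fraction, fp16_speedup=2.0):
    """Keep the FP32 part fixed and fill the rest of the frame with FP16 work.
    Returns (fp32_work, fp16_work) in ms of FP32-rate-equivalent work."""
    fp32_work = frame_ms * (1.0 - fp16_fraction)
    fp16_time = frame_ms - fp32_work
    return fp32_work, fp16_time * fp16_speedup


new_ms = frame_time_after_fp16(FRAME_MS, 1.0 / 3.0)
print(f"same work finishes in {new_ms:.1f} ms, leaving {FRAME_MS - new_ms:.1f} ms spare")

fp32_work, fp16_work = refilled_split(FRAME_MS, 1.0 / 3.0)
print(f"refilled frame: FP32 {fp32_work:.1f} ms, FP16 {fp16_work:.1f} ms (FP32-equivalent)")
```

Moving a third of the work to FP16 shortens the frame rather than adding work; only when the spare time is refilled with more FP16 work do the two parts become equal, which is the 1:1 split described above.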

Keep in mind fragment shaders are the bulk of the computation for most frames. If I'd have to go out on a limb and pull yet another number out of thin air I'd say anything from 25% to 50% of a frame these days could go fp16. Don't quote me on that though : )

Thanks, i won't quote you, i'll just refer others to your post, lol. I'm kidding. No, that's much better than i expected. That would mean an equivalent of between 625 GF and 750 GF.

People afraid this won't be powerful, lads it is Nvidia doing the god damn chip, Nvidia is known for absolutely INSANE graphics cards and was the company who blamed PS4 and called it a low end PC, Switch will be powerful, more than most think, Nvidia isn't the type of company that does UP stuff, let alone graphics card

http://www.zdnet.com/article/nvidia-calls-ps4-hardware-low-end/

i mean let's be serious here for a moment, Switch will be the most powered handheld device on the market by HUGE HUGE margins, 720p 60fps on the go, 1080p at home!

Again, this'll be one of those posts we will all love to revisit in a year or so.

Either you'll be a prophet or you'll be the next AceBandage.