GPUs are different but you can imagine similar issues holding back performance. For example, GPUs are SIMD on a massive scale and SIMD execution by nature is typically memory bottlenecked, as speed can severely affect ability to get data to work on. So if Nvidia's design is better at getting data from memory to work on then they would be better able to make use of floating point math units than AMD's design.

Memory used to be a limiting factor. It isn't much anymore. It's far outpaced memory sizes and the GPU designers are experts at hiding large prefetch latencies.

Keep in mind this is GREATLY oversimplified and some people far more knowledgeable than me are going to correct stuff. When a GPU is working on stuff it keeps data in its equivalent of registers. Now, GPUs can't execute the entire pipeline in a single cycle so they rapidly switch between threads. These are wavefronts in AMD terminology and warps in Nvidia's.

Now let's take an AMD CU. It has 4x 16 lane FP32 ALUs. It has 256KB of registers for those lanes. Nvidia is similar, they have 64 independent ALUs per SM and 256KB of registers for those ALUs.

So where's the difference? Scheduling.

AMD for full occupancy requires 10 wavefronts. 4 vector units, 64 work units per vector unit for a total of 2,560 work items.

Nvidia on the other hand for full occupancy requires 2,048 active threads. You can split it into various arrangements of warps, blocks, and work items which is irrelevant for this discussion.

So even though they both have the same number of ALU lanes and the same number of register sets, to maintain full occupancy a shader running on an AMD GPU requires you to use less than 26 registers per VGPR (65536/2560). Which gave rise to this infamous little diagram:

After you use more than 24 registers per shader on average you risk the pipeline starting to stall as it has to fetch out to memory. On Nvidia this number is 32. So Nvidia can run more complicated shaders before the pipeline starts to stall out. Guess what programmers do to make their graphics prettier? They make their shaders bigger and longer and more complicated.

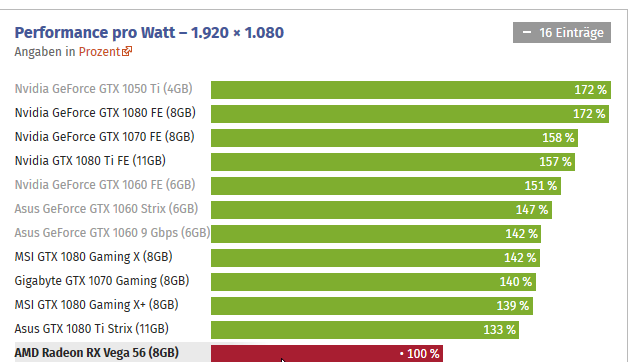

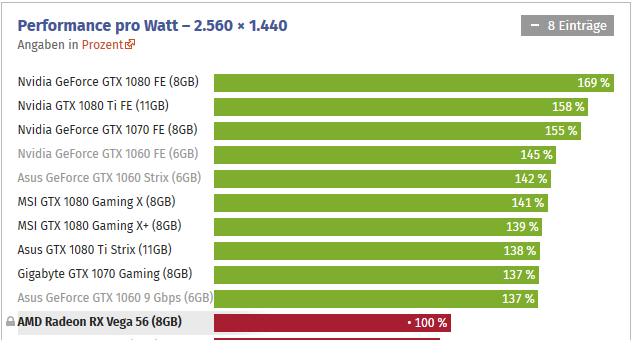

So it ends up being a hell of a lot easier to run an Nvidia card to its fuller potential. That's why Nvidia cards whip an AMD card with less TFLOPs but then the exact reverse happens on compute benchmarks. Gaming is typically way less than full occupancy even when your GPU reports that its running flat strap. It's why your GPU will run at 2GHz on a game and 1.8GHz on Furmark. Nvidia is better at keeping its pipes full because it has more storage for the working set for a full occupancy pipeline, less pipeline stalls, and more efficiency running code.