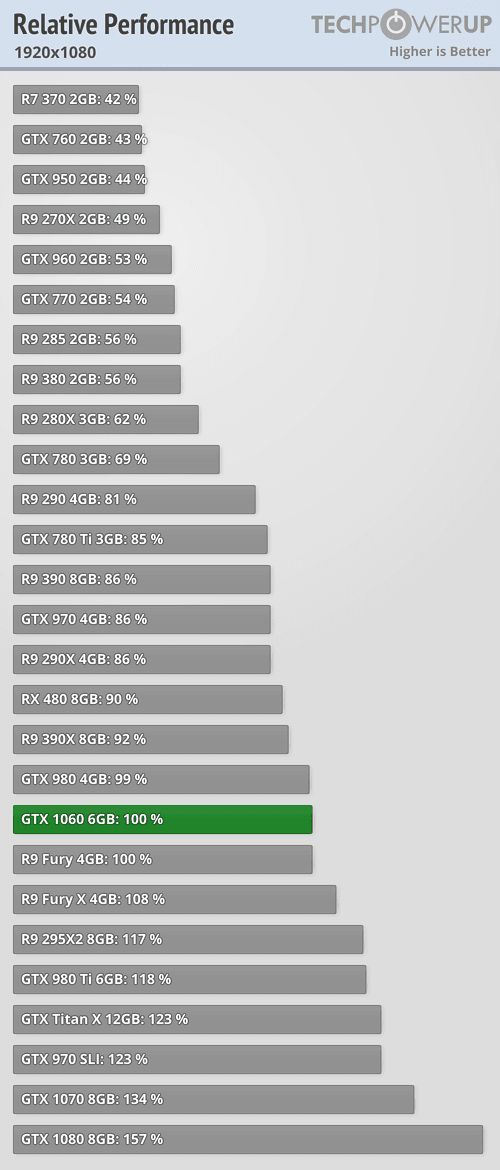

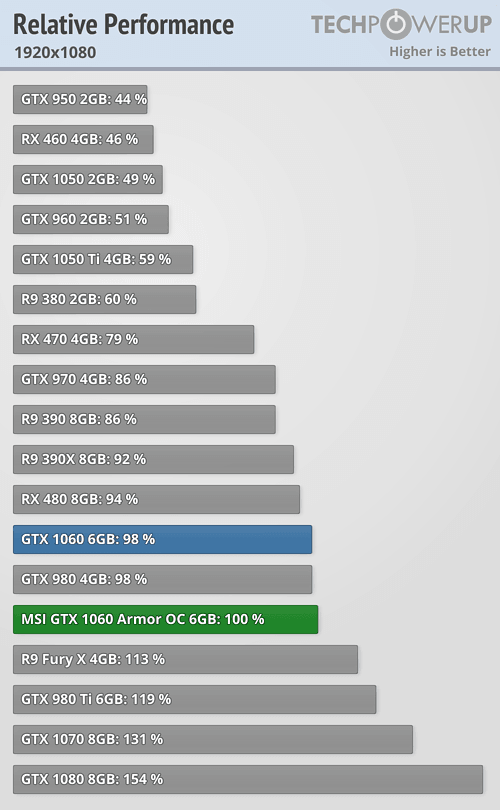

A 13.7 TFLOPS GPU with 27.4 TFLOPS in FP16 supports my statement. You can be as dense as you want, but the RX 480 was 15% behind the 1060 at launch, and look at it now.

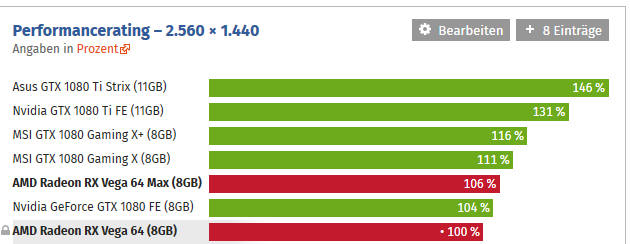

You are really adamant about this whole Vega 64 > 1080 Ti thing, aren't you? All evidence points to the Ti smashing the 64, and yet you just keep on believing. It's not drivers, and it's not bad benchmarks by literally everyone who has done benchmarks. It's the card. It's just not as powerful. It's time to come back to reality.

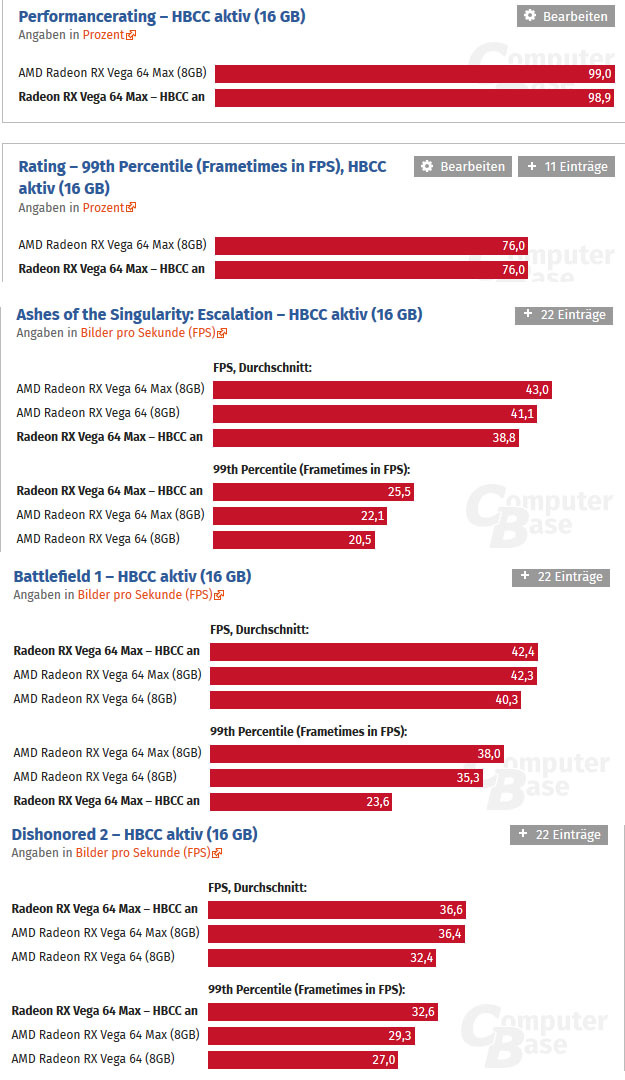

The Ti is better atm, but I can see Vega 64 catching up with better drivers and eventually outpacing the 1080 Ti in games developed for its unique architecture. HBCC and RPM, along with the raw compute advantage Vega has over Pascal, are sure to come into play eventually.

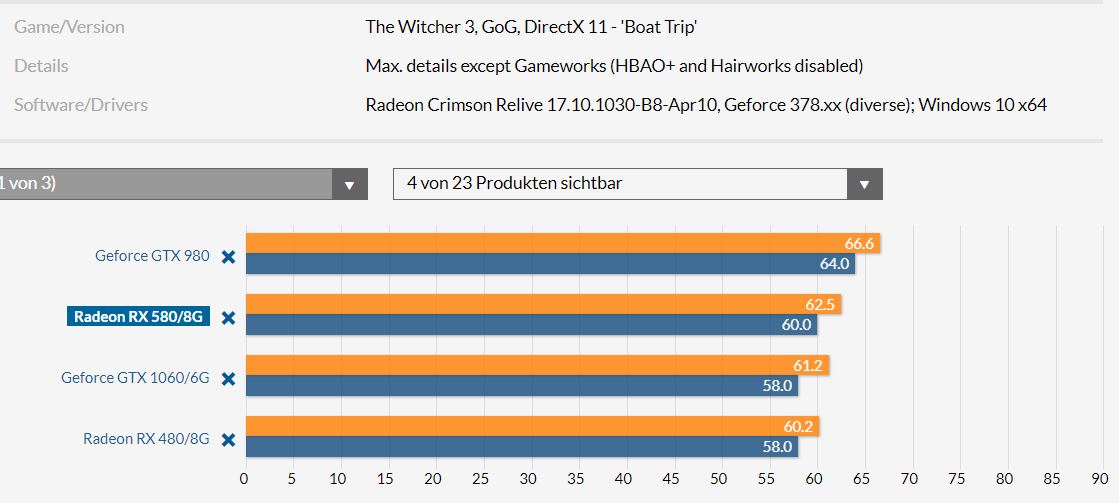

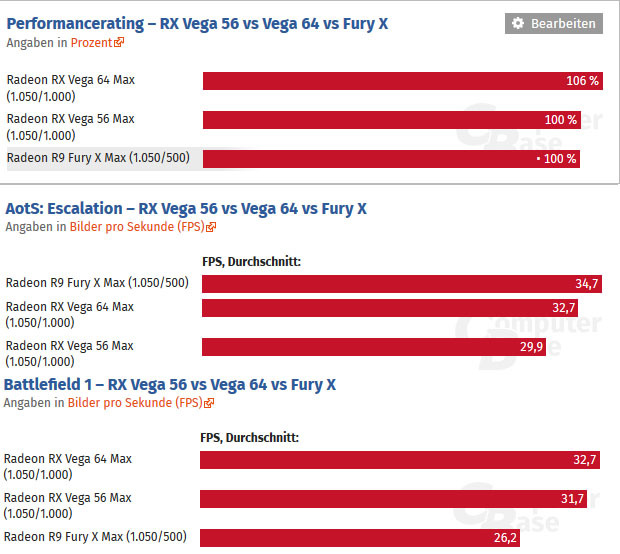

Let's look at the Vega 56 as an example. If it is already solidly beating the 1070 in every test, even in Nvidia-optimized games, how much will it stretch that lead in 3 months with better drivers? And Vega 56 was being compared to an AIB 1070, yet it still beats it in every game except PUBG and another Nvidia-optimized game, Overwatch. In a little while, stock Vega 56 will absolutely demolish the 1070 thanks to better drivers, and AIB Vega 56 cards will be going toe to toe with, and even surpassing, the GTX 1080. Mark my words on this one. It will be even more pronounced when developers make use of HBCC and RPM in their titles.

I see the same for Vega 64, with huge increases forthcoming there as well. If AMD allows AIB partners full access beyond BIOS and current VRM restrictions, then I can see some pretty impressive improvements on AIB Vega 64 cards, on top of the driver gains we should expect. As it stands, AMD is focused on DX12 and the Vulkan API as opposed to DX11. This is where the cards will shine and really show their power, not so much in yesterday's games as in future titles.

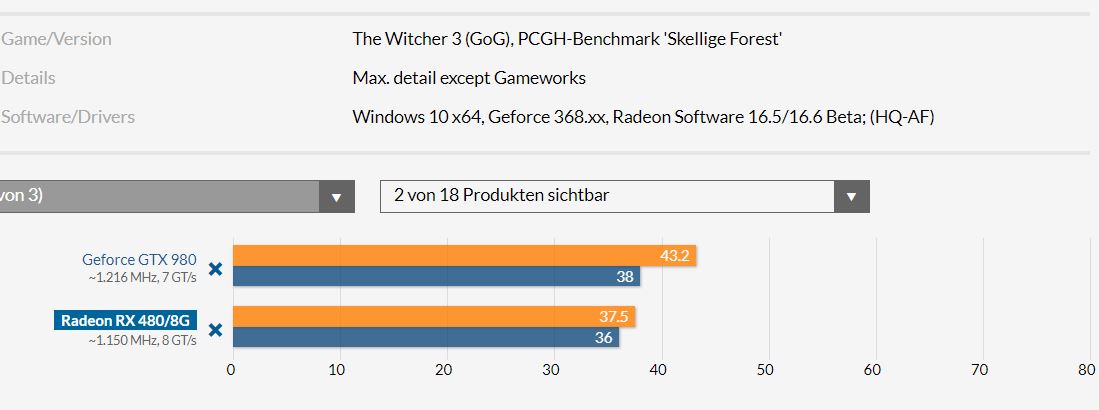

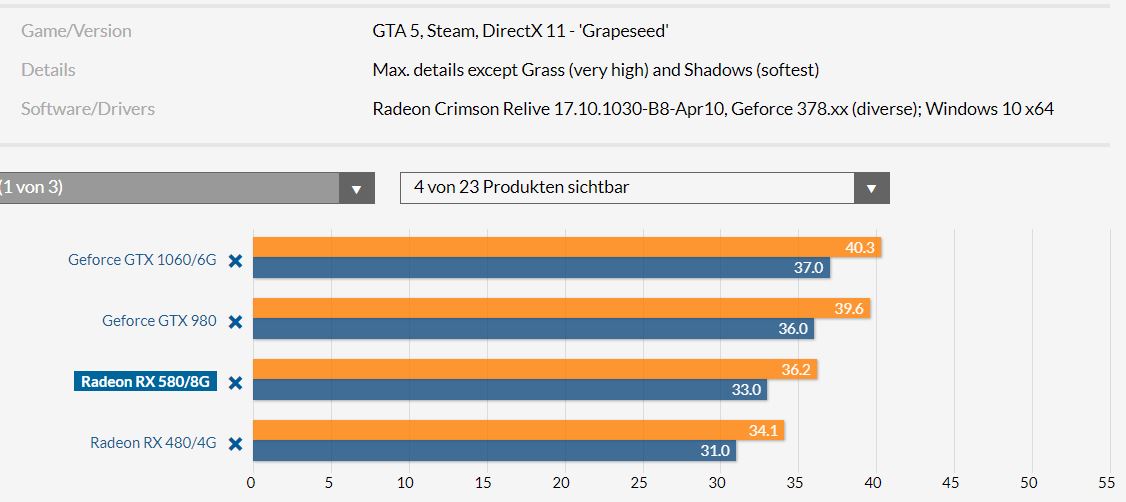

Therefore, simply saying NV is faster is not really accurate. By extension, the same was said of the 1060 being a faster card than the RX 480, but it's really not that simple.

Something strange about those benchmarks, they don't fit with benchmarks from other sites.

Yeah, I'm looking at other benchmarks. The 1080 Ti is the current king in benches over the 64, but the 64 wins some too, and there are quite a few games where the 1080 Ti does not have a substantial lead, which is where the 64 can catch up with mature drivers. In some benchmarks, especially NV-focused titles, the margins are greater, no doubt, but I'm not sure anything can be done about that. I imagine future titles that make use of the Vega features will be a hard pill to swallow for the Pascal cards in comparison as well. On the top end, NV is in front, with the 1080 Ti at least. I do see that gap closing, though.

You should also note that HBC and primitive shaders are not yet enabled, for what it's worth. Sorry to say, but AMD GPUs are never firing on all cylinders on day one, though I'm sure lots will be ironed out eventually. I even expect some impressive gains for the Frontier Edition cards in gaming mode eventually. I guess it's true that they just wanted these cards out this quarter and would iron out the kinks a bit later.

.....

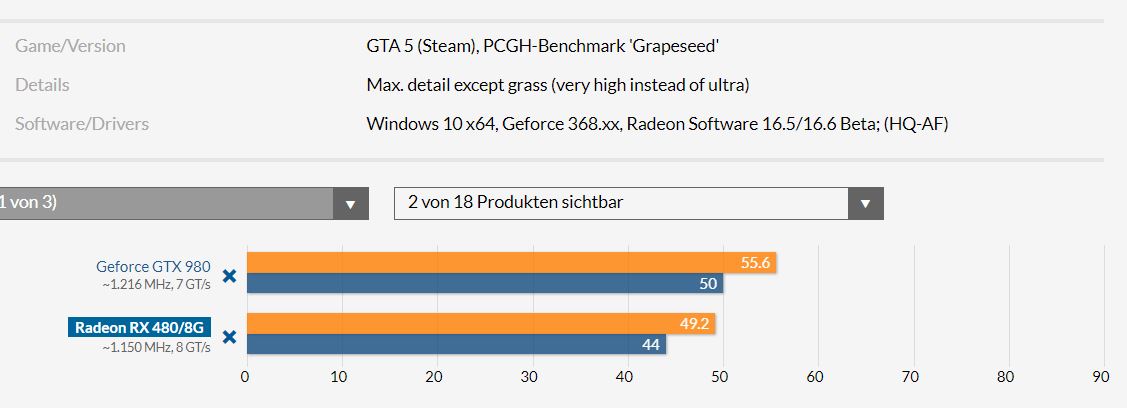

Hitman, Titanfall 2, Battlefront...? Sorry, how are they optimized for Nvidia? GTA V is pretty agnostic. Metro LL, no idea - perhaps so. Care to clarify?

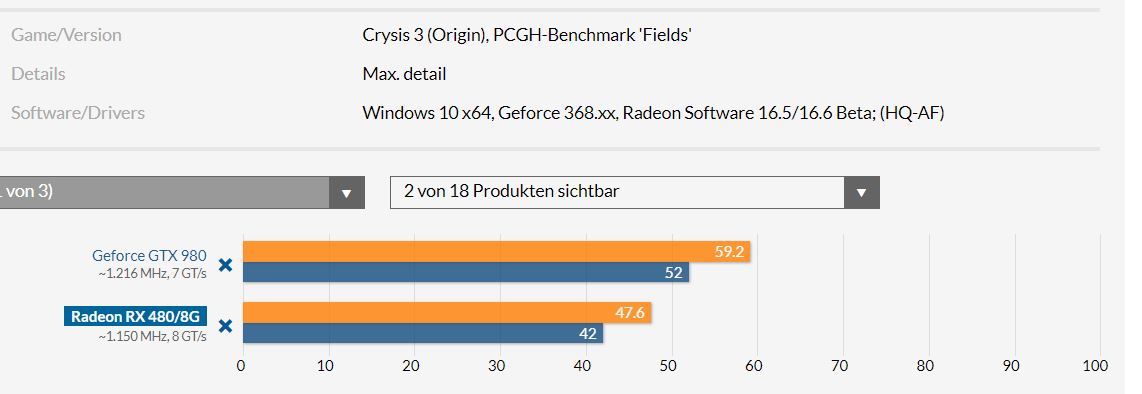

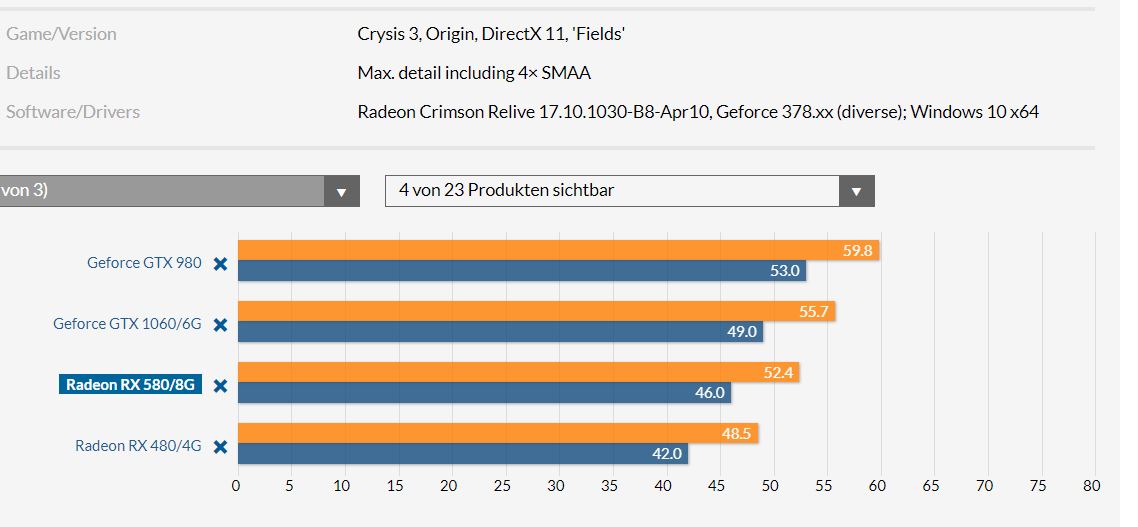

I didn't mean these. In any case, Hitman, Titanfall and Battlefront always did well on NV cards. GTA5 just prefers the Intel+NV combo, that can't even be argued (just watch any benchmark at all). Things may change with RDR 2 or GTA6, since the GPU+CPU landscape has changed a bit now. Metro always had a solid lead on NV cards in every test I've seen of it. If an AMD GPU is beating a comparable NV card in Metro with beta drivers, then it must be a supremely beastly card in terms of raw power.

OTOH

I'm hearing news of a new RX Vega firmware update?