Proelite

Essentially maybe we aren't getting the full bandwidth picture?

ROPS are not in esram this time around.

Say, do you work for MS? I just saw your location in your profile.

GPUs are not the same.

There is a reason MS is calling their units shader cores and not compute units.

They're maximized for graphics.

But that would still make the combined BW of both pools inferior to the BW of Orbis' single pool, which just seems a bit weak in comparison, and needlessly limited for ESRAM.

I agree. The quoted bandwidth seems far too low. One explanation attempt posted earlier is that it's the main memory <-> ESRAM BW, not the ESRAM <-> GPU BW.

Gemüsepizza;46711044 said: And CUs in cutting-edge GPUs aren't "maximized for graphics"?

At least this time they can store 1280x720 with 4x MSAA in the embedded memory.

Still not enough to do 1080p with 4x MSAA though.

New territory.

I shall call it.

"Xbox viagra."

This thread somehow just turned into Wii U bashing...

That diagram is slightly wrong. The main die of the 360's GPU and the daughter die had a 32GB/s link.

Something regarding the ESRAM doesn't add up.

As far as I know, ESRAM is supposed to be better than EDRAM, but the 10MB of EDRAM on the 360 provided a whopping 256GB/s of bandwidth to the GPU (daughter die straight to the ROPs).

So for the 720 they are going with supposedly better ESRAM that has only 102GB/s of bandwidth?

That's a pretty hefty bandwidth drop!

It seems there's one thing Durango and Orbis fanboys agree on: let's be mean to Wii U.

But let's be honest, Wii U deserves it.

No, it's not. At the very least, you need a Z buffer in addition to your color buffers.

32MB is enough to do 1080p with 4x MSAA; in fact it's like the exact amount needed, if I added things up right.
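To put rough numbers on this exchange, here is a minimal sketch of the render-target arithmetic. It assumes 4 bytes per sample for color (e.g. an RGBA8 target), 4 bytes per sample for depth/stencil, no compression, and 32 MiB of embedded memory. The function names and these formats are illustrative assumptions, not confirmed details of either console.

```python
MIB = 1024 * 1024
ESRAM_MIB = 32  # assumed embedded memory size, taken from the thread


def render_target_bytes(width, height, msaa_samples, bytes_per_sample=4):
    """Size in bytes of one uncompressed multisampled render target."""
    return width * height * msaa_samples * bytes_per_sample


def frame_footprint_mib(width, height, msaa_samples, with_depth=True):
    """Color target plus (optionally) a depth target of the same layout, in MiB."""
    color = render_target_bytes(width, height, msaa_samples)
    depth = render_target_bytes(width, height, msaa_samples) if with_depth else 0
    return (color + depth) / MIB


for label, (w, h) in {"720p": (1280, 720), "1080p": (1920, 1080)}.items():
    color_only = frame_footprint_mib(w, h, 4, with_depth=False)
    color_and_z = frame_footprint_mib(w, h, 4)
    print(f"{label} 4x MSAA: color {color_only:.1f} MiB, "
          f"color+Z {color_and_z:.1f} MiB, "
          f"fits in {ESRAM_MIB} MiB: {color_and_z <= ESRAM_MIB}")
```

Under these assumptions, a 1080p 4x MSAA color buffer alone is about 31.6 MiB, which is where the "exact amount" figure comes from, while adding a Z buffer doubles that to about 63.3 MiB. 720p with color and Z comes to about 28.1 MiB.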

I also remember:

PS3: 60fps

360: 30fps

Apparently not. Guess the engineers at Sony went to AMD only to party and have orgies. They woke up with splitting headaches and special sauce on their bodies. And since time ran out and a report was due to Kaz Hirai in his ivory tower, these engineers just slapped some parts together and called it a day. Much like RSX.

Also, the ESRAM will be able to communicate more directly than the EDRAM on the 360, which was one of the major dev complaints.

Can the eSRAM buffer be sideloaded into the main memory?

Guess not.

You are a bit off: it costs about $10 for 1GB of GDDR at consumer level (2GB cards vs. 1GB cards of the same model), so a total of 4GB would probably be less than $30 for Sony to buy in bulk.

DDR3 is just about half that price.

Overall, 8GB of DDR3 should cost about the same as 4GB of GDDR5.
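The estimate above can be checked with a quick sketch. The per-GB prices are the rough consumer-level guesses from this post, not verified figures, and the helper name `pool_cost` is made up for illustration.

```python
# Rough consumer-level guesses from the post above; not verified prices.
GDDR5_USD_PER_GB = 10.0
DDR3_USD_PER_GB = GDDR5_USD_PER_GB / 2  # "just about half that price"


def pool_cost(capacity_gb, usd_per_gb):
    """Cost of a memory pool at a flat per-GB price."""
    return capacity_gb * usd_per_gb


gddr5_pool = pool_cost(4, GDDR5_USD_PER_GB)  # 4GB of GDDR5
ddr3_pool = pool_cost(8, DDR3_USD_PER_GB)    # 8GB of DDR3

print(f"4GB GDDR5: ${gddr5_pool:.0f}, 8GB DDR3: ${ddr3_pool:.0f}")
```

At these assumed prices both pools land on the same consumer-level total, which is the point being made; bulk pricing would scale both figures down.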

One of my big confusions on Friday as well. I could not grasp why CUs were not the "fix", and in addition that Move engine confused the shit out of me.

But for all intents and purposes, MS outlined a specific desire for graphics to AMD, who found some custom ways to get MS what they wanted without going with a bigger GPU. We will see how that pans out, whether MS wasted millions, and what MS's overall target was.

If it's a ton of wasted silicon, we will know.

Simply "taking stuff out" wouldn't make it more efficient per FLOP though; at best it would maintain the same efficiency for graphics while reducing the die size.

Yes, but AMD put in a lot of effort and tech to make the GCN architecture efficient at high performance computing, stuff that's not necessarily needed in a console. I'm guessing they took that stuff out.

Yeah, let's all encourage pathetic childish system wars for no reason!

I've read that it costs less than 2 dollars for 1GB of DDR3, so 8GB would probably be ~9 dollars for MS.

That's also why I find it difficult to believe in large efficiency differentials.

For those thinking that the GPUs will be fundamentally different: if AMD came up with a GPU architecture that's more efficient than GCN, then they'd be using it in their PC graphics cards. Sony and MS both want the most advanced AMD graphics tech available, and in both cases that's GCN. There might be small tweaks here and there, but the GPUs will be fundamentally the same, with ~30% more power on PS4's part.

It's the one thing that still unites us all.

So:

3DS = Walter White

Vita = Mike

Wii U = Jesse

720 = The Columbians

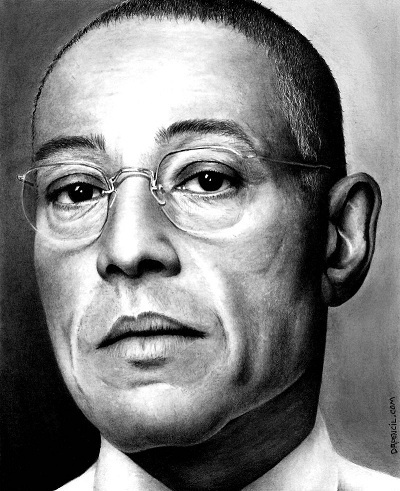

PS4 = Gus

Steam box = Todd

Let me see if this helps. For Durango:

Rendering into ESRAM: Yes.

Rendering into DRAM: Yes.

Texturing from ESRAM: Yes.

Texturing from DRAM: Yes.

Resolving into ESRAM: Yes.

Resolving into DRAM: Yes.

For the 360, that would be yes, no, no, yes, no, yes.
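The comparison above can be laid out as a small table. This is only a sketch of the post's own yes/no list; "embedded" stands for ESRAM on Durango and EDRAM on the 360, and the variable names are made up for illustration.

```python
# Operations in the order given in the post; "embedded" means ESRAM on
# Durango and EDRAM on the 360.
OPERATIONS = [
    "Rendering into embedded memory",
    "Rendering into DRAM",
    "Texturing from embedded memory",
    "Texturing from DRAM",
    "Resolving into embedded memory",
    "Resolving into DRAM",
]

# Values copied from the post: all "yes" for Durango, and
# "yes, no, no, yes, no, yes" for the 360.
DURANGO = [True, True, True, True, True, True]
XBOX_360 = [True, False, False, True, False, True]

# Operations Durango supports that the 360 did not.
gained = [op for op, new, old in zip(OPERATIONS, DURANGO, XBOX_360)
          if new and not old]

for op, new, old in zip(OPERATIONS, DURANGO, XBOX_360):
    print(f"{op:32} Durango: {'yes' if new else 'no':3}  360: {'yes' if old else 'no'}")
print("New on Durango:", ", ".join(gained))
```

Read this way, the differences are rendering directly into DRAM, texturing from the embedded memory, and resolving into it.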

Orbis / Durango / Wii U comparison (images missing), based on estimated performance given what we know.

I updated the image; it turns out PassMark is a terrible GPU metric.

This is a big difference.

I mean, sampling from eSRAM. Interesting.

It definitely shows how immature people can be, for sure...