CyberPanda


The latest entry in the Gears of War series, Gears 5, has just been released on the PC. Powered by Unreal Engine 4, Gears 5 promises to showcase what Epic’s engine can achieve on current-gen systems. As such, it’s time to benchmark it and see how it performs on the PC platform.

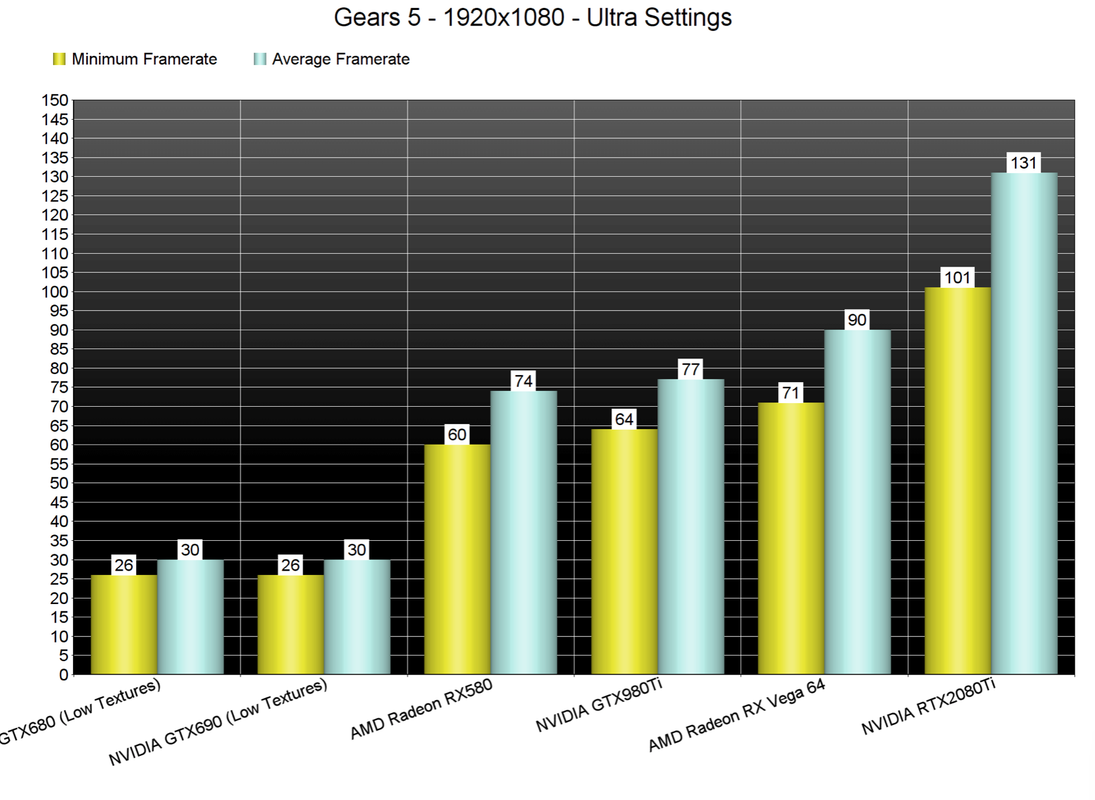

For this PC Performance Analysis, we used an Intel i9 9900K with 16GB of DDR4 at 3600MHz, AMD’s Radeon RX580 and RX Vega 64, and NVIDIA’s RTX 2080Ti, GTX980Ti and GTX690. We also used Windows 10 64-bit, the GeForce driver 436.30 and the Radeon Software Adrenalin 2019 Edition 19.9.1 drivers. NVIDIA has not released an SLI profile for this title, so our GTX690 performed similarly to a single GTX680.

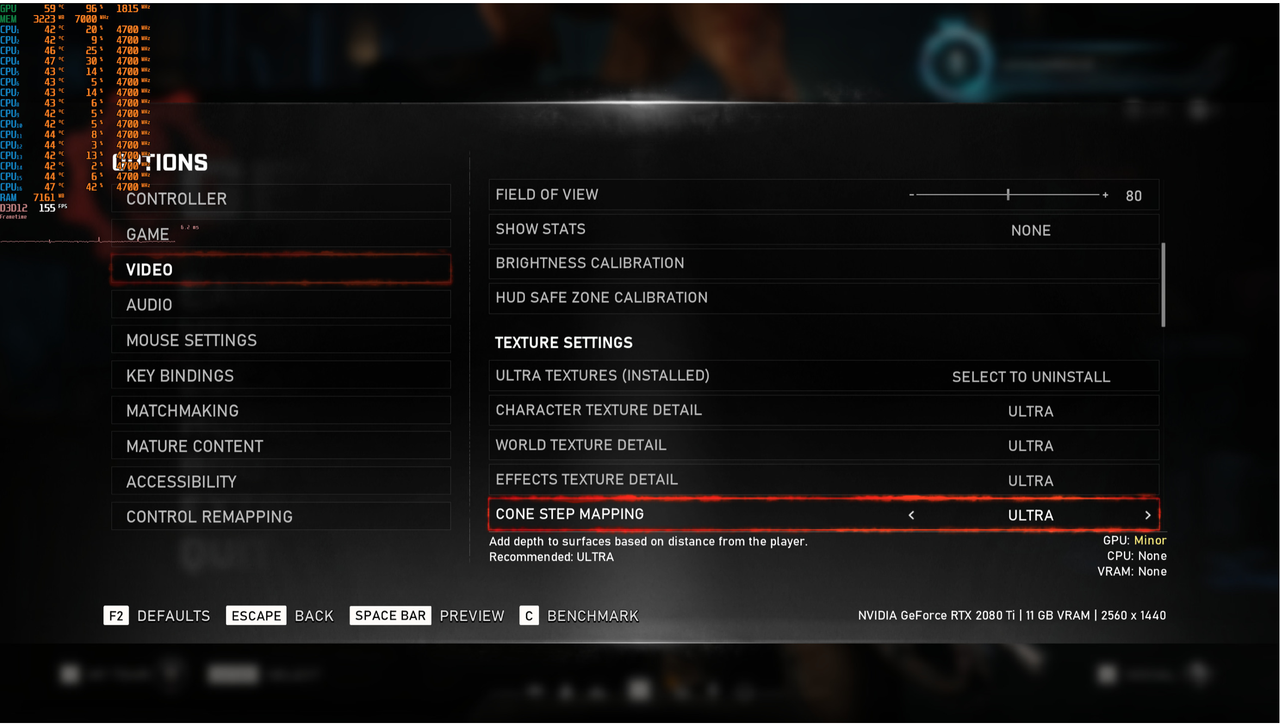

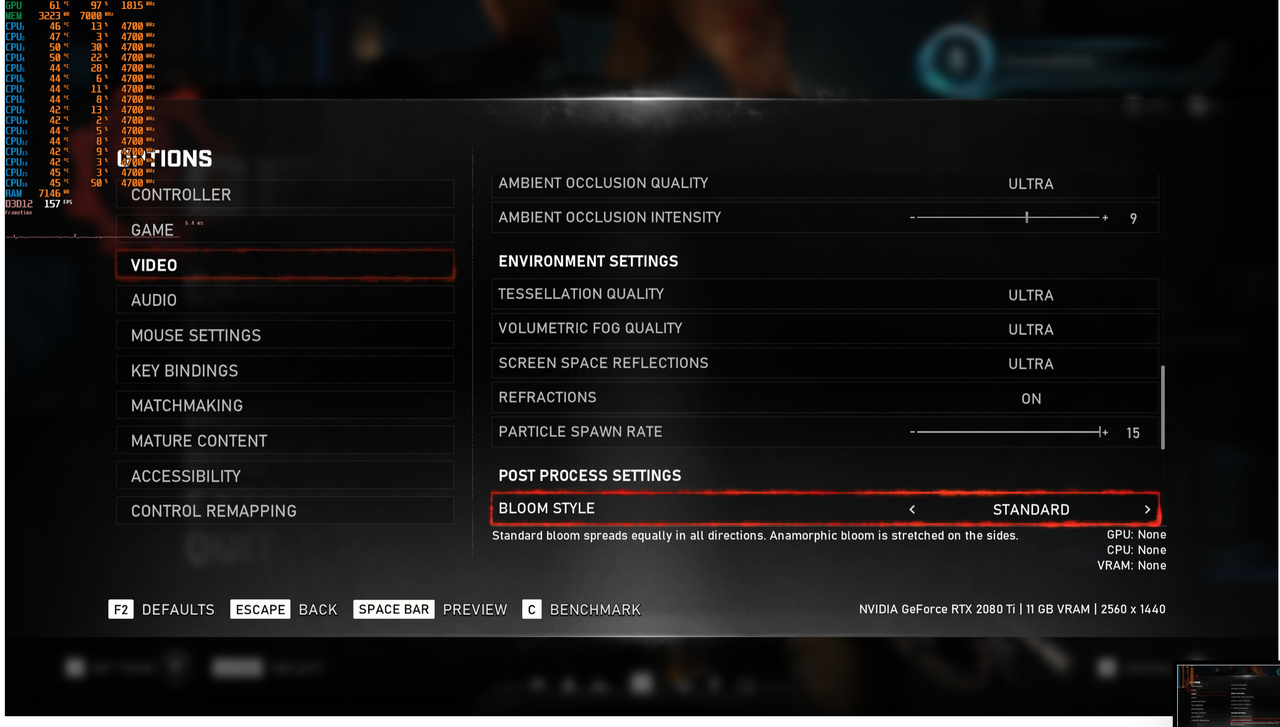

The Coalition has added a huge number of graphics settings to tweak. Seriously, Gears 5 may feature the most extensive graphics settings we’ve ever seen in a PC game. The game features basically everything, from a resolution scaler to Field of View, Sharpening and Ambient Occlusion Intensity sliders. Not only that, but each option comes with a short description that tells you whether it affects the CPU, the GPU, or the VRAM.

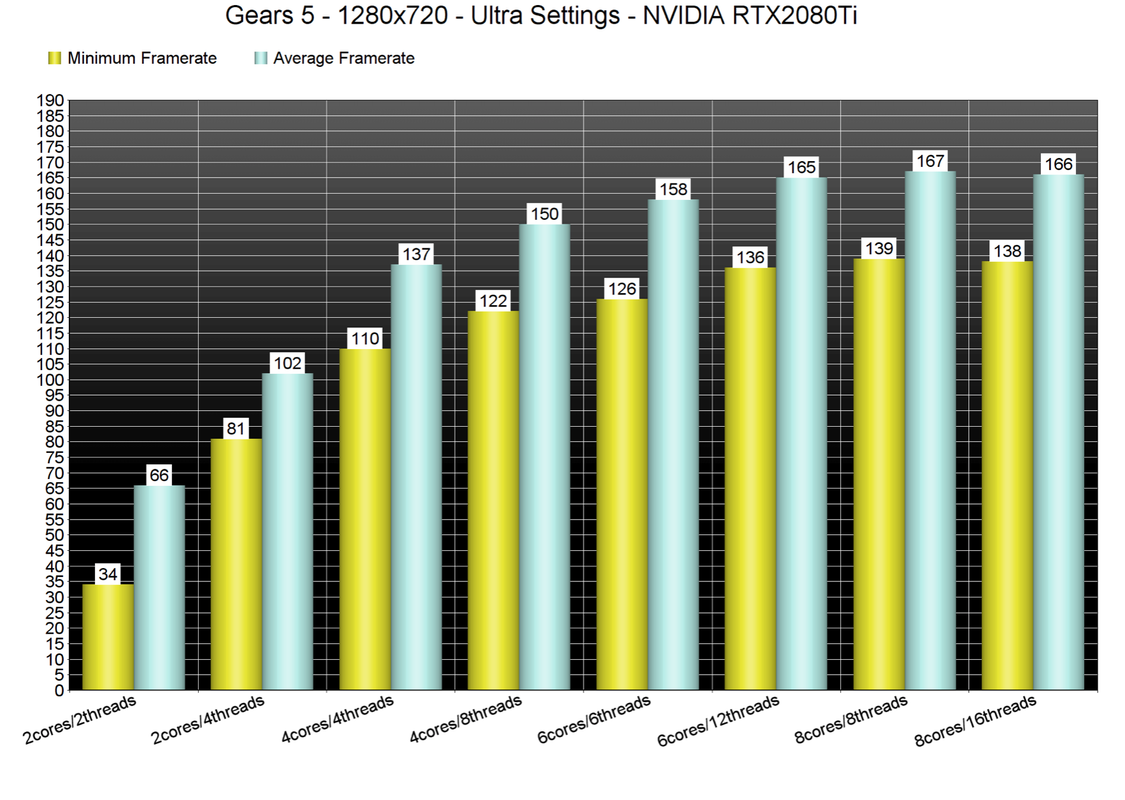

In order to find out how the game scales on multiple CPU threads, we simulated a dual-core, a quad-core and a hexa-core CPU. We also used the game’s built-in benchmark tool, as it’s representative of the in-game performance you’ll be getting. Even though we lowered our resolution to 720p, we were still GPU-limited on most of our setups. This suggests that Gears 5 does not require a high-end CPU.

Without Hyper Threading, our simulated dual-core was able to push a minimum of 34fps and an average of 66fps. With Hyper Threading enabled, our simulated dual-core system offered a constant 60fps experience. All of our other configurations offered a smooth gaming experience, with framerates above 100fps at all times. Still, it’s worth noting that the game can scale across more than four or six CPU cores/threads. Given its very light CPU requirements (and after running into a lot of issues with the Microsoft Store), we did not benchmark the game on our Intel i7 4930K system.

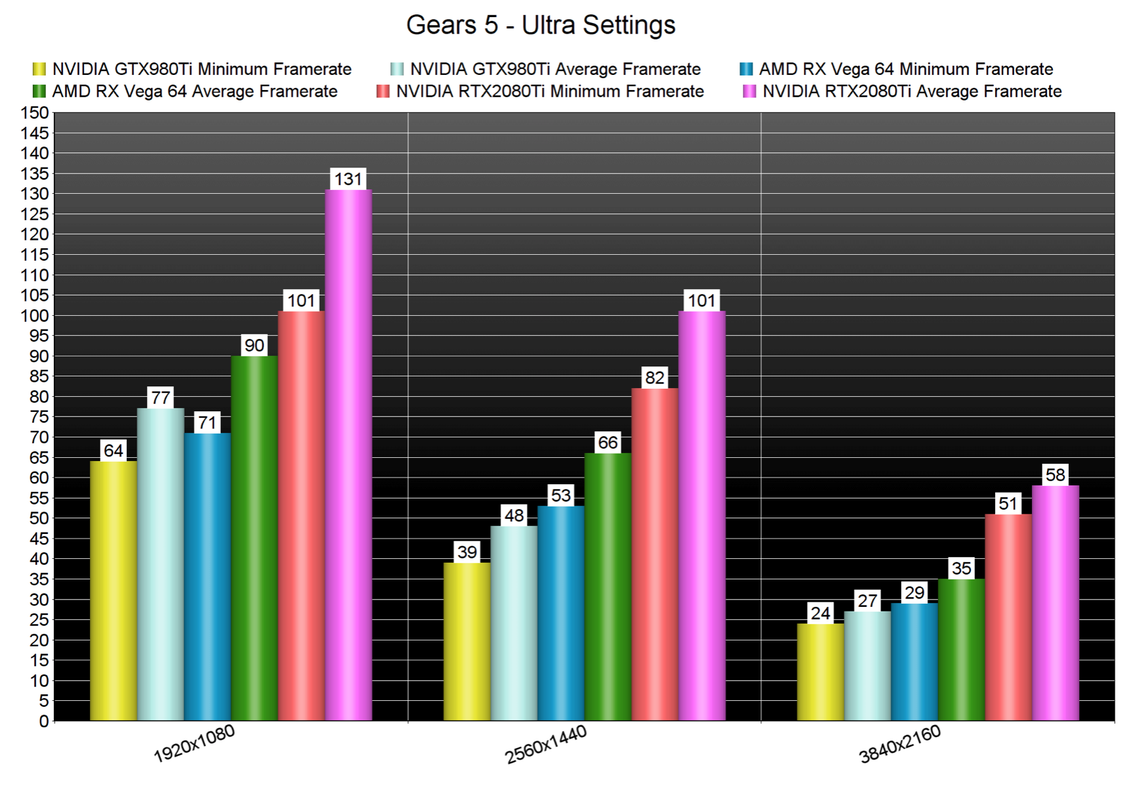

At 1080p, most of our GPUs were able to offer a 60fps experience on Ultra settings. Moreover, unlike other Unreal Engine 4 games, Gears 5 works like a dream on AMD’s hardware. The AMD Radeon RX580, for example, nearly matched the performance of the NVIDIA GTX980Ti.

At 2560×1440, the only GPUs that were able to offer a smooth experience were the AMD Radeon RX Vega 64 and the NVIDIA GeForce RTX2080Ti. As for 4K, NVIDIA’s most powerful graphics card struggled to come close to a 60fps experience.

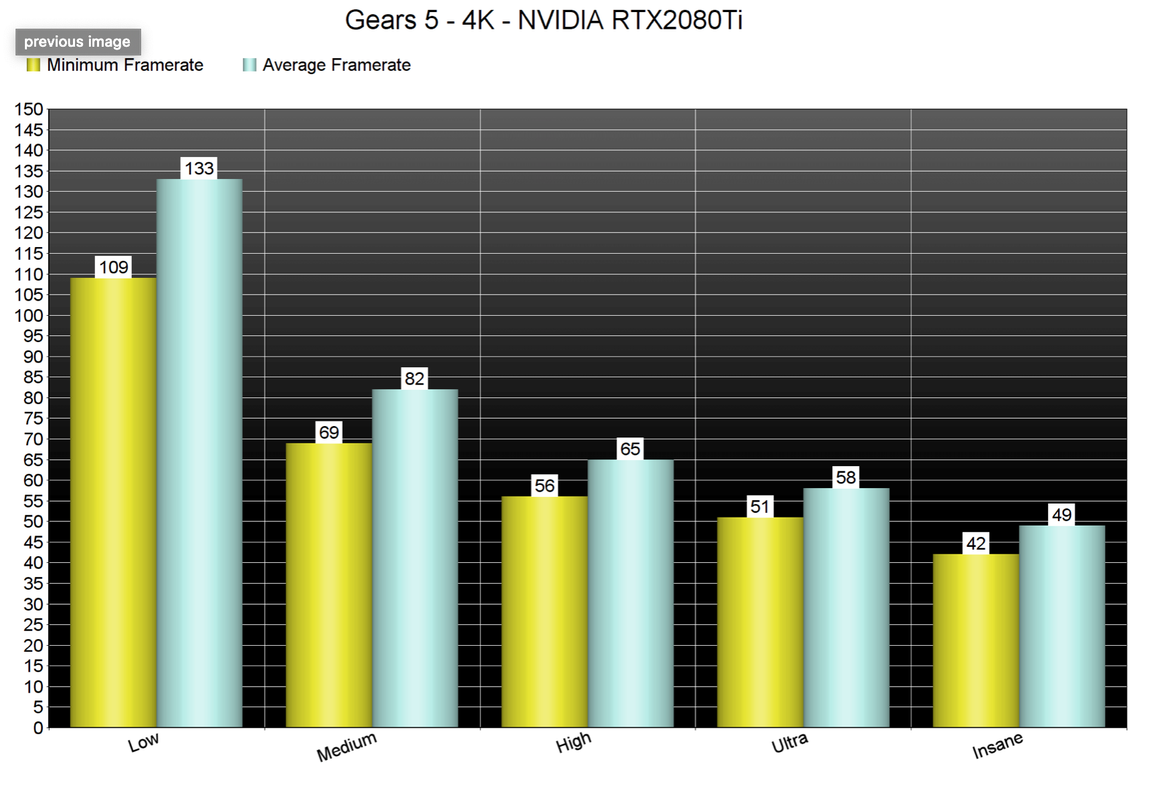

Since the game also comes with Insane graphics settings, we decided to benchmark all presets in 4K on the NVIDIA GeForce RTX2080Ti. In order to maintain a 60fps experience in 4K, PC gamers will need to run the game on High settings. That, or they can use the game’s minimum framerate option (which basically lowers the resolution whenever the framerate is about to drop below 60fps). Owners of G-Sync monitors will be able to enjoy the game in 4K with Ultra settings. As for the Insane settings, we suggest running them at a lower resolution.

Graphics-wise, Gears 5 looks great. The characters look awesome, the lip-syncing is great, the environments look amazing and there are some cool environmental destruction set-pieces. However, there are also some limitations here that really surprised us. For instance, the environmental physics and destruction during gameplay are toned down. The lighting is nowhere close to what Metro: Exodus and Control achieved. It still looks great; however, it lacks the depth that ray tracing brings to the table. Ray tracing is not a gimmick, and if you’ve played these two games (or even Quake 2 RTX), you know what I’m talking about. Don’t get me wrong; Gears 5 looks great and runs smoothly on the PC. However, Gears 5 does not showcase what future games can look like in Unreal Engine 4; it feels more like a “refinement” of what modern-day games can look like.

All in all, Gears 5 runs exceptionally well on the PC platform. The game does not require a high-end CPU, and we did not experience any stuttering issues even on our simulated dual-core system. The game also packs one hell of a set of graphics settings to tweak, and it plays great with mouse and keyboard. It’s also worth noting that Gears 5 runs incredibly well on AMD’s hardware. While it does not push the boundaries of PC graphics, Gears 5 is easily one of the most optimized PC games of 2019.

Gears 5 PC Performance Analysis


www.dsogaming.com
