I'm confused by part of this.

If you have a 120 Hz (refresh rate) monitor and your framerate is much lower than 120 Hz, you'll have lower input latency than otherwise? And if you have a 60 Hz monitor and your framerate is much HIGHER than 60 Hz, you'll have HIGHER input latency?

Both of those examples are the opposite of what I would expect.

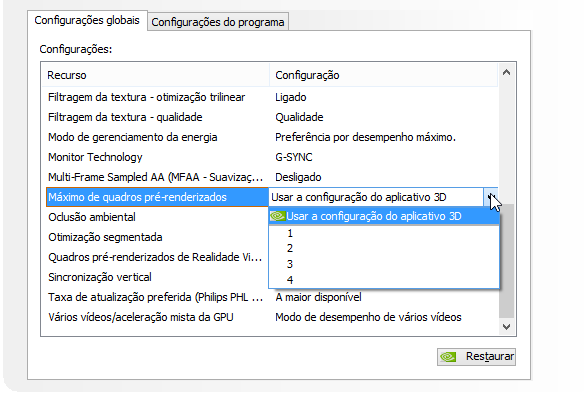

Not exactly. The input latency that comes from your frame rate (i.e. frame time) gets lower the higher your frame rate is. However, when your frame rate goes over the refresh rate with Vsync on, the back buffers fill up and the GPU stalls, which adds latency. So it's not about having a much lower frame rate than your refresh rate: as long as you're at 59 (on a 60 Hz display), the back buffers won't fill up. However, capping to 59 causes judder, so it's best to just cap at 60 (if you're aiming for 60 fps on a 60 Hz display). That eliminates some of the input latency of going over the refresh rate with Vsync without introducing judder.
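
To put rough numbers on that, here's a toy model in Python. It's only a sketch: the function names, the `queued_frames=2` default, and the assumption that each queued frame waits exactly one refresh interval are mine for illustration, not how any particular driver or engine actually measures latency.

```python
def frame_time_ms(fps: float) -> float:
    """Time to render one frame, in milliseconds."""
    return 1000.0 / fps


def vsync_latency_ms(render_fps: float, refresh_hz: float, queued_frames: int = 2) -> float:
    """Very rough latency estimate with Vsync on (hypothetical model).

    Assumption: if the GPU renders faster than the display refreshes,
    the back buffers fill up, so a new frame waits behind `queued_frames`
    already-queued frames, each shown for one refresh interval.
    Otherwise the queue stays empty and latency is about one frame time.
    """
    refresh_interval = 1000.0 / refresh_hz
    if render_fps > refresh_hz:
        # Queue is full: wait for the queued frames plus this frame's own scanout slot.
        return refresh_interval * (queued_frames + 1)
    # Queue never fills: latency is dominated by the render time.
    return frame_time_ms(render_fps)


if __name__ == "__main__":
    print(round(frame_time_ms(60), 2))           # frame time at 60 fps
    print(round(frame_time_ms(120), 2))          # frame time at 120 fps
    print(round(vsync_latency_ms(200, 60), 2))   # uncapped, well over refresh rate
    print(round(vsync_latency_ms(60, 60), 2))    # capped at refresh rate
    print(round(vsync_latency_ms(59, 60), 2))    # capped just under refresh rate
```

In this model the uncapped case comes out around three refresh intervals, while capping at 59 or 60 stays at roughly one frame time. The model doesn't capture judder, which is the reason for preferring 60 over 59.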