That doesn't mean it can run an OS, nor does it mean it doesn't need an OS at all.

I dunno if you're familiar, but I've talked in the past about how hUMA and GPGPU can be used to boost performance on stuff like AI. Most AI code is branchy decision-making stuff that runs really well on the CPU, but you also need to determine what the actor can see around them (visibility), and also how they plan to move from where they are to where they want to be (pathfinding). So you look around, and based on what you see, you decide where you'd like to be instead of where you are, and then you figure out how you're going to get there. (Ladder? Stairs?)

The problem is, the CPU is really, really shitty at visibility and pathfinding. In fact, even though they make up a fairly small portion of the total AI code, a CPU will spend about 90% of its AI cycles just on visibility and pathfinding. The other 10% of your CPU time is what makes the actual decisions.

Enter the GPU, which happens to be super awesome at both visibility and pathfinding. So we ask the GPU what the actor can see, and then the CPU can make a decision based on what the GPU spots. Not only do we figure out what the actor sees much faster overall, we've also freed up 90% of the AI core's time. Now we can make decisions for ten times as many actors, for example. Or we can teach them to make far more complicated decisions.
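To put rough numbers on the "ten times as many actors" bit, here's a back-of-the-envelope sketch. It assumes a hypothetical 4 ms per-frame AI budget and a hypothetical 1 ms of CPU time per actor, split 90/10 between visibility/pathfinding and decisions as described above. The function name and the numbers are made up for illustration; only the ratio really matters.

```python
def actors_per_frame(budget_us: int, cost_us: int, offloaded_pct: int = 0) -> int:
    """How many actors fit in the CPU's AI budget for one frame.

    budget_us:     CPU time per frame set aside for AI (hypothetical).
    cost_us:       total CPU time one actor costs when everything runs on the CPU.
    offloaded_pct: share of that per-actor cost moved off the CPU (e.g. to the GPU).
    """
    cpu_cost_us = cost_us * (100 - offloaded_pct) // 100  # what the CPU still pays per actor
    return budget_us // cpu_cost_us


before = actors_per_frame(4000, 1000)                   # everything on the CPU
after = actors_per_frame(4000, 1000, offloaded_pct=90)  # visibility + pathfinding offloaded
print(before, after, after // before)
```

The 10x only holds if the offloaded work truly comes off the CPU's plate; any time the CPU spends waiting on the GPU's results eats into it.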

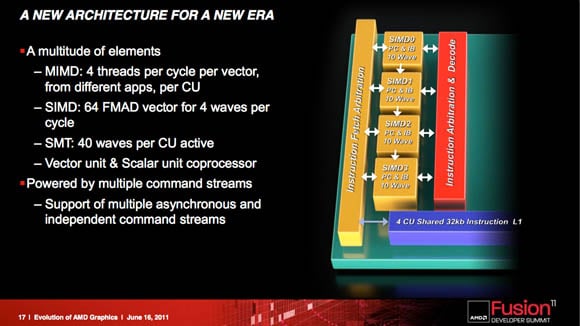

Now let's add heterogeneous queuing to the mix. Without it, the CPU basically needs to say, "What can actor1 see? Okay, then how does he get to where he's headed now? Okay, how about actor2?" That wastes a lot of the CPU's precious time, and it's unnecessary on GCN. Basically, the GPU already knows it needs to rapidly update visibility and pathfinding for every entry in the actors table. Thanks to hUMA, the very same table is shared between the GPU and CPU, so they don't need to pass it back and forth either; each can directly access any data it needs.

That means the GPU just takes the first actor, casts rays out from its face, determines which object(s) of interest those rays intersect, stores the resulting array in the actor's canSee property, and moves on to the next actor. After that, the GPU can look up each actor's currentLocation and desiredLocation, and write out a nice path for them to start following in the actors table. Hell, it can probably do the visibility and pathfinding simultaneously, since they're not directly interdependent.
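Here's roughly what that pass over the actors table looks like, written as a plain Python loop so it's easy to follow. It's a sketch under a bunch of assumptions: the world is a small 2D grid with `#` for walls, "casting rays out from its face" is simplified to a Bresenham line-of-sight test against each object of interest (no facing direction or view cone), and pathfinding is plain breadth-first search rather than whatever a real engine would use. `Actor`, `line_cells`, `has_line_of_sight`, `bfs_path` and `update_actors` are hypothetical names; `canSee`, `currentLocation` and `desiredLocation` are the fields from the paragraph above. On real hardware, each iteration of the loop would be its own work-item in a GPU kernel.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Tuple

Point = Tuple[int, int]  # (x, y); the map is indexed as grid[y][x]


@dataclass
class Actor:  # one row of the (hypothetical) shared actors table
    currentLocation: Point
    desiredLocation: Point
    canSee: List[str] = field(default_factory=list)
    path: List[Point] = field(default_factory=list)


def line_cells(a: Point, b: Point) -> Iterator[Point]:
    """Bresenham's line: every grid cell a ray from a to b passes through."""
    (x0, y0), (x1, y1) = a, b
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        yield (x0, y0)
        if (x0, y0) == (x1, y1):
            return
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def has_line_of_sight(grid: List[str], a: Point, b: Point) -> bool:
    """True if no wall cell lies on the ray from a to b."""
    return all(grid[y][x] != "#" for x, y in line_cells(a, b))


def bfs_path(grid: List[str], start: Point, goal: Point) -> List[Point]:
    """Shortest 4-connected path from start to goal, or [] if unreachable."""
    height, width = len(grid), len(grid[0])
    prev: Dict[Point, Point] = {start: start}  # start maps to itself as a sentinel
    frontier = deque([start])
    while frontier:
        x, y = frontier.popleft()
        if (x, y) == goal:
            break
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            nx, ny = nxt
            if 0 <= nx < width and 0 <= ny < height and grid[ny][nx] != "#" and nxt not in prev:
                prev[nxt] = (x, y)
                frontier.append(nxt)
    if goal not in prev:
        return []
    path = [goal]
    while path[-1] != start:  # walk back from goal to start
        path.append(prev[path[-1]])
    return path[::-1]


def update_actors(grid: List[str], actors: List[Actor], objects: Dict[str, Point]) -> None:
    """One pass over the actors table; no per-actor instructions from anyone."""
    for actor in actors:
        # Visibility: which objects of interest have a clear line from the actor?
        actor.canSee = [name for name, pos in objects.items()
                        if has_line_of_sight(grid, actor.currentLocation, pos)]
        # Pathfinding: reads the same inputs, writes a different field.
        actor.path = bfs_path(grid, actor.currentLocation, actor.desiredLocation)


# Tiny demo world: a wall across the middle row.
grid = [
    ".....",
    ".###.",
    ".....",
]
objects = {"door": (4, 0), "ladder": (2, 2)}
actors = [Actor((0, 0), (4, 2)), Actor((2, 0), (2, 2))]
update_actors(grid, actors, objects)
```

Note that the visibility step and the pathfinding step only read currentLocation, desiredLocation and the map, and each writes its own field, so nothing stops them from running side by side.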

All of this occurs with no input from the CPU whatsoever. "But wait! All of the input is coming from the CPU!" Sure, but the GPU doesn't care, or even know, whether it's looking up data the CPU wrote moments ago or reading from a script written a year ago. It's just reading two points in space and tracing a line between them through the environment, which it also has a record of. All the GPU knows is "input comes from A; output goes in B," and it just does that, again, and again, and again, because that's what it does.

Meanwhile, from the CPU's perspective, everything is equally simple, but reversed: "input comes from B; output goes in A."
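As a loose analogy for that A/B arrangement, here's a producer/consumer sketch using Python's standard queue and threading modules. To be clear, this is not the HSA heterogeneous queuing API; a thread stands in for the GPU, two queues stand in for A and B, and the "work" is a Manhattan distance placeholder instead of real pathfinding. `gpu_worker`, `run_frame` and the job tuple layout are all hypothetical. What it's meant to show is that the worker never asks the CPU what to do next: it already knows what a job looks like, so it just pulls from A and pushes to B.

```python
import queue
import threading
from typing import Dict, List, Tuple

# (actor_id, currentLocation, desiredLocation); a made-up job format
Job = Tuple[int, Tuple[int, int], Tuple[int, int]]


def gpu_worker(queue_a: queue.Queue, queue_b: queue.Queue) -> None:
    """Stand-in for the GPU: input comes from A, output goes in B, again and again."""
    while True:
        job = queue_a.get()
        if job is None:  # sentinel: no more work this frame
            queue_b.put(None)
            return
        actor_id, (x0, y0), (x1, y1) = job
        # Placeholder work: Manhattan distance stands in for a real path search.
        queue_b.put((actor_id, abs(x1 - x0) + abs(y1 - y0)))


def run_frame(jobs: List[Job]) -> Dict[int, int]:
    """Stand-in for the CPU: output goes in A, input comes from B."""
    queue_a: queue.Queue = queue.Queue()
    queue_b: queue.Queue = queue.Queue()
    worker = threading.Thread(target=gpu_worker, args=(queue_a, queue_b))
    worker.start()
    for job in jobs:
        queue_a.put(job)  # fire and forget; no per-actor round trip
    queue_a.put(None)
    results: Dict[int, int] = {}
    while (item := queue_b.get()) is not None:
        actor_id, distance = item
        results[actor_id] = distance
    worker.join()
    return results
```

In this sketch the CPU side blocks until B drains, just to keep it short; in an engine you'd more likely pick up whatever is in B on the next AI tick and keep going.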

So, yeah, that's what hQ is all about. It doesn't change what the GPU is capable of; it just means the GPU already knows what it's supposed to be doing with the data, basically. Not only does that free up time on the CPU, it reduces interdependency, which helps you make your engine not just asynchronous but also largely autonomous. Ideally, you want each task to be completely independent of the others. That lets you do all kinds of neat tricks. For example, you obviously want rendering done at 120 Hz in VR, but does your AI really need to make 120 decisions a second? I don't know that humans change their minds that quickly. Running your AI at 30 Hz or even 15 Hz would cut its decision work to a quarter or an eighth of what 120 Hz costs, and it seems like it would still provide sufficient realism, especially if you're running more sophisticated scripts now with all the time you saved offloading visibility and pathfinding.
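A minimal sketch of decoupled tick rates, assuming a single frame loop running at the render rate and an AI rate that divides it evenly. `simulate` and its parameters are made up for illustration; a real engine would more likely drive each subsystem from its own timer or fixed-timestep accumulator.

```python
from typing import Dict


def simulate(seconds: int, render_hz: int = 120, ai_hz: int = 15) -> Dict[str, int]:
    """Count how often each subsystem runs when AI ticks slower than rendering."""
    if render_hz % ai_hz:
        raise ValueError("this sketch assumes ai_hz divides render_hz evenly")
    frames_per_ai_tick = render_hz // ai_hz  # e.g. 120 // 15 == 8
    counts = {"render": 0, "ai": 0}
    for frame in range(seconds * render_hz):
        if frame % frames_per_ai_tick == 0:
            counts["ai"] += 1  # decisions: read canSee/paths, write new goals
        counts["render"] += 1  # every frame draws, whether or not the AI ran
    return counts
```

With the defaults, one simulated second gives 120 render frames and 15 AI ticks, so the AI only runs on every 8th frame.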