Like, working working? As in, Sony could pay UW a license fee today, and start putting this into Morpheus tomorrow?

UW has it working as a prototype in a lab. The UW technology itself was sold to Microvision, which seems to have abandoned the project at the end of the VR craze in favor of pico projectors for smartphones.

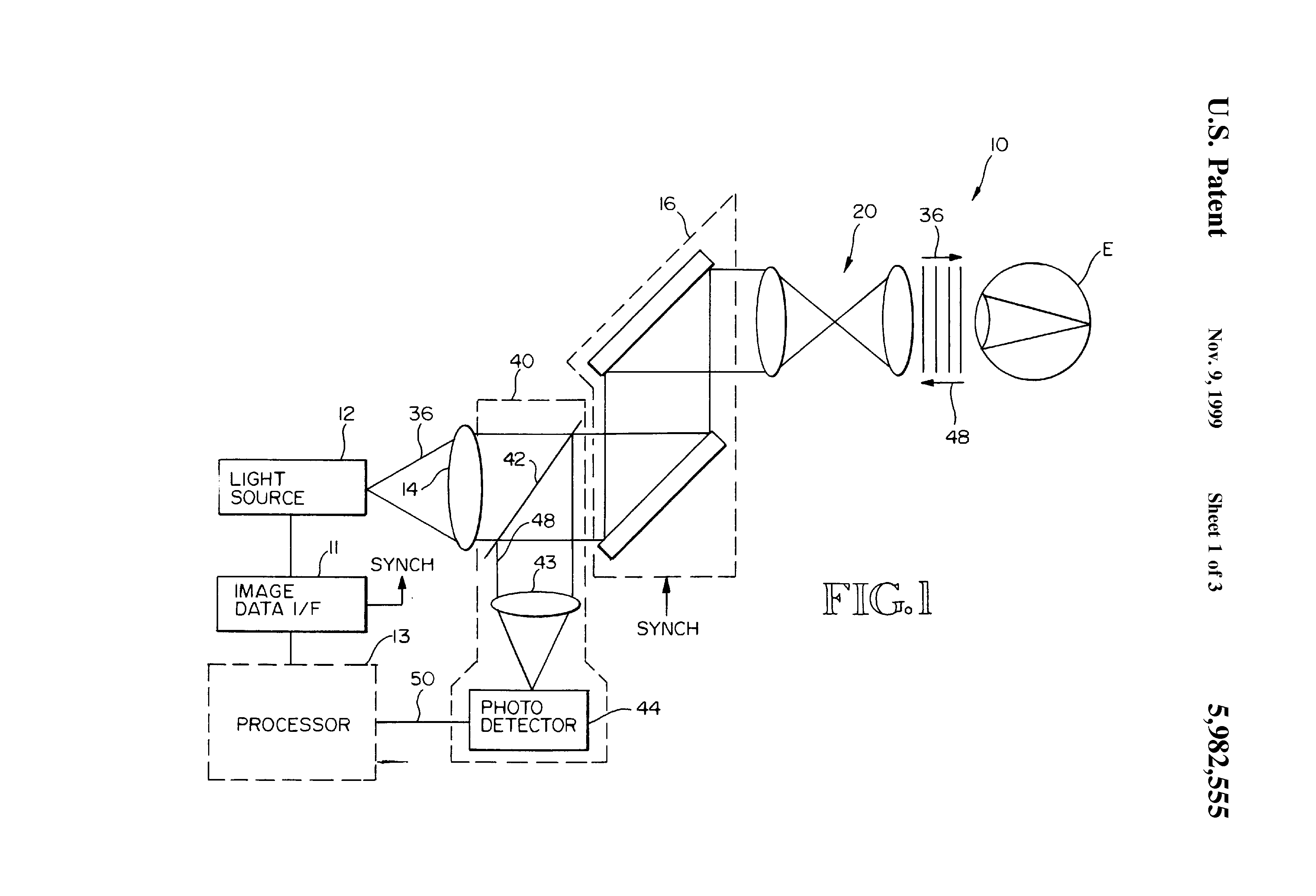

I don't think it's a hard thing to do anyway. It's just a beamsplitter + photodetector, so Sony especially should have little trouble adapting their own technology from 3-chip video cameras, apart from cost issues.

Source:

http://www.hitl.washington.edu/research/true3d/ (note: seems like optics have improved by a lot since then, huh)

What's the deal with VRDs anyway? Wiki sez they have resolution "approaching the limit of human vision," and great color reproduction. It also lists a FoV of 120°, but for some reason the Avegant Glyph is only 45°, so I'm not sure what's going on there.

Wikipedia links to the prototype above, which has a poor VGA display with a 40-degree FoV. Seems like they copied their wishlist before they edited it.

Source:

http://www.hitl.washington.edu/publications/p-95-1/

Modern digital micromirror devices are commercially available from many manufacturers. For example, Avegant uses a 0.3" 720p chip from Texas Instruments. TI also has a 1.3" 4K chip, but the price might be prohibitive.

And it doesn't have to be a DMD. LCoS would work as well.

Source:

http://www.ti.com/lit/ds/symlink/dlp3114.pdf

Beam splitters and photodetectors aren't hard to find either. It's all a question of cost.

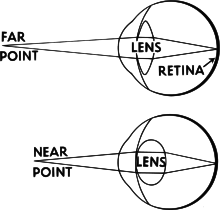

Okay, so when the user is looking at a fly on their nose, we want to defocus everything behind it? And eye tracking gives us that?

Something like that. At a minimum, we want the focus depth of the object we are looking at to match its stereopsis depth, whether or not our eyes are moving. Stereopsis = the depth we get from viewing with two eyes. Ideally focus depth and object depth are equal for the whole scene, which would work without eye tracking. I'm not sure how that would work with VRDs, but it works with light field rendering.

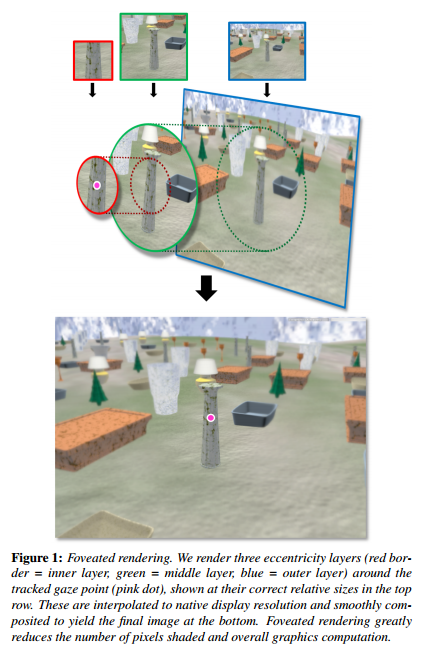

Wow, that is pretty tiny. So if I'm understanding your other post correctly, this gives us a 100-fold increase in rendering efficiency? So if a PS4 is running a game at 1MP per eye and 60 Hz, then with foveated rendering, we can jump to 16MP per eye and 375 Hz?? So we could get visuals as good or better than Second Son, at 16x the resolution at 120 Hz, or what?

Not quite. The benefit gets smaller at lower resolutions and once you account for tracking latency. Also, rasterization is only one part of the rendering cost. But otherwise yeah, that's the gist of the idea.
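For reference, the numbers in the question do multiply out to 100x if you only count pixels times refresh rate. A quick sketch of that arithmetic (the helper name `pixel_throughput` is made up for illustration, and this deliberately ignores tracking latency and every non-raster cost, so it is an upper bound rather than a prediction):

```python
def pixel_throughput(megapixels_per_eye: float, refresh_hz: float) -> float:
    """Naive raster load per eye in megapixels per second (hypothetical helper)."""
    return megapixels_per_eye * refresh_hz


baseline = pixel_throughput(1, 60)     # 1 MP per eye at 60 Hz
target = pixel_throughput(16, 375)     # 16 MP per eye at 375 Hz

ratio = target / baseline                                   # 16 * 375 / 60
ratio_at_120 = pixel_throughput(16, 120) / baseline         # 16 MP per eye at 120 Hz

print(f"16 MP @ 375 Hz vs 1 MP @ 60 Hz: {ratio:.0f}x")
print(f"16 MP @ 120 Hz vs 1 MP @ 60 Hz: {ratio_at_120:.0f}x")
```

So 16 MP at 120 Hz would only use about a third of a 100x budget on paper, which leaves some room for the losses mentioned above.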

And with a larger field of view, we actually get a larger performance multiplier, since a proportionally smaller section of the display gets a full pass?

yep

I was wondering about that, but I wasn't sure if it would be a good idea or not. So we'd refresh the in-focus area at like 120 Hz while the rest is refreshed at 60 Hz? Or would we need to refresh at like 180/90 or 240/120 Hz to avoid flicker in the periphery?

The periphery is less important for detail, but to avoid low-persistence flicker, the refresh rate would actually have to be higher in the periphery.

The latest model for flicker fusion is below (see my other NeoGAF post: http://www.neogaf.com/forum/showthread.php?t=773531 ):

Code:

CFF = (0.24 E + 10.5) (log L + log p + 1.39 log d - 0.0426 E + 1.09) Hz

E = how far from center of your gaze (degrees)

L = how bright (eye luminance; a 215 cd/m² full white screen gives log L = 3.45)

d = field of view (degrees)

p = eye adaptation (pupil area in mm²; log p is typically 0.5-1.3)

So it's something like 80/90/100 Hz for the three layers (I might calculate exact values later).
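A minimal sketch of how the formula above could be evaluated, in case anyone wants to play with it. Assumptions that are mine and not part of the model as posted: the logs are base 10, log L and log p are passed in directly as log values (as in the definitions above), and the example inputs for log p (1.0) and d (100 degrees) are placeholders. The function name `cff_hz` is made up.

```python
import math


def cff_hz(ecc_deg: float, log_l: float, log_p: float, d_deg: float) -> float:
    """Critical flicker fusion frequency in Hz, per the formula quoted above.

    ecc_deg: E, distance from the center of gaze in degrees
    log_l:   log L (3.45 for a 215 cd/m2 full white screen)
    log_p:   log p (typically 0.5-1.3)
    d_deg:   d in degrees; base-10 log is assumed here
    """
    slope = 0.24 * ecc_deg + 10.5
    log_term = (log_l + log_p + 1.39 * math.log10(d_deg)
                - 0.0426 * ecc_deg + 1.09)
    return slope * log_term


# Placeholder inputs: full white screen, mid-range log p, d = 100 degrees.
for ecc in (0, 10, 20):
    print(f"E = {ecc:2d} deg: CFF ~ {cff_hz(ecc, 3.45, 1.0, 100):.1f} Hz")
```

With these placeholder inputs the value rises with eccentricity, which matches the point about the periphery needing the higher refresh rate. The exact per-layer numbers depend heavily on which log p and d you plug in.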

What are your thoughts on "giant" microdisplays? Can they just re-tool a fabrication line to start producing 2.8" 16MP displays with "current" technology, or would additional research need to be done to make them larger? What about arrays? Or should we be looking at VRDs? Or something else?

As I said above, 4K DMDs are already available. It's just a matter of price. A 1080p chip costs $50 on Amazon.

See:

http://www.amazon.com/s/ref=nb_sb_n...s&field-keywords=dlp+chip&rh=i:aps,k:dlp+chip

Can HDMI even carry this much data, or would the headset need to do its own upscaling and compositing of the component layers?

Not HDMI, but DisplayPort 1.3 is enough for 8K at 60 Hz or 4K at 120 Hz, and should be available in the second half of this year.
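A rough back-of-the-envelope check of those numbers. Assumptions here are mine: a DisplayPort 1.3 payload of 25.92 Gbit/s (four HBR3 lanes after 8b/10b coding), 24 bits per pixel, no blanking overhead, and a hypothetical 4000x4000 layout for a 16 MP per-eye panel. As far as I know, the 8K at 60 Hz mode relies on 4:2:0 chroma subsampling, which is why it appears twice below.

```python
DP13_PAYLOAD_GBPS = 25.92  # assumed DP 1.3 payload: 4 lanes of HBR3 after 8b/10b coding


def raw_gbps(width: int, height: int, hz: int,
             bits_per_pixel: int = 24, eyes: int = 1) -> float:
    """Uncompressed pixel data rate in Gbit/s, ignoring blanking intervals."""
    return width * height * hz * bits_per_pixel * eyes / 1e9


modes = {
    "4K @ 120 Hz, 24 bpp": raw_gbps(3840, 2160, 120),
    "8K @ 60 Hz, 24 bpp": raw_gbps(7680, 4320, 60),
    "8K @ 60 Hz, 4:2:0 (12 bpp)": raw_gbps(7680, 4320, 60, bits_per_pixel=12),
    # Hypothetical 16 MP per-eye panel, both eyes sent at full resolution.
    "2 x 16 MP @ 120 Hz, 24 bpp": raw_gbps(4000, 4000, 120, eyes=2),
}

for name, gbps in modes.items():
    verdict = "fits" if gbps <= DP13_PAYLOAD_GBPS else "does not fit"
    print(f"{name}: {gbps:.2f} Gbit/s, {verdict}")
```

Sending two full 16 MP images at 120 Hz is far beyond the link, so the headset would most likely have to receive the low-resolution and high-resolution layers separately and composite them itself.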