This question is almost impossible to answer because OpenGL by itself is just a front-end API, and as long as an implementation adheres to the specification and the outcome conforms to it, it can be done any way you like.

The question may have been: "How does an OpenGL driver work on the lowest level?" This is again impossible to answer in general, as a driver is closely tied to some piece of hardware, which may again do things however the developer designed it.

So the question should have been: "How does it look on average behind the scenes of OpenGL and the graphics system?". Let's look at this from the bottom up:

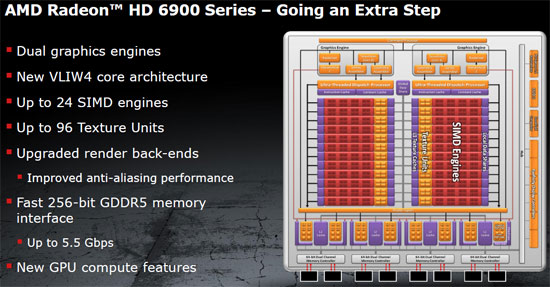

1. At the lowest level there's some graphics device. Nowadays these are GPUs, which provide a set of registers controlling their operation (which registers exactly is device dependent), have some program memory for shaders, bulk memory for input data (vertices, textures, etc.) and an I/O channel to the rest of the system over which they receive/send data and command streams.

2. The graphics driver keeps track of the GPU's state and of all the resources of the application programs that make use of the GPU. It is also responsible for conversion or any other processing of the data sent by applications (convert textures into the pixel format supported by the GPU, compile shaders into the machine code of the GPU). Furthermore it provides some abstract, driver-dependent interface to application programs.

3. Then there's the driver-dependent OpenGL client library/driver. On Windows this gets loaded by proxy through opengl32.dll; on Unix systems it resides in two places:

   * the X11 GLX module and the driver-dependent GLX driver
   * /usr/lib/libGL.so, which may contain some driver-dependent stuff for direct rendering

   On MacOS X this happens to be the "OpenGL Framework".

   It is this part that translates OpenGL calls, as you make them, into calls to the driver-specific functions in the part of the driver described in (2).

4. Finally the actual OpenGL API library, opengl32.dll on Windows and /usr/lib/libGL.so on Unix; this mostly just passes down the commands to the OpenGL implementation proper.
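To make the "mostly just passes down the commands" idea concrete, here is a minimal sketch of a front-end library that forwards calls through a dispatch table. The class names `FakeDriverClient` and `ApiLibrary` are made up for illustration and do not correspond to any real driver interface; only the value of `GL_COLOR_BUFFER_BIT` is taken from the real OpenGL headers.

```python
GL_COLOR_BUFFER_BIT = 0x00004000  # value from the OpenGL headers


class FakeDriverClient:
    """Hypothetical stand-in for the vendor's client library."""

    def __init__(self):
        self.log = []  # records what the "driver" was asked to do

    def clear_color(self, r, g, b, a):
        self.log.append(("clear_color", (r, g, b, a)))

    def clear(self, mask):
        self.log.append(("clear", (mask,)))


class ApiLibrary:
    """Hypothetical stand-in for the thin API library: look up, then forward."""

    def __init__(self, driver):
        # The table is filled in once, when the driver is loaded.
        self._dispatch = {
            "glClearColor": driver.clear_color,
            "glClear": driver.clear,
        }

    def call(self, name, *args):
        try:
            entry = self._dispatch[name]
        except KeyError:
            raise NotImplementedError(f"{name} is not provided by this driver") from None
        return entry(*args)


driver = FakeDriverClient()
gl = ApiLibrary(driver)
gl.call("glClearColor", 0.0, 0.0, 0.0, 1.0)
gl.call("glClear", GL_COLOR_BUFFER_BIT)
```

The point of the indirection is that the application only ever links against the front end; which functions sit behind the table is decided when the driver gets loaded.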

How the actual communication happens cannot be generalized:

In Unix the 3<->4 connection may happen either over sockets (yes, it may, and does, go over the network if you want it to) or through shared memory. In Windows the interface library and the driver client are both loaded into the process address space, so that's not so much communication as simple function calls and variable/pointer passing. In MacOS X this is similar to Windows, only that there's no separation between OpenGL interface and driver client (that's the reason why MacOS X is so slow to keep up with new OpenGL versions: it always requires a full operating system upgrade to deliver the new framework).
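As a rough illustration of the socket variant, the sketch below serializes one command into a byte packet, sends it over a local socket pair and decodes it on the other side. The opcode and the wire layout are invented for this example; the real GLX protocol is defined differently.

```python
import socket
import struct

# Hypothetical opcode and layout: opcode + four 32-bit floats, little endian.
OP_CLEAR_COLOR = 1
PACKET_FORMAT = "<I4f"


def encode_clear_color(r, g, b, a):
    """Turn a function call into bytes that can travel over a socket."""
    return struct.pack(PACKET_FORMAT, OP_CLEAR_COLOR, r, g, b, a)


def decode_command(packet):
    """Recover the opcode and arguments on the receiving side."""
    opcode, r, g, b, a = struct.unpack(PACKET_FORMAT, packet)
    return opcode, (r, g, b, a)


def send_and_receive(r, g, b, a):
    # socketpair() stands in for the connection between the two libraries.
    client, server = socket.socketpair()
    try:
        client.sendall(encode_clear_color(r, g, b, a))
        size = struct.calcsize(PACKET_FORMAT)
        packet = server.recv(size, socket.MSG_WAITALL)
        return decode_command(packet)
    finally:
        client.close()
        server.close()


result = send_and_receive(0.0, 0.5, 1.0, 1.0)
```

In the in-process case on Windows none of this encoding is needed; the same call is just a jump through a function pointer.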

Communication between 3<->2 may go through ioctl, read/write, or through mapping some memory into the process address space and configuring the MMU to trigger some driver code whenever that memory is changed. This is quite similar on any operating system, since you always have to cross the kernel/userland boundary: ultimately you go through some syscall.
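The difference between the explicit write path and the memory-mapping path can be tried out on a Unix system with an ordinary file. This is only a sketch: a temporary file stands in for a device node, the command strings are made up, and no real driver gets triggered by the stores.

```python
import mmap
import os
import tempfile


def write_via_syscall(fd, payload):
    """Explicit write: every call crosses the user/kernel boundary."""
    os.lseek(fd, 0, os.SEEK_SET)
    return os.write(fd, payload)


def write_via_mapping(fd, payload):
    """Map the file into our address space, then use plain memory stores."""
    with mmap.mmap(fd, len(payload)) as view:
        view[:len(payload)] = payload
        view.flush()


def demo():
    # The temporary file is a stand-in for a device node (assumption).
    fd, path = tempfile.mkstemp()
    try:
        os.ftruncate(fd, 16)
        write_via_syscall(fd, b"CMD_DRAW_SYSCALL")
        first = os.pread(fd, 16, 0)
        write_via_mapping(fd, b"CMD_DRAW_MAPPING")
        second = os.pread(fd, 16, 0)
        return first, second
    finally:
        os.close(fd)
        os.unlink(path)


first, second = demo()
```

With a real device, the mapping would typically be backed by driver-managed or device memory rather than file pages, so the stores end up where the driver or the GPU can see them.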

Communication between the system and the GPU happens through the peripheral bus and the access methods it defines (PCI, AGP, PCI-E, etc.), which work through port I/O, memory-mapped I/O, DMA and IRQs.
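A toy model of memory-mapped I/O may help here. It assumes a made-up device with three 32-bit registers: the driver writes the address and size of a command buffer and then sets a "doorbell" register to tell the device to fetch it. The register names and offsets are hypothetical, and a `bytearray` stands in for the mapped register window.

```python
import struct

# Hypothetical register offsets; real ones are device specific.
REG_CMD_BASE = 0x00
REG_CMD_SIZE = 0x04
REG_DOORBELL = 0x08


class FakeMmioWindow:
    """A bytearray pretending to be a mapped register window."""

    def __init__(self, size=16):
        self._mem = bytearray(size)

    def write32(self, offset, value):
        struct.pack_into("<I", self._mem, offset, value)

    def read32(self, offset):
        return struct.unpack_from("<I", self._mem, offset)[0]


def submit(window, buffer_address, buffer_size):
    """Describe the command buffer to the device, then ring the doorbell."""
    window.write32(REG_CMD_BASE, buffer_address)
    window.write32(REG_CMD_SIZE, buffer_size)
    window.write32(REG_DOORBELL, 1)


window = FakeMmioWindow()
submit(window, 0x1000, 64)
```

On real hardware the device would then typically pull the command buffer in via DMA and raise an IRQ once it is done, which is where the other access methods come into play.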