I think we're not getting anywhere, so let's do it step by step.

Let's consider a gamer playing 1.5m away from the camera, just in front of it. The guy is 1.8m tall. There is a wall 2m to the left, another one 2m to the right, and a back wall 5m in the back.

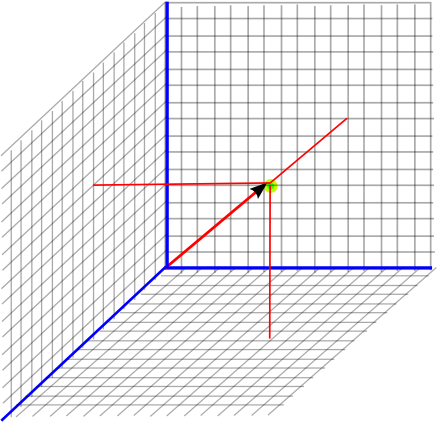

The camera is the origin (coordinates (X,Y,Z) = (0,0,0)). (X is the Axis towards the player, Y is lateral, Z is the vertical axis)

Let's say we want to track the player's head and display it in the game (I single out one body part, but it can be generalized to all of them).

Because the camera does depth measurement, it knows that the head is at coordinates (1.5, 0, 1.8). It also "knows" that there are planes at Y = -2, Y = 2 and Z = 5 (it doesn't really need to know that they are planes actually, only that the given pixels are part of a background).

With that single data, the software can display a virtual representation of the head in an arbitrary referential. If the game has the same origin, it's only drawing a sphere in its own referential at (1.5, 0, 1.8). If it has another one, it's just a matter of translation and rotation to display it somewhere else.

It can also display the walls, or any other object it located, but all of them are unrelated.

If the user moves his head, for example strafing left and right, the camera will re-estimate its position and track it in its referential. The head position will be estimated to (1.5, *, 1.8) , and the position in the game will be updated accordingly.

At no moment the position of the head needs to be matched to the environment, nor would it make its tracking better. There is no need to consider any other data than the raw output of the depth measurement, that is absolute position in a XYZ referential.