I thought about doing a deep dive into the next generation consoles from a developer's standpoint in a new thread, but I can't open threads yet, so I am posting it here. I would like to take you on a journey where we investigate the specifications and what they could mean for game development and the technologies they enable. I apologize in advance for any grammatical, spelling or other errors. Remember that I am only human and have very likely made some mistakes (please point them out if you can).

This will be a dive into the specifications of both consoles, so we will compare them and look at what each console can provide in each specific area and what kind of differences we can expect. That being said, this is still very much speculation on my part, since I have not had the chance to work with either console.

Starting with the

CPU:

The processors in both consoles are going to be nearly identical. We don't know every specific detail yet, but we can expect them to be very much the same in terms of design and architecture.

Xbox:

The Series X is running an 8 core 16 thread cpu @3.6 GHz with smt enabled and @3.8 GHz with smt disabled.

PlayStation:

The PlayStation 5 is running an 8 core 16 thread cpu at up to 3.5 GHz with a variable frequency (more on the variable frequency later).

We will start by talking about simultaneous multithreading (smt):

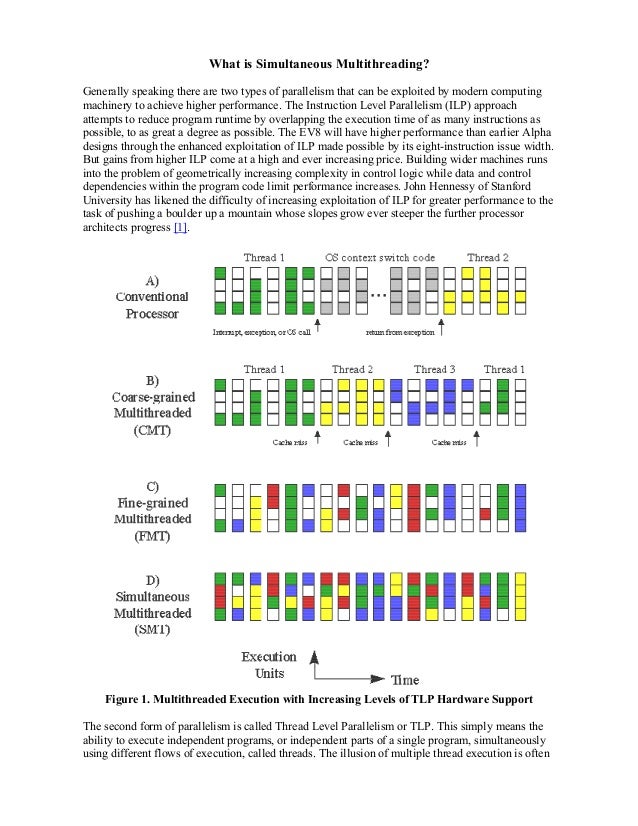

Smt is a multithreading technology that lets each physical cpu core present itself as two logical threads, so each core can work on two workloads at the same time. This parallelism lets the cpu use its execution resources much more efficiently and allows for faster workload completion if used correctly. The technology relies heavily on programming efficiency, as programmers must distribute the different workloads among the threads of the cpu. Smt will shine especially in cpu intensive games, for instance ones that rely on physics-based calculations like the Euphoria engine used by Rockstar Games. AI in general relies a lot on the cpu, since most of the AI logic is calculated and processed by the cpu.

This technology is therefore a great fit for open world games, which need a lot of threads for all the different things going on at the same time. The following picture shows the difference between linear workload processing and parallel workload processing.

The cpu without smt does one task after the other, which results in idle time, visualized by the white spots in the picture. While this is an extreme case, there is a lot of idle time in which the processor does nothing but wait for the next instruction (workload), and that processing power is simply lost. The smt processor, on the other hand, shows far fewer empty spaces, indicating a more efficient approach of distributing different types of tasks to its threads. So, in short, if used correctly the same workload can be processed much more efficiently by spreading it among the threads.
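To make the idle time argument a bit more concrete, here is a minimal toy model in Python. It is only a sketch under loud assumptions and not how a real Zen 2 core schedules work: each thread is a string of cycles, where `C` is a cycle that needs the core's single execution slot and `S` is a cycle spent stalled waiting for data. The function names and the one-slot-per-cycle rule are made up for illustration.

```python
def cycles_without_smt(threads):
    """One hardware thread: tasks run back to back, stalls included."""
    return sum(len(t) for t in threads)


def cycles_with_smt(threads):
    """Toy smt core: stalls of all threads elapse in parallel,
    but only one thread may use the execution slot per cycle."""
    pos = [0] * len(threads)  # progress of each thread
    cycles = 0
    while any(p < len(t) for p, t in zip(pos, threads)):
        cycles += 1
        slot_free = True
        for i, t in enumerate(threads):
            if pos[i] >= len(t):
                continue  # this thread is already finished
            if t[pos[i]] == "S":
                pos[i] += 1  # a stall cycle passes regardless of the slot
            elif slot_free:
                pos[i] += 1  # compute cycle takes the execution slot
                slot_free = False
    return cycles


work = ["CSSC", "CSSC"]  # hypothetical workloads with stalls in the middle
print(cycles_without_smt(work))  # 8
print(cycles_with_smt(work))     # 5
```

In this toy case the second thread fills the gaps left by the first one. With pure compute workloads such as `["CCCC", "CCCC"]` the model shows no gain at all, which matches the point that smt only helps when there is idle time to fill.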

Calculation heavy workloads (physics engine, object streaming, ai, destruction engine, etc.) benefit the most from this technology, so we can expect some nice improvements during the next generation.

The processor in our current generation consoles is severely underpowered and is holding back most of the other components. It is also the main reason why most games lack good framerates; this is especially true on the PS4 Pro and Xbox One X. It is why games rarely hit 60 fps, but with the next gen consoles 60 fps is very doable, while genres like racing could even opt for 120 fps. This is very exciting indeed and will surely enrich game worlds to the point where, in direct comparison, current generation games are clearly distinguishable from next generation ones.

Remember, the biggest reason we are not seeing more ai characters walking around in our games is mostly the lack of cpu power. A good example of this is Assassin’s Creed Unity, where the PlayStation 4 and Xbox One really struggle to keep up for the most part. The frame rate even dips below 20 fps, which makes the game very hard to enjoy.


Here is some gameplay analysis showing the fps on both consoles for comparison.

The Zen 2 processors in both new consoles will allow developers to put much more ai on the screen at the same time, without having to worry that the frame rate will dip into the low 20s. They could also allow for much smarter ai with many more (thinking) operations per second, enabling deeper and smarter ai logic.

The Xbox Series X allows smt to be disabled, which in return clocks the cpu an additional 200 MHz higher. This will most likely be used for backwards compatibility or for engines which do not yet make use of smt. Games which do not need a lot of parallel compute but benefit from any additional frequency boost will probably also make use of this (games like Counter Strike Global Offensive).

Now before we jump to the next part, I want to talk a bit about the variable clock frequency of the ps5 and why it is less of a big deal than people think. The cpu rarely runs at a sustained 100% load over a longer period of time, so holding it at a fixed maximum clock wastes power and produces unnecessary heat. In a closed system such as a gaming console, engineers have to keep power consumption and heat to a minimum, because going above the limits of the cooling inside a closed system brings enormous problems with it. Some of the early PlayStation 4 adopters experienced this themselves.

The apus inside the consoles are usually more power efficient compared to the more traditional pc design of having the two parts separated. At the same time, both components share the same die and have to rely on the same cooling element. This means that the cpu could take away upclock potential from the gpu, which is much more likely to need the additional clock speed. While the cpu is not fully utilized or simply is not needed as much, it can slightly underclock itself to give the gpu more headroom. Power consumption goes hand in hand with clock speed, and the relationship is never linear: the higher the clock speed, the more steeply power consumption rises. Lowering the cpu clock by only a few percent should therefore be more than enough to give the gpu its desired maximum clock speed of 2.23 GHz.
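The non-linear relationship between clock speed and power can be sketched with a common rule of thumb. Dynamic power scales roughly with capacitance × voltage² × frequency, and if voltage has to rise roughly in step with frequency, power ends up scaling with about the cube of the clock. The cubic exponent is an assumption for illustration, not a measured figure for either console.

```python
def relative_power(freq_ratio: float, exponent: float = 3.0) -> float:
    """Power relative to full clock, assuming power ~ frequency ** exponent.

    The default exponent of 3 is a rule-of-thumb assumption.
    """
    return freq_ratio ** exponent


for drop_pct in (1, 2, 3, 5):
    ratio = 1 - drop_pct / 100
    saved_pct = (1 - relative_power(ratio)) * 100
    print(f"{drop_pct}% lower clock -> roughly {saved_pct:.1f}% less power")
```

Under this assumption a clock reduction of a few percent frees up a noticeably larger share of the power budget, which is the effect the variable frequency design relies on.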

This is AMD's SmartShift technology, with which the cpu can help the gpu by leaving more of the shared power budget to the gpu to squeeze out a bit more performance. Simply said, if the cpu is underused it will use less power, so that the gpu can use more while the whole chip stays at the power limit the engineers originally intended. This will not be a massive jump in performance and will result in only a few fps in the real world, but again, every bit helps.
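The shared power budget idea can be illustrated with a very small sketch. This is not AMD's actual algorithm; the function name and all wattage numbers are hypothetical and only show the principle that whatever the cpu does not draw becomes available to the gpu.

```python
def split_power_budget(total_w, cpu_demand_w, gpu_demand_w):
    """Toy model of a shared power limit (all values hypothetical).

    The cpu gets what it asks for, the gpu gets its demand or
    whatever is left of the budget, whichever is smaller.
    """
    cpu_w = min(cpu_demand_w, total_w)
    gpu_w = min(gpu_demand_w, total_w - cpu_w)
    return cpu_w, gpu_w


# Busy cpu: the gpu cannot get everything it wants.
print(split_power_budget(total_w=200, cpu_demand_w=60, gpu_demand_w=160))
# Lightly loaded cpu: the unused power is shifted to the gpu.
print(split_power_budget(total_w=200, cpu_demand_w=40, gpu_demand_w=160))
```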

Now then, on to the juicy part, comparing the cpus in both consoles: will the 100 MHz higher frequency make any difference in the real world? Well, yes and no. Let me explain. In benchmarks you would of course see a few points going to the cpu of the Xbox Series X, but in real world applications like games, a performance delta of under 3% is not going to make any difference whatsoever. So the cpu performance of both consoles is essentially the same.
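To get a feeling for how small that gap is, here is a quick worst-case estimate. It assumes, purely for illustration, that a frame is completely cpu bound and that performance scales linearly with clock speed, which overstates the real difference.

```python
def scaled_frame_time_ms(frame_time_ms, clock_ghz, other_clock_ghz):
    """Frame time on the other cpu, assuming linear scaling with clock."""
    return frame_time_ms * clock_ghz / other_clock_ghz


frame_at_3_6 = 1000 / 60  # a 60 fps frame on the 3.6 GHz cpu, in ms
frame_at_3_5 = scaled_frame_time_ms(frame_at_3_6, 3.6, 3.5)

print(f"{frame_at_3_6:.2f} ms vs {frame_at_3_5:.2f} ms")
print(f"{1000 / frame_at_3_5:.1f} fps instead of 60 fps")
```

Even in this artificial worst case the difference is well under a millisecond per frame.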

Then on to the next topic, ssds:

Solid state drives are truly something magical for the next generation, as they will simply allow much richer worlds without the hassle that game developers have to go through right now. Let me elaborate by going into detail on how a standard hdd works and why it is such a limiting factor in game creation right now.

The main problem with hdds is the way they work: an hdd is a mechanical drive, and as such there is a delay before it can read data from its disk. Imagine a disk spinning at a certain speed, let’s say 5400 times a minute. Each rotation represents one chance to read data from the specific area in which the required data is stored, so the drive may have to wait for the right spot to come around (rotational latency). On top of that, the head assembly needs time to reach the track holding the needed data; this is referred to as seek time.

This in itself is already a problem, because it is time in which the cpu and the rest of the components sit idle and wait for the data to arrive.
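As a rough illustration of how long that waiting can be, the average rotational delay of a spinning disk is half a rotation. The sketch below assumes a 5400 rpm drive, a common speed for laptop size hard drives, and ignores the head movement, which adds further milliseconds on top.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for the right sector: half of one full rotation."""
    ms_per_rotation = 60_000 / rpm
    return ms_per_rotation / 2


latency = avg_rotational_latency_ms(5400)  # assumed drive speed
frame_60fps = 1000 / 60

print(f"average rotational latency: {latency:.2f} ms")
print(f"that is {latency / frame_60fps:.0%} of a 60 fps frame, per access")
```

A few milliseconds sounds small, but a game that needs thousands of scattered reads pays this price again and again.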

Looking at this picture, we can understand that the head has to move to the area of the required data in order to read it. Depending on the location of the stored data, the transfer speed varies. Data on the outer rings can be read faster than data on the inner rings, and so on. So we now have another problem: variable read and write speeds. All of this amounts to a lot of problems:

- The hard drives inside the current gen consoles manage about 100 MB/s, and this speed is a very limiting factor. With the low transfer speed of hdds, assets need to be stored in ram to be accessible quickly enough. This eats an unnecessary amount of ram in the current gen consoles; inefficiency is the keyword here. It is also one of the reasons game worlds currently can’t get much bigger than they are while staying as rich as they are. In order to stream in more objects, we simply need faster read speeds from the drive so that we do not have to rely so much on the ram. Overstepping the speed limitations results in various problems, and one very well known effect is game object pop-in.


  Here is a video illustration of that effect. It can of course also be caused by the cpu, so this is just an example.

- The seek time. This introduces latency during which the other components must wait for the data to be accessed by the hard drive. Combined with the first problem, programmers constantly have to come up with new tricks to bypass this limiting factor inside the current consoles. The example given by Mark Cerny is very good and a real world solution to this problem that is in use today: storing an asset multiple times on the hard drive in various places (example given, a mailbox stored 400 times in different places). This is of course very inefficient in terms of storage utilization and takes away space needlessly. So it is not just a problem and limiter for game designers but a headache for programmers as well.

Now then, with the ssds installed in the next generation consoles we are bypassing all these problems, firstly through the very high raw speeds of the ssds:

PlayStation 5 = 5.5 GB/s (raw), 8-9 GB/s (compressed);

Xbox Series X = 2.4 GB/s (raw), 4.8 GB/s (compressed);

While the Xbox Series X is very fast, the PlayStation 5 has an insane amount of raw speed. This is very exciting, especially for designers of open world games, as they could pull off some very amazing things if they really utilize the full potential of the ps5 ssd. Secondly, ssds have practically no seek time, as the data is stored in flash memory and no mechanical part has to move to reach it, so the latency issue is also resolved.
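The raw numbers above can be turned into read times with simple division. This sketch uses the roughly 100 MB/s hdd figure and the raw ssd speeds quoted in this post, and it ignores seek time, compression and file system overhead, so treat the results as best-case values.

```python
DRIVES_GB_PER_S = {
    "current gen hdd": 0.1,          # about 100 MB/s
    "Xbox Series X ssd (raw)": 2.4,
    "PlayStation 5 ssd (raw)": 5.5,
}


def read_time_s(size_gb: float, speed_gb_per_s: float) -> float:
    """Best-case time to read size_gb at a sustained speed."""
    return size_gb / speed_gb_per_s


for name, speed in DRIVES_GB_PER_S.items():
    print(f"{name}: {read_time_s(1, speed):.2f} s per GB")
```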

But what does this mean for the real world?

Well, it isn’t just faster loading times; it also allows much bigger game worlds to be loaded while making them much richer. Game objects can be streamed in directly from the ssd, so the ram does not have to handle as much caching for the drive. This gives a more efficient use of the available ram, which is why a smaller jump in ram capacity is needed (more on that later).
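One way to picture streaming is to ask how much data could theoretically arrive within a single frame. The sketch below is a back-of-the-envelope calculation using the raw speeds from this post and 1 GB = 1000 MB; real engines will get less because of overhead and because not every frame streams at full speed.

```python
def mb_per_frame(speed_gb_per_s: float, fps: float) -> float:
    """Upper bound of data that can be read during one frame, in MB."""
    return speed_gb_per_s * 1000 / fps


for name, speed in [("hdd", 0.1), ("Xbox Series X", 2.4), ("PlayStation 5", 5.5)]:
    print(f"{name}: up to {mb_per_frame(speed, 60):.1f} MB per frame at 60 fps")
```

Going from under 2 MB to tens of MB per frame is what makes it realistic to fetch assets shortly before they are needed instead of parking them in ram.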

If you want to see what could be possible with the next generation ssds, Star Citizen is a very nice example to look at. This game heavily recommends an ssd to be used for gameplay and if you ever try playing it without one

this is pretty much what is awaiting you. But the ssd requirement at the same time enables amazing game worlds, which not only look amazing but are stunningly rich given their size.

Comparing the two next gen consoles then, the more than 2x higher ssd speed of the PlayStation 5 will initially not make a huge difference over the Xbox Series X in terms of capabilities. This is because developers first have to learn to make use of the crazy speeds both consoles provide. It will take some time before we reach the point where the Xbox Series X becomes the limiting factor. This is especially true for multiplatform games, which are always programmed for the weakest platform in any given component and then, if there are enough resources and time, optimized for the stronger hardware. First party Sony exclusives could very well show the true potential of the PlayStation ssd, but do not expect multiplatform games to do the same. Those will most likely just load faster and allow for other slight improvements over the Xbox Series X.

Then let's compare ssd storage to the pc, where ssds have already been in use for years. Why is there no real difference in games between a sata ssd and an nvme ssd despite the huge difference in speed? The answer is simple: it comes down to how data is being loaded. Engines right now do not make use of nvme ssd speeds and simply assume that you still use an hdd. Hopefully this will change very soon, even on pcs.

The ram:

PlayStation 5: 16 GB GDDR6 @ 448 GB/s

Xbox Series X: 16 GB GDDR6 (10 GB @ 560 GB/s, 6 GB @ 336 GB/s)

The ram this generation is frankly very unsurprising and not very interesting. I already wrote a little bit about it in another thread, but I will summarize what I wrote. The amount of ram is lacking in terms of a generational leap: only 2x the amount, while the last generation had a 16x jump in raw capacity. That’s not very exciting, yet it is more than enough. In the ssd section I wrote that ssds will enable game assets to be read directly from the drive, so they do not need to be cached as much in ram anymore. This will lead to the ram being utilized much better. The bandwidth itself is also more than enough on both consoles, and I do not believe that the ram will be the limiting factor in any way.
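For reference, the capacity jumps mentioned above can be checked quickly. The PlayStation figures used here are the commonly quoted totals (512 MB for the PS3 and 8 GB for the PS4) and are not taken from this post, so treat them as an assumption.

```python
RAM_MB = {
    "PlayStation 3": 512,      # assumed: 256 MB system + 256 MB video memory
    "PlayStation 4": 8 * 1024,
    "PlayStation 5": 16 * 1024,
}


def capacity_jump(old_mb: int, new_mb: int) -> float:
    """How many times more ram the newer console has."""
    return new_mb / old_mb


print(capacity_jump(RAM_MB["PlayStation 3"], RAM_MB["PlayStation 4"]))  # 16.0
print(capacity_jump(RAM_MB["PlayStation 4"], RAM_MB["PlayStation 5"]))  # 2.0
```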

The GPU:

The teraflop metric has ruled the gpu world for a long time, and any slight uplift in teraflops is expected to make a world of difference, but is this really true?

Well the answer is probably going to upset a lot of people, but I will explain why it is not the unbelievable difference maker that most people think it is. Now before we begin let us look at both gpus:

Xbox Series X: Custom RDNA 2 gpu, 52 CUs @ 1.825 GHz resulting in 12.1 teraflops.

PlayStation 5: Custom RDNA 2 gpu, 36 CUs @ 2.23 GHz resulting in 10.28 teraflops (variable frequency -> same explanation as the cpu).
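The teraflop figures follow directly from the CU count and the clock speed. The sketch below uses the usual peak formula for AMD gpus of this family, with 64 shader units per CU and 2 floating point operations per shader per cycle (one fused multiply-add). It gives a theoretical peak, not real world game performance.

```python
def tflops(compute_units: int, clock_ghz: float,
           shaders_per_cu: int = 64, flops_per_cycle: int = 2) -> float:
    """Theoretical peak FP32 throughput in teraflops."""
    gflops = compute_units * shaders_per_cu * flops_per_cycle * clock_ghz
    return gflops / 1000


print(f"Xbox Series X: {tflops(52, 1.825):.2f} teraflops")
print(f"PlayStation 5: {tflops(36, 2.23):.2f} teraflops")
```

This also shows the two routes to a high number: the Series X gets there with more CUs at a lower clock, the PlayStation 5 with fewer CUs at a higher clock.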

On paper the Xbox Series X has the clear advantage with a 17% performance delta between the two at peak PlayStation 5 performance. This is quite a nice lead by Microsoft, but historically speaking it is one of the smallest we have ever had between the two console makers.

Going back to the PlayStation 4 and Xbox One, the ps4 has a 1.84 teraflops gpu, while the Xbox One has a 1.31 teraflops gpu. That’s a difference of about 40% in power, and it is the same story with the Xbox One X and the PlayStation 4 Pro: Xbox One X = 6 teraflops vs ps4 pro = 4.2 teraflops, a performance difference of roughly 43% between the two. Those are some very hefty performance differences, but did they really matter so much that either console was a deal breaker? In my opinion not really. The only effect was that the more performant console had the better looking multiplatform games, which simply ran a bit better, but it was still the very same game running. What game developers usually do with this much of a performance delta is simply raise the resolution of the game and / or turn up some of the graphics settings.
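The percentages in this comparison are easy to reproduce. This short sketch uses the commonly quoted 1.84 and 1.31 teraflops for the ps4 and Xbox One, plus the other figures from this post.

```python
def percent_lead(stronger_tflops: float, weaker_tflops: float) -> float:
    """How many percent more peak teraflops the stronger gpu has."""
    return (stronger_tflops / weaker_tflops - 1) * 100


PAIRS = {
    "PS4 vs Xbox One": (1.84, 1.31),
    "Xbox One X vs PS4 Pro": (6.0, 4.2),
    "Xbox Series X vs PS5": (12.1, 10.28),
}

for name, (stronger, weaker) in PAIRS.items():
    print(f"{name}: {percent_lead(stronger, weaker):.1f}% lead")
```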

Going back to this generation then, with a 17% performance delta, can we really expect that much of a difference between the two consoles, seeing as it is probably the smallest performance difference in any console generation yet? In my opinion, no. Multiplatform games will simply look slightly better or run better on Xbox Series X, and load faster on PlayStation 5. But a slight performance difference should in no way, shape or form rule out either of the two consoles. Simply pick the console to your liking and you will very likely be pleased with your purchase.

Returning to a more technical standpoint and less of a comparative one, RDNA 2 is very exciting, as both consoles have very powerful hardware indeed. These gpus should allow developers to push graphics further beyond what we have right now. No matter if you are playing on console or on pc, remember that games are always made with the weakest hardware in mind, so you can expect a lot once games are made from the ground up for the next generation hardware. In combination with technologies like real time ray tracing, games will indeed become much more lifelike. I find myself coming back to Star Citizen, but I think it is a good representation of what we can expect next generation games to look like as a standard, if you will.

I will not be talking about the audio chips yet, as I find I have too little information to go into that topic right now, but I hope to come back to it soon.

Sadly, I could not make a new thread and had to post this here, so excuse the long post. If anyone wants to make this into a new thread, be my guest.

I really hope you enjoyed this little journey into the next generation.

Thanks for reading.
