You don't need to be a game developer or programmer, which I assume none of us are, to ask this: can a game change which data is stored in RAM? Or is data that's already in RAM stuck there forever, with no fast way to swap old data out for new?

The simple answer HAS to be yes: you can swap data out and change where it sits in RAM, and fairly quickly at that! Why are people assuming that the data which actually needs to be in the fast, GPU-optimal memory can't quickly be moved there and processed at the full 560GB/s of bandwidth? It's like people expect developers to sit on their hands and not do what's needed, when it's needed, even though all the capability to do it, and do it quickly, is right at their fingertips. The asymmetrical memory setup is a non-issue for maintaining full bandwidth performance in Xbox Series X games.
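To put a rough number on "fairly quickly", here is a back-of-envelope sketch in Python. It assumes the copy is limited only by the slower pool's published 336GB/s figure (that number comes from Microsoft's spec sheet, not from this post), and the 0.5GB chunk size is purely hypothetical. Real copies will be slower because of bus contention and overhead, so treat the result as a best-case floor, not a measurement.

```python
# Best-case estimate of how long it takes to move data out of the slower pool.
# ASSUMPTION: throughput is capped only by the slower pool's published 336 GB/s;
# contention, latency and driver overhead are ignored.
SLOW_POOL_GBPS = 336.0
FRAME_MS_60FPS = 1000.0 / 60.0  # time budget of one frame at 60 fps


def best_case_copy_ms(size_gb, bandwidth_gbps=SLOW_POOL_GBPS):
    """Return the idealized copy time in milliseconds."""
    return size_gb / bandwidth_gbps * 1000.0


# Hypothetical example: relocating a 0.5 GB chunk of data.
copy_ms = best_case_copy_ms(0.5)
print(f"best case: {copy_ms:.2f} ms of a {FRAME_MS_60FPS:.2f} ms frame")
```

Even if the real cost is several times this ideal figure, it gives a feel for why moving data between the two pools is not some slow, one-way operation.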

Next question for those of us who aren't programmers or game developers: is 100% of what's in RAM on consoles tied to textures, models, etc., or does some of that RAM also need to be reserved for other things crucial to making your game work, such as script data, executable data, stack data, audio data, or important CPU-related functions?

A videogame isn't just static graphics and models. There are core fundamentals that need memory too: animations, mission design and enemy encounters, the information that drives how those enemies behave and react to what the player is doing, specific scripted events, how weapons, items, or attacks work (how many bullets, rate of fire, damage done), how the player and their weapons interact with the world and the objects around them, an inventory system, stat or upgrade systems for the games that use them, how vehicles or NPCs can be interacted with or controlled, character customization, craftable items. I could go on. These things do require RAM, but may not require the fastest possible access to it. For example, maybe the player has a weapon or power that confuses the enemies or temporarily blinds them, giving the player a window of opportunity to gain a much-needed advantage. That, too, as simple as it sounds, needs RAM. It just may not need as much of it as textures and models do, or need it at the same high speed. But a game is made up of all these basic functions, rules, and mechanics, and they require memory just the same.
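The split described above can be sketched as a simple placement pass. This is only an illustration, not how any real engine or the Xbox SDK does it: the pool sizes are Microsoft's published figures (10GB of GPU-optimal memory, plus 3.5GB of the slower memory left for games after the OS reservation), while every category name and size in `GAME_DATA` is a made-up assumption.

```python
from dataclasses import dataclass

# Published Xbox Series X pool sizes available to games (in GB).
FAST_POOL_GB = 10.0      # "GPU optimal" memory, 560 GB/s
STANDARD_POOL_GB = 3.5   # slower memory left after the OS reservation


@dataclass
class Allocation:
    name: str
    size_gb: float
    bandwidth_hungry: bool  # True if the GPU hammers this data every frame


def place(allocations):
    """Greedy placement: bandwidth-hungry data gets first pick of the fast pool."""
    free = {"fast": FAST_POOL_GB, "standard": STANDARD_POOL_GB}
    placement = {}
    # Stable sort puts bandwidth-hungry allocations first.
    for a in sorted(allocations, key=lambda a: not a.bandwidth_hungry):
        order = ["fast", "standard"] if a.bandwidth_hungry else ["standard", "fast"]
        for pool in order:
            if free[pool] >= a.size_gb:
                free[pool] -= a.size_gb
                placement[a.name] = pool
                break
        else:
            raise MemoryError(f"no room for {a.name}")
    return placement, free


# HYPOTHETICAL budget, for illustration only.
GAME_DATA = [
    Allocation("textures", 6.0, True),
    Allocation("models", 2.0, True),
    Allocation("render_targets", 1.5, True),
    Allocation("animation", 1.0, False),
    Allocation("audio", 0.75, False),
    Allocation("scripts_and_ai", 0.5, False),
    Allocation("executable_and_stack", 0.25, False),
]

placement, free = place(GAME_DATA)
for name, pool in placement.items():
    print(f"{name:22s} -> {pool}")
print("free:", free)
```

With these made-up numbers, everything the GPU leans on lands in the fast pool and the gameplay-side data fits in the standard pool with room to spare, which is the whole point of the argument.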

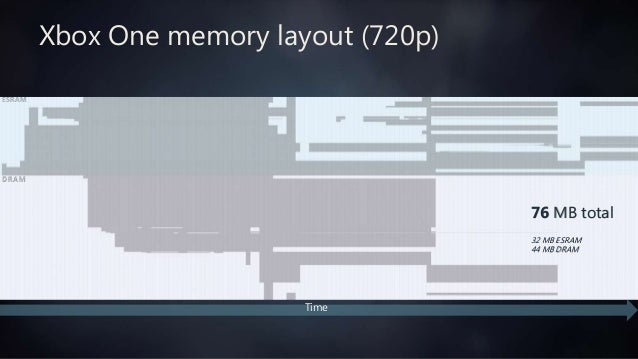

When you have a basic understanding of all this, you also come to understand that concerns about memory amount and memory performance on Xbox Series X are largely overblown. You can expect the parts of any game that benefit from 560GB/s of memory bandwidth to actually get it, and the parts that don't need it as much to get the slower pool. Then factor in custom solutions such as Sampler Feedback Streaming, which is tailor-made for using the available RAM and SSD performance more efficiently. Microsoft says Sampler Feedback Streaming contributes to an effective 2-3x multiplier on the amount of physical memory, and the same on I/O performance.
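For a sense of what a 2-3x multiplier would mean in practice, here is the arithmetic as a tiny Python sketch. The 2x and 3x factors are Microsoft's claim as quoted above, and the 2.4GB/s raw SSD throughput is the published spec. The 6GB texture budget is a hypothetical figure, and applying the multiplier to it directly is my own simplifying assumption about how the claim should be read.

```python
# Microsoft's claimed Sampler Feedback Streaming multiplier range.
SFS_LOW, SFS_HIGH = 2.0, 3.0


def effective_range(base, low=SFS_LOW, high=SFS_HIGH):
    """Scale a base figure by the claimed multiplier range."""
    return base * low, base * high


# HYPOTHETICAL: a game that sets aside 6 GB of RAM for streamed textures.
texture_budget_gb = 6.0
mem_low, mem_high = effective_range(texture_budget_gb)

# Published raw SSD throughput for Xbox Series X, in GB/s.
raw_io_gbps = 2.4
io_low, io_high = effective_range(raw_io_gbps)

print(f"textures: behaves like {mem_low:.1f} to {mem_high:.1f} GB")
print(f"I/O:      behaves like {io_low:.1f} to {io_high:.1f} GB/s")
```

The idea behind the multiplier is that only the parts of a texture that are actually sampled get loaded, so the same physical RAM and the same SSD reads go further. How close a given game gets to that range will depend on its content.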