Sampler Feedback isn't just a software implementation API, as you put it. It requires explicit hardware support to be used. That's like calling Mesh Shaders just a software implementation API without understanding that the hardware needs to be there to support it. The same is true for VRS: there needs to be hardware support, and it has to reach a specific tier to match the quality of VRS that even Turing GPUs support. Up to this point there's zero evidence that PS5 supports Sampler Feedback. Being RDNA 2 doesn't automatically guarantee that any particular feature is present, as Cerny confirmed in his talk.

To date, the only features confirmed for the PS5 GPU are ray tracing, Primitive Shaders (a feature from Vega that was finally made active in RDNA 1), and the cache scrubbers Cerny discussed. He didn't touch on anything else in his talk, not even VRS, and this was billed as a PS5 architecture deep dive. He touched on every piece of custom hardware crucial to the SSD, dug down into primitive shaders on the GPU, covered the cache scrubbers, and went into amazing detail on the audio chip. I would think that if other high-profile features were there, he would have talked about them. Cerny doesn't have a reputation for leaving things out when he decides to go public with specific technical details, like he has done with DF. I previously doubted hardware ray tracing because the communication made me suspicious as to whether it was truly there, but that's been put to bed by the Road to PS5 presentation. Unlike ray tracing, where I thought the communication seemed off, there has been zero communication on VRS, Sampler Feedback, or texture space shading (a feature of Sampler Feedback).

Sampler Feedback is far, far more than just texture space shading. In order for texture space shading to even work you require sampler feedback to help provide the necessary information.

And here is where texture space shading comes in, and why support for sampler feedback is necessary to even be able to use it:

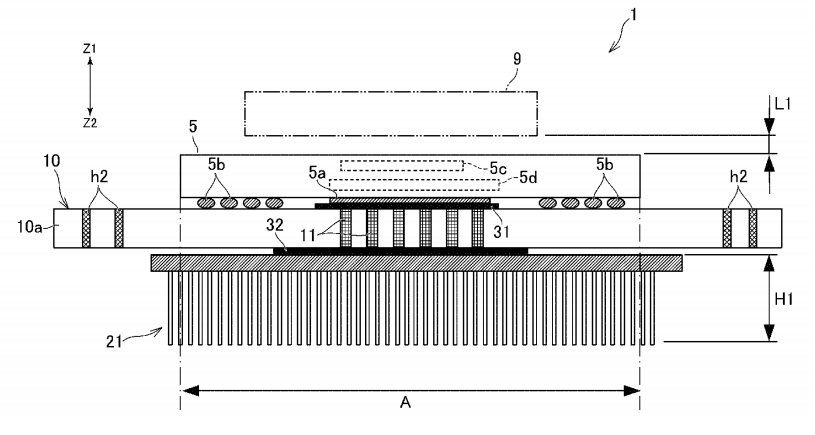

It is time for DirectX to evolve once again. From the team that has brought PC and Console gamers the latest in graphics innovation for nearly 25 years, we are beyond pleased to bring gamers DirectX 12 Ultimate, the culmination of the best graphics technology we’ve ever introduced in an...

devblogs.microsoft.com

So as you can see, texture space shading is dependent on sampler feedback, and it must be supported in hardware. It isn't just software, because if it were, Xbox One X and PS4 Pro could do it. Are there software methods for trying to emulate what sampler feedback does? Absolutely, but they are simply not as effective, and the performance benefits are not as good without hardware support.
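
To give a rough idea of the bookkeeping a software fallback has to do, here is a minimal Python sketch. It is not how any console, driver, or the DirectX runtime actually implements this; the function names `record_min_mip` and `tiles_to_shade`, the square tile layout, and the sample format are all made up for illustration. It records, per texture tile, the most detailed mip level that was actually sampled, which is the kind of information a texture space shading or streaming pass would want to have.

```python
def record_min_mip(samples, tex_size, tile_size, mip_count):
    """Hypothetical software stand-in for a min-mip feedback map.

    samples: iterable of (u, v, mip) with u and v in [0, 1).
    Returns a 2D list where each entry is the lowest (most detailed)
    mip level sampled in that tile, or None if the tile was never touched.
    """
    tiles = tex_size // tile_size
    feedback = [[None] * tiles for _ in range(tiles)]
    for u, v, mip in samples:
        # Map the texture coordinate to a tile index, clamped to the grid.
        tx = min(int(u * tiles), tiles - 1)
        ty = min(int(v * tiles), tiles - 1)
        # Clamp the requested mip to the levels the texture actually has.
        mip = max(0, min(mip, mip_count - 1))
        current = feedback[ty][tx]
        if current is None or mip < current:
            feedback[ty][tx] = mip
    return feedback


def tiles_to_shade(feedback):
    """List (tile_x, tile_y, mip) for every tile that was actually sampled."""
    return [
        (tx, ty, mip)
        for ty, row in enumerate(feedback)
        for tx, mip in enumerate(row)
        if mip is not None
    ]


if __name__ == "__main__":
    samples = [(0.1, 0.1, 2), (0.1, 0.2, 0), (0.9, 0.9, 3)]
    fb = record_min_mip(samples, tex_size=1024, tile_size=256, mip_count=4)
    print(tiles_to_shade(fb))
```

In a software-only approach, something like this has to run as extra work for every texture sample, and that per-sample overhead is what dedicated hardware support is meant to avoid.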

Based on how I've seen AMD handle Primitive Shaders and Mesh Shaders, I'm honestly not sure they are exactly the same thing. AMD went from calling the feature Primitive Shaders in Vega and RDNA 1 to now marketing Mesh Shaders as a specific RDNA 2 feature. It's entirely possible that Mesh Shaders go a bit further than Primitive Shaders, and that's why they're marketed as an RDNA 2 feature. Or Mesh Shaders is just the DX12 Ultimate name for Primitive Shaders, since at a basic level they do sound very similar. But what's always left out is the level of detail and extra capability we can read about in Mesh Shaders. Nvidia has called it Mesh Shaders ever since Turing. AnandTech, for example, confirmed that primitive shaders gave zero performance benefit in Vega, and it wasn't until RDNA that AMD managed to fix some things and enable them, but they may still not be the same as Mesh Shaders. For the sake of argument, though, because it's hard to tell, I'll assume they're the same.
