Well, an integer used to store a single "point" (a vertex index) would be 4 bytes, so it really depends. Usually people talk in triangles (12 bytes each, i.e. three 4-byte indices), but IIRC it's actually a combination of triangles and vertices (the points where triangles meet in space, each of which takes 12 bytes itself). So it's a mix.
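To make those byte counts concrete, here's a quick sketch using Python's `struct` module. It assumes a plain indexed triangle mesh where each triangle is three 32-bit unsigned vertex indices and each vertex is an (x, y, z) position stored as three 32-bit floats. Normals, UVs and other per-vertex attributes are ignored, so treat this as a lower bound rather than how any particular renderer actually lays things out.

```python
import struct

# Assumption: indexed triangle mesh, positions only.
# "<" = little-endian, standard sizes, no padding between fields.
INDEX_FORMAT = "<I"      # one 32-bit unsigned vertex index
TRIANGLE_FORMAT = "<3I"  # a triangle = three vertex indices
VERTEX_FORMAT = "<3f"    # a vertex = x, y, z as 32-bit floats

index_bytes = struct.calcsize(INDEX_FORMAT)
triangle_bytes = struct.calcsize(TRIANGLE_FORMAT)
vertex_bytes = struct.calcsize(VERTEX_FORMAT)

print(index_bytes, triangle_bytes, vertex_bytes)  # 4 12 12
```

So both a triangle and a vertex come out to 12 bytes here, just for different reasons: three integers vs. three floats.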

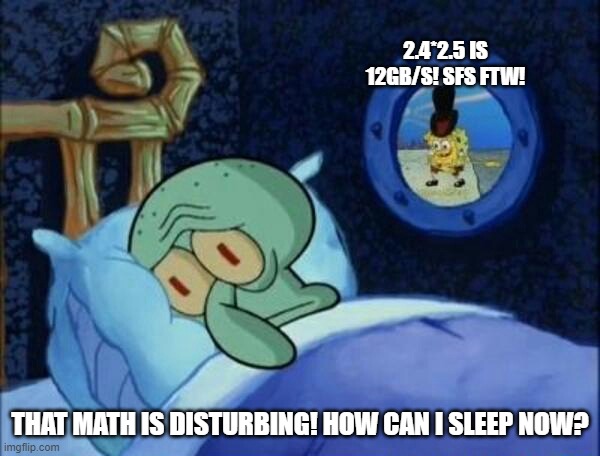

If nothing but triangles were stored, 100 billion of them would be 1.2 TB (100 billion × 12 bytes), if I'm doing the conversions right.

But then all the vertices, the points where the triangles meet in space, have to be stored too... and of course there's also compression.
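Here's a rough back-of-the-envelope version of that, as a sketch. `mesh_bytes` is just a hypothetical helper, the 12-byte sizes come from the assumptions above, and compression is ignored. For the vertex count I'm assuming a closed triangle mesh, where Euler's formula works out to roughly half as many vertices as triangles (V ≈ F/2); real scenes with split vertices at UV seams or hard edges will have more.

```python
# Hypothetical helper: uncompressed size of an indexed triangle mesh.
def mesh_bytes(num_triangles, num_vertices,
               bytes_per_triangle=12, bytes_per_vertex=12):
    return num_triangles * bytes_per_triangle + num_vertices * bytes_per_vertex

TB = 10**12                # decimal terabyte
triangles = 100 * 10**9    # 100 billion triangles

# Triangles only, no vertex data at all (the 1.2 TB figure).
tri_only = mesh_bytes(triangles, 0)

# Assumption: closed mesh, so vertices ~= triangles / 2.
with_vertices = mesh_bytes(triangles, triangles // 2)

print(tri_only / TB, with_vertices / TB)  # 1.2 1.8
```

So even counting vertex positions it only grows to about 1.8 TB under these assumptions.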

edit: Here's a convo where some rendering folks are talking about 2 million polygons per GB.

> As a basis, each polygon (quadrangle or triangle - not considering n-gons) has 4 LONG indices into the vertex array - triangles simply have duplicate indices for the last two. Each vertex is only listed once in the vector array (ignoring split surfaces and other things) and contains three FLOAT...

Source: forums.cgsociety.org

I think I'm wrong about how a triangle is stored, though. And I think I'm also way undershooting the math.

2 million per GB would mean 500 GB per billion polygons, or 50 TB for 100 billion.
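Working that rule of thumb backwards as a sketch (decimal GB/TB assumed, and the 2 million figure is just the number from the linked thread): it implies about 500 bytes per polygon, way more than the 12 to 24 bytes of bare indices and positions, which suggests those files carry a lot more per polygon than just geometry.

```python
GB = 10**9                    # decimal gigabyte
polygons_per_gb = 2_000_000   # rule of thumb from the linked thread

bytes_per_polygon = GB // polygons_per_gb                   # implied size of one polygon
gb_per_billion = 10**9 / polygons_per_gb                    # GB for 1 billion polygons
tb_per_100_billion = 100 * 10**9 / polygons_per_gb / 1000   # TB for 100 billion polygons

print(bytes_per_polygon, gb_per_billion, tb_per_100_billion)  # 500 500.0 50.0
```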