Overall, I think this is a pretty big win for AMD/Radeon compared to their past performance, and a lot of people in this thread seem to be missing that.

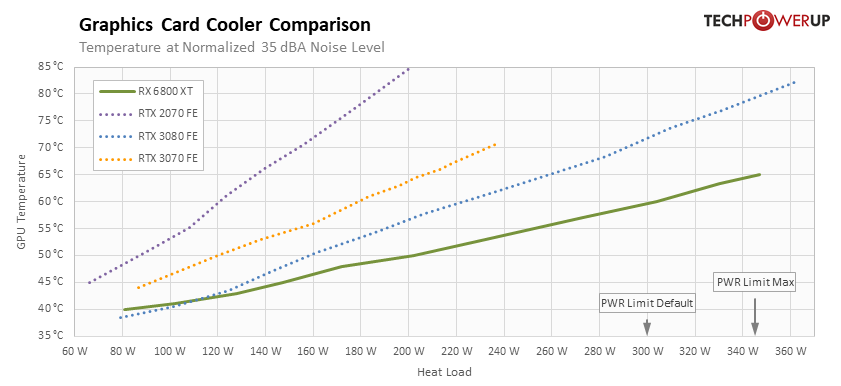

Reference Cooler:

AMD was notorious for releasing terrible "blower" style reference coolers that ran loud with high temps, making only AIB models viable buys.

This time around their cooler seems to be pretty damn good, essentially matching the expensive custom 3080 reference cooler in heat/noise. That is a pretty huge improvement from where they were in the past. Granted, not everyone cares about reference models and AIBs will take the lion's share of sales, but this is still a nice win for AMD, showing that they are serious about competing and are willing to up their game and improve on their obvious weak spots.

Power Draw:

For the last few generations of GPUs, the Radeon family were consistently lambasted for higher power draw and hot-running, inefficient designs. This time around we see that AMD have really focused on improving here and are actually running mostly below their specified 300W. They draw less power across the board compared to Nvidia, which is a huge turnaround from the previous generation's landscape. Another great win for AMD here.

Efficiency:

This goes hand in hand with power draw, but AMD has massively increased their efficiency from RDNA1 to RDNA2 while staying on the same 7nm node. You have to remember that RDNA1 was already a massive efficiency improvement over Vega. This is pretty impressive stuff and allows these GPUs to run at very high clocks while reducing power draw. They seem to have a really great engineering team behind these RDNA2 cards.

Rasterization Performance:

For the last 5-7 years of GPU releases, AMD was mostly behind in performance at the highest end of the stack. Sometimes by a lot, sometimes by a little, but almost always by a noticeable amount.

Prior to the RDNA2 reveal we had many rumours and tons of "concerned" Nvidia fans stating, almost as an outright fact, that RDNA2 GPUs would at the very top end reach 2080ti levels of performance and might compete with a 3070. We were told that in an absolute best case scenario they might scrape out 2080ti + 10-15% performance.

AMD could never compete with the powerhouse performance of Nvidia's latest GPUs, you see. There were "power king" meme threads; the way it is meant to be played, after all. As some later rumours started becoming clearer and it seemed likely that AMD might compete with the 3080, we were told "Yeah but they will never touch the 3090! lolol". Along comes the 6900XT, competing for $500 less. And here we are: Radeon group has seemingly done the impossible and is competing at the high end across the stack, even with the hail mary release of the 3090. This is a pretty incredible improvement for Radeon group and cannot be overstated.

Release Cadence:

Previous Radeon GPUs tended to arrive much later than their Nvidia counterparts, oftentimes more than a year behind, and often with worse performance or performance close to that of a GPU that was soon to be replaced by a newer Nvidia model. Here we have essentially release parity, with only a month or two between releases. AMD have finally caught up to Nvidia here, which again is a huge improvement.

Current rumours have AMD set to potentially release RDNA3 this time next year, which would be a huge improvement and an upset to the two-year development/release cycle we normally see for GPUs. We will have to wait and see how they progress, but they already went from RDNA1 to RDNA2 in a little over a year.

------

The above are some pretty fantastic improvements. In addition, AMD cards were previously missing RT hardware/functionality altogether, and they now have RT functionality performing at around 2080ti levels or higher depending on the title. Granted, that is not as performant as Nvidia's 2nd generation of Ray Tracing with Ampere, but so far almost all of the RT enabled games were optimized/tested against Nvidia cards, most of them being sponsored by Nvidia to the point that Nvidia themselves coded most of the RTX functionality for some titles.

When you look at Dirt 5, which is the first RT enabled game optimized for AMD's solution, it seems to perform pretty well on AMD cards. It will be interesting to revisit the RT landscape a year from now and see how these cards perform on (non-Nvidia-sponsored) console ports and on AMD sponsored titles.

Nvidia will still have a clear win with RT overall for this generation. If you need the best RT performance you should definitely go with Nvidia. However, I find it interesting that 2080ti levels of RT performance (and higher in some games) is suddenly considered "unplayable trash", but that is for another discussion.

Features:

Much like Nvidia were first to market with MS DirectStorage and rebranded it "RTX IO", and how Nvidia were first to market with Ray Tracing and rebranded DXR as "RTX", AMD here are first to market with resizable BAR support in Windows, which is part of the PCIe spec. Like Nvidia, they have rebranded this feature, calling it SAM (Smart Access Memory).

SAM seems like a cool feature that can offer in a lot of cases 3-5% performance increases for essentially free. There are some games that don't benefit from this, and some outliers that get up to 11% increase such as Forza. Overall it seems like a cool tech that AMD are first to bring to the table.
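To put those percentages in perspective, here is a rough sketch of what they mean in frame rate terms. The 100 fps baseline is a made-up number purely for illustration, not a benchmark result; only the 3%, 5% and 11% uplift figures come from the paragraph above.

```python
# Hypothetical baseline frame rate, chosen only to make the arithmetic easy to read.
BASELINE_FPS = 100.0

# Uplift figures quoted above: 3-5% in a lot of cases, up to 11% in outliers like Forza.
UPLIFTS = {
    "typical low": 0.03,
    "typical high": 0.05,
    "outlier (Forza)": 0.11,
}


def fps_with_sam(baseline_fps: float, uplift: float) -> float:
    """Return the frame rate after applying a fractional uplift."""
    return baseline_fps * (1.0 + uplift)


sam_results = {
    label: round(fps_with_sam(BASELINE_FPS, uplift), 1)
    for label, uplift in UPLIFTS.items()
}

for label, fps in sam_results.items():
    print(f"{label}: {BASELINE_FPS:.0f} fps -> {fps:.1f} fps")
```

So at a 100 fps starting point you are looking at a handful of extra frames in most games, which is nothing dramatic, but it costs nothing either.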

Nvidia are working on adding their own resizable BAR support but we have no idea how long it will take them to release it or what Mobo/CPU combos will be certified to support it.

Regarding DLSS, this is definitely somewhere that AMD are behind. AMD are currently working on their FidelityFX Super Resolution technology to compete with DLSS. We don't know if this will be an algorithmic approach or if it will leverage ML in some way, or possibly a combination. AMD have mentioned their solution will work differently to DLSS so we can only guess for now until we have more information.

What we do know is that it will be a cross platform, cross vendor feature that should also work on Nvidia GPUs. If they go with an algorithmic approach then it may end up working on every game, or a lot of them, as an on/off toggle in the drivers. Of course this is all speculation until we have more concrete info to go on. For now, all we can say is that they are currently developing it and it should hopefully release by end of Q1 2021.

Value Proposition:

The 6800XT offers roughly equal rasterization performance with a 3080. It does this with less power draw and still runs cool and quiet. It has 16GB of VRAM vs 10GB VRAM on the 3080.

It offers RT, but it is still behind the 3080 there. I know this is a big issue for a lot of people, but believe it or not there are actually tons of people out there who don't care about RT at all right now and won't for the foreseeable future. There are also people who view it as a "nice to have" but don't believe the benefits are currently worth the trade off until hardware improves to a much higher level and more games are designed heavily around RT.

Personally I think RT is the future. In 10 years time almost all games will support RT, and hardware will be fast enough, with engines designed around it, that performance will be great. That time is not quite right now though, at least for me. So I fall into the "nice to have" group at the moment.

AIB models seem to offer some overclocking headroom.

It offers SAM, which is a nice bonus, but it is currently missing a DLSS competitor. It is a little disappointing that Super Resolution was not ready for launch, but they are currently working on it so hopefully the wait should not be too long.

Would it be more competitive if it was priced at $600 rather than $650? Definitely, and I can understand the argument that for $50 more you can get better RT, DLSS and CUDA. The 3080 is a fantastic card after all. Of course, the chances of actually getting one for anywhere near MSRP, even after shortages are sorted, are another story altogether. Especially for AIB models.
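For anyone who wants to see the pricing argument as plain numbers, here is a quick sketch. It assumes the 3080 sits at $700, which is inferred from the "$50 more" comparison above, and it only looks at price versus VRAM, so it says nothing about RT, DLSS or CUDA. The $600 entry is the hypothetical price from the question above, not a real listing.

```python
# Prices and VRAM sizes as discussed above. The 3080 price is an assumption
# inferred from "for $50 more"; the $600 entry is purely hypothetical.
cards = {
    "6800XT": {"price_usd": 650, "vram_gb": 16},
    "6800XT at $600 (hypothetical)": {"price_usd": 600, "vram_gb": 16},
    "3080": {"price_usd": 700, "vram_gb": 10},
}


def usd_per_gb(card: dict) -> float:
    """Return the price in dollars per gigabyte of VRAM."""
    return card["price_usd"] / card["vram_gb"]


cost_per_gb = {name: usd_per_gb(spec) for name, spec in cards.items()}

for name, cost in cost_per_gb.items():
    print(f"{name}: ${cost:.2f} per GB of VRAM")

# Price gap between the two cards at the assumed prices.
price_gap = cards["3080"]["price_usd"] - cards["6800XT"]["price_usd"]
print(f"3080 costs ${price_gap} more than the 6800XT")
```

VRAM per dollar is obviously only one way to slice it, and street prices will make these numbers look very different from MSRP anyway.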

The reality is that AMD and pretty much all manufacturers are wafer constrained on the supply side. AMD CPUs are very high profit margin products made on the same 7nm wafers as the GPUs. The consoles are also produced in huge quantities, and AMD is on TSMC 7nm, which is not cheap.

The simple reality is that right now AMD would sell out the entirety of their stock regardless of the price. They have no need to lower the price currently as they would simply be losing guaranteed money. AMD are also looking to position themselves out of the "budget" brand market.