
So with Nvidia buying ARM, could PS6 or Xbox actually go Nvidia?

- Thread starter Valued Gamer


llien

Member

> it does seem like ARM is the future over x86

You are trusting (hidden) Apple ads too much.

4800U is a 15W Zen chip, with 9.8 billion transistors on 7nm.

Apple's M1 is on 5nm, uses 16 billion transistors, is 15-25W and... while impressive for a non-x86 chip, it's nothing that would write off x86 either.

Omega Supreme Holopsicon

Member

> You are trusting (hidden) Apple ads too much.
>
> 4800U is a 15W Zen chip, with 9.8 billion transistors on 7nm.
>
> Apple's M1 is on 5nm, uses 16 billion transistors, is 15-25W and... while impressive for a non-x86 chip, it's nothing that would write off x86 either.

Wait until desktops with Apple's M-based chips arrive.

Performance per watt is higher on the ARM-based M1.

Intel previously tried to compete with ARM with its Atom lineup, even while it had a process node advantage, and it got smoked badly.


Rikkori

Member

The M1 is weak outside of cherry-picked scenarios. Has anyone actually seen gaming performance on these things? After all the praise, I was shocked to see how poorly it actually does; in fact, it's no better than whatever APUs are out now (which are themselves quite far behind actual dedicated systems in tech):

VS a 4 core APU from 2 years ago!!

All I have to say to the apple&arm fanboys:


DeuceGamer

Member

> You are trusting (hidden) Apple ads too much.
>
> 4800U is a 15W Zen chip, with 9.8 billion transistors on 7nm.
>
> Apple's M1 is on 5nm, uses 16 billion transistors, is 15-25W and... while impressive for a non-x86 chip, it's nothing that would write off x86 either.

It’s not just Apple, though they are obviously among the ARM leaders currently.

ARM is more efficient and offers more performance at lower power consumption. It may not happen overnight, but it does seem that’s the path we are on.

llien

Member

> Performance per watt is higher on the ARM-based M1.

Even though it's 5nm vs 7nm, with 1.6 times more transistors, and running at lower clocks.

It is only "higher" in select applications.

And it is particularly funny to talk about, given that Lisa Su just announced the Ryzen 5000 series.

llien

Member

> ARM is more efficient and offers more performance at lower power consumption.

We have 8-core (!!!) no-compromise x86-64 notebook CPUs with 17+ hours of battery life.

This "power consumption" thing is a rebrand of the "RISCs are faster" myth.

llien

Member

> Intel previously tried to compete with ARM with its Atom lineup, even while it had a process node advantage, and it got smoked badly.

Citation needed.

Intel's financials would barely change even if they grabbed 100% of the (dirt cheap) ARM market.

Omega Supreme Holopsicon

Member

> Even though it's 5nm vs 7nm, with 1.6 times more transistors, and running at lower clocks.
>
> It is only "higher" in select applications.
>
> And it is particularly funny to talk about, given that Lisa Su just announced the Ryzen 5000 series.

You know most of that is ASICs, which are not involved in most applications.

> We have 8-core (!!!) no-compromise x86-64 notebook CPUs with 17+ hours of battery life.
>
> This "power consumption" thing is a rebrand of the "RISCs are faster" myth.

You know battery size is a thing. The fact that ARM is able to compete with and outcompete x86 with only 4 real cores is quite outstanding. Let's see what happens when 32-core ARM desktop chips hit the scene.

Edit:

"Most of the portable Macs we've tested recently can manage more than 18 hours away from an outlet, but the newest releases last even longer. The new MacBook Air and 13-inch MacBook Pro, running on Apple's own M1 processor for the first time, both lasted for over 20 hours on our video rundown test (the M1 MacBook Air ran for an incredible 29 hours)." - PCMag

66WHrs, 4S1P, 4-cell Li-ion - ASUS ExpertBook spec (the only Intel laptop with the same battery life as the M1 MacBook)

Smaller battery, yet the same battery life.

- Built-in 49.9‑watt‑hour lithium‑polymer battery - Apple spec for the M1 MacBook Air
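The battery figures above can be turned into an average power draw with P = E / t. A minimal Python sketch, using the 49.9 Wh and 66 Wh capacities and the 29-hour PCMag result quoted above; the shared 20-hour runtime for both laptops is an assumption for illustration, since the post only says the ASUS lasts about as long as the M1 MacBook:

```python
def average_power_w(battery_wh: float, runtime_h: float) -> float:
    """Average power draw in watts implied by fully draining battery_wh over runtime_h hours."""
    if runtime_h <= 0:
        raise ValueError("runtime_h must be positive")
    return battery_wh / runtime_h


M1_AIR_WH = 49.9  # Apple spec quoted above
ASUS_WH = 66.0    # ASUS ExpertBook spec quoted above

# Measured figure from the PCMag quote: the M1 MacBook Air ran 29 h.
print(f"M1 Air over 29 h: {average_power_w(M1_AIR_WH, 29):.2f} W")  # 1.72 W

# ASSUMPTION for illustration: both laptops run for the same 20 h.
ASSUMED_RUNTIME_H = 20
m1_w = average_power_w(M1_AIR_WH, ASSUMED_RUNTIME_H)
asus_w = average_power_w(ASUS_WH, ASSUMED_RUNTIME_H)
print(f"ASUS vs M1 average draw at equal runtime: {asus_w / m1_w:.2f}x")  # 1.32x
```

At equal runtime the power ratio is simply the capacity ratio (66 / 49.9), so under that assumption the ASUS draws roughly a third more power on average.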

> Citation needed.
>
> Intel's financials would barely change even if they grabbed 100% of the (dirt cheap) ARM market.

Here's an article detailing how Intel intended to dominate the low-power sector too:

ARM vs. Atom: The battle for the next digital frontier

Small, inexpensive, power-efficient new chips from Intel and ARM are enabling the new wave of mobile devices -- and setting the two companies on a collision course


tkscz

Member

> The M1 is weak outside of cherry-picked scenarios. Has anyone actually seen gaming performance on these things? After all the praise, I was shocked to see how poorly it actually does; in fact, it's no better than whatever APUs are out now (which are themselves quite far behind actual dedicated systems in tech):
>
> VS a 4 core APU from 2 years ago!!
>
> All I have to say to the apple&arm fanboys:

Not defending Apple, but any game not on mobile or the Switch ISN'T meant to run natively on an ARM chip. Those games are x86-based, and even with an x86 emulator they wouldn't run correctly. You can't just run an x86-based application on an ARM chip and expect it to run the same. There is no real comparison for this at the moment.

On topic: I'm pretty sure Nintendo would be the only one to benefit from it, considering their console is powered by nVidia CUDA cores and ARM. There's no reason for the other two to jump ship versus sticking with AMD x86 CPUs, which are far better in most cases.

llien

Member

> You know most of that is ASICs

Says who?

> You know battery size is a thing.

You know that thing can be measured, right? And there are well-known figures for what it was back in 2012 and what it is now, right?

Or is it too much to expect from an Apple fan?

> compete with and outcompete x86 with only 4 real cores

No, that's not impressive; Intel can "outcompete" an 8-core Ryzen in select benchmarks with the same number of cores.

Oh, and with a much smaller number of transistors involved.

Oh, and on Intel's 10nm process, vs TSMC 5nm for Apple.

As for 32 cores, I need to know how many transistors that would be and how much power it would consume.


Omega Supreme Holopsicon

Member

> No, that's not impressive; Intel can "outcompete" an 8-core Ryzen in select benchmarks with the same number of cores.
>
> Oh, and with a much smaller number of transistors involved.
>
> Oh, and on Intel's 10nm process, vs TSMC 5nm for Apple.
>
> As for 32 cores, I need to know how many transistors that would be and how much power it would consume.

We will see.

But ARM cores are smaller. Also, Intel 10nm is said to be close to TSMC 7nm.

> You know that thing can be measured, right? And there are well-known figures for what it was back in 2012 and what it is now, right?
>
> Or is it too much to expect from an Apple fan?

I edited my post to add the battery sizes: with a smaller battery, Apple still achieves practically the same battery life as the Intel laptop with the highest battery life.

> Says who?

Maybe not ASICs, but there's the GPU, the NPU, several ASICs, and the low-power cores.

lostinblue

Banned

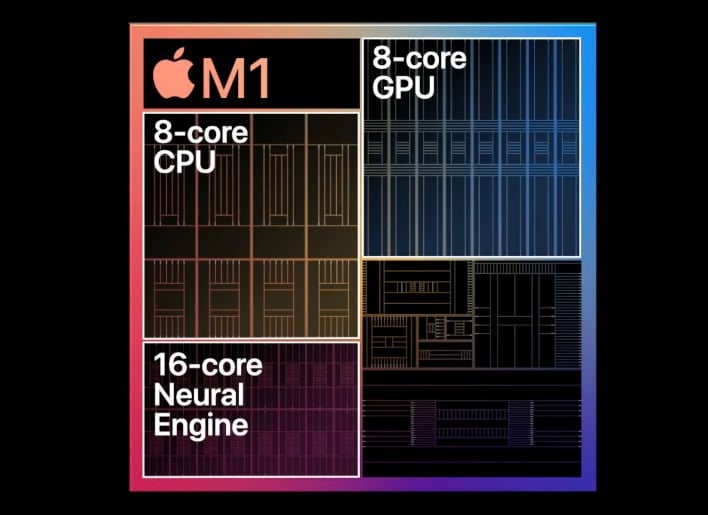

Here's the supposed floorplan:Maybe not asics, but there's the gpu, the npu, several asics, and low power cores.

If that's accurate, the 8 core GPU takes roughly as much space as the 8 core CPU.

That's a bit like saying Microsoft financials (and Microsoft as a company) wouldn't change much if they owned Android OS instead of Google. Business model notwitstanding.Intel's financials would barely change even if they'd grab 100% of the (dirt cheap) ARM market.

It would change the company business model, as profit margins are certainly not as big, but it means and moves a lot of money.


VAVA Mk2

Member

Nope. They will stick with AMD. The x86/x64 architecture makes BC easier going forward. With consoles, you don't need to worry about the power savings ARM focuses on, since they are plugged in all the time. Also, the Zen 3 architecture is so good. AMD has been killing it these last few years in terms of price-performance, which is ideal for console manufacturers given the price sensitivity of their core audience.


llien

Member

> I edited my post to add the battery sizes: with a smaller battery, Apple still achieves practically the same battery life as the Intel laptop with the highest battery life.

The original point was about a full 8-core x86 CPU (only AMD has those at the moment) being able to achieve 17+ hours of battery life (actually, they claim 20+ for video).

This is due to progress in manufacturing/fabs/architecture, not due to increased battery size as you have claimed.

As for Apple's chip, it's not a true 4-core; it is 4+4, with the second 4 created specifically for borderline "low power" scenarios.

Anyhow, it still lands in the same ballpark as full-blown x86 CPUs; no magic shown.

> Maybe not ASICs, but there's the GPU, the NPU, several ASICs, and the low-power cores.
>
> If that's accurate, the 8-core GPU takes roughly as much space as the 8-core CPU.

As opposed to (chuckle):

> That's a bit like saying Microsoft's financials (and Microsoft as a company) wouldn't change much if they owned Android instead of Google. Business model notwithstanding.
>
> It would change the company's business model, as profit margins are certainly not as big, but it means and moves a lot of money.

Microsoft entered that business for the same reason they entered the console business: out of fear. But yeah, fair enough.

Perhaps the best demonstration of what ARM can and cannot do is what Twitter is doing.

When EPYC (AMD's amazing Zen-based server chip) was rolled out, they jumped on the platform, citing ever-increasing performance demands but limited datacenter space.

Note that there is nothing x86-specific about the software they run (and even if there were, they would manage to port it; in today's world, it is no big deal).

So, why aren't they using ARM chips, which allegedly are out there somewhere (and are smaller, faster, and consume less power too)?


lostinblue

Banned

> Anyhow, it still lands in the same ballpark as full-blown x86 CPUs; no magic shown.

I don't think anybody claimed magic.

It's a jump in ARM single-core performance on computers that seemingly allows it to at least compete with current x86 offerings while generating less heat, even allowing passive cooling. It's a proof of concept for more open platforms, but until then it's mostly useless for non-macOS users and gaming in general. It's just something that exists and might drive demand and investment for similar solutions.

I also don't think anybody here is an Apple fan; it's just that they came up with a leap in ARM performance, and if there's a trick, I haven't seen it fall short on benchmarks yet. (But you and everybody claiming that they are just benchmarks are right; I myself want to see a Dhrystone/DMIPS test.)

> You are trusting (hidden) Apple ads too much.
>
> 4800U is a 15W Zen chip, with 9.8 billion transistors on 7nm.
>
> Apple's M1 is on 5nm, uses 16 billion transistors, is 15-25W and... while impressive for a non-x86 chip, it's nothing that would write off x86 either.

You mention transistors, but that's only part of the story.

I'll lay out the die sizes here:

AMD 4800U - 156 mm² @ 7nm TSMC

Apple M1 - 119 mm² @ 5nm TSMC

Even though it has more transistors, that doesn't necessarily make it a disaster in terms of the wafer area required.
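Dividing transistor count by die area makes the point concrete. A minimal Python sketch using only the transistor counts and die sizes quoted in this thread (the posters' figures, not independently verified); the result is a whole-die average, since caches, GPU and I/O pack at very different densities:

```python
def density_mtr_per_mm2(transistors: float, die_area_mm2: float) -> float:
    """Average density in millions of transistors per mm^2 across the whole die."""
    return transistors / die_area_mm2 / 1e6


# (transistor count, die area in mm^2) as quoted in this thread
chips = {
    "AMD 4800U (TSMC 7nm)": (9.8e9, 156.0),
    "Apple M1 (TSMC 5nm)": (16e9, 119.0),
}

for name, (transistors, area) in chips.items():
    print(f"{name}: {density_mtr_per_mm2(transistors, area):.1f} MTr/mm^2")
# AMD 4800U (TSMC 7nm): 62.8 MTr/mm^2
# Apple M1 (TSMC 5nm): 134.5 MTr/mm^2

print(f"M1/4800U transistors: {16e9 / 9.8e9:.2f}x, die area: {119.0 / 156.0:.2f}x")
# M1/4800U transistors: 1.63x, die area: 0.76x
```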

A few takeaways here, though:

- The die size is not that different from a regular x86 processor's die area (8 high-performance cores, against 4 high-performance + 4 low-power), especially considering it's using a smaller node. If I had to guess, the upcoming desktop variant of this CPU won't surpass 150 mm²; there's no reason to. So the rumors of "6 big cores" seem accurate. If I'm right, this is not a candidate for a many-core solution without chiplets. You can't pull a 16-core part into the area of an 8-core x86 CPU; it's "not that good".

- You certainly don't pay per transistor, so if you can fit more within the same area and get performance benefits from it, all the better.

- Having fewer transistors in their processor doesn't exactly translate to smaller cores or a lower chip cost per wafer area for AMD.

- These are very different processors with different design philosophies. I doubt boost clocks will ever be as effective on Apple/ARM, as demonstrated by the difference between the MacBook Air (passively cooled and power-throttled) and the MacBook Pro/Mac mini. I also doubt hyperthreading/SMT will make an appearance any time soon.

And the Ryzen 4800U is not a 15W part with boost clocks enabled. It's 15-25W as well.


llien

Member

> You mention transistors, but that's only part of the story.
>
> I'll lay out the die sizes here:
>
> AMD 4800U - 156 mm² @ 7nm TSMC
>
> Apple M1 - 119 mm² @ 5nm TSMC

Node matters, though, in this case in particular.

TSMC claims 5nm offers +84% density over 7nm.

So the 119 mm² M1 would be a 219 mm² chip in the 7nm world, making it a 40% bigger chip than the 4800U.
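The 7nm-equivalent estimate above is a single multiplication. A minimal Python sketch, assuming the whole design would scale by exactly TSMC's headline density claim (a best case, since logic, SRAM and analog blocks shrink by different amounts):

```python
def equivalent_area_mm2(area_mm2: float, density_gain: float) -> float:
    """Die area the same design would need on the older node, given the new/old density ratio."""
    return area_mm2 * density_gain


M1_AREA_5NM = 119.0       # mm^2, from the thread
R4800U_AREA_7NM = 156.0   # mm^2, from the thread
CLAIMED_N5_GAIN = 1.84    # "+84% density", TSMC's headline claim as quoted

m1_at_7nm = equivalent_area_mm2(M1_AREA_5NM, CLAIMED_N5_GAIN)
print(f"M1 at 7nm: {m1_at_7nm:.0f} mm^2")                         # 219 mm^2
print(f"vs 4800U: {m1_at_7nm / R4800U_AREA_7NM - 1:.0%} bigger")  # 40% bigger
```

Swapping in a different density ratio (for example one measured on shipping chips rather than the marketing figure) only changes `CLAIMED_N5_GAIN`.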

So even without taking the power-saving benefits into account, there's hardly anything to boast about, unless one adds a "but it's ARM" sticker to it, with the connotation of ARM being something inferior.

Agreed that it is an achievement *for the ARM world*, and I also suspect a nice margin boost for Apple.

Some users in this thread, however, talked about ARM taking over the x86 world. I just don't see that happening, at least with how things stand right now technology-wise.


lostinblue

Banned

> So, why aren't they using ARM chips, which allegedly are out there somewhere (and are smaller, faster, and consume less power too)?

Twitter I don't know, but Amazon already made the switch to ARM. They have 32% market share of the rentable cloud computing business; Microsoft Azure (19%) is looking into designing its own ARM processors; Alibaba (6%) will also switch, albeit to RISC-V.

Regardless of whether they trump x86 in single-core/multi-core performance on benchmarks, I think many-core low-power/low-cost processors are the future for big datacenter solutions.

x86 might still thrive with small/medium customers or be part of their pipeline there, but sales/volume is going to drop.

> Node matters, though, in this case in particular.
>
> TSMC claims 5nm offers +84% density over 7nm.
>
> So the 119 mm² M1 would be a 219 mm² chip in the 7nm world, making it a 40% bigger chip than the 4800U.

That's a big leap, although I do wonder if it's a best-case scenario. But even 50% would be huge, considering it's a 2nm (roughly -30%) node difference.

> Some users in this thread, however, talked about ARM taking over the x86 world. I just don't see that happening, at least with how things stand right now technology-wise.

Well, that's a normal thing to say when a mobile part manages to seemingly compete with desktops. Thing is, it isn't magic; it will have its caveats (it's not as scalable as people think, but perhaps it doesn't need to be), and it is Apple (non-licensable and patented to hell and back; if Qualcomm or another competitor comes up with something similar, I wonder whether they'd be in for years of lawsuits; this is Apple). I am mostly interested in seeing how the market will react.

I do think it might change the paradigm a little.


llien

Member

> Twitter I don't know, but Amazon already made the switch to ARM.

You are reading it wrong.

Amazon has added a custom ARM chip offering to their portfolio, and the main benefit of said instance is "price performance".

A far cry from ARM world dominance...

> Regardless of whether they trump x86 in single-core/multi-core performance on benchmarks, I think many-core low-power/low-cost processors are the future for big datacenter solutions.

If you check the direction AMD is going, it's pumping out more cores and running them at lower clocks, which is how you get to many cores at low power.

> Well, that's a normal thing to say when a mobile part manages to seemingly compete with desktops.

Well, yeah, except it's an "up to 24W" sort of "mobile part", and among the "desktops" we see the 4800U, a 15W CPU.

lostinblue

Banned

I found a transistor density table (per mm²) for Apple processors:

Source: https://semianalysis.com/apples-a14...-but-falls-far-short-of-tsmcs-density-claims/

So, the 7nm node used on the A13 had 89.97 million transistors per mm², the 5nm node used on A14 (and M1) had 134.09 million transistors per mm².

"Despite TSMC claiming a 1.8x shrink for N5, Apple only achieves a 1.49x shrink"

49% better density. I wonder if it'll improve next year.
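The 1.49x figure is just the ratio of the two densities in the table. A minimal Python sketch that reproduces it and, treating A14 as a rough proxy for the M1 (keeping in mind A14 is not a straight shrink of A13), re-runs the 7nm-equivalent area estimate with the achieved ratio instead of TSMC's 1.84x claim:

```python
A13_DENSITY = 89.97    # MTr/mm^2, A13 on TSMC N7, from the cited table
A14_DENSITY = 134.09   # MTr/mm^2, A14 (same node as M1) on TSMC N5, from the cited table
CLAIMED_SHRINK = 1.8   # TSMC's N5 claim, as quoted

achieved = A14_DENSITY / A13_DENSITY
print(f"Achieved shrink: {achieved:.2f}x")  # 1.49x
print(f"Share of claimed gain realized: {(achieved - 1) / (CLAIMED_SHRINK - 1):.0%}")  # 61%

# Re-run the 7nm-equivalent estimate with the achieved ratio (119 mm^2 M1 vs 156 mm^2 4800U)
m1_at_7nm = 119.0 * achieved
print(f"M1 at 7nm: {m1_at_7nm:.0f} mm^2, {m1_at_7nm / 156.0 - 1:.0%} bigger than the 4800U")
# M1 at 7nm: 177 mm^2, 14% bigger than the 4800U
```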


lostinblue

Banned

> You are reading it wrong.
>
> Amazon has added a custom ARM chip offering to their portfolio, and the main benefit of said instance is "price performance".
>
> A far cry from ARM world dominance...

I don't think it would be cost-effective for Amazon to invest in it if they weren't planning on switching. I do think it is a forward-looking move.

You're right that most of their x86 datacenter machines were certainly not sidelined because they consume more energy. But I do wonder if they're still buying more.

They will also offer access to Apple M1 Macs through AWS, if customers demand them. So in a way, they'll do whatever their customers demand.

> If you check the direction AMD is going, it's pumping out more cores and running them at lower clocks, which is how you get to many cores at low power.

I look forward to that.

I don't look forward to the upcoming big.LITTLE Intel processors, though. But I might be wrong.


llien

Member

> "Despite TSMC claiming a 1.8x shrink for N5, Apple only achieves a 1.49x shrink"

A14 is not a "shrink" of A13 to begin with.

There are various reasons for going with less dense designs (clock speed, power limitations, and yields, among others).

llien

Member

> I don't think it would be cost-effective for Amazon to invest in it if they weren't planning on switching.

I don't think you understand what AWS is.

It is a cloud that Amazon "sells" as a service.

You go there and rent a "server".

What you are referring to is Amazon's new offering: now you can rent a new type of server with that new CPU (that "Graviton" thing):

Amazon Cloud Offerings

Choice of processors

A choice of latest generation Intel Xeon, AMD EPYC, and AWS Graviton CPUs enables you to find the best balance of performance and price for your workloads. EC2 instances powered by NVIDIA GPUs and AWS Inferentia are also available for workloads that require accelerated computing such as machine learning, gaming, and graphic intensive applications.


lostinblue

Banned

> A14 is not a "shrink" of A13 to begin with.
>
> There are various reasons for going with less dense designs (clock speed, power limitations, and yields, among others).

It's not a shrink for sure, but it can still be indicative of how much density they were able to get out of it compared to the previous node.

> I don't think you understand what AWS is.
>
> It is a cloud that Amazon "sells" as a service.
>
> You go there and rent a "server".
>
> What you are referring to is Amazon's new offering: now you can rent a new type of server with that new CPU:
>
> Amazon Cloud Offerings

What you wrote is precisely what I thought it was/is.

But I realize there are certainly a lot of things about it that I'm not overly familiar with.

I was (very) wrong in claiming "a switch" had occurred, though I still think it might happen. What I understand now is that it won't be Amazon doing it, any more than the clients specifically requesting that service (all Amazon did was set a lower price for it).


llien

Member

> It's not a shrink for sure, but it can still be indicative of how much density they were able to get out of it compared to the previous node.
>
> What you wrote is precisely what I thought it was/is.
>
> But there are certainly a lot of things about it that I'm not overly familiar with, though.

It would be indicative if Apple had been on a quest to pack in as much stuff as possible, which is not something we know happened.

llien

Member

> What you wrote is precisely what I thought it was/is.

Then it is puzzling, to put it softly, how you read it as "ARM is now 3x% of the cloud space".

It is perhaps 1% of AWS at this point.

lostinblue

Banned

> Then it is puzzling, to put it softly, how you read it as "ARM is now 3x% of the cloud space".
>
> It is perhaps 1% of AWS at this point.

Right. Oversight on my part.

That doesn't mean I don't understand what it is and what their business is.

As you said regarding Twitter's needs, "there is nothing x86-specific about the software they run"; I assumed the same thing about Amazon.

Thing is, I assumed clients didn't even necessarily have to know what type of server they were using because of that, at least in some cases (storage, for instance). So there.

I didn't necessarily think that 30% of the market was ARM this soon; I thought there was a switch occurring. I still think it is happening, but now I think it'll happen at a slower pace.

Kazekage1981

Member

Samsung and AMD

The question is, how will they make games for the ARM and x86 architectures at the same time, at 4K 60fps with HDR and ray tracing with DLSS?

Omega Supreme Holopsicon

Member

> So, why aren't they using ARM chips, which allegedly are out there somewhere (and are smaller, faster, and consume less power too)?

There are no custom high-performance ARM-based chips outside the M1. They'd have to custom-design them and spend several hundred million. I think Microsoft, AMD, and Apple are now designing high-performance ARM CPUs. Nvidia might also be planning to get in on the action.

llien

Member

> There are no custom high-performance ARM-based chips outside the M1.

And perhaps there are no custom high-performance ARM-based chips because even the "high performance ARM" M1, with a node and size advantage (+40% in equivalent terms), at best trades blows with the (already dated at this point) 15W 4800U.

> Nvidia is a pretty scummy company; there's bad blood between them and a lot of the major players, so nobody wants to work with them anymore.

Bad blood caused by money can be fixed by money too.

The reason nVidia isn’t in consoles isn’t because they can’t; it’s because they don’t see the value in making customized console chips versus their GPU lineup.

If they felt there was a better opportunity there, you can bet your ass they’d be on board. For whatever reason, they believe there’s more value to be gained by allocating their resources elsewhere.

Omega Supreme Holopsicon

Member

> And perhaps there are no custom high-performance ARM-based chips because even the "high performance ARM" M1, with a node and size advantage (+40% in equivalent terms), at best trades blows with the (already dated at this point) 15W 4800U.

M1.

In some tests, its single-core performance is near the state-of-the-art Ryzen 5000 desktop CPUs, all while consuming 1/10th the energy. When we get higher-core-count, higher-wattage desktop M chips, expect them to smoke x86.

Rikkori

Member

> When we get higher-core-count, higher-wattage desktop M chips, expect them to smoke x86.

Nope. That's not how things scale in the real world.

Omega Supreme Holopsicon

Member

> Nope. That's not how things scale in the real world.

It doesn't matter how badly it scales: if it goes from the ~3 GHz low-power clock to, say, ~4.5 GHz (a conservative speed that should be relatively easy to achieve), that should gain ~50% performance, taking it from a ~1500 to a ~2250 single-core score, while Ryzen's state-of-the-art single-thread score is ~1600.
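The ~2250 figure assumes the score scales perfectly with clock speed. A minimal Python sketch of that arithmetic, with one added, hypothetical knob: a share of runtime that does not speed up with the core clock (memory stalls, for instance), which is the kind of scaling issue being objected to. The model and the fractions are illustrative assumptions, not measurements of the M1:

```python
def projected_score(base_score: float, base_ghz: float, target_ghz: float,
                    non_scaling_fraction: float = 0.0) -> float:
    """Project a single-thread score after raising the core clock.

    Toy model (an assumption, not measured M1 behavior): the share of runtime
    that is clock-bound shrinks by base_ghz / target_ghz, while the remaining
    non_scaling_fraction (e.g. time spent waiting on memory) stays the same.
    """
    if not 0.0 <= non_scaling_fraction <= 1.0:
        raise ValueError("non_scaling_fraction must be between 0 and 1")
    clock_ratio = target_ghz / base_ghz
    relative_time = (1.0 - non_scaling_fraction) / clock_ratio + non_scaling_fraction
    return base_score / relative_time


# The post's figures: ~1500 at ~3 GHz, pushed to a hypothetical ~4.5 GHz.
print(round(projected_score(1500, 3.0, 4.5)))  # 2250 with perfect scaling
for f in (0.1, 0.2, 0.3):  # hypothetical non-scaling shares of runtime
    print(f, round(projected_score(1500, 3.0, 4.5, f)))
```

With perfect scaling the post's 2250 falls out; if 30% of the runtime doesn't speed up with the clock, the same bump yields about 1957, and real chips also run into voltage and power walls that this toy model ignores.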


Rikkori

Member

> It doesn't matter how badly it scales: if it goes from the ~3 GHz low-power clock to, say, ~4.5 GHz (a conservative speed that should be relatively easy to achieve), that should gain ~50% performance, taking it from a ~1500 to a ~2250 single-core score, while Ryzen's state-of-the-art single-thread score is ~1600.

Ah yes, I also love making up numbers to fit my fantasies. But why go so low? Why not 100%? Clearly Apple is such a special company they can do it! Don't lowball their abilities.

llien

Member

> In some tests

Dude, you knew what the chances of me clicking on that were, didn't you?

When the list of benchmarks includes random bazinga like Geekbench, you can "achieve" a lot.

That, however, doesn't reflect what the chip is really capable of.

Take Cinebench (not a perfect benchmark either, but no random number generator like Geekbench; also note that there are barely any apps that could be benchmarked), and here's what we've got, single-threaded:

That is pretty formidable for a 24W CPU, but note how even the 4800U isn't far behind, even though the M1 has a major node advantage.

And to see what it really is, here's what happens when we go multi-threaded:

So in a real use case with a real application, the 25W M1 is notably slower than the 15W 4800U, and once you start using emulation, you are at nearly half of the 4800U's performance.

Nothing here hints at "future ARM domination", to put it softly.

(Also note the +perf +wattage ratio.)

> it goes from the ~3 GHz low-power clock

25W is by no means "low power" in today's world. Many Zens have a TDP of 65W; some are even 45W.

> Bad blood caused by money can be fixed by money too.

No, bad blood caused by someone being an ass leads to there being no trust, which leads to "the risks are too high to deal with this company".

Apple stopped touching anything green way before they rolled out their own chips, even back when NV had no real competition, with AMD just starting the ryzing.


DeepEnigma

Gold Member

RDNA 2 is entering and about to dominate mobile graphics, so we’ll see how that translates over the years with compatibility.

I don’t see it next gen, however.


> Dude, you knew what the chances of me clicking on that were, didn't you?
>
> When the list of benchmarks includes random bazinga like Geekbench, you can "achieve" a lot.
>
> That, however, doesn't reflect what the chip is really capable of.
>
> Take Cinebench (not a perfect benchmark either, but no random number generator like Geekbench; also note that there are barely any apps that could be benchmarked), and here's what we've got, single-threaded:
>
> That is pretty formidable for a 24W CPU, but note how even the 4800U isn't far behind, even though the M1 has a major node advantage.
>
> And to see what it really is, here's what happens when we go multi-threaded:
>
> So in a real use case with a real application, the 25W M1 is notably slower than the 15W 4800U, and once you start using emulation, you are at nearly half of the 4800U's performance.
>
> Nothing here hints at "future ARM domination", to put it softly.
>
> (Also note the +perf +wattage ratio.)
>
> 25W is by no means "low power" in today's world. Many Zens have a TDP of 65W; some are even 45W.
>
> No, bad blood caused by someone being an ass leads to there being no trust, which leads to "the risks are too high to deal with this company".
>
> Apple stopped touching anything green way before they rolled out their own chips, even back when NV had no real competition, with AMD just starting the ryzing.

Risks caused by money.

It’s all about money. Everyone knows nVidia has the top product. It’s not like there’s “risk” because they can’t produce; there’s risk around nVidia’s willingness to customize, or to do so at a particular price point and a particular volume. nVidia just refuses to make cheap bids on contracts because they don’t need to; that’s it. AMD, on the other hand, has been forced to in order to survive.


Omega Supreme Holopsicon

Member

> So in a real use case with a real application, the 25W M1 is notably slower than the 15W 4800U, and once you start using emulation, you are at nearly half of the 4800U's performance.
>
> Nothing here hints at "future ARM domination", to put it softly.
>
> (Also note the +perf +wattage ratio.)

Using multi-threaded results is disingenuous. The ARM M1 only has 4 real cores; of course it won't keep up with 8 real cores.

Single-threaded, you have around 25% more performance than a comparable Ryzen mobile chip. When you get 8-16 real cores in a full chip, expect multi-core to own Ryzen and Intel as well.

Also, the 4800U is a 4+ GHz chip going against a ~3 GHz chip, yet the ~3 GHz chip has 25% higher single-thread performance. When Apple releases a 4+ GHz chip, expect a ~50% single-thread performance improvement, and similar in multi-core.

> Ah yes, I also love making up numbers to fit my fantasies. But why go so low? Why not 100%? Clearly Apple is such a special company they can do it! Don't lowball their abilities.

Do you think releasing a ~4 GHz chip on a 3nm node is an outlandish fantasy? And again, do you think 50% higher clocks will not lead to significantly higher performance?

Rikkori

Member

> Do you think releasing a ~4 GHz chip on a 3nm node is an outlandish fantasy? And again, do you think 50% higher clocks will not lead to significantly higher performance?

What I think is that you are ignoring so many issues around scaling, ranging from how the chips are designed in the first place to key aspects of the M1 itself and how it's kneecapped from scaling further (in particular as it relates to the memory arrangement), that it's pointless to get into these discussions of "oh, but if they increase bla bla by bla bla, then it's going to be super duper mega good and better than everything else x86". That's not even getting into the business side, which is just as crucial to these endeavours as the technical ability of the companies in question.

Right now I compare like for like, mainly around what I'm most familiar with and interested in: games. From that perspective, even Apple's ARM efforts (which, by the way, are magnitudes above anyone else's on the market, simply due to their size and wealth) mostly put me to sleep with disinterest. I hear it's great, but I've yet to actually see it outside of synthetic benchmarks, which are a further soporific.

So all I can do is treat claims like yours about these hypothetical monster ARM CPUs with mild amusement.

Omega Supreme Holopsicon

Member

> What I think is that you are ignoring so many issues around scaling, ranging from how the chips are designed in the first place to key aspects of the M1 itself and how it's kneecapped from scaling further (in particular as it relates to the memory arrangement), that it's pointless to get into these discussions of "oh, but if they increase bla bla by bla bla, then it's going to be super duper mega good and better than everything else x86". That's not even getting into the business side, which is just as crucial to these endeavours as the technical ability of the companies in question.
>
> Right now I compare like for like, mainly around what I'm most familiar with and interested in: games. From that perspective, even Apple's ARM efforts (which, by the way, are magnitudes above anyone else's on the market, simply due to their size and wealth) mostly put me to sleep with disinterest. I hear it's great, but I've yet to actually see it outside of synthetic benchmarks, which are a further soporific.
>
> So all I can do is treat claims like yours about these hypothetical monster ARM CPUs with mild amusement.

We will see what happens when high-power Apple desktop chips are released and when companies like Adobe release native versions of their apps. Right now, the M1 hints that things are about to get ugly for x86.

llien

Member

Nobody would write off NV if all it did was perceived as normal in business.AMD on the other hand has been forced to in order to survive.

Exactly the opposite has happened: J.H. acted like an asshole, and those who could learned to avoid doing business with him.

Running Cinebench on a single core is disingenuous; it is not something real humans do in practice.

Using multithreaded benchmarks is disingenuous. The ARM M1 only has 4 real cores; of course it won't keep up with 8 real cores.

M1 has 8 real cores, 4 of which are bigger than 4 others.

Too bad the M1 consumes 25W even at 3GHz, chuckle.

The 4800U is a 4+GHz chip going up against a ~3GHz chip.

That funny take works both ways.

Single-threaded, you get around 25% more performance than with a comparable Ryzen mobile chip.

A 25W chip with 16 billion transistors, unevenly split between 4+4 cores, is beating a single core of a 15W chip with 9.8 billion transistors evenly split between 8 cores, and trailing behind when all the cores are actually used.

Don't forget to wake me up to check the figures out, chuckle.

When Apple releases 4+GHz

How big of a node advantage would it need to beat "inferior" x86 chips from AMD?

I think the chance for either Sony or Microsoft to go ARM has passed them by. They had their chance last generation, when it would have been the ideal time. But after two generations of x86, plus emulation and the PC gaming space, a lot would have to happen now. Also, Nvidia has burned both companies within the last 20 years.

I'm not sure about the difficulties of porting games between x86 and ARM. Obviously Nintendo went that direction with the Switch, a mobile/handheld device. But a clean break goes against Microsoft's nature at this point, unless emulation is possible/easy. Sony, on the other hand, is the more likely of the two to do a reset or clean break.

I for one, welcome our new ARM overlords. Apple is doing some interesting things too.

TheMay30thMan

Member

I hope one switches to NVIDIA and one stays. I want to see what the differences bring to the console wars.

Omega Supreme Holopsicon

Member

4 are high-power cores; 4 are for low-power use and are significantly weaker. The performance per big core is probably indicative of what will happen when we get 8-16 big cores.

Running Cinebench on a single core is disingenuous; it is not something real humans do in practice.

M1 has 8 real cores, 4 of which are bigger than 4 others.

You will get them.

Don't forget to wake me up to check the figures out, chuckle.

Atom was owned by ARM, and full-body x86 will be owned by ARM too.

I'm still one of those guys who wishes 68k (Amiga, ST) chips were still a thing. Anything other than Intel and x86, to some degree: MIPS, RISC, ARM, whatever. For the first time, I am very interested in the new M1 Apple products, and I've never owned a Mac of any kind. Is it worth the plunge? I honestly don't game much on my PC anymore; I use it for remote work and browsing. I also have Apple products (tablet and phone).

Omega Supreme Holopsicon

Member

It's still early in the transition from x86 to ARM, so some apps might have compatibility issues. But for light workloads like browsing, it is said to be lightning fast, with very low power consumption too.

llien

Member

That take works both ways. Since the other 4 cores are small, the first 4 can be bigger, while Ryzen has all its cores the same size.

4 are high-power cores; 4 are for low-power use and are significantly weaker.

With 4 cores pushing 25W at 3GHz, what frequency would an 8-core (let alone a 16-core) chip run at?

The performance per big core is probably indicative of what will happen when we get 8-16 big cores.

Ah, suddenly it is about "owning Atom"...

Atom was owned by ARM, and full-body x86 will be owned by ARM too.

I also happen to have missed when Atom was "owned"; to me it looked like Atom actually debunked "low power = ARM world".

Ultimately, Intel failed to capture the mobile market (that was the ultimate goal, I guess; I don't remember whether Microsoft is also to blame, since they were supposed to push Win32 on x86 on phones). On the other hand, product-wise, its mobile chips (Haswell, Ivy Bridge) got down to the "under 10W" level, and if so, why bother with Atom?

Trimesh

Banned

Because, despite the reasonable-looking headline power consumption, the system-level power drain of the Intel mobile chips is too high for the sort of applications Atom was aimed at. This is, IMO, simply down to Intel not being committed enough to the market to put the money into moving their designs forward. OK, they got off on the wrong foot with the original series of single-issue in-order Atom chips: they ticked the "low power consumption" box, but the performance was grossly deficient. But the Moorefield Atoms (AKA Z-series) were a huge improvement and were highly competitive with the contemporary ARM designs in performance terms. They lost out a bit in system-level power consumption due to the dual-channel memory setup, but that same feature made them very fast in applications that demanded a lot of memory bandwidth.

The only significant deficiency was the lack of integrated LTE (well, any integrated radio at all, in fact), and a lot of people (including me) were expecting Intel to follow up with newer parts that addressed this and improved performance to stay in line with what the ARM vendors were offering. But instead they did... nothing. Well, they released a few almost identical but slightly higher-clocked SKUs that still needed a separate radio and now seemed quite slow compared with the ARMs. At this point, Asus (one of the few companies to get on board with Intel's Atom-based smartphone platform) went "fuck it" and made their next phone using a Qualcomm ARM-architecture CPU.