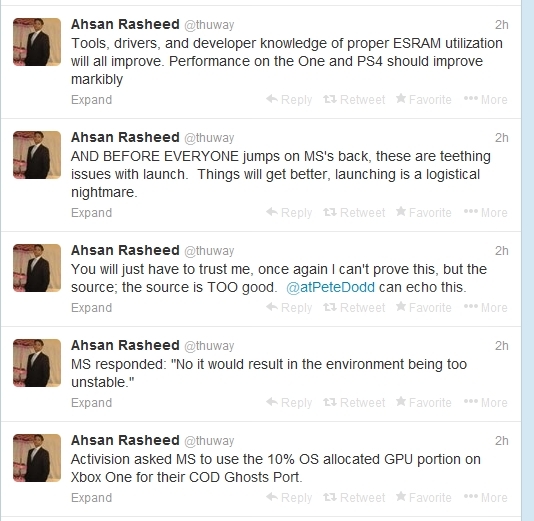

iamshadowlark

Banned

Seriously, the hot RAMZ was FUD, I thought this was dispelled months ago?

Dispelled? It's fact lol. The FUD was that the GDDR5 was unmanageable.

More pics:

There isn't even a memory temp showing in this picture... Why are you trying to double down on the stupid?

Are you slow? The one that's clearly labeled MEMIO is the sensor by the memory bank...

And the sensor is placed there to measure the heat. It's not that hard.

No, I'm not slow. GPU MEMIO is the subsection of the GPU that interfaces with the memory. Again, it is not RAM just like VRMs are not RAM.

And the sensor is placed there to measure the heat. It's not that hard.

No, it's VRM.

On AMD 6900s the voltage regulator is VReg

Interesting, I wonder if Microsoft let them do it.

The VRM is not the RAM.

Sure. ALUs are a lot more sensitive to heat than RAM chips. But yeah, RAM runs hotter than anything.

not my captions

I don't think this is accurate..

Interesting, I wonder if Microsoft let them do it.

Because it's better to triple down.

There isn't even a memory temp showing in this picture... Why are you trying to double down on the stupid?

According to another tweet from that timeline, no. Wouldn't make any sense anyway. The whole point of the XBO's OS setup and resource reservation is to provide solid isolation between the Win8/apps-part and the game-part. Compromising that isolation would also compromise the concept of the console. (Assuming that it could even be done.)

VRAM is the GDDR pool. VRM (Video RAM) is what you're looking for. It's 57C (VRAM) to 39C (GPU). GDDR runs very hot.

Your points are going everywhere.

Yes again, RAM is a lot less sensitive to heat than logic is. We don't know which card is being used but it's very clear what is being shown. GPU-Z pulls data directly from the GPU sensors, so it's as accurate as they are.

VRAM runs hottest in most high-end systems, it's well established, I can provide more pictures that all show the same conclusion if you want...

So even with the extra 10% APU allocation, CoD: Ghosts is still only 720p?

Is that what this amounts to?

Yes, it is measuring the heat of the memory controller which is not RAM. I'm glad we are in agreement now.

It's measuring the temperature of the RAM. There is hardly any logic even in a MC, there's no reason it would need a sensor as there is generally no reason to know its temperature.

You're wrong about this just like you were wrong about what a VRM is, but there is clearly no convincing you of that fact so enjoy living in ignorance I suppose.

Has this already been discussed somewhere?

I actually find that odd. I didn't think that the raw processing power was one of CoD's bottleneck, but the ESRAM size alone.

You first posted an image with GPU VRM Temperature circled while the GPU Memory temperature was at 31 degrees Celsius.

The voltage regulator is VREG. I'll take the real world if you don't mind

I don't see all the fuss. It's early and devs haven't even tapped into the cloud yet.

1) I do not see VREG anywhere and the VRM is the voltage regulation module. http://en.wikipedia.org/wiki/Voltage_regulator_module

2) The biggest heat source on a dGPU is the part that draws the most power, which is the GPU itself. This is why it is actively cooled, unlike the VRM and the memory modules, which on stock configurations settle for passive cooling.

3) The fact that the GPU core is at a lower temperature than the VRM/Memory does not mean it is generating less heat because it is actively cooled and a large portion of the heat generated is removed via the heatsink and fan.

You first posted an image with GPU VRM Temperature circled while the GPU Memory temperature was at 31 degrees Celsius.

You then used the VRM acronym to mean Video RAM while the GPU memory obviously refers to the outside temperature. (My conjecture, you never said what it could mean if VRM is Video RAM.)

You doubled down on this misinterpretation.

I don't think ESRAM makes sense as an excuse for CoD. The engine is not using deferred rendering.

Weak GPU makes more sense. Fillrate issue.
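A rough way to put numbers on the fillrate point: theoretical peak pixel fillrate is the ROP count times the core clock. The sketch below uses the ROP counts quoted later in this thread (32 vs 16). The clock speeds (800 MHz and 853 MHz) are assumptions that nobody in the thread states, and the function name is made up for illustration. Real games never hit the theoretical peak, so treat this as an upper bound only.

```python
# Theoretical peak pixel fillrate = ROPs x core clock
# (assumes one pixel written per ROP per cycle).
# Clock speeds are assumptions; they are not given in this thread.
PS4_CLOCK_MHZ = 800
XB1_CLOCK_MHZ = 853


def peak_fillrate_gpix(rops: int, clock_mhz: float) -> float:
    """Return the theoretical peak fillrate in gigapixels per second."""
    return rops * clock_mhz / 1000.0


ps4 = peak_fillrate_gpix(32, PS4_CLOCK_MHZ)
xb1 = peak_fillrate_gpix(16, XB1_CLOCK_MHZ)
print(f"PS4: {ps4:.1f} Gpix/s, Xbox One: {xb1:.1f} Gpix/s, ratio {ps4 / xb1:.2f}x")
```

Under those assumed clocks, the slightly higher Xbox One clock does not come close to making up for having half the ROPs.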

I'm not a developer. I have no idea.

There are a lot of confounding factors. CoD: Ghosts should be higher than 720p on Xbox One. It is a marginally improved engine. My natural inclination is to say things will get better, but what if they upgrade their technology next year or the year after and the game becomes more technically demanding?

Keep in mind this gen the resolution of CoD got worse over time (until the end, when Blops 2 made it sorta wackily different).

The GPU is weak. Period. The PS4 GPU is not a superstar but the Xbone GPU is like worse than the RSX for its respective time period.

Can anyone with more technical knowledge than me comment on the capability of the ESRAM with deferred rendering? It seems like forward rendered games are capable of running at 1080p (Forza) while deferred engines are having problems. Does this have to do with the size of the framebuffer in relation to the 32MB of ESRAM? And if that is the problem, is there a possible workaround?

That's quite telling then. It would certainly imply that the XB1's GPU had trouble handling Ghosts at higher than 720p resolutions when only 90% of it is available

That's odd

The GPU is weak. Period. The PS4 GPU is not a superstar but the Xbone GPU is like worse than the RSX for its respective time period.

I don't think ESRAM makes sense as an excuse for CoD. The engine is not using deferred rendering.

Weak GPU makes more sense. Fillrate issue.

I think MS will have to settle with no snap mode for some games. May become a per game feature. 10% of GPU is a lot. Especially when you're already underpowered.

Well, unless I'm mistaken, so is the PS4's GPU. Not to downplay the comparison too much, but yeah.

As far as PS4 vs Xbox One GPU wise, here's how it stacks up.

PS4: 1.84 TF GPU (18 CUs)

PS4: 1152 Shaders

PS4: 72 Texture units

PS4: 32 ROPs

PS4: 8 ACE / 64 queues

PS4: 8GB GDDR5 @ 176GB/s

Versus

Xbone: 1.31 TF GPU (12 CUs)

Xbone: 768 Shaders

Xbone: 48 Texture units

Xbone: 16 ROPs

Xbone: 2 ACE / 16 queues

Xbone: 8GB DDR3 @ 69GB/s + 32MB ESRAM @ 109GB/s
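For anyone wondering where the TF figures come from, here is a minimal sketch of the usual arithmetic: compute units times 64 shaders per CU, times 2 floating-point operations per clock (one fused multiply-add), times the core clock. The clock speeds (800 MHz and 853 MHz) are assumptions not stated in this thread, and `peak_tflops` is a hypothetical helper name. The `available` parameter models the 10% GPU reservation discussed above.

```python
SHADERS_PER_CU = 64    # shaders per GCN compute unit (18 * 64 = 1152, 12 * 64 = 768)
FLOPS_PER_SHADER = 2   # one fused multiply-add per clock counts as 2 FLOPs


def peak_tflops(cus: int, clock_mhz: float, available: float = 1.0) -> float:
    """Theoretical peak TFLOPS; `available` is the fraction games can use."""
    shaders = cus * SHADERS_PER_CU
    return shaders * FLOPS_PER_SHADER * clock_mhz * 1e6 * available / 1e12


# Clock speeds below are assumptions, not figures from the thread.
print(f"PS4:               {peak_tflops(18, 800):.2f} TF")
print(f"Xbox One:          {peak_tflops(12, 853):.2f} TF")
print(f"Xbox One at 90%:   {peak_tflops(12, 853, available=0.9):.2f} TF")
```

These are paper numbers only. They say nothing about memory bandwidth or how well an engine actually uses the hardware.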

Yes but you know what, it's always been like that. Reviews for example are often based on personal preferences and are rarely ever objective. That's why I never read them. We are surrounded by it all year round and now people are surprised because it concerns hardware? Where have these people been all this time?

Yes the PS4 GPU isn't particularly powerful but considering the differences the XB1's is very very weak

Well, unless I'm mistaken, so is the PS4's GPU.

It depends on the metric. In pure performance terms the PS4/Xbox One GPUs are slower compared to top-tier PC parts than the 360/PS3 were back when they launched. In terms of power consumption the PS4/Xbox One parts are roughly on par with what was in the 360 and the PS3. The issue is that on the PC front power consumption for graphics cards has been going up.

When you want to design a box that is going to be small, quiet and stable you cannot put a 200+ watt GPU into it without making a compromise on size or noise.

I think MS will have to settle with no snap mode for some games. May become a per game feature. 10% of GPU is a lot. Especially when you're already underpowered.

In terms of architecture too, the PS4 GPU is more modern.

I'm not technically inclined but I've been reading on this stuff and size is the problem for the most part. With deferred rendering on modern engines, 32MB is just enough for around 720p-900p.

I'm not sure if there are any workaround other than not using deferred rendering but other more technically inclined GAF members should be able to answer you.
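To make the size argument concrete, here is a back-of-the-envelope sketch of how much memory the render targets need at each resolution compared to 32MB of ESRAM. The buffer layouts are hypothetical: the deferred case assumes four 32-bit G-buffer targets plus a 32-bit depth buffer, and the forward case assumes one 32-bit color target plus depth. Actual engines use different layouts, so the exact cutoff will vary.

```python
ESRAM_BYTES = 32 * 1024 * 1024  # 32MB of ESRAM

# Hypothetical layouts (bytes per pixel); real engines differ.
DEFERRED_BPP = 4 * 4 + 4  # four 32-bit G-buffer targets + 32-bit depth
FORWARD_BPP = 4 + 4       # one 32-bit color target + 32-bit depth

RESOLUTIONS = {"720p": (1280, 720), "900p": (1600, 900), "1080p": (1920, 1080)}


def render_target_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Total bytes needed for all render targets at the given resolution."""
    return width * height * bytes_per_pixel


def fits_in_esram(width: int, height: int, bytes_per_pixel: int) -> bool:
    """True if every render target fits in ESRAM at the same time."""
    return render_target_bytes(width, height, bytes_per_pixel) <= ESRAM_BYTES


for name, (w, h) in RESOLUTIONS.items():
    for label, bpp in (("deferred", DEFERRED_BPP), ("forward", FORWARD_BPP)):
        mib = render_target_bytes(w, h, bpp) / (1024 * 1024)
        verdict = "fits" if fits_in_esram(w, h, bpp) else "does NOT fit"
        print(f"{name} {label}: {mib:.1f} MiB, {verdict}")
```

With this assumed layout the deferred targets fit at 720p and 900p but not at 1080p, while the forward targets fit at 1080p. That lines up with the pattern described in the thread, although it does not prove that ESRAM size is the actual cause for any particular game.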