$450 for good 1440p performance is expensive? Yet the Nvidia equivalent with less performance is more expensive... Raytracing is a non-feature atm. No one is buying these cards for raytracing. To play what? Battlefield at low rez and squashed framerates, Quake II at 540p 25fps? Is that why AMD is behind Nvidia?

So you see, these folks have no problem paying sky-high prices for Nvidia and its mediocrity, trumpeting raytracing like it's revolutionary, when all they have is hybrid raytracing in four games, each using it for a single graphical effect, not even the real thing (the Quake demo aside)... And yet no one is tanking their rez and perf in Battlefield, Tomb Raider or Metro for raytracing, when the visual differences are not even arresting to any degree...

$450 is too much money to spend on AMD for more perf, but $500/600 is A-OK to spend on Nvidia for less perf... Better leave that logic at the door. The other thing that's a bit disingenuous is how no one speaks of AMD's features and how revolutionary they are: Radeon Chill, ID buffer, HBCC etc., and now FidelityFX, Image Sharpening and Anti-Lag, plus on average more RAM than the competition... As you said, AMD is the only place you can get variable refresh rate over HDMI, which is common knowledge, but nobody speaks of these strengths of AMD over Nvidia as a positive. When they do, like you, they say, "the only reason I'm buying AMD is because of this or that, otherwise I'd buy Nvidia." Yet they can never praise AMD for doing things or offering features NV doesn't. Instead they want all these AMD features that NV does not have for $5, while NVIDIA can charge $1,200 to play a Quake demo at 1080p 60fps, because that's what we've been waiting for and that's what justifies RTX...

It's just like the Radeon VII. At $699, you can get the best productivity card for YouTubers, amateurs and even entry-level pros without breaking the bank. It's a great 4K gaming card, and of course it has tremendous bandwidth and 16GB of HBM for futureproofing (which is expensive as all hell)... Yet NV charges $700-800 for an RTX 2080 with half the VRAM and bandwidth (cheaper VRAM too), which plays Quake at what? Nobody enables RTX to play Battlefield online or single-player at lower framerates and rez... Yet every NV fan is justifying these prices, and it's AMD that must be every NVIDIA fan's charity sponsor. I think it's high time AMD gave the proverbial finger to said persons... No NV fan is saying to NV, "Hey, RTX support is a mess, and these cards are too expensive for just Pascal performance with less RAM at a 40% markup." Everyone wants 2080 Ti performance for the price of a GTX 1060 from AMD, but they will never ask this of NV. Yet they will blast AMD for offering better perf in many GPU classes; there's always something, innit...

Yet I know such internet talk is just NV fans huffing and puffing. They all have 2080 Tis, you see, and AMD just can't put out a card better than that, so why should they upgrade...

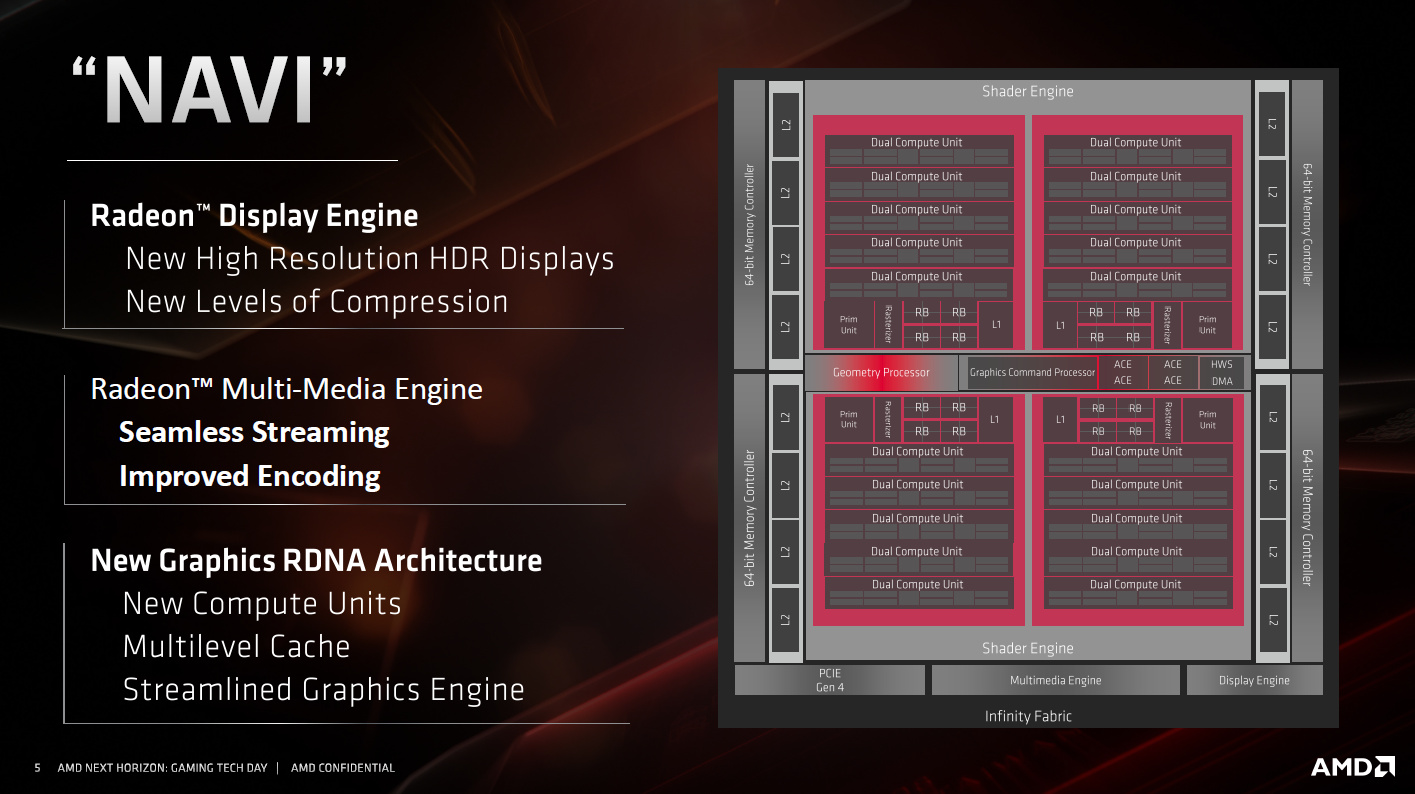

Lmao... In any case, AMD has momentum now and Navi will do very well in this market. They targeted the right set of cards for the first line of Navi products.