Sebbi on Beyond3D talked about a 30% performance boost with async compute. The Oxide dev mentioned something along those lines, referring to those who used it on consoles.

This is the same Q-Games assessment of gains from async compute in The Tomorrow Children. This example a) holds for the PS4 console only and b) is actually somewhat light on asynchronous processing, if their presentations on the matter are accurate. It is unknown whether this is in fact a gain from running things asynchronously or a gain from doing things differently on the GCN architecture in question.

It's unfortunate that I will not see such gains, considering Maxwell does not support this feature as well as GCN cards do.

It is unknown right now whether Maxwell handles the feature worse than GCN does. We don't have enough data to draw such a conclusion yet.

It's also debatable what is really unfortunate here - that you won't get some free performance out of async compute on Maxwell in D3D12/Vulkan, or that GCN owners will never get this additional performance anywhere but in D3D12/Vulkan.

It's not a magic button but AMD invested in it for a good reason. If it didn't matter we would not be talking about async compute.

There is no need to downplay this feature. I would have preferred Nvidia to offer something nearly identical; alas, that will probably have to wait until Pascal. It seems to be a major D3D12 feature, so I'm surprised Nvidia is behind the curve.

Nobody is downplaying anything. The only thing I'm trying to accomplish is to kill some of the PR/marketing FUD around the issue, as things in GPUs are hardly ever as simple as the interested parties present them.

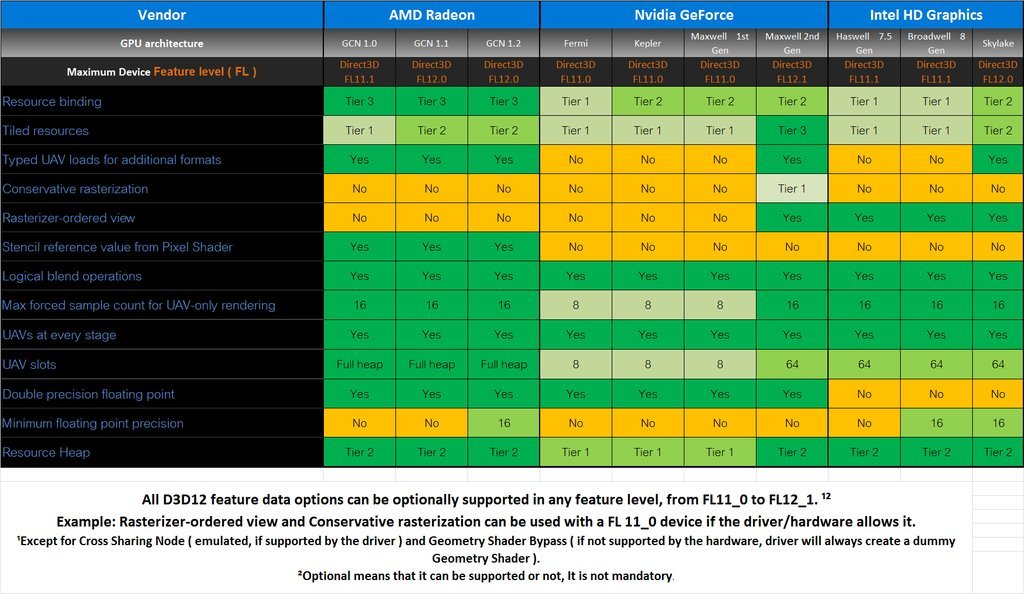

As for the major D3D12 feature and NV being behind the curve - NV supports several major D3D12 features which are completely unsupported by GCN. What's even more important, async compute as a feature does not have to be implemented in any specific way. The only requirement is to support it in the drivers - the hardware can run it serially or asynchronously, with varying results depending on the architecture.

As I've already said, from a pure architectural excellence point of view, a GPU which runs well in all APIs, with one workload as well as with several, is a better designed GPU than one which reaches its peak only in D3D12/Vulkan and only with several workloads in parallel. So instead of being disappointed you should be glad that your GPU doesn't have many units sitting idle right now, without any need for new APIs and games.
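To put the idle-units argument in numbers, here is a toy model in Python. It is only a sketch under made-up assumptions, not a description of how any real GPU schedules work: the function names, the `idle_fraction` parameter and the millisecond figures are all hypothetical, and it assumes compute work can perfectly fill whatever capacity the graphics workload leaves idle.

```python
def frame_time_serial(graphics_ms, compute_ms):
    """Frame time when compute runs strictly after graphics."""
    return graphics_ms + compute_ms


def frame_time_async(graphics_ms, compute_ms, idle_fraction):
    """Frame time when compute may fill idle capacity during graphics.

    idle_fraction is the (hypothetical) share of the GPU left unused
    while the graphics workload runs, between 0.0 and 1.0.
    """
    # Compute work that fits into the idle capacity costs no extra time.
    absorbed = min(compute_ms, graphics_ms * idle_fraction)
    # Whatever does not fit still has to run after the graphics work.
    return graphics_ms + (compute_ms - absorbed)


def async_speedup(graphics_ms, compute_ms, idle_fraction):
    """Ratio of serial frame time to async frame time."""
    serial = frame_time_serial(graphics_ms, compute_ms)
    overlapped = frame_time_async(graphics_ms, compute_ms, idle_fraction)
    return serial / overlapped


if __name__ == "__main__":
    # Illustrative numbers only: 10 ms of graphics, 3 ms of compute.
    for idle in (0.0, 0.05, 0.3):
        print(f"idle={idle:.2f} speedup={async_speedup(10.0, 3.0, idle):.2f}x")
```

With these invented numbers, a GPU that leaves 30% of itself idle during graphics gains 1.3x from overlapping, while one that leaves only 5% idle gains about 1.04x. In this model a smaller gain from async compute just means there was less idle hardware to fill in the first place.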

I don't think Kepler or Maxwell cards will do poorly in DX12 games, just not as well as equivalently priced GCN cards.

I'm curious to see what gains we will see in DX12 games using this feature; to my knowledge there are none at the moment.

Kepler will do badly in DX12 games, as it is a purely DX11 architecture targeted at the lighter workloads of three years ago. GCN 1.0 won't do much better than Kepler though.

And Maxwell 2 will do DX12 just fine, as it is a DX12 architecture. It's a safe bet that Pascal and even Volta will be better DX12 architectures - but that's how things always are.

"You can stop looking: all Maxwell 2 GPUs are fully compatible with the highest DX12 feature level."

You can argue semantics if you want, but people are going to interpret that as you saying Maxwell 2 has full DX12 support.

I'm sorry but I'm not responsible for people's reading skills. Maxwell 2 supports the highest feature level of DX12 while GCN 1.2 doesn't - this is what I've said and this is completely true.